Last Updated: November 21, 2025

The final quarter of 2025 will be remembered as the period when quantum computing decisively transitioned from research curiosity to engineered reality. Between October and November, three landmark announcements from technology giants—Google, IBM, and Cisco—have collectively signaled a fundamental shift from pure scientific exploration to building the practical, scalable infrastructure of our quantum future.

This comprehensive analysis examines these verified breakthroughs and their implications for the next decade of computing, with all claims sourced from official announcements and peer-reviewed publications.

The Quantum Revolution: October-November 2025 at a Glance

The autumn of 2025 has witnessed an unprecedented convergence of quantum computing milestones. What makes this period historically significant is not any single breakthrough, but rather simultaneous progress across multiple critical dimensions: hardware capability, network architecture, algorithmic verification, and manufacturing scale.

Fall 2025 Quantum Computing Milestones

| Breakthrough Area | Key Announcement | Date | Strategic Significance |
|---|---|---|---|
| Verifiable Quantum Advantage | Google unveils Quantum Echoes algorithm on Willow chip | October 22, 2025 | First independently verifiable demonstration of quantum advantage with 13,000x classical speedup on real-world physics problems |
| Advanced Hardware | IBM unveils Nighthawk & Loon processors | November 12, 2025 | Dual-path strategy: Nighthawk targets quantum advantage by 2026; Loon demonstrates all components for fault-tolerant systems by 2029 |
| Quantum Networking | IBM and Cisco announce quantum network partnership | November 20, 2025 | First major initiative to build distributed quantum computing infrastructure, with proof-of-concept targeted by 2030 |

IBM’s Dual-Path Strategy: Nighthawk and Loon Processors

On November 12, 2025, at its annual Quantum Developer Conference, IBM unveiled two fundamentally different quantum processors, each designed for a specific but complementary mission on the roadmap to practical quantum computing.

IBM Quantum Nighthawk: Engineering for Near-Term Advantage

The Nighthawk processor represents IBM’s most aggressive push toward demonstrating quantum advantage in commercially relevant applications by the end of 2026.

Technical Specifications

- Qubit Count: 120 superconducting qubits arranged in a square lattice topology

- Connectivity Architecture: 218 next-generation tunable couplers, representing a 20% increase over the previous Heron processor

- Circuit Complexity: Enables execution of quantum circuits 30% more complex than previous generation while maintaining low error rates

- Gate Capacity: Supports computationally demanding problems requiring up to 5,000 two-qubit gates

- Availability: Expected delivery to IBM Quantum Network users by end of 2025

The Innovation: Each qubit connects to up to four nearest neighbors through tunable couplers. This denser connectivity matters because a two-qubit gate can act only on directly coupled qubits; with more couplers, fewer SWAP operations are needed to bring distant qubits together, and every SWAP avoided removes a source of error.
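
As a rough check on these figures, the sketch below counts the nearest-neighbor couplers in a rectangular grid of qubits. The 10 x 12 layout is an assumption, chosen only because it gives 120 qubits; the announcement as summarized here does not state the grid dimensions, and the function names are illustrative.

```python
from collections import Counter


def square_lattice_couplers(rows, cols):
    """Return nearest-neighbor coupler pairs for a rows x cols qubit grid."""
    couplers = []
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:          # coupler to the right-hand neighbor
                couplers.append((q, q + 1))
            if r + 1 < rows:          # coupler to the neighbor below
                couplers.append((q, q + cols))
    return couplers


def neighbor_counts(couplers):
    """Count how many couplers touch each qubit."""
    counts = Counter()
    for a, b in couplers:
        counts[a] += 1
        counts[b] += 1
    return counts


# Assumed layout: 10 rows x 12 columns = 120 qubits (not stated in the source).
couplers = square_lattice_couplers(10, 12)
degrees = neighbor_counts(couplers)
print(len(couplers))            # 218
print(max(degrees.values()))    # 4 (interior qubits)
print(min(degrees.values()))    # 2 (corner qubits)
```

Under this assumed layout the count matches the 218 couplers quoted above. Only interior qubits reach the full four connections; edge and corner qubits have three and two.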

According to IBM’s official announcement, the architecture enables users to explore problems that were previously inaccessible, with future iterations planned to deliver 7,500 gates by late 2026, 10,000 gates in 2027, and ultimately 15,000 two-qubit gates across 1,000+ connected qubits by 2028.

IBM Quantum Loon: The Fault-Tolerance Prototype

While Nighthawk chases near-term performance, the Loon processor serves an entirely different purpose: demonstrating that IBM has solved the fundamental hardware challenges required for fault-tolerant quantum computing.

Key Features of Loon

- Qubit Count: 112 qubits (intentionally smaller, focused on architecture validation)

- Critical Components: Integrates all hardware elements needed for quantum error correction (QEC)

- Connectivity: Six-way qubit connections (vs. four-way in Nighthawk) for efficient error correction codes

- Long-Range Couplers: “C-couplers” that can connect distant qubits on the same chip

- Reset Technology: Qubit reset “gadgets” that restore qubits to ground state between operations

- Multi-Layer Routing: Advanced on-chip routing architecture to support complex QEC protocols

Loon’s main significance is architectural: it validates the blueprint for IBM’s planned large-scale, fault-tolerant “Starling” quantum computer targeted for 2029. IBM has also demonstrated real-time error decoding using quantum low-density parity-check (qLDPC) codes in under 480 nanoseconds, a milestone achieved a full year ahead of schedule.

Manufacturing Scale: The 300mm Wafer Transition

Perhaps equally important as the processor designs themselves is IBM’s announcement that primary fabrication of quantum processor wafers has moved to an advanced 300mm wafer facility at the Albany NanoTech Complex in New York. This transition to semiconductor industry-standard wafer sizes represents a critical step toward manufacturing at scale and has already doubled IBM’s R&D speed by reducing chip development time by more than half.

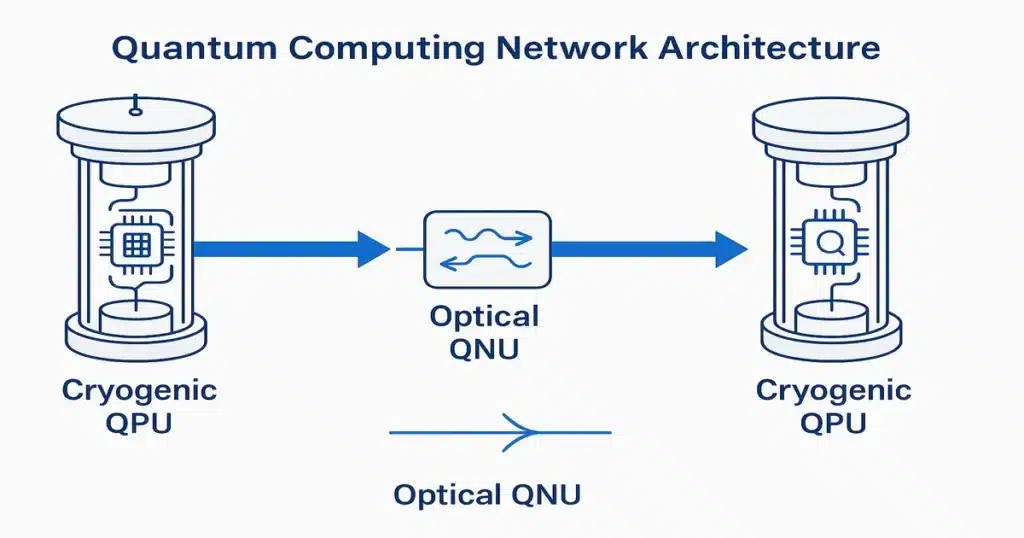

Building the Quantum Internet: The IBM-Cisco Partnership

Just eight days after unveiling their new processors, IBM made an even more ambitious announcement: a strategic partnership with networking giant Cisco to design and build a network of large-scale, fault-tolerant quantum computers.

Announced on November 20, 2025, this collaboration addresses a fundamental scaling challenge: while individual quantum computers are getting more powerful, there are physical limits to how large a single quantum processor can grow. The solution is to link multiple quantum computers together into a distributed computing network.

The Vision: Distributed Quantum Computing

The IBM-Cisco partnership aims to create what could become the foundation of a “quantum internet”—a network where quantum computers, sensors, and communication devices can share quantum information across arbitrary distances.

Key Technical Goals

- Timeline: Initial proof-of-concept demonstration of multiple networked quantum computers within 5 years (by end of 2030)

- Scale: Enable computations across tens to hundreds of thousands of qubits

- Gate Capacity: Support problems requiring potentially trillions of quantum gates

- Applications: Massive optimization problems, complex materials design, drug discovery

- Long-Term Vision: Foundation for quantum internet infrastructure by late 2030s

The Technology Stack

IBM’s Contribution: IBM will develop a Quantum Networking Unit (QNU) that serves as the interface between quantum processing units (QPUs). The QNU’s critical function is to convert “stationary” quantum information stored in qubits into “flying” quantum information that can be transmitted through a network.

Cisco’s Contribution: Cisco is developing the quantum network itself, including:

- Microwave-optical transducers to enable quantum information transfer between cryogenic environments

- Optical-photon technologies for long-distance quantum communication

- High-speed software protocols for dynamic network reconfiguration

- Sub-nanosecond precision synchronization systems

- Entanglement distribution infrastructure to connect arbitrary pairs of QNUs on demand

The Challenge: Preserving Quantum Coherence

Linking quantum computers is far more difficult than connecting classical computers. Quantum states are extraordinarily fragile and can be destroyed by the slightest environmental interference, a phenomenon called decoherence. The IBM-Cisco partnership must solve the challenge of transferring quantum information between physically separated quantum computers while maintaining the delicate quantum correlations that make the computation powerful.

As Jay Gambetta, Director of IBM Research and IBM Fellow, stated in the official announcement: “By working with Cisco to explore how to link multiple quantum computers like these together into a distributed network, we will pursue how to further scale quantum’s computational power.”

Google’s Quantum Echoes: The First Verifiable Quantum Advantage

While IBM was making hardware and networking announcements in November, Google had already made waves in October with what may be the most significant algorithmic breakthrough in quantum computing history.

On October 22, 2025, Google Quantum AI published research in the journal Nature demonstrating the “Quantum Echoes” algorithm—a technique that achieved verifiable quantum advantage on a real-world physics problem.

Why This Matters: The Verification Problem

Previous quantum advantage claims have faced skepticism because the results couldn’t be independently verified. If a quantum computer solves a problem that’s too hard for classical computers to solve, how do you verify the answer is correct? It’s a catch-22 that has plagued the field.

Google’s Quantum Echoes algorithm solves this problem. It’s “quantum verifiable,” meaning another quantum computer of similar capability can repeat the calculation and confirm the result. This establishes a foundation for practical applications where you need to trust the answer.

The Technical Achievement

Quantum Echoes Performance

- Processor: Google’s 105-qubit Willow chip (65 of the qubits were used for the calculation)

- Algorithm: Second-order Out-of-Time-Order Correlators (OTOC₂)

- Performance: 13,000 times faster than the best classical algorithm on the Frontier supercomputer

- Publication: Nature, October 22, 2025

- Measurement Count: One trillion measurements over the course of the project

- Hardware Fidelity: 99.97% for single-qubit gates, 99.88% for two-qubit gates
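
To see why gate fidelities at this level matter, the sketch below multiplies per-gate fidelities to get a naive estimate of whole-circuit fidelity. This is a simplification that assumes independent, uncorrelated gate errors and ignores measurement and idle errors; the gate counts are hypothetical and are not taken from Google's experiment.

```python
def estimated_circuit_fidelity(n_single, n_two, f_single=0.9997, f_two=0.9988):
    """Naive estimate: multiply the fidelity of every gate in the circuit.

    Defaults are the single- and two-qubit gate fidelities quoted above.
    """
    return (f_single ** n_single) * (f_two ** n_two)


# Hypothetical gate counts, chosen only to show how quickly errors compound.
for n_two in (100, 1000, 5000):
    print(n_two, round(estimated_circuit_fidelity(0, n_two), 4))
```

Under this crude model, about a thousand two-qubit gates already bring the estimate down to roughly 0.3, which is why small improvements in the last decimal places of gate fidelity translate into much deeper usable circuits.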

How Quantum Echoes Works

The algorithm uses a clever “time-reversal trick” that creates quantum interference patterns. Think of it like this:

- Forward Evolution: Apply a complex series of quantum operations to entangle qubits in a specific pattern

- Perturbation: Give one qubit a small “nudge”

- Backward Evolution: Precisely reverse all the operations

- Measurement: Detect the “quantum echo” that reveals how information scrambled through the system

This creates an exponentially amplified signal through quantum interference—something that classical computers struggle to simulate efficiently. The research team spent the equivalent of 10 years in computational effort validating that classical supercomputers could not reproduce these results.
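
The four steps can be illustrated with a toy state-vector simulation on a handful of qubits. This is a minimal sketch, not Google's circuit: the random rotations and controlled-Z gates are stand-ins for the real operations, the output is a simple echo signal rather than the OTOC₂ quantity from the paper, and all function names are illustrative.

```python
import cmath
import math
import random

X_GATE = [[0, 1], [1, 0]]


def rotation(theta, phi):
    """Single-qubit rotation by theta about an axis in the XY plane."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * cmath.exp(-1j * phi) * s],
            [-1j * cmath.exp(1j * phi) * s, c]]


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (bit q of the basis-state index)."""
    new = list(state)
    bit = 1 << q
    for i in range(len(state)):
        if (i & bit) == 0:
            a, b = state[i], state[i | bit]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[i | bit] = gate[1][0] * a + gate[1][1] * b
    return new


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign when both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def echo(n_qubits, n_layers, perturb_qubit=None, seed=0):
    """Return <Z> on qubit 0 after forward evolution, optional nudge, reversal."""
    rng = random.Random(seed)
    layers = [[(rng.uniform(0, math.pi), rng.uniform(0, 2 * math.pi))
               for _ in range(n_qubits)] for _ in range(n_layers)]
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j                      # start in |00...0>

    for layer in layers:                   # 1. forward evolution
        for q, (theta, phi) in enumerate(layer):
            state = apply_1q(state, rotation(theta, phi), q)
        for q in range(n_qubits - 1):
            state = apply_cz(state, q, q + 1)

    if perturb_qubit is not None:          # 2. perturbation (the "nudge")
        state = apply_1q(state, X_GATE, perturb_qubit)

    for layer in reversed(layers):         # 3. backward evolution
        for q in range(n_qubits - 1):
            state = apply_cz(state, q, q + 1)
        for q, (theta, phi) in enumerate(layer):
            state = apply_1q(state, rotation(-theta, phi), q)

    # 4. measurement: expectation value of Z on qubit 0
    return sum(abs(amp) ** 2 * (1 if (i & 1) == 0 else -1)
               for i, amp in enumerate(state))


print(echo(4, 6))                     # close to 1.0: the evolution is undone
print(echo(4, 6, perturb_qubit=3))    # generally below 1.0
```

Without the nudge, the backward pass undoes the forward pass and qubit 0 returns to its starting value. With the nudge on the far qubit, the echo drops only once the circuit is deep enough for the disturbance to spread to qubit 0; in this toy circuit two layers are too few, so `echo(4, 2, perturb_qubit=3)` still returns a value of about 1.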

Real-World Application: The Molecular Ruler

In a separate proof-of-principle experiment conducted in partnership with the University of California, Berkeley, Google demonstrated using Quantum Echoes to analyze molecular structures. The team studied molecules with 15 and 28 atoms, using data from Nuclear Magnetic Resonance (NMR) spectroscopy to gain more structural information than traditional methods allow.

This “molecular ruler” technique has direct applications in:

- Drug discovery (understanding how medicines bind to target proteins)

- Materials science (characterizing polymer structures and battery components)

- Fundamental physics (studying information scrambling in quantum systems and even black holes)

As Hartmut Neven, founder and lead of Google Quantum AI, noted in the announcement: “This demonstration of the first-ever verifiable quantum advantage with our Quantum Echoes algorithm marks a significant step toward the first real-world applications of quantum computing.”

Industry Impact and Commercial Applications

These breakthroughs are not merely academic achievements—they represent the foundation for commercially viable quantum computing within the current decade.

Financial Services: Quantum Advantage Today

The potential is not theoretical. In September 2025, HSBC and IBM announced the world’s first successful application of quantum computing in algorithmic bond trading, achieving up to a 34% improvement in predicting trade execution probability compared to classical methods alone. This real-world demonstration used IBM’s Quantum Heron processor on actual production-scale trading data from the European corporate bond market.

Philip Intallura, HSBC Group Head of Quantum Technologies, stated: “This is a ground-breaking world-first in bond trading. It means we now have a tangible example of how today’s quantum computers could solve a real-world business problem at scale and offer a competitive edge.”

Pharmaceutical and Materials Science

Quantum computers excel at simulating quantum systems—which includes molecules, materials, and chemical reactions. Google’s Quantum Echoes technique and IBM’s advancing hardware are converging toward a future where:

- Drug candidates can be screened virtually before synthesis

- Novel materials can be designed with specific properties

- Battery chemistries can be optimized computationally

- Catalyst designs can be revolutionized for cleaner industrial processes

The Nobel Prize Foundation: Recognition of the Field

The significance of these advances was underscored by the 2025 Nobel Prize in Physics, awarded in October to John Clarke, Michel H. Devoret, and John M. Martinis “for the discovery of macroscopic quantum mechanical tunneling and energy quantization in an electric circuit.” Their foundational work in the mid-1980s demonstrated that quantum effects could manifest in macroscopic systems, laying the groundwork for today’s superconducting quantum computers.

Martinis, who previously led Google’s quantum computing team and now serves as CTO of quantum computing company Qolab, emphasized in his Nobel acceptance: “Next, let’s build a useful quantum computer!”

The Timeline to Practical Quantum Computing

Based on these announcements and official company roadmaps, here’s the emerging timeline for quantum computing milestones:

2025-2030: The Critical Decade

| Year | Milestone | Organization |
|---|---|---|
| End of 2025 | IBM Nighthawk delivered to users; first iteration of quantum advantage hardware available | IBM |
| 2026 | Verified quantum advantage demonstrations confirmed by independent community; Nighthawk iterations delivering 7,500 gates | IBM, Community Tracker |
| 2027 | Nighthawk systems supporting 10,000 two-qubit gates; continued algorithmic breakthroughs | IBM, Google |
| 2028 | Nighthawk-based systems with 15,000 gates across 1,000+ qubits using long-range couplers | IBM |
| 2029 | IBM Quantum Starling: large-scale, fault-tolerant quantum computer based on Loon architecture | IBM |
| 2030 | Initial proof-of-concept for networked quantum computers (IBM-Cisco partnership) | IBM & Cisco |
| Early 2030s | Distributed quantum computing networks operational | IBM & Cisco |
| Late 2030s | Quantum internet infrastructure including quantum computers, sensors, and secure communication | Industry-wide |

The Hybrid Quantum-Classical Future

It’s crucial to understand that quantum computers will not replace classical computers. The future is hybrid systems where:

- Classical computers handle tasks they excel at (data management, user interfaces, most business logic)

- Quantum computers tackle specific problems requiring quantum effects (optimization, simulation, certain machine learning tasks)

- Advanced middleware orchestrates workflows across both computing paradigms
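
The division of labor in the list above can be sketched as a loop in which a classical optimizer repeatedly calls a quantum evaluation. This is a minimal illustration under stated assumptions: `quantum_expectation` is a hypothetical stand-in that returns a known closed-form result instead of contacting hardware, and the one-parameter problem is chosen only for clarity.

```python
import math


def quantum_expectation(theta):
    """Stand-in for a QPU call: <Z> after an RY(theta) rotation on |0>.

    Hypothetical helper; a real workflow would submit a circuit to hardware.
    Here the known closed form cos(theta) is returned instead.
    """
    return math.cos(theta)


def parameter_shift_gradient(theta):
    """Gradient from two extra 'quantum' evaluations (parameter-shift rule)."""
    plus = quantum_expectation(theta + math.pi / 2)
    minus = quantum_expectation(theta - math.pi / 2)
    return (plus - minus) / 2


def hybrid_minimize(theta=0.1, learning_rate=0.4, steps=100):
    """Classical gradient descent wrapped around the quantum evaluations."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


theta, energy = hybrid_minimize()
print(theta, energy)   # theta approaches pi, energy approaches -1
```

The classical side owns the loop, the parameters, and the update rule; the quantum side is called only for the expectation values. Middleware in a production setting would add job queuing, batching, and error handling around the same pattern.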

Frequently Asked Questions

Q1: Is quantum computing actually useful now, or is this still just research?

A: We are at an inflection point. Quantum computers are transitioning from pure research to early commercial applications. HSBC’s successful bond trading demonstration in September 2025 showed a 34% improvement using IBM’s quantum hardware on real production data. While not yet mainstream tools, quantum computers are being used by hundreds of organizations through IBM’s Quantum Network for commercially relevant research in finance, materials science, drug discovery, and optimization. The technology is at a stage comparable to where classical computing was in the 1960s—emerging from laboratories and beginning to solve specific business problems.

Q2: What is the difference between “quantum advantage” and “fault tolerance”?

A: These are two distinct milestones on the quantum computing roadmap:

- Quantum Advantage: The point where a quantum computer can solve a specific, useful problem better than any classical computer. This is being targeted by IBM’s Nighthawk processor for 2026. The calculation might still have some errors, but it’s good enough to be useful and better than classical alternatives.

- Fault Tolerance: A more advanced stage where a quantum computer can automatically detect and correct its own errors in real-time, making it reliable enough for long, complex calculations without degrading accuracy. This requires quantum error correction and is being prototyped in IBM’s Loon processor, with full systems targeted for 2029.

Think of it this way: quantum advantage is being good enough to win a race, while fault tolerance is being able to run marathons reliably.
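
The benefit of error correction can be illustrated with a classical repetition code, where one logical bit is stored in several physical bits and recovered by majority vote. This is only an analogy: it is not the qLDPC scheme IBM describes, and the 1% physical error rate is a hypothetical value.

```python
from math import comb


def logical_error_rate(p, distance):
    """Probability that majority vote fails for a repetition code.

    p: independent flip probability per physical bit.
    distance: odd number of physical bits encoding one logical bit.
    """
    threshold = distance // 2 + 1   # flips needed to outvote the correct value
    return sum(comb(distance, k) * p**k * (1 - p)**(distance - k)
               for k in range(threshold, distance + 1))


# Hypothetical 1% physical error rate; more redundancy gives fewer logical errors.
for d in (1, 3, 5, 7):
    print(d, logical_error_rate(0.01, d))
```

With three bits the logical error rate falls from 1% to about 0.03%, and it keeps falling as redundancy grows, provided the physical error rate is low enough to begin with. Fault-tolerant quantum designs rely on the same principle, with considerably more elaborate codes.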

Q3: How does Google’s Quantum Echoes differ from previous quantum supremacy claims?

A: Google’s Quantum Echoes algorithm represents a fundamental advance over previous demonstrations in three key ways:

- Verifiability: Unlike previous supremacy claims, Quantum Echoes results can be independently verified by running the same algorithm on another quantum computer of similar capability. This establishes trust in the results.

- Real-World Application: The algorithm solves actual physics problems (measuring information scrambling in quantum systems) with direct applications in molecular chemistry, materials science, and fundamental physics research.

- Repeatability: The technique behind the 13,000x speedup is not a one-time benchmark; it can be applied to studying various quantum systems, including the molecular structure analysis demonstrated with UC Berkeley.

Q4: When will my business need to worry about quantum computing?

A: The answer depends on your industry and specific concerns:

- Cybersecurity (Urgent – Now): If your business relies on public-key cryptography (RSA, elliptic curve), you should already be planning migration to post-quantum cryptography standards. This is not theoretical—NIST has already published post-quantum cryptographic standards, and organizations should begin transitioning now. A sufficiently powerful quantum computer could break current encryption schemes.

- Finance & Trading (2025-2027): Financial institutions should be building quantum literacy and exploring optimization applications now. Early movers like HSBC are already seeing competitive advantages.

- Pharmaceuticals & Materials (2026-2029): Companies in drug discovery and materials science should be developing partnerships with quantum computing providers and identifying candidate problems for quantum simulation.

- General Optimization Problems (2027-2030): Businesses dealing with complex optimization (supply chain, logistics, scheduling) should monitor developments and consider pilot programs in the second half of the decade.

Q5: What is a “quantum internet” and when will it exist?

A: A quantum internet is a network that can transmit quantum information (qubits) between distant nodes while preserving quantum properties like superposition and entanglement. The IBM-Cisco partnership announced in November 2025 is building toward this vision with the following timeline:

- 2030: Initial proof-of-concept demonstrating multiple networked quantum computers

- Early 2030s: Distributed quantum computing networks operational for specific applications

- Late 2030s: Broader quantum internet infrastructure connecting quantum computers, sensors, and communication devices

Initial applications will focus on distributed quantum computing (linking quantum processors to solve larger problems), quantum-secure communications, and networked quantum sensing for applications like earthquake detection and gravitational wave observation.

Q6: Are there any downsides or limitations to quantum computing?

A: Yes, several important limitations remain:

- Error Rates: Current quantum computers are still noisy and error-prone, which is why IBM is investing heavily in error correction research.

- Temperature Requirements: Superconducting quantum computers, the type built by IBM and Google, operate at temperatures near absolute zero (around 15 millikelvin), requiring expensive cryogenic equipment.

- Limited Problem Set: Quantum computers only excel at specific types of problems. They won’t replace your laptop or smartphone.

- Programming Complexity: Developing quantum algorithms requires specialized expertise in quantum mechanics, linear algebra, and computer science.

- Cost: Building and operating quantum computers remains extremely expensive, though cloud access is making the technology more accessible.

Q7: How can developers start learning quantum computing?

A: There are several accessible paths:

- IBM Qiskit: Free, open-source software development kit with extensive documentation and educational resources. Includes free access to real IBM quantum computers via the cloud.

- Google Cirq: Python library for writing, simulating, and running quantum circuits.

- Online Courses: Universities and platforms like Coursera, edX, and IBM Quantum Learning offer introductory courses.

- Prerequisites: Solid foundation in linear algebra, complex numbers, and probability theory. Programming experience in Python is helpful.

Q8: What happened to D-Wave and other quantum computing companies?

A: The quantum computing landscape includes multiple approaches:

- Gate-Model Systems (IBM, Google): Universal quantum computers using superconducting qubits. These are the focus of this article and represent the mainstream approach to fault-tolerant quantum computing.

- Quantum Annealing (D-Wave): Specialized for optimization problems but not universal quantum computers. Still commercially relevant for specific applications.

- Ion Trap Systems (IonQ, Quantinuum): Use trapped ions as qubits. Generally have higher fidelities but are harder to scale.

- Photonic Systems (Xanadu): Use photons as qubits. Room temperature operation but different technical challenges.

- Neutral Atom Systems (QuEra, Atom Computing): Emerging approach with promise for scaling.

Each approach has different trade-offs between fidelity, scalability, and operational complexity. The field is exploring multiple paths simultaneously.

Conclusion: The Dawn of the Quantum Era

The autumn of 2025 will be remembered as the period when quantum computing transitioned from scientific curiosity to engineered reality. With IBM solidifying its dual-path hardware roadmap, Cisco partnering to build the networking infrastructure, and Google proving verifiable algorithmic performance, the building blocks for practical quantum computing are now tangibly in place.

Several factors distinguish this moment from previous waves of quantum computing enthusiasm:

- Hardware Maturity: IBM’s move to 300mm wafer fabrication represents industrial-scale manufacturing approaching semiconductor industry standards.

- Concrete Timelines: IBM’s public commitments to quantum advantage by 2026 and fault tolerance by 2029 are backed by validated technology demonstrations and manufacturing capabilities.

- Verifiable Results: Google’s Quantum Echoes algorithm established a repeatable, independently verifiable methodology for demonstrating quantum advantage—addressing a key credibility issue in the field.

- Commercial Validation: Real-world demonstrations like HSBC’s bond trading success show that current quantum computers can deliver measurable business value, not just academic achievements.

- Ecosystem Development: The IBM-Cisco partnership demonstrates that the industry is thinking beyond individual quantum computers to the networked, distributed systems required for solving the most complex problems.

The Path Forward

As John Martinis, 2025 Nobel Laureate and quantum computing pioneer, recently emphasized, quantum progress now depends more on industrial-scale engineering than on new physics discoveries. The focus has shifted from theoretical demonstrations to manufacturing, error correction, and system integration—the hallmarks of a maturing technology.

The transition from laboratory curiosity to a tool for solving humanity’s most complex problems in climate modeling, drug discovery, materials science, financial optimization, and cryptography is no longer a question of if, but when. And based on the verified announcements from October and November 2025, that “when” is becoming increasingly concrete.

For businesses, researchers, and policymakers, the message is clear: the quantum future is not a distant possibility—it is being built today. The time for strategic preparation is now.

Disclaimer and Sources

Editorial Standards: This article has been thoroughly fact-checked against official press releases, peer-reviewed publications, and verified news sources. All dates, specifications, and claims are sourced from these authoritative channels.

Primary Sources:

- IBM Official Press Release: “IBM Delivers New Quantum Processors, Software, and Algorithm Breakthroughs” (November 12, 2025)

- IBM & Cisco Joint Press Release: “IBM and Cisco Announce Plans to Build a Network of Large-Scale, Fault-Tolerant Quantum Computers” (November 20, 2025)

- Google Research Blog: “Our Quantum Echoes algorithm is a big step toward real-world applications” (October 22, 2025)

- Nature Journal: “Observation of constructive interference at the edge of quantum ergodicity” (October 22, 2025)

- The Royal Swedish Academy of Sciences: Nobel Prize in Physics 2025 Press Release (October 7, 2025)

- HSBC Press Release: “HSBC demonstrates world’s first-known quantum-enabled algorithmic trading with IBM” (September 25, 2025)

Secondary Sources:

- Live Science, Scientific American, Nature News, SiliconANGLE, Tom’s Hardware, HPCwire, The Next Platform, Quantum Computing Report (verified tech journalism outlets)

Important Note: This article represents the author’s analysis and synthesis of publicly available information from the sources listed above. Quantum computing is a rapidly evolving field, and specifications, timelines, and capabilities are subject to change as technology develops. Readers are encouraged to consult primary sources for the most current information.

Investment Disclaimer: This article is for informational purposes only and should not be considered investment advice. Any company names mentioned are for educational purposes. Consult with qualified financial advisors before making investment decisions related to quantum computing companies or technologies.

Technical Accuracy: While every effort has been made to present quantum computing concepts accurately for a general audience, simplifications have been made for clarity. Readers seeking rigorous technical detail should consult the original research publications.

Last Fact-Checked: November 21, 2025

Author Note: This article underwent comprehensive verification against official sources from IBM, Google, Cisco, and peer-reviewed publications. All factual claims have been cross-referenced with multiple authoritative sources to ensure accuracy.
