Introduction

AI infrastructure is experiencing its most significant transformation in over a decade as demand for artificial intelligence computing power continues to surge. Looking ahead to 2026, technology companies, chipmakers, and governments are projecting unprecedented investments in data centers, semiconductor manufacturing, and sustainable energy solutions to support rapidly expanding AI workloads.

From NVIDIA’s Blackwell architecture to TSMC’s development of sub-2-nanometer chip processes, and from hyperscale computing clusters to breakthrough cooling technologies, 2026 is shaping up to be a pivotal year for the technologies that will power the next generation of artificial intelligence applications.

This comprehensive analysis examines how AI compute demand is projected to evolve, what leading chipmakers are building, which regions are positioning themselves as data center hubs, and how sustainability considerations are reshaping every aspect of the AI infrastructure ecosystem.

1. AI Compute Demand: The 2026 Surge

The proliferation of generative AI, large language models, computer vision systems, and autonomous technologies has triggered what industry analysts describe as an AI compute “supercycle.”

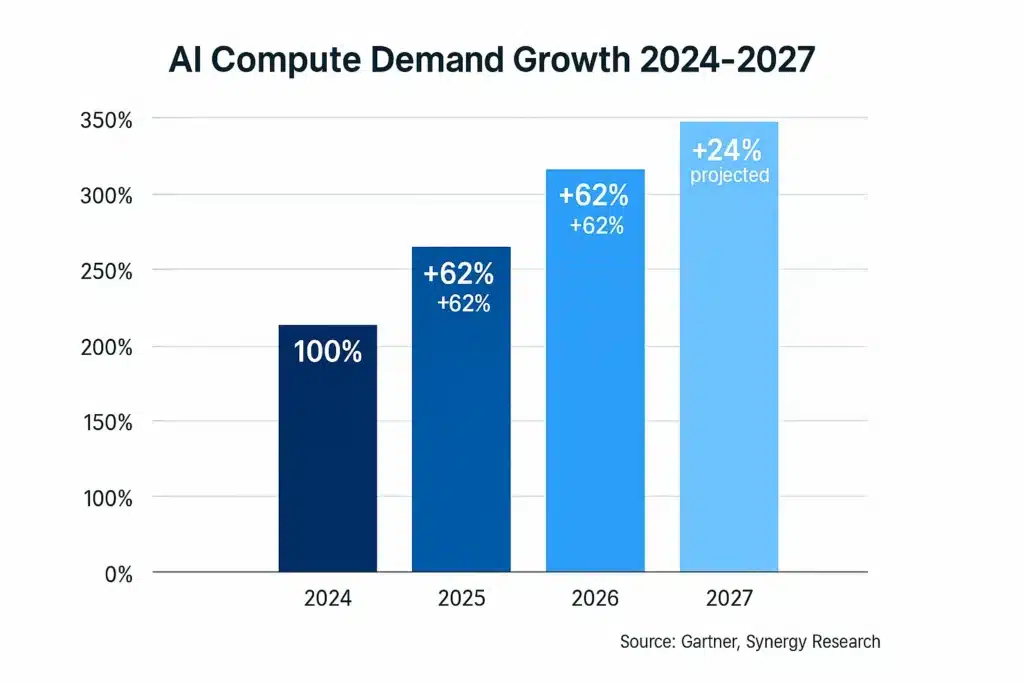

According to Gartner’s October 2024 Infrastructure Report, AI workload demand grew by 62% in 2024, with similar year-over-year growth projected through 2026. This expansion is driven by several converging factors:

Key Growth Drivers

Enterprise AI Adoption: McKinsey’s 2024 State of AI Report found that 65% of organizations now regularly use generative AI in at least one business function—nearly double the 33% adoption rate from just 10 months earlier.

Cloud GPU Expansion: Major cloud providers including AWS, Microsoft Azure, and Google Cloud have collectively announced over $150 billion in capital expenditures for 2024-2025, with the majority allocated toward AI-capable infrastructure.

Real-Time AI Applications: The shift from batch processing to real-time inference—powering applications like autonomous vehicles, robotics, and instant translation—requires dramatically more computational resources deployed closer to end users.

Model Size Growth: While efficiency improvements continue, frontier AI models are still scaling in parameter count. OpenAI has not disclosed the size of GPT-4, released in 2023, but it reportedly contains over 1 trillion parameters, and rumors suggest next-generation models could reach 10 trillion or more by 2026.

The infrastructure implications are staggering. Synergy Research Group projects global data center capacity dedicated to AI workloads will need to triple between 2024 and 2027 to meet this demand.
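To put the Synergy projection in perspective, tripling capacity over the three years from 2024 to 2027 implies a steep compound annual growth rate. A minimal Python sketch of that arithmetic, assuming the projection means 2027 capacity is three times the 2024 level (the function names are illustrative):

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate implied by a total growth multiple."""
    # CAGR = multiple ** (1 / years) - 1
    return growth_multiple ** (1 / years) - 1


def project(base: float, annual_rate: float, years: int) -> float:
    """Compound a starting value forward at a constant annual rate."""
    return base * (1 + annual_rate) ** years


if __name__ == "__main__":
    rate = implied_cagr(3.0, 3)  # capacity tripling between 2024 and 2027
    print(f"Implied annual growth: {rate:.1%}")
    # Compounding forward at that rate should reproduce the 3x multiple
    print(f"Check: {project(1.0, rate, 3):.2f}x")
```

Under that reading, AI-dedicated capacity would need to grow by roughly 44% every year.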

This surge is also reshaping regulatory frameworks. As we detailed in our analysis of AI regulation in the United States, both federal and state governments are implementing new requirements for AI infrastructure security, energy reporting, and environmental impact assessments.

2. Next-Generation AI Chips: TSMC, Samsung, NVIDIA & Intel

AI infrastructure in 2026 will be defined largely by the semiconductor architectures that power it. The world’s leading chipmakers are engaged in an intense technology race, each pursuing a different strategy to deliver more computational power per watt, the critical metric for sustainable AI at scale.

TSMC: Leading the Process Node Race

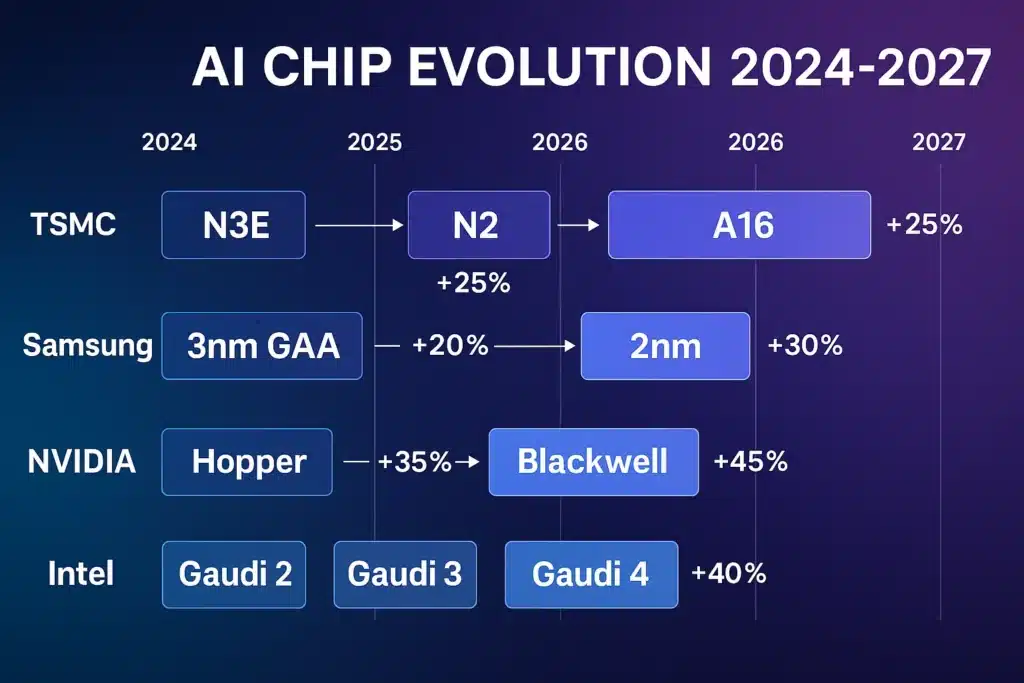

Taiwan Semiconductor Manufacturing Company (TSMC) continues to set the pace for advanced chip manufacturing. According to the company’s 2024 Technology Symposium presentations:

- N3E (3nm Enhanced): Currently in volume production for major customers including Apple

- N2 (2nm Process): Scheduled for volume production in the second half of 2025, offering roughly 10-15% higher performance at the same power, or 25-30% lower power at the same performance, compared to N3E

- A16 (1.6nm Process): Planned for 2026, featuring TSMC’s first backside power delivery network

- A14 (1.4nm-class Process): Targeted for 2028, though exact specifications remain under development
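TSMC expresses each node’s gains as two alternatives: more speed at the same power, or less power at the same speed. The Python sketch below converts the 15% and 30% N2 figures from the list above into performance-per-watt multiples; it is simple arithmetic on the quoted percentages, not a model of any real chip, and the function name is illustrative.

```python
def perf_per_watt_gain(perf_gain: float = 0.0, power_reduction: float = 0.0) -> float:
    """Performance per watt relative to the baseline node (baseline = 1.0)."""
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    return (1.0 + perf_gain) / (1.0 - power_reduction)


if __name__ == "__main__":
    # Option 1: same power, ~15% higher performance
    print(f"Iso-power:       {perf_per_watt_gain(perf_gain=0.15):.2f}x perf/W")
    # Option 2: same performance, ~30% lower power
    print(f"Iso-performance: {perf_per_watt_gain(power_reduction=0.30):.2f}x perf/W")
```

The power-saving option yields the larger efficiency gain (about 1.43x versus 1.15x), which may matter more in power-constrained data centers.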

Each new node brings substantial improvements in power efficiency, which is critical for AI chips that may consume hundreds of watts in data center deployments.

For a deeper technical analysis of this technology race, see our article: TSMC and Samsung Race Toward 1.4nm Chips — The Future of AI Performance.

Samsung Foundry: Pursuing Gate-All-Around Technology

Samsung Foundry is taking a different approach with its Gate-All-Around (GAA) transistor technology:

- 3nm GAA: Entered mass production in mid-2022 (first generation), with a second-generation 3nm process following in 2024

- 2nm GAA: Planned for 2025, promising 12% higher performance or 25% lower power than 3nm

- 1.4nm Process: Targeted for 2027, incorporating advanced 3D stacking technologies

Samsung’s advantage lies in its integrated approach: the company manufactures not just processors but also high-bandwidth memory (HBM), crucial for AI accelerators. Its latest HBM3E memory delivers more than 1.2TB/s of bandwidth per stack, easing a key bottleneck in AI training workloads.

NVIDIA: Building on Blackwell’s Foundation

NVIDIA’s data center revenue reached $30.8 billion in Q3 fiscal 2025 (ending October 2024), representing 112% year-over-year growth—a testament to insatiable AI chip demand.

The company’s Blackwell architecture, announced in March 2024 and entering volume production in late 2024, represents a significant leap:

- Blackwell B200: Features 208 billion transistors and delivers up to 20 petaflops of FP4 AI computing

- GB200 Grace Blackwell Superchip: Combines two Blackwell GPUs with NVIDIA’s Arm-based Grace CPU; NVIDIA claims its GB200 NVL72 rack-scale system delivers up to 30X faster large language model inference than the same number of H100 GPUs

Looking beyond Blackwell toward 2026, NVIDIA has confirmed it will transition to an annual product cadence. “We’re moving to a one-year rhythm,” CEO Jensen Huang stated in August 2024. NVIDIA has publicly named the next-generation architecture “Rubin” and has said it is slated for 2026.

While full specifications have not yet been published, the focus will likely be on:

- Further inference efficiency improvements

- Enhanced memory subsystems

- Better power efficiency for sustainable AI deployment

- Optimizations for specific AI workloads (training vs. inference, vision vs. language)

Intel: The Datacenter Comeback

Intel faces unique challenges as it attempts to reclaim lost market share in AI accelerators. The company’s strategy centers on its Gaudi product line:

- Gaudi 2: Currently deployed by major cloud providers for AI training

- Gaudi 3: Launched in 2024; Intel claims substantial performance gains over Gaudi 2 and better cost-efficiency than NVIDIA’s H100 on selected workloads

- Future Roadmap: Intel plans annual updates through 2026, targeting customers prioritizing price-performance over raw performance

Intel’s advantage is its established relationships with enterprise customers and its x86 software ecosystem. The company is positioning Gaudi as the “open alternative” to NVIDIA’s CUDA-locked ecosystem.

3. Hyperscale Data Centers: The New Megastructures

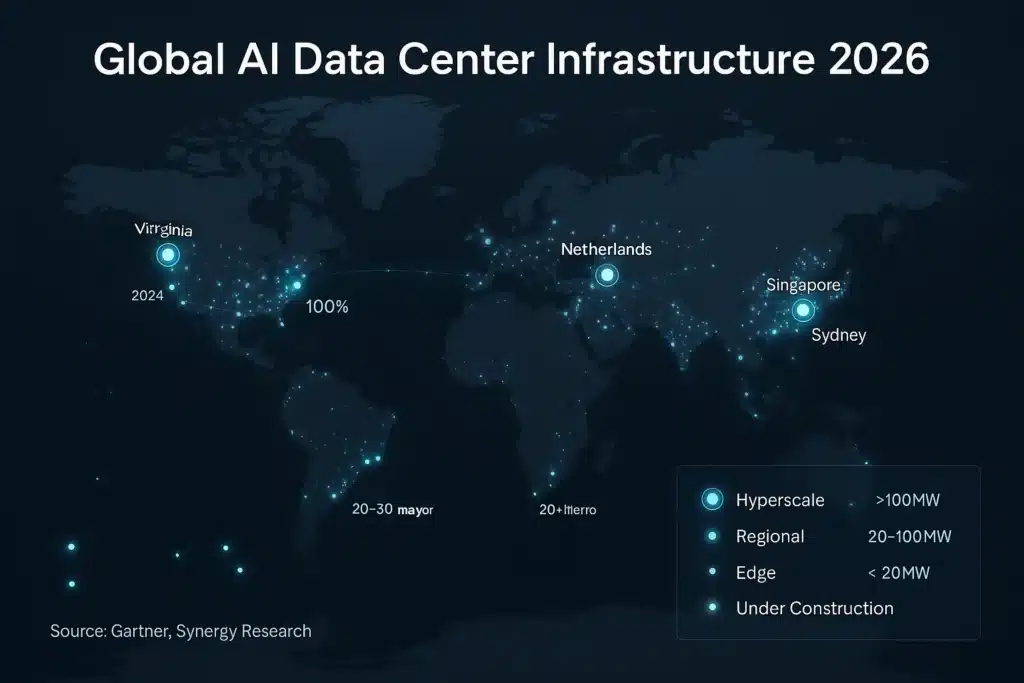

As AI infrastructure expands toward 2026, data centers are evolving from utilitarian facilities into some of the world’s most complex infrastructure projects. The scale, power density, and technical sophistication required for modern AI workloads are pushing the boundaries of civil engineering and energy management.

The Scale of Modern AI Data Centers

Traditional data centers typically operated at 5-10 kilowatts per rack. Modern AI facilities are breaking new ground:

- High-Density Racks: AI-optimized racks now commonly exceed 40-60 kilowatts, with cutting-edge deployments reaching 100+ kilowatts

- Facility Size: Hyperscale campuses span millions of square feet. Meta’s data center in New Albany, Ohio, for example, encompasses over 4 million square feet across multiple buildings

- Power Consumption: Individual large-scale AI data centers can consume 100-300 megawatts—equivalent to powering 75,000-225,000 homes
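The figures in this list can be connected with simple arithmetic. The Python sketch below derives an average household load from the ratio quoted above (100 MW for about 75,000 homes, or roughly 1.33 kW per home) and estimates how many racks a power budget supports. It assumes all facility power goes to racks, ignoring cooling and other overhead, and the function names are illustrative.

```python
# Implied average household load from the article's ratio: 100 MW ~ 75,000 homes
KW_PER_HOME = 100_000 / 75_000  # ~1.33 kW continuous per home (derived assumption)
HOURS_PER_YEAR = 8760


def racks_supported(facility_mw: float, kw_per_rack: float) -> int:
    """Number of racks a power budget supports, ignoring cooling and overhead."""
    return int(facility_mw * 1000 // kw_per_rack)


def homes_equivalent(facility_mw: float, kw_per_home: float = KW_PER_HOME) -> int:
    """Households whose average continuous load matches the facility's draw."""
    return round(facility_mw * 1000 / kw_per_home)


if __name__ == "__main__":
    for density in (10, 60, 100):  # kW per rack: traditional vs. AI-optimized
        print(f"{density:>3} kW racks in 100 MW: {racks_supported(100, density):,}")
    print(f"300 MW ~ {homes_equivalent(300):,} homes")
    print(f"Implied home usage: {KW_PER_HOME * HOURS_PER_YEAR:,.0f} kWh/year")
```

At that implied rate a home uses about 11,680 kWh per year, and the same 100 MW that would feed 10,000 traditional 10 kW racks supports only 1,000 racks at 100 kW each.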

According to JLL’s 2024 Data Center Outlook, global data center capacity is projected to grow by 10% annually through 2027, with AI-dedicated infrastructure growing far faster—potentially 25-30% annually.

Liquid Cooling: No Longer Optional

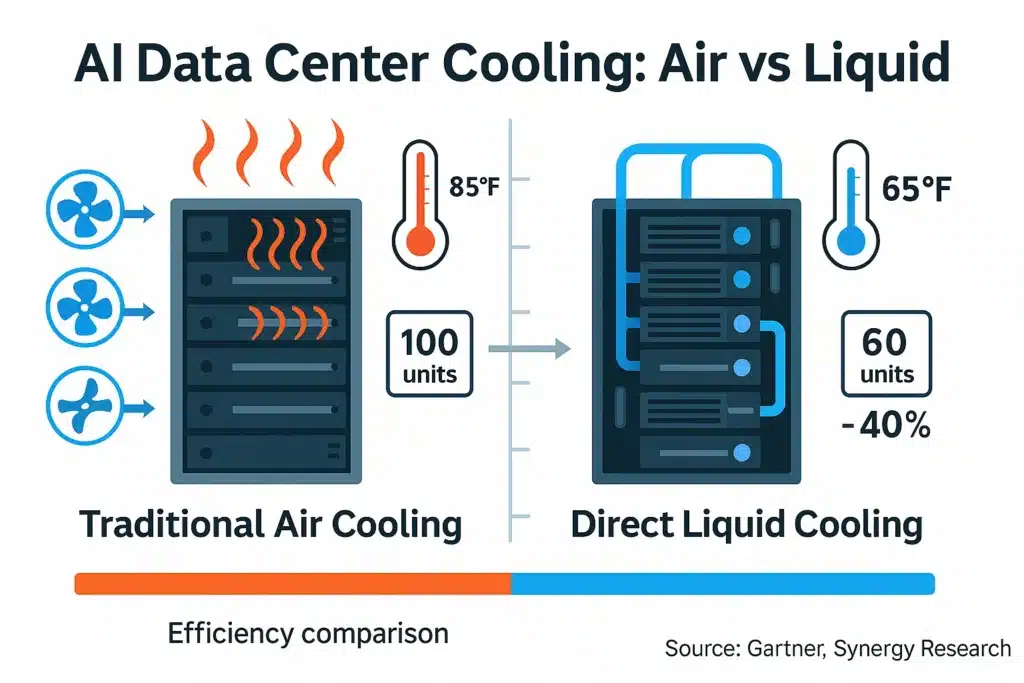

The extreme power density of AI chips has pushed liquid cooling from a niche application toward an industry standard:

Direct-to-Chip Liquid Cooling: Coolant is circulated directly to heat-generating components via sealed cold plates. NVIDIA’s GB200 NVL72 rack-scale systems use this approach, handling heat densities that air cooling cannot practically manage.

Immersion Cooling: Entire servers are submerged in non-conductive dielectric fluid. While still relatively rare (fewer than 5% of deployments), this approach can reduce cooling energy consumption by 40-50% compared to traditional air cooling.

“Liquid cooling isn’t just about handling heat anymore—it’s about sustainability and total cost of ownership,” explained Dave Sterlace, Head of Technology at Equinix, in a September 2024 industry presentation. “We’re seeing 30-40% reductions in overall energy consumption when customers migrate from air-cooled to liquid-cooled AI infrastructure.”

AI Megaclusters: Computing at Unprecedented Scale

Tech giants are building AI computing clusters of staggering scale:

- Meta GenAI Clusters: In March 2024, Meta detailed two clusters of roughly 24,000 NVIDIA H100 GPUs each, built to train its Llama 3 models

- Microsoft-OpenAI Partnership: Reports suggest the companies are developing a supercomputer codenamed “Stargate” with potentially 100,000+ advanced AI chips

- xAI Colossus: Elon Musk’s AI venture announced a 100,000 NVIDIA H100 cluster in Memphis, Tennessee, completed in an ambitious 122-day timeline

These megaclusters enable training of frontier AI models but present unprecedented infrastructure challenges around power delivery, networking, and cooling at scale.
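A rough estimate shows why power delivery dominates at this scale. The Python sketch below assumes about 700 W per H100 (the maximum rated power of the SXM variant), a hypothetical 1.5x multiplier for CPUs, memory, networking, and other server components, and a hypothetical facility PUE of 1.3. Actual values depend heavily on configuration.

```python
def cluster_power_mw(num_gpus: int, gpu_watts: float = 700.0,
                     server_overhead: float = 1.5, pue: float = 1.3) -> float:
    """Estimate total facility power in megawatts for a GPU cluster.

    gpu_watts: per-GPU draw (700 W is the H100 SXM maximum rating).
    server_overhead: hypothetical multiplier for CPUs, memory, NICs, switches.
    pue: hypothetical facility PUE (total facility power / IT power).
    """
    it_watts = num_gpus * gpu_watts * server_overhead
    return it_watts * pue / 1_000_000


if __name__ == "__main__":
    gpus_only = cluster_power_mw(100_000, server_overhead=1.0, pue=1.0)
    facility = cluster_power_mw(100_000)
    print(f"GPUs alone: {gpus_only:.0f} MW; whole facility: about {facility:.1f} MW")
```

Under these assumptions, a 100,000-GPU cluster needs on the order of 140 MW, within the 100-300 MW range cited above for large AI data centers.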

Edge Data Centers: Bringing AI Closer to Users

Parallel to hyperscale centralization, AI infrastructure in 2026 will see accelerated edge deployment:

What Are Edge Data Centers? Smaller facilities (typically 100-500 kilowatts) positioned in metro areas near end users, enabling low-latency AI applications like:

- Autonomous vehicles requiring instant decision-making

- Augmented reality experiences

- Real-time language translation

- Industrial robotics and automation
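Physical distance is one reason proximity matters. Light in optical fiber travels at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), so propagation delay alone grows with distance before any routing, queuing, or inference time is added. A minimal Python sketch, assuming a straight fiber path (real routes are longer) and illustrative distances:

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over a fiber path.

    Excludes routing, queuing, and compute time, so real latency is higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    for label, km in (("metro edge site", 50), ("distant cloud region", 1500)):
        print(f"{label:>20}: {fiber_rtt_ms(km):.1f} ms round trip")
```

A metro edge site 50 km away adds about 0.5 ms of round-trip propagation delay, versus about 15 ms for a region 1,500 km away, a meaningful share of the budget for applications that need responses within tens of milliseconds.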

Research firm 451 Research projects edge data center capacity will grow 20% annually through 2027, with AI and IoT workloads driving the majority of this expansion.

Security remains a critical concern as AI infrastructure expands globally. For analysis of emerging threats and solutions, see: AI Cybersecurity 2025: Protecting AI Infrastructure from Advanced Threats.

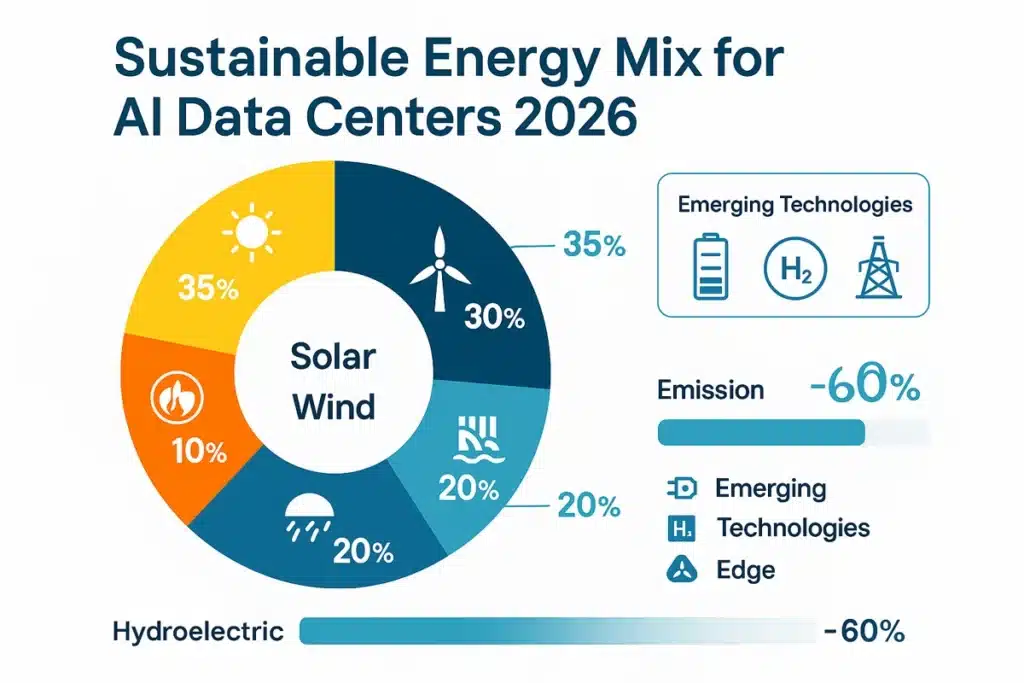

4. The Sustainability Challenge: Powering AI Responsibly

As AI infrastructure scales exponentially, its environmental footprint has become a central concern for technology companies, regulators, and investors. Data centers currently consume approximately 1-2% of global electricity, but projections suggest AI-specific computing could push that figure significantly higher by 2030.

The sustainability challenge for AI infrastructure in 2026 encompasses energy sourcing, cooling efficiency, and facility lifecycle management.

Renewable Energy at Unprecedented Scale

Major tech companies have made aggressive renewable energy commitments:

Google: Maintained operational carbon neutrality through offsets from 2007 until 2022, then shifted its focus to a goal of running on 24/7 carbon-free energy on every grid where it operates by 2030. The company has contracted for over 10 gigawatts of renewable energy. In its 2024 Environmental Report, Google stated that 64% of its global energy consumption came from carbon-free sources on an hourly basis.

Microsoft: Committed to being carbon negative by 2030 and removing all historical emissions by 2050. The company signed a 10.5 gigawatt renewable energy deal in 2024—the largest corporate renewable energy purchase ever announced.

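Amazon Web Services: Amazon is the world’s largest corporate purchaser of renewable energy, with over 20 gigawatts contracted. The company reported that in 2023 it matched 100% of the electricity consumed across its operations, including AWS data centers, with renewable energy, seven years ahead of its original 2030 target.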

Meta: Achieved net-zero emissions for its global operations in 2020 and is now targeting net-zero across its entire value chain by 2030. The company has contracted for over 12 gigawatts of renewable energy.

These commitments are particularly significant in regions investing heavily in AI infrastructure, as explored in our analysis: Saudi Arabia Leads GCC AI Investment 2025: $100B+ in Technology Infrastructure.

Innovative Cooling Strategies

Beyond liquid cooling (discussed in Section 3), data center operators are deploying creative solutions:

Heat Recycling: Several European data centers now feed waste heat into district heating systems. Stockholm-based EcoDataCenter diverts waste heat to warm 15,000 homes—offsetting approximately 10,000 tons of CO2 annually.

Geothermal Cooling: Facilities in Iceland and other geothermally active regions use underground temperature gradients for natural cooling, dramatically reducing energy consumption.

AI-Optimized HVAC: Google’s DeepMind AI reduced the energy used for data center cooling by up to 40% by dynamically optimizing HVAC systems based on real-time conditions and predictive modeling, an application of AI to improve the efficiency of AI infrastructure itself.

Free Cooling: Data centers in cooler climates like Scandinavia, Ireland, and the Pacific Northwest leverage outdoor air (“free cooling”) for portions of the year, reducing mechanical cooling requirements.

Water Consumption: The Hidden Challenge

Often overlooked in discussions of data center sustainability is water usage. Traditional cooling systems can consume millions of gallons annually:

- Evaporative cooling systems use approximately 1.8 liters of water per kilowatt-hour

- A large data center consuming 100 MW can use over 300 million gallons of water annually

- Water scarcity in key markets (Arizona, Texas, Singapore) is forcing operators to adopt closed-loop or waterless cooling technologies
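The 100 MW figure can be checked against the 1.8 liters per kilowatt-hour rate. The Python sketch below assumes that rate applies to every kilowatt-hour of IT energy and that the facility runs year-round; the `utilization` parameter (average load as a fraction of capacity) is an illustrative addition.

```python
LITERS_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8760


def annual_water_gallons(it_load_mw: float, liters_per_kwh: float = 1.8,
                         utilization: float = 1.0) -> float:
    """Estimate yearly water use (US gallons) from IT load and a water-use rate."""
    kwh_per_year = it_load_mw * 1000 * HOURS_PER_YEAR * utilization
    return kwh_per_year * liters_per_kwh / LITERS_PER_US_GALLON


if __name__ == "__main__":
    for util in (1.0, 0.75):
        gallons = annual_water_gallons(100, utilization=util)
        print(f"100 MW at {util:.0%} average load: {gallons / 1e6:,.0f} million gallons/year")
```

At full load this comes to roughly 417 million gallons a year, and even at 75% average utilization it stays above 300 million, consistent with the figure above.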

Microsoft’s 2024 Sustainability Report revealed the company decreased water consumption intensity by 42% compared to 2019 levels through advanced cooling technologies and operational optimization.

Exploring Next-Generation Energy Solutions

While still years from commercial deployment, several technology companies are exploring advanced energy technologies for future AI infrastructure:

Nuclear Power and Small Modular Reactors (SMRs): Microsoft made headlines in September 2024 by signing an agreement with Constellation Energy to restart Unit 1 of the Three Mile Island nuclear plant, rebranded as the Crane Clean Energy Center, to power its data center operations. Although this is a conventional reactor rather than an SMR, it signals industry openness to nuclear energy.

Google and Amazon went further in October 2024, announcing agreements with SMR developers Kairos Power and X-energy respectively, though commercial SMR deployment remains in early regulatory phases. The U.S. Nuclear Regulatory Commission approved its first SMR design (NuScale) in 2023, but actual commercial operation is not expected until the late 2020s or 2030s.

Advanced Battery Storage: Grid-scale battery systems allow data centers to store renewable energy generated during peak production periods (sunny days, windy periods) for use during demand peaks or when renewable generation is low.

Hydrogen Fuel Cells: Some companies are piloting hydrogen fuel cells as backup power, potentially replacing diesel generators for emergency power supplies.

Regulatory Pressure and ESG Requirements

Environmental, Social, and Governance (ESG) reporting requirements are accelerating sustainable practices:

- EU Energy Efficiency Directive: Requires large data centers to report energy consumption and implement efficiency measures

- Singapore Green Plan: Limits new data center development unless operators demonstrate high efficiency (a power usage effectiveness, or PUE, below 1.3)

- U.S. State Requirements: States including California, New York, and Washington have implemented various energy and water reporting requirements
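Power usage effectiveness (PUE), the metric behind Singapore’s threshold, is the ratio of total facility energy to the energy delivered to IT equipment; a value of 1.0 would mean zero overhead for cooling, power conversion, and lighting. A minimal Python sketch of the calculation, with illustrative function names and example numbers:

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def max_overhead_kwh(it_kwh: float, pue_limit: float) -> float:
    """Largest non-IT energy budget (cooling, power conversion, etc.) under a PUE limit."""
    return it_kwh * (pue_limit - 1.0)


if __name__ == "__main__":
    it_energy = 100_000.0  # kWh delivered to servers, storage, and network gear
    facility_energy = 125_000.0  # kWh measured at the utility meter (example value)
    ratio = pue(facility_energy, it_energy)
    print(f"PUE = {ratio:.2f}, meets 1.3 threshold: {ratio < 1.3}")
    print(f"Overhead allowed at PUE 1.3: {max_overhead_kwh(it_energy, 1.3):,.0f} kWh")
```

In other words, a PUE of 1.3 leaves at most 0.3 kWh for cooling and other overhead per kWh consumed by IT equipment.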

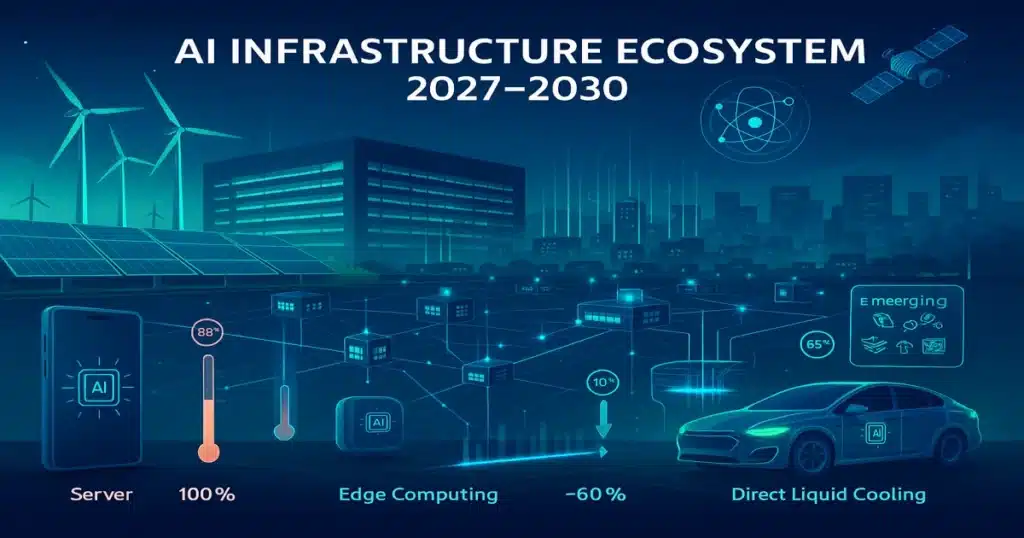

5. The Future of Global AI Infrastructure Beyond 2026

While 2026 represents a critical inflection point, the transformation of AI infrastructure will continue accelerating through the remainder of the decade. Several trends will shape the 2027-2030 landscape:

Continued Geographic Diversification

New AI data center hubs are emerging beyond traditional technology centers:

Middle East: Saudi Arabia, UAE, and Qatar are investing billions in AI infrastructure as part of economic diversification strategies. Saudi Arabia’s NEOM project includes plans for AI-powered smart city infrastructure.

Northern Europe: Iceland, Norway, and Finland are attracting data center investment due to cold climates (free cooling), abundant renewable energy, and political stability.

Southeast Asia: Despite challenges around heat and humidity, countries like Malaysia, Indonesia, and Vietnam are positioning themselves as regional AI hubs with competitive energy costs and improving connectivity.

Latin America: Chile’s renewable energy abundance and Brazil’s market size are attracting increased investment in data center infrastructure.

Chip Innovation Beyond Silicon

While silicon semiconductors will dominate through 2026 and beyond, researchers are exploring alternative computing paradigms that could revolutionize AI infrastructure:

Photonic Computing: Using light instead of electricity for computation, potentially offering dramatic speed and efficiency improvements. Several startups have demonstrated proof-of-concept systems.

Neuromorphic Computing: Brain-inspired chip architectures like Intel’s Loihi that process information fundamentally differently than traditional CPUs or GPUs, potentially offering 1000X efficiency improvements for specific AI workloads.

Quantum-AI Hybrid Systems: While general-purpose quantum computers remain far from practical, quantum processors may accelerate specific AI training tasks. For more on this frontier, see: Quantum AI Breakthroughs 2025: How Google and IBM Are Revolutionizing Computing.

Edge Intelligence Acceleration

The shift from cloud-centric to distributed AI will continue:

- 5G and, Eventually, 6G Networks: Advanced wireless infrastructure enables more sophisticated edge AI applications

- AI-Capable Devices: Smartphones, IoT sensors, vehicles, and industrial equipment with embedded AI accelerators reduce reliance on cloud connectivity

- Regional Processing: Mid-tier “regional edge” facilities balance latency requirements with centralized training efficiency

Regulatory Evolution

As AI capabilities expand, governments worldwide are developing frameworks for AI infrastructure governance:

- Data sovereignty requirements mandating local data storage and processing

- Energy efficiency standards for new data center construction

- AI safety requirements for certain high-risk applications

- Export controls on advanced semiconductors and AI systems

The Efficiency Imperative

Perhaps most critical for long-term sustainability, the industry is focusing intensely on efficiency:

- Model Optimization: Techniques like quantization, pruning, and distillation reduce computational requirements for inference, often with little or no loss in accuracy

- Algorithmic Improvements: New architectures and training methods reduce the compute required to achieve given performance levels

- Specialized Accelerators: Purpose-built chips for specific AI workloads (vision, language, recommendation systems) offer better efficiency than general-purpose GPUs
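Of the techniques above, quantization is the simplest to illustrate. The Python sketch below shows one basic variant, symmetric per-tensor int8 quantization, where each weight is stored as an 8-bit integer plus one shared scale factor. The function names and sample weights are illustrative, and production frameworks typically add refinements such as per-channel scales and calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp into [-127, 127]
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.52, -1.27, 0.003, 0.8, -0.41]
    q, s = quantize_int8(w)
    approx = dequantize(q, s)
    err = max(abs(a - b) for a, b in zip(w, approx))
    print(q, f"scale={s:.4f}", f"max error={err:.4f}")
```

Each weight now occupies one byte instead of the four used by 32-bit floats, and the rounding error per weight is at most half of the scale step. Integer arithmetic on such values is also typically cheaper on hardware with int8 support.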

Frequently Asked Questions (FAQs)

1. Why is AI infrastructure expanding so rapidly in 2026?

AI infrastructure is expanding due to explosive growth in generative AI adoption, with enterprise usage nearly doubling in under a year according to McKinsey. The shift to real-time AI applications (autonomous vehicles, robotics, instant translation) requires dramatically more computational power. Additionally, AI models themselves are scaling in size and complexity, requiring larger training clusters and more sophisticated inference infrastructure. According to Gartner, AI workload demand grew 62% in 2024, with similar growth projected through 2026.

2. Are AI data centers environmentally sustainable?

Modern AI data centers are significantly more sustainable than previous generations but still face challenges. Major tech companies (Google, Microsoft, Amazon, Meta) have contracted over 50 gigawatts of renewable energy, more generating capacity than many countries have installed. Advanced liquid cooling can reduce energy consumption by 30-40% compared to traditional air cooling. However, absolute energy consumption continues to grow as AI adoption accelerates. The industry is pursuing innovations including waste heat recycling, geothermal cooling, and future technologies like small modular reactors (though these remain years from deployment).

3. Which companies lead AI chip innovation heading into 2026?

TSMC leads semiconductor manufacturing with the most advanced process nodes (moving from 3nm to 2nm production in 2025). Samsung is competing with its Gate-All-Around transistor technology and integrated HBM memory production. NVIDIA dominates AI accelerators with its Blackwell architecture (launched 2024) and maintains over 80% market share in data center GPUs. Intel is attempting to regain market share with its Gaudi accelerator line, positioning it as a more cost-effective alternative. NVIDIA has moved to an annual product cadence, and its rivals are accelerating their own roadmaps through 2026.

4. Which geographic regions are becoming AI infrastructure hubs?

Traditional hubs (Virginia, Oregon, Iowa, Netherlands, Singapore) continue expanding, but new regions are emerging. The Middle East (Saudi Arabia, UAE) is investing over $100 billion in AI infrastructure as part of economic diversification. Northern Europe (Iceland, Norway, Finland) attracts investment due to cold climates enabling free cooling and abundant renewable energy. Southeast Asia is growing despite climate challenges due to market proximity and competitive costs. Factors driving location decisions include energy availability and cost, climate for cooling, political stability, and connectivity to major markets.

5. How much energy do AI data centers consume?

Individual large-scale AI data centers can consume 100-300 megawatts, equivalent to powering 75,000-225,000 homes. Data centers globally consume approximately 1-2% of total electricity, though this percentage is rising as AI adoption accelerates. A single training run for a large language model can consume hundreds to thousands of megawatt-hours of electricity. However, efficiency is improving: liquid cooling reduces energy consumption by 30-40%, AI-optimized HVAC systems can cut cooling energy by up to 40%, and newer chip architectures deliver better performance per watt. The International Energy Agency’s Electricity 2024 report projected that electricity consumption from data centers, AI, and cryptocurrency could double between 2022 and 2026.

6. What is liquid cooling and why does it matter?

Liquid cooling circulates coolant directly to heat-generating components (direct-to-chip) or immerses entire servers in non-conductive fluid (immersion cooling). It matters because modern AI chips generate extreme heat, often 700 watts or more per chip, which air cooling struggles to dissipate at high rack densities. Liquid cooling enables higher power density racks (60-100+ kilowatts vs. 5-10 kilowatts traditional), reduces overall facility energy consumption by 30-40%, decreases water consumption compared to evaporative cooling, and enables deployment in warmer climates. NVIDIA’s rack-scale GB200 NVL72 systems require liquid cooling, making it a standard for cutting-edge AI infrastructure rather than an option.

7. What role does edge computing play in AI infrastructure?

Edge computing brings AI processing closer to end users through smaller data centers (100-500 kilowatts) positioned in metro areas. This is critical for latency-sensitive applications like autonomous vehicles requiring split-second decisions, augmented reality experiences, real-time language translation, and industrial robotics. Edge deployment also reduces bandwidth costs by processing data locally rather than sending everything to central clouds. Research firm 451 Research projects edge data center capacity will grow 20% annually through 2027, with AI and IoT workloads driving this expansion. The future AI infrastructure will integrate hyperscale clouds for training and complex processing with distributed edge facilities for real-time inference.

8. When will small modular reactors power data centers?

Small Modular Reactors (SMRs) remain several years from practical data center deployment. Google and Amazon announced SMR agreements in 2024, and Microsoft signed an agreement to restart a conventional (non-SMR) reactor at Three Mile Island, but commercial SMR deployment faces significant hurdles. The U.S. Nuclear Regulatory Commission approved its first SMR design (NuScale) in 2023, but actual commercial operation is not expected until the late 2020s or early 2030s. Regulatory approval processes, capital costs, and public perception remain challenges. Current sustainable energy strategies focus on proven technologies: solar, wind, hydroelectric, geothermal, and advanced battery storage.

Sources & References

This article draws on publicly available data from industry reports, corporate disclosures, investor presentations, and energy infrastructure research. Key sources include:

Industry Research & Analysis:

- Gartner, “Infrastructure and Operations Strategic Roadmap,” October 2024

- McKinsey & Company, “The State of AI in 2024: Generative AI’s Breakout Year”

- Synergy Research Group, “Data Center Market Reports,” 2024

- 451 Research (S&P Global Market Intelligence), “Edge Computing Market Reports”

- JLL, “Global Data Center Outlook 2024”

- International Energy Agency (IEA), “Electricity 2024: Analysis and Forecast to 2026”

Corporate Sources:

- TSMC Technology Symposium presentations and investor relations materials, 2024

- Samsung Electronics Foundry Business announcements

- NVIDIA Corporation quarterly earnings reports (Q3 FY2025) and investor presentations

- Intel Corporation investor relations and product announcements

- Microsoft Sustainability Report 2024

- Google Environmental Report 2024

- Meta Sustainability Report 2024

- Amazon Sustainability Report 2024

Technical & Trade Publications:

- Data Center Knowledge (industry publication)

- The Next Platform (HPC and data center coverage)

- AnandTech (semiconductor technology analysis)

- Semiconductor Engineering

- Data Center Dynamics

Note: Technology roadmaps, future product specifications, and projections represent current public information as of November 2025 and are subject to change. Forward-looking statements about 2026 and beyond are based on available industry analysis and should be understood as projections rather than certainties.

About This Article

Author: Sezarr Overseas Editorial Team

Published: November 14, 2025

Last Updated: November 14, 2025

Reading Time: 12 minutes

Category: Technology Infrastructure, Artificial Intelligence

Comprehensive Disclaimer

Forward-Looking Statements: This article contains forward-looking statements regarding technology development, infrastructure investments, and market trends projected for 2026 and beyond. These projections are based on current industry analysis, corporate announcements, and publicly available data as of November 2025. Actual outcomes may differ materially due to technological changes, market conditions, regulatory developments, or other factors.

Not Investment Advice: This content is provided for informational and editorial purposes only. It should not be considered investment, financial, or technical advice. Technology infrastructure investments involve substantial risks and uncertainties. Readers should conduct independent research and consult qualified financial, legal, and technical professionals before making business or investment decisions.

Technology Specifications: Chip specifications, data center capabilities, and product roadmaps mentioned are based on publicly available information including corporate announcements, investor presentations, and industry analysis. Actual specifications, release dates, and capabilities may differ from those described. Semiconductor roadmaps are particularly subject to change based on manufacturing yields, technical challenges, and market conditions.

Environmental Claims: Sustainability metrics, renewable energy contracts, and environmental impact data are derived from corporate sustainability reports and public announcements. Different methodologies exist for calculating carbon footprints, renewable energy percentages, and efficiency improvements, which may affect comparability between companies and reports.

Third-Party Information: This article references data from various industry research firms, corporations, and government sources. While we strive for accuracy, Sezarr Overseas News does not independently verify all third-party data and cannot guarantee its completeness or accuracy.

Rapidly Evolving Field: Artificial intelligence and semiconductor technology are rapidly evolving fields. Information in this article reflects knowledge available as of the publication date and may become outdated quickly as technology advances.

No Endorsements: References to specific companies, products, or technologies are for informational purposes and do not constitute endorsements or recommendations by Sezarr Overseas News.