Last Updated: November 21, 2025

In 2025, the line between real and fabricated has become alarmingly thin. AI-generated deepfakes—synthetic videos, images, and audio that convincingly impersonate real people—have evolved from a technological curiosity into a $40 billion fraud threat. With just three seconds of audio, criminals can clone anyone’s voice. With publicly available photos, they can create video calls that deceive even cybersecurity professionals.

This comprehensive guide arms you with the knowledge and tools to protect yourself, your family, and your business from this rapidly evolving threat.

The Deepfake Epidemic: Understanding the Magnitude of the Threat

The numbers tell a stark story of technological advancement weaponized for fraud:

Verified 2025 Deepfake Fraud Statistics:

- 2,137% increase in deepfake fraud attempts over the past three years (Signicat, 2025)

- $200+ million in confirmed losses during Q1 2025 in North America alone (Resemble AI)

- 6.5% of all fraud attacks now involve deepfakes, up from 0.1% three years ago

- $500,000 average loss per deepfake-related business fraud incident

- Up to $680,000 in losses per incident reported by large enterprises (individual cases, such as the $25 million Hong Kong fraud described below, have gone far higher)

- 88% of deepfake fraud cases target the cryptocurrency sector

- 24.5% accuracy rate for humans detecting high-quality deepfake videos

- 3-5 seconds of audio is all criminals need to clone a voice convincingly

According to the Deloitte Center for Financial Services, generative AI-facilitated fraud losses in the United States are projected to escalate from $12.3 billion in 2023 to $40 billion by 2027, representing a compound annual growth rate of 32%.
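The growth rate implied by a projection like this can be sanity-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name `cagr` is an assumption made here, and the result depends heavily on how many years separate the two endpoints.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded endpoints from the projection, in billions of US dollars.
over_four_years = cagr(12.3, 40.0, 4)   # roughly 0.34
over_three_years = cagr(12.3, 40.0, 3)  # roughly 0.48

print(f"4-year span: {over_four_years:.1%}")
print(f"3-year span: {over_three_years:.1%}")
```

Only a four-year span produces a rate in the low-to-mid 30s percent, close to the published 32% figure. The small remaining gap is expected when working from rounded endpoints.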

“Three years ago, deepfake attacks were only 0.1% of all fraud attempts we detected, but today, they represent around 6.5%, or 1 in 15 cases. The steep rise in deepfake fraud is part of a broader trend of AI-driven identity fraud.” (Signicat, “The Battle Against AI-Driven Identity Fraud” report, March 2025)

The FBI’s Internet Crime Complaint Center (IC3) reports that synthetic media attacks have evolved from celebrity impersonations to sophisticated financial fraud, with criminals using real-time deepfake technology to bypass biometric verification systems at banks and financial institutions.

Related Reading: AI Cybersecurity 2025: Protecting Against Next-Gen Threats

7 Most Common Deepfake Scams in 2025

Understanding how these scams operate is your first line of defense. Here are the seven most prevalent deepfake fraud schemes currently targeting individuals and businesses:

1. CEO Fraud & Business Email Compromise (BEC)

How It Works: Scammers use deepfake video calls to impersonate company executives, authorizing urgent wire transfers or requesting sensitive information. In a landmark 2024 case, a finance worker in Hong Kong transferred $25 million after a video conference call with what appeared to be the company’s CFO and several colleagues—all were deepfakes.

Average Loss: $150,000 per incident

Warning Signs: Urgent requests for immediate action, unusual payment destinations, requests to bypass normal approval procedures

2. Virtual Kidnapping & Family Emergency Scams

How It Works: AI-generated voices of “kidnapped” family members demand immediate ransom payments. With just a few seconds of audio from social media posts, criminals can create convincing voice clones that panic victims into quick action.

2025 Statistics: Reports increased 450% in 2025, and 77% of victims reported an actual monetary loss

Warning Signs: Demands for immediate payment, refusal to provide proof of life, pressure to avoid contacting law enforcement

3. Romance Scams 2.0

How It Works: Fake video calls with AI-generated personas build trust over weeks or months before requesting money for “emergencies.” Scammers combine deepfake video with sophisticated social engineering tactics.

Target Demographics: Adults over 60 are targeted 3x more frequently and experience higher average losses

Warning Signs: Reluctance to meet in person, excuses for not video calling at certain times, rapid escalation to money requests

4. Bank Account Takeover & Identity Verification Bypass

How It Works: Criminals use deepfake video to pass identity verification checks, reset passwords, bypass security questions, and take over financial accounts. Dark web forums show increased discussion about using tools like DeepFaceLab and Avatarify to manipulate selfies for identity verification bypass.

Financial Impact: 42.5% of fraud attempts detected in the financial sector are now AI-driven

Warning Signs: Unexpected password reset notifications, unfamiliar device login alerts, unexplained account changes

5. Investment & Cryptocurrency Scams

How It Works: Scammers publish fake endorsements from celebrities, financial influencers, and business leaders to promote fraudulent investment schemes. The cryptocurrency sector accounts for 88% of all detected deepfake fraud cases.

Common Tactics: “Too good to be true” returns, pressure to invest quickly, fake trading platforms showing artificial gains

Warning Signs: Guaranteed returns of 20-40% weekly, pressure to keep opportunities secret, withdrawal delays with new fee demands

6. Employment & Recruitment Scams

How It Works: Fake recruiters use deepfake video interviews to collect personal information, social security numbers, and banking details for “direct deposit setup.”

Target: Job seekers, especially remote work applicants

Warning Signs: Jobs requiring upfront payment, requests for banking information before formal hiring, too-easy interview processes

7. Government & Authority Impersonation

How It Works: Scammers pose as IRS officials, Social Security Administration representatives, or law enforcement and demand immediate payments to avoid legal consequences.

Common Threats: Arrest warrants, frozen social security numbers, unpaid taxes requiring instant payment

Warning Signs: Demands for immediate payment via gift cards, cryptocurrency, or wire transfer; threats of arrest; refusal to provide official correspondence

Related Reading: AI in Finance 2026: RegTech, Ethical Trading & Market Transformation

How to Spot Deepfakes: Visual and Audio Red Flags

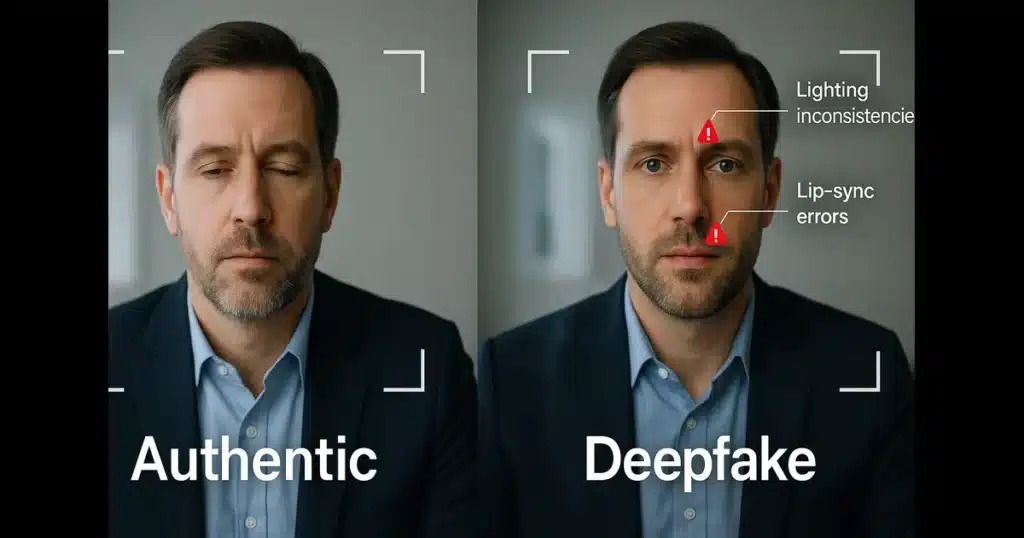

While deepfake technology continues to improve, current generation deepfakes still contain detectable anomalies. Training yourself to spot these red flags can prevent you from becoming a victim.

Visual Detection Methods

Primary Visual Red Flags:

- Unnatural Eye Blinking: Deepfakes often show irregular blink patterns, no blinking at all, or perfectly regular blinking that appears mechanical. Human blinks are spontaneous and vary in timing.

- Skin Tone Inconsistencies: Look for slight color variations around the face, neck, and hairline where the deepfake overlay meets the original video. Lighting may not match across all facial planes.

- Hair and Detail Artifacts: Individual hair strands may appear blurred, merge unnaturally, or create a halo effect around the head. Fine details like jewelry, glasses, or teeth may appear distorted.

- Lip Sync Errors: Audio may not perfectly match mouth movements, especially during rapid speech or when forming specific sounds like “P,” “B,” or “F.”

- Lighting Inconsistencies: Check whether lighting appears consistent across the face and matches the apparent light source in the background. Shadows may fall incorrectly.

- Movement Artifacts: When the subject turns their head quickly or moves out of frame, you may notice glitching, warping, or temporary quality degradation.

- Background Anomalies: The background may appear unusually static or blur when the subject moves, as deepfake algorithms focus primarily on the face.

Audio Detection Clues

Primary Audio Red Flags:

- Robotic Voice Patterns: Listen for slight digital artifacts, particularly during emotional speech. AI-generated voices may struggle with natural emotional inflection.

- Background Noise Mismatch: Audio quality should match the video environment. A deepfake voice may sound too clean for a noisy environment or lack appropriate reverb for the setting.

- Breathing Patterns: Natural speech includes audible breaths and pauses. AI-generated speech may lack these subtle human elements or place them unnaturally.

- Emotional Inconsistency: Voice tone may not match facial expressions, or emotional shifts may seem abrupt and unnatural.

- Pronunciation Anomalies: Deepfake voices may struggle with uncommon words, technical jargon, or words from other languages.

Real-Time Interaction Tests

During live video calls, request specific actions that current deepfake technology struggles to replicate in real-time:

- Ask the person to turn their head slowly from side to side

- Request they cover part of their face with their hand

- Ask them to hold up a specific number of fingers

- Request they write something on paper and show it to the camera

- Ask unexpected questions that require immediate, unrehearsed responses

Deepfakes excel at replicating recorded or pre-planned content but often show processing delays or artifacts during unexpected real-time interactions.
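The value of these tests comes from unpredictability: a request the impersonator cannot anticipate is harder to pre-render. As a minimal sketch, a random challenge could be drawn like this. The challenge wording, the `WORDS` list, and the function name `pick_challenge` are all hypothetical choices made for illustration.

```python
import secrets

# Challenge templates based on the actions listed above.
CHALLENGES = [
    "Turn your head slowly from side to side",
    "Cover part of your face with your hand",
    "Hold up {n} fingers",
    "Write the word '{word}' on paper and show it to the camera",
]

# Hypothetical word list; any set of ordinary words would do.
WORDS = ["lantern", "harbor", "violet", "copper"]


def pick_challenge() -> str:
    """Pick an unpredictable challenge to request during a live call."""
    template = secrets.choice(CHALLENGES)
    # Templates without placeholders simply ignore the extra arguments.
    return template.format(n=secrets.randbelow(5) + 1,
                           word=secrets.choice(WORDS))


print(pick_challenge())
```

A passed challenge is not proof of authenticity, so it works best as a quick first check alongside the callback procedures described later in this guide.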

Deepfake Detection Tools and Technologies

While human detection accuracy is only 24.5% for high-quality deepfakes, specialized AI detection tools can achieve significantly higher accuracy rates. Understanding which tools are available and their limitations is crucial for protection.

Professional-Grade Detection Solutions

Reality Defender

Type: Enterprise platform for images, video, audio, and text

Availability: API and web application for businesses, governments, and high-risk organizations

Features: Multi-model probabilistic detection, real-time analysis, explainable AI reporting

Use Case: Financial institutions, government agencies, media organizations

Sensity AI

Type: Real-time deepfake detection platform

Availability: Enterprise customers, particularly financial institutions

Features: Used by banks for real-time customer verification during account opening and high-value transactions

Reported Accuracy: 95%+ for known deepfake types

Microsoft Video Authenticator

Type: Confidence score video analysis tool

Availability: NOT publicly available; access was limited to organizations through the AI Foundation’s Reality Defender 2020 (RD2020) program

Features: Frame-by-frame confidence scoring, blending boundary detection

Important Note: Despite widespread media coverage, this tool is not integrated into Edge browser or available to consumers

Truepic

Type: Content authentication platform

Features: Creates cryptographically sealed content with verified origins, blockchain-based verification

Use Case: Insurance claims, legal evidence, journalism

Consumer-Level Detection Options

Important Reality Check: Most advanced deepfake detection tools are enterprise-focused and not freely available to consumers. Here’s what is accessible:

- Reverse Image Search: Use Google Images or TinEye to verify if images or video frames appear elsewhere online

- Metadata Analysis: Examine file metadata for signs of manipulation (though sophisticated attackers may alter this)

- Multiple Source Verification: Cross-reference suspicious content across multiple independent news sources

- Context Analysis: Evaluate whether the content makes sense given the person’s known positions, location, and circumstances
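As one small example of metadata analysis, the sketch below checks whether a JPEG file still carries an EXIF block, using only Python's standard library. It is a simplified illustration: the function name `jpeg_has_exif` is an assumption, and the parser only walks the header segments that precede the image data. A missing EXIF block is not evidence of manipulation, since many social platforms and messaging apps strip metadata on upload.

```python
import struct


def jpeg_has_exif(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an EXIF (APP1) segment."""
    if data[:2] != b"\xff\xd8":            # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:              # malformed segment header: stop scanning
            break
        marker = data[pos + 1]
        if marker == 0xDA:                 # SOS: compressed image data follows
            break
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Usage (the file name is hypothetical):
# with open("suspicious.jpg", "rb") as f:
#     print(jpeg_has_exif(f.read()))
```

When EXIF data is present, a dedicated metadata viewer can then show fields such as capture time and editing software, which may or may not have been altered.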

The Reality: The most effective deepfake detection currently relies on enterprise-grade AI tools that analyze multiple factors simultaneously. For individual consumers, the best protection is behavioral—using verification protocols before taking action on suspicious requests.

Related Reading: Top 10 AI Tools That Will Dominate 2025: Complete Analysis

Financial Institution Protection Protocols

Banks and financial institutions have responded to the deepfake threat with enhanced verification protocols. Understanding these systems can help you implement similar protections in your personal and professional life.

Multi-Factor Authentication (MFA) Requirements

Major financial institutions now require at least two separate verification methods for high-value transactions:

Standard Verification Layers:

- Biometric Verification: Fingerprint, face ID, or voice recognition (though now supplemented with liveness detection)

- One-Time Passwords (OTP): Sent via separate device or communication channel

- Knowledge-Based Authentication: Security questions with answers only you would know

- Behavioral Biometrics: Analysis of typing patterns, mouse movements, and device usage patterns

- Transaction Callbacks: Mandatory phone verification on registered numbers for transfers over threshold amounts
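For readers curious how app-generated one-time passwords work, the sketch below implements the public HOTP and TOTP algorithms (RFC 4226 and RFC 6238) with Python's standard library. It is illustrative only: the function names and the 30-second time step are choices made here, and nothing in this guide's sources says a particular bank uses this exact scheme. Real systems should rely on a vetted library.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                      # dynamic truncation offset
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now) // step)


# Test secret from RFC 4226, not a real credential.
print(totp(b"12345678901234567890"))
```

Because the code is derived from a shared secret and the current time, it changes every 30 seconds, so a code captured on one call is of little use later. It does not protect a victim who is persuaded to read the current code aloud to a scammer.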

Enhanced Transaction Verification

Financial institutions have implemented “callback verification” protocols:

- Wire transfers over $10,000 trigger automatic callback to registered phone numbers

- New beneficiary additions require 24-48 hour waiting periods

- Suspicious activity patterns trigger additional verification requirements

- Biometric verification combined with liveness detection (proof the person is physically present)
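The first two rules above can be expressed as a simple policy check. This is a sketch under stated assumptions, not any institution's actual system: the `Transfer` type, the `required_checks` function, and the choice of 24 hours (the lower bound of the 24-48 hour window) are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

CALLBACK_THRESHOLD_USD = 10_000          # threshold cited in the list above
BENEFICIARY_HOLD = timedelta(hours=24)   # assumed: lower bound of 24-48 hours


@dataclass
class Transfer:
    amount_usd: float
    beneficiary_added_at: datetime
    requested_at: datetime


def required_checks(t: Transfer) -> List[str]:
    """List the extra verification steps a transfer would trigger."""
    checks = []
    if t.amount_usd > CALLBACK_THRESHOLD_USD:
        checks.append("callback to registered phone number")
    if t.requested_at - t.beneficiary_added_at < BENEFICIARY_HOLD:
        checks.append("hold until beneficiary waiting period has elapsed")
    return checks


now = datetime(2025, 11, 21, 12, 0)
rushed = Transfer(25_000, beneficiary_added_at=now - timedelta(hours=2),
                  requested_at=now)
print(required_checks(rushed))
```

A large transfer to a beneficiary added two hours earlier triggers both checks, which is exactly the pattern that urgent deepfake requests tend to produce.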

Recommended Personal Financial Safeguards

- Set Low Transaction Limits: Configure daily transfer limits that require additional approval for amounts above your normal activity

- Enable All Available MFA: Activate every security layer your institution offers

- Register Multiple Contact Methods: Provide multiple phone numbers and email addresses for verification

- Create Verification Phrases: Establish secret phrases with family members for emergency verification

- Regular Account Monitoring: Check accounts daily for unauthorized activity

- Credit Freezes: Proactively freeze credit with all three bureaus (Equifax, Experian, TransUnion)

Business Security: Protecting Your Organization

With 67% of Fortune 500 companies now conducting simulated deepfake attacks to test employee vigilance, business leaders recognize deepfakes as a top-tier threat.

Employee Training Protocols

Mandatory Deepfake Awareness Training Should Include:

- Real-World Case Studies: The Hong Kong $25 million deepfake CFO case, the UK energy firm €220,000 voice clone fraud

- Detection Technique Training: Teaching employees to identify visual and audio red flags

- Verification Procedures: Establishing and practicing callback protocols for unusual requests

- Simulated Attacks: Regular testing with realistic deepfake scenarios

- Incident Response: Clear procedures for reporting suspected deepfake attempts

Technical Safeguards

Infrastructure Protection:

- Encrypted Video Communication: End-to-end encrypted platforms with authentication certificates

- Blockchain-Verified Digital Signatures: Cryptographic verification for critical communications

- Real-Time Deepfake Detection: Integration of detection tools into video conferencing systems

- Access Controls: Strict limitations on who can authorize financial transactions

- Transaction Velocity Controls: Automatic flags for unusual transaction patterns or amounts
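A transaction velocity control can be as simple as comparing a new payment against recent history. The sketch below flags amounts far above the historical median. The function name `is_unusual` and the 3x multiplier are assumptions for illustration, and production systems typically combine many more signals.

```python
from statistics import median
from typing import List


def is_unusual(amount: float, history: List[float],
               multiplier: float = 3.0) -> bool:
    """Flag a payment that is far larger than the account's typical payment."""
    if not history:
        return True   # no baseline yet: treat as unusual and review manually
    return amount > multiplier * median(history)


recent_payments = [1_200.0, 950.0, 1_400.0, 1_100.0]
print(is_unusual(48_000.0, recent_payments))   # far above typical payments
print(is_unusual(1_300.0, recent_payments))    # within the normal range
```

A flag like this should route the payment to manual review rather than block it outright, since legitimate large payments do occur.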

Policy and Procedure Updates

Essential policies for deepfake-resistant operations:

- No wire transfers or sensitive actions authorized via video call alone

- Mandatory dual-channel verification (e.g., video call confirmed by phone call)

- 24-48 hour waiting periods for changes to payment beneficiaries

- Executive verification protocols requiring multiple approval levels

- Regular security audits and penetration testing including deepfake scenarios
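The first, second, and fourth policies can be combined into a single approval rule. The sketch below is one possible encoding, with assumptions labeled: the channel labels, the `OUTBOUND_CHANNELS` set, and the requirement that at least one confirmation be initiated by the verifier are illustrative choices, not an industry standard.

```python
# Channels where the verifier initiates contact using known-good details.
# These labels are hypothetical.
OUTBOUND_CHANNELS = {"callback_known_number", "in_person"}


def may_execute(confirmed_channels: set, approvers: set) -> bool:
    """Allow a sensitive action only with dual-channel, multi-person approval."""
    has_two_channels = len(confirmed_channels) >= 2
    has_outbound = bool(confirmed_channels & OUTBOUND_CHANNELS)
    has_two_approvers = len(approvers) >= 2
    return has_two_channels and has_outbound and has_two_approvers


# A video call alone never satisfies the rule.
print(may_execute({"video_call"}, {"cfo", "controller"}))
# A video call confirmed by a callback to a known number does.
print(may_execute({"video_call", "callback_known_number"},
                  {"cfo", "controller"}))
```

Requiring a verifier-initiated channel matters because an attacker who controls an inbound video call can often control an inbound email or chat message as well.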

Related Reading: AI Banking 2026: Autonomous Lending & Smart Fraud Prevention

Legal Framework: The TAKE IT DOWN Act and Federal Protections

In May 2025, the United States took its first major legislative action against deepfake abuse with the passage of the TAKE IT DOWN Act.

The TAKE IT DOWN Act: Federal Deepfake Legislation

Official Name: Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act

Signed Into Law: May 19, 2025, by President Donald Trump

Congressional Support: Passed Senate unanimously; House vote 409-2 (bipartisan support)

Primary Sponsors: Senators Ted Cruz (R-TX) and Amy Klobuchar (D-MN)

What the Law Criminalizes:

- Publication of non-consensual intimate visual depictions (authentic or AI-generated)

- Digitally falsified intimate depictions (deepfakes) of identifiable individuals published with intent to cause harm

- Threats to publish authentic or digitally falsified intimate images

Penalties Under the TAKE IT DOWN Act:

- Adults: Up to 2 years imprisonment for sharing non-consensual intimate imagery

- Involving Minors: Up to 3 years imprisonment for publishing intimate imagery of minors, whether authentic or AI-generated

- Threats Involving Minors: Up to 30 months imprisonment for threatening to share deepfakes of minors

- Civil Enforcement: Platform failures to honor takedown requests are treated as unfair or deceptive practices, enforced by the Federal Trade Commission

Platform Requirements:

- Online platforms must remove reported intimate deepfakes within 48 hours of notice

- Platforms must establish notice-and-takedown systems by May 2026

- Failure to comply constitutes a violation enforceable by the FTC, with associated penalties

- Good faith removal efforts are protected by safe harbor provisions
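For platform teams, or for victims tracking a report, the 48-hour window translates into a simple deadline calculation. This sketch assumes the clock starts when the notice is received and uses timezone-aware timestamps. The function names are hypothetical, and it is not legal guidance on how the deadline is measured.

```python
from datetime import datetime, timedelta, timezone

REMOVAL_WINDOW = timedelta(hours=48)   # removal window described above


def removal_deadline(notice_received: datetime) -> datetime:
    """Latest time by which reported content should be removed."""
    return notice_received + REMOVAL_WINDOW


def is_overdue(notice_received: datetime, now: datetime) -> bool:
    """True once the removal window has passed."""
    return now > removal_deadline(notice_received)


notice = datetime(2026, 6, 1, 9, 0, tzinfo=timezone.utc)
print(removal_deadline(notice).isoformat())
```

Recording the notice time in UTC avoids ambiguity when the reporter and the platform are in different time zones.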

Important Clarification: The TAKE IT DOWN Act specifically addresses non-consensual intimate imagery and harmful deepfakes. It does NOT address all forms of deepfake fraud (such as financial scams or impersonation for monetary gain), which remain prosecutable under existing fraud statutes.

State-Level Deepfake Laws

As of November 2025, at least 30 states have enacted deepfake-specific legislation, creating a patchwork of protections:

- California: Criminalized malicious deepfakes in pornography and political advertising

- Texas: First state (2019) to outlaw synthetic videos meant to influence elections

- New York: Illegal to disseminate AI-generated explicit content without consent (October 2023)

- New Jersey: Criminal and civil penalties up to $30,000 for creating/sharing deepfakes (April 2025)

Reporting Procedures for Deepfake Fraud

If you encounter a deepfake scam or become a victim of one, take these immediate steps:

Immediate Actions:

- Document Everything: Screenshot/record all evidence, save URLs, preserve metadata, document timestamps

- Report to Federal Trade Commission: ReportFraud.ftc.gov

- File FBI IC3 Report: ic3.gov – Internet Crime Complaint Center

- Contact Financial Institutions: Immediately notify banks involved to attempt transaction reversal

- Alert Credit Bureaus: Place fraud alerts or freezes with Equifax, Experian, and TransUnion

- Local Law Enforcement: File police report for potential state-level charges

- Platform Reporting: Report content to the hosting platform; intimate deepfakes fall under the TAKE IT DOWN Act’s takedown provisions
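For the "Document Everything" step, recording a cryptographic hash of each saved file helps show later that the evidence was not altered after collection. The sketch below is a minimal illustration: the function name `evidence_record` and the field names are assumptions, and investigators may have their own requirements.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def evidence_record(path: Path, source_url: str = "") -> dict:
    """Describe a saved evidence file with its hash and a UTC timestamp."""
    data = path.read_bytes()
    return {
        "file": path.name,
        "sha256": hashlib.sha256(data).hexdigest(),
        "size_bytes": len(data),
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
        "source_url": source_url,
    }


# Usage (the file name and URL are hypothetical):
# record = evidence_record(Path("scam_call.mp4"), "https://example.com/post/123")
# print(json.dumps(record, indent=2))
```

Keep the original files untouched and store the records separately, for example in a note emailed to yourself, so the timestamps have an independent trail.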

Related Reading: AI Regulation in the US 2025: Federal vs. State Divide

Future-Proofing Your Digital Identity

As deepfake technology continues to advance, proactive protection becomes increasingly critical. These strategies help minimize your vulnerability.

Digital Footprint Management

Limit Deepfake Training Material:

- Social Media Privacy: Set profiles to private, limit publicly visible photos and videos

- Audio Samples: Be cautious about public voicemail messages, podcasts, or recorded presentations

- Video Content: Limit high-quality video of yourself online, especially with clear facial views and audio

- Reverse Image Search: Regularly search for your images online to monitor unauthorized use

Proactive Security Measures

Essential Protections:

- Digital Watermarking Services: Use services that embed verifiable markers in your content (Truepic, Content Credentials)

- Biometric Locking: Enable maximum security settings on all biometric logins, including liveness detection

- Credit Freezes: Proactively freeze credit with all three bureaus—free and reversible when needed

- Identity Monitoring: Subscribe to identity theft monitoring services

- Encrypted Communications: Use end-to-end encrypted platforms for sensitive discussions

- Password Managers: Unique, complex passwords for every account

- Regular Security Audits: Monthly review of account activity and security settings

Family Emergency Verification Protocols

Establish these protocols with family members BEFORE an emergency occurs:

- Secret Verification Phrases: Create unique phrases or code words that only family members know

- Verification Questions: Establish questions with answers only real family would know

- Callback Procedures: Always hang up and call back on a number you already know; never call a number provided by the “emergency” caller

- Contact Lists: Maintain verified contact information for all family members in secure, offline format

- Emergency Protocols: Practice scenarios to reduce panic response during actual emergencies
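The callback rule is easy to state and easy to forget under pressure, so it can help to see it written as logic. In this sketch, the number supplied by the caller is accepted as input and then deliberately ignored. The contact list, names, and phone numbers are all hypothetical.

```python
from typing import Dict, Optional


def number_to_call(claimed_name: str, number_from_caller: str,
                   verified_contacts: Dict[str, str]) -> Optional[str]:
    """Return the pre-verified number for the person the caller claims to be.

    number_from_caller is intentionally never used: a number supplied
    during a suspicious call cannot be trusted.
    """
    return verified_contacts.get(claimed_name.strip().lower())


# Hypothetical contact list, prepared before any emergency.
contacts = {"alex": "+1-555-0100", "grandma": "+1-555-0101"}

print(number_to_call("Alex", "+1-555-0199", contacts))
```

If the function returns nothing, there is no verified number on file, and the safest response is to reach the person through another family member rather than to trust the caller.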

💡 Essential Protection Checklist

- ✅ Verify through multiple channels before acting on urgent requests—especially financial

- ✅ Use established code words with family for emergency verification

- ✅ Enable all available MFA options on financial and sensitive accounts

- ✅ Trust your instincts—if something feels off, it probably is

- ✅ Never rush financial decisions based on video, audio, or email alone

- ✅ Educate family members, especially older adults who are targeted more frequently

- ✅ Stay informed about new deepfake tactics through official sources

- ✅ Implement callback protocols for any unexpected financial requests

Frequently Asked Questions

Q1: How can I tell if a video call is a deepfake in real-time?

A: Request real-time interactions that current deepfake technology struggles to replicate. Ask the person to turn their head slowly side-to-side, cover parts of their face with their hand, hold up a specific number of fingers, or write something on paper and show it to the camera. Deepfakes excel at replicating pre-recorded content but often show processing delays, glitches, or quality degradation during unexpected real-time actions. Additionally, ask unexpected questions requiring immediate, unrehearsed responses. If the interaction feels scripted or the person makes excuses about their camera, internet connection, or inability to perform simple actions, treat the communication as suspicious and verify through alternative channels.

Q2: Are voice deepfakes as dangerous as video deepfakes?

A: Yes, voice deepfakes are often MORE dangerous for several reasons. First, voice cloning requires only 3-5 seconds of audio to create convincing replicas, compared to hours of video needed for high-quality video deepfakes. Second, many high-stakes transactions occur via phone calls where video verification isn’t available. Third, people tend to trust familiar voices more immediately than video, making them act faster without verification. The FBI reports that voice cloning is now the top attack vector for deepfake fraud. Always establish callback verification protocols for voice-only financial authorizations: hang up, call back on a number you know is legitimate, and never use a number provided during the suspicious call.

Q3: What should I do if I’ve already been scammed by a deepfake?

A: Act immediately to minimize damage:

- Within minutes: Contact your financial institution’s fraud department to attempt transaction reversal or account freeze

- Within 24 hours: File reports with the FTC (ReportFraud.ftc.gov) and FBI IC3 (ic3.gov)

- Within 48 hours: Place fraud alerts with all three credit bureaus (Equifax, Experian, TransUnion) and consider credit freezes

- Within one week: File police report for local law enforcement record

- Ongoing: Monitor all financial accounts daily for at least 3 months, change passwords on all accounts, enable maximum security settings

The faster you act, the better your chance of recovery. Banks have limited time windows for transaction reversals, so immediate action is critical.

Q4: Why are older adults more vulnerable to deepfake scams?

A: Statistics show adults over 60 are targeted 3x more frequently and experience higher average losses for several reasons. First, older adults often have more substantial savings and retirement accounts, making them attractive targets. Second, they may have less familiarity with AI capabilities and deepfake technology, making sophisticated scams more convincing. Third, scammers exploit emotional triggers like grandparent scams (fake kidnappings of grandchildren) that bypass rational analysis. Fourth, older adults are statistically more likely to answer unknown phone calls and engage with unexpected contacts. Protect older family members by conducting regular scam awareness conversations, establishing verification protocols, setting up transaction alerts on their accounts, and considering joint account arrangements that require multiple approvals for large transactions.

Q5: Are free deepfake detection tools reliable enough for personal use?

A: The reality is that most advanced deepfake detection tools are enterprise-focused and not freely available to consumers. Tools like Microsoft Video Authenticator, despite media coverage, are NOT publicly accessible—they’re limited to organizations through special programs. Consumer options are limited to reverse image searches, metadata analysis, and manual inspection for visual/audio red flags. For high-stakes decisions (large financial transactions, legal matters), the most reliable protection is behavioral rather than technological: implement multi-channel verification, use callback procedures, never rush decisions based on a single communication channel, and when in doubt, verify in person or through independently confirmed contact information. Professional detection services exist but typically cost hundreds to thousands of dollars per analysis, making them impractical for routine personal use.

Q6: Can deepfakes bypass facial recognition security on my phone?

A: Modern smartphone facial recognition systems (like Apple’s Face ID) include anti-spoofing checks, often described as “liveness detection,” that make them hard to fool with photos or on-screen video. These systems analyze 3D depth information, can require the user’s attention (eyes open and directed at the device), and detect other signs of physical presence that static images or basic videos cannot replicate. However, 42.5% of fraud attempts detected in the financial sector are now AI-driven, and identity verification flows that rely on a selfie or video are a known target. To maximize protection: (1) Enable all available biometric options, (2) Use multi-factor authentication combining biometrics with passwords and one-time codes, (3) Register alternative biometrics (both fingerprint and face recognition), (4) Keep device software updated to get the latest security patches, (5) Never provide biometric data (photos, voice recordings) to unverified parties. While current deepfakes struggle with liveness detection, the technology continues to evolve; layered security remains essential.

Q7: What’s the difference between the TAKE IT DOWN Act and other fraud laws?

A: The TAKE IT DOWN Act (signed May 19, 2025) specifically addresses non-consensual intimate imagery and harmful deepfakes, requiring platforms to remove reported content within 48 hours and establishing criminal penalties up to 3 years imprisonment. However, it does NOT cover all deepfake fraud types—financial scams, CEO fraud, investment scams, and other non-intimate deepfake crimes remain prosecuted under existing fraud statutes (wire fraud, identity theft, computer fraud). The TAKE IT DOWN Act’s significance is that it’s the first federal law explicitly naming and criminalizing deepfakes, but it’s narrowly focused on intimate content. Other deepfake crimes are prosecuted under laws like the Computer Fraud and Abuse Act, wire fraud statutes, and state-level fraud laws. Victims of financial deepfake fraud should report to FBI IC3 and FTC under existing fraud reporting channels.

Q8: How will deepfake technology evolve, and can we stay protected?

A: Deepfake technology will inevitably improve—neural networks continuously learn and adapt, making detection increasingly challenging. However, detection technology evolves simultaneously. The future of protection lies in multi-layered approaches: (1) Technical—AI detection tools, blockchain verification, digital watermarking; (2) Behavioral—verification protocols, multi-channel confirmation, healthy skepticism; (3) Legal—expanding legislation, platform accountability, international cooperation; (4) Educational—widespread media literacy, regular training updates. The key insight: we cannot rely solely on our ability to spot deepfakes. Instead, we must implement systems and procedures that assume any single communication could be compromised. This means never making critical decisions (especially financial) based on a single video call, email, or voice message—always verify through multiple independent channels. The deepfake threat will persist, but properly implemented verification protocols can neutralize most attacks.

📚 Related Reading

- AI Cybersecurity 2025: Protecting Against Next-Gen Threats

- Smart Investment Strategies for 2025: Protecting Your Finances

- AI in Finance 2026: RegTech & Ethical Trading

- AI Banking 2026: Smart Fraud Prevention

- Top 10 AI Tools That Will Dominate 2025

- AI Technology: ChatGPT Alternatives to Explore in 2025

Conclusion: Vigilance as Your Best Defense

The deepfake threat landscape evolves daily, with new techniques emerging as quickly as defenses are developed. However, understanding the threat, recognizing warning signs, and implementing robust verification protocols can significantly reduce your risk.

Remember these core principles:

- Trust but verify—especially when money or sensitive information is involved

- Multiple channels—never act on urgent requests from a single communication source

- Healthy skepticism—if something feels wrong, it probably is

- Time is your ally—legitimate requests can wait for proper verification

- Education is ongoing—stay informed about evolving tactics

The deepfake threat is real and growing, but it is not insurmountable. With awareness, preparation, and disciplined verification procedures, you can protect yourself, your family, and your organization from becoming another statistic in the AI fraud epidemic.

Your skepticism is your best defense against AI-powered fraud.

📋 Disclaimer and Sources

Editorial Standards: This article contains information verified through official government sources, cybersecurity industry reports, and published research. All statistics are sourced from reputable organizations and include citations to primary sources.

Primary Sources Verified:

- Signicat: “The Battle Against AI-Driven Identity Fraud” Report (March 2025)

- Resemble AI: Q1 2025 Deepfake Incident Report

- Deloitte Center for Financial Services: Generative AI Fraud Projections

- Keepnet Labs: Deepfake Statistics & Trends 2025

- U.S. Federal Trade Commission (FTC): Official fraud reporting data

- FBI Internet Crime Complaint Center (IC3): 2023-2024 Reports

- U.S. Congress: TAKE IT DOWN Act (Public Law, May 19, 2025)

- Reality Defender, Sensity AI, Truepic: Official company documentation

- Microsoft Research: Video Authenticator announcement (2020)

Important Notes:

Tool Availability: Many deepfake detection tools mentioned are enterprise-focused and not publicly available. Consumer options are limited, and the most reliable protection comes from verification protocols rather than technological tools.

Legal Disclaimer: This article provides educational information about deepfake scams and protection strategies. It does not constitute legal advice, cybersecurity consulting, or financial advice. For specific concerns about legal rights, reporting procedures, or business security implementation, consult with qualified legal and cybersecurity professionals.

Statistics Disclaimer: Deepfake fraud statistics are rapidly evolving. Numbers cited represent the most recent verified data available as of November 2025 from authoritative sources. Actual current figures may vary as new data is published.

Detection Tool Disclaimer: No deepfake detection method is 100% accurate. Detection tools should be used as part of a comprehensive security strategy that includes procedural safeguards, not as standalone solutions.

Last Fact-Checked: November 21, 2025

Updates: This article will be updated as new federal legislation, detection technologies, and verified statistics become available. Check the publication date at the top of the article for the most recent update.

Reporting: If you encounter suspected deepfake fraud, report to:

- FTC: ReportFraud.ftc.gov

- FBI IC3: ic3.gov

- Local law enforcement as appropriate
