Nvidia CEO Reports Explosive Demand for Blackwell AI Chips During Taiwan Partnership Summit

Nvidia CEO Jensen Huang said on Saturday, November 8, 2025, that his company is seeing “very strong demand” for its Blackwell AI processors. Huang spoke to reporters at Taiwan Semiconductor Manufacturing Company’s annual sports day in Hsinchu, and his comments underscore the accelerating global competition for advanced AI computing infrastructure.

The remarks came during Huang’s fourth public visit to Taiwan this year, highlighting the critical importance of Nvidia’s manufacturing partnership with TSMC as both companies navigate increasingly complex global semiconductor supply chains. With the Trump administration maintaining restrictions on advanced chip sales to China, Taiwan’s role as the primary production hub for cutting-edge AI processors has become even more strategically significant.

This analysis examines the factors driving Blackwell demand, the implications for global AI infrastructure deployment, and what industry experts project for the semiconductor market through 2026.

The Blackwell Phenomenon: Understanding Nvidia’s Latest Innovation

Technical Specifications and Capabilities

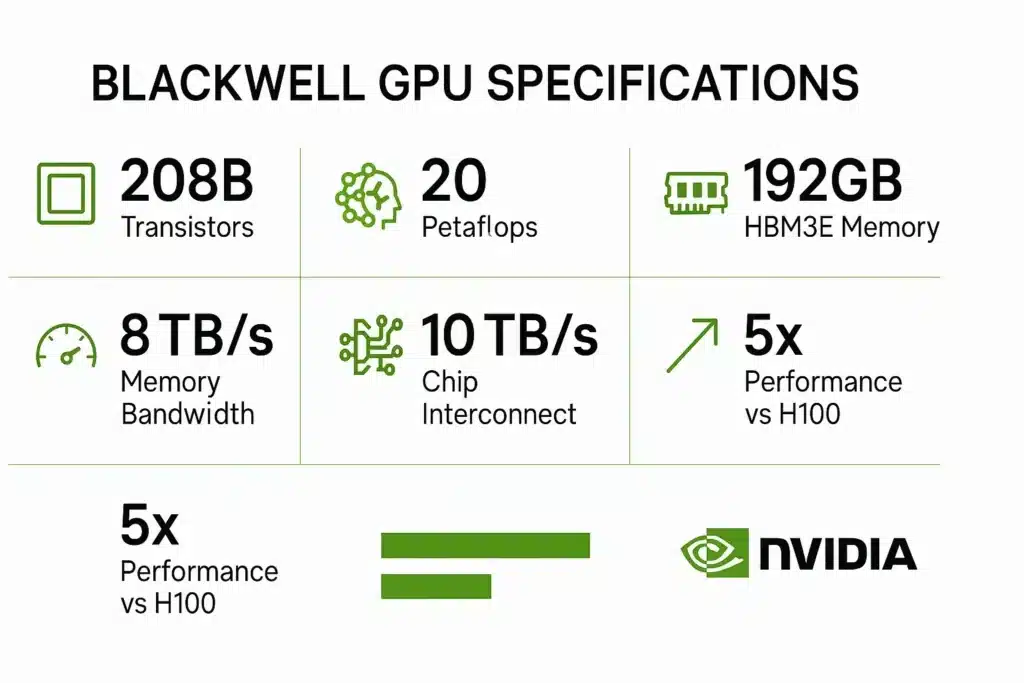

Nvidia’s Blackwell architecture is the company’s most ambitious chip design to date. Unlike traditional single-die processors, a Blackwell GPU consists of two large dies working as a unified system, linked by a chip-to-chip interconnect that Nvidia rates at 10 terabytes per second.

Verified Technical Specifications:

According to Nvidia’s published specifications, the flagship B200 GPU offers:

- 208 billion transistors across the dual-die package (104 billion per die)

- Up to 20 petaflops of AI performance using FP4 precision format

- 192GB HBM3E memory with 8TB/sec bandwidth across an 8192-bit bus interface

- TSMC 4NP process node manufacturing technology

- 2.6 times as many transistors as the previous-generation H100 Hopper GPU (80 billion transistors)

- Fifth-generation Tensor Cores for accelerated AI computations

- Second-generation Transformer Engine supporting lower-precision calculations

The performance improvements are substantial. Blackwell’s 20 petaflops is five times the roughly 4 petaflops of FP8 performance Nvidia quotes for the H100, but the comparison is not like-for-like: FP4 stores each value in half as many bits as FP8, so the same hardware completes more operations per second at the lower precision.
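The headline ratios can be recomputed directly from the figures quoted above. The sketch below uses only this article's numbers; the dictionary layout and the `ratio` helper are illustrative, and the throughput ratio inherits the FP4 versus FP8 caveat rather than resolving it.

```python
# Headline figures as quoted in this article (vendor peak numbers, not benchmarks).
SPECS = {
    "H100": {"transistors_bn": 80, "peak_pflops": 4.0, "precision": "FP8"},
    "B200": {"transistors_bn": 208, "peak_pflops": 20.0, "precision": "FP4"},
}

def ratio(metric: str, new: str = "B200", old: str = "H100") -> float:
    """Return how many times larger `new` is than `old` for one metric."""
    return SPECS[new][metric] / SPECS[old][metric]

transistor_ratio = ratio("transistors_bn")  # 208 / 80
perf_ratio = ratio("peak_pflops")           # 20 / 4, at different precisions

print(f"Transistors: {transistor_ratio:.1f}x ({transistor_ratio - 1:.0%} more)")
print(f"Peak AI throughput: {perf_ratio:.1f}x "
      f"({SPECS['B200']['precision']} vs {SPECS['H100']['precision']})")
```

The first line prints a 2.6x ratio, which is 160% more transistors; the second prints 5.0x, a figure that only holds when Blackwell runs at the lower FP4 precision.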

Technical Context:

The dual-die design emerged as a necessity rather than a preference. Lithography tools can pattern only a limited area in a single exposure (the reticle limit, a field of 26 by 33 millimeters, or about 858 square millimeters), so a single die cannot grow past that size. Rather than compromise on computational capacity, Nvidia connected two reticle-limited dies, each among the largest chips currently in production. For comparison, the single-die H100 measures about 814 square millimeters.

Key Factors Driving Unprecedented Demand

Multiple converging factors are creating exceptional demand for Blackwell processors, according to industry analysis and market reports.

Enterprise AI Deployment Acceleration

The generative AI sector has transitioned from experimental pilots to production-scale deployments over the past 18 months. Organizations that initially tested AI applications with limited infrastructure now require massive computational resources to support enterprise-wide implementations. This shift from proof-of-concept to operational deployment creates sustained, predictable demand rather than speculative purchasing.

Cloud Provider Infrastructure Competition

Major cloud service providers—including Microsoft Azure, Amazon Web Services, and Google Cloud—recognize that AI computational capacity represents a defining competitive differentiator for the next decade. Industry observers note that these providers are securing substantial Blackwell allocations to ensure they can offer competitive AI services to their enterprise customers.

Strategic Stockpiling

The chip shortages of 2021 to 2023 taught technology companies harsh lessons about supply chain vulnerability. Many organizations now approach critical chip procurement with strategic reserve planning, securing allocations well beyond immediate needs to avoid falling behind competitors when supply tightens.

Performance Economics

While individual Blackwell chips carry substantial price tags (discussed below), the performance-per-watt improvements and computational density can create favorable total cost of ownership compared to deploying larger numbers of previous-generation chips. For large-scale AI training and inference workloads, these economics drive strong preference for the latest architecture.
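A rough sketch of the density argument follows, using only the peak throughput and per-GPU power figures cited in this article (about 20 petaflops and up to roughly 1,200 watts for Blackwell, about 4 petaflops and 700 watts for H100). The 100-petaflop target is hypothetical, and because the two peak numbers use different precisions, the result illustrates density rather than a real-world benchmark.

```python
import math

# Peak throughput (petaflops, vendor-quoted) and power draw (watts) per GPU,
# as cited in this article. Blackwell's figure is FP4 and H100's is FP8,
# so this illustrates density, not an apples-to-apples benchmark.
GPUS = {
    "H100": {"pflops": 4.0, "watts": 700},
    "B200": {"pflops": 20.0, "watts": 1200},
}

def fleet_for_target(gpu: str, target_pflops: float) -> tuple[int, float]:
    """Return (GPU count, total GPU power in kW) needed to reach a peak-throughput target."""
    spec = GPUS[gpu]
    count = math.ceil(target_pflops / spec["pflops"])
    return count, count * spec["watts"] / 1000

TARGET_PFLOPS = 100.0  # hypothetical capacity target
for name in GPUS:
    count, kw = fleet_for_target(name, TARGET_PFLOPS)
    print(f"{name}: {count} GPUs, {kw:.1f} kW of GPU power")
```

Under these assumptions the target takes 25 H100s drawing 17.5 kW versus 5 Blackwell GPUs drawing 6.0 kW. A real total-cost-of-ownership estimate would also need purchase prices, cooling, networking, and achieved (not peak) utilization.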

TSMC Sports Day Revelations: What Jensen Huang Said

Event Context and Strategic Significance

TSMC’s annual sports day typically functions as an internal company culture event rather than a venue for major industry announcements. However, Jensen Huang’s presence at the November 8 event—his fourth Taiwan visit in 2025—signals the intensifying nature of the Nvidia-TSMC relationship as demand for advanced AI chips accelerates.

The setting itself carries strategic messaging. By attending TSMC’s employee event, Huang demonstrates the partnership extends beyond transactional manufacturing relationships into strategic collaboration essential for both companies’ futures.

Key Quotes and Industry Implications

During the Hsinchu event, Huang provided reporters with several significant statements about Blackwell demand and production.

Verified Quotes from November 8, 2025:

“Nvidia builds the GPU, but we also build the CPU, the networking, the switches—there are a lot of chips associated with Blackwell,” Huang told reporters at the event, emphasizing the complexity of Blackwell system architectures beyond individual processors.

When discussing TSMC’s role, Huang stated: “TSMC is doing a very good job supporting us on wafers,” adding that “Nvidia’s success would not be possible without TSMC.”

TSMC CEO C.C. Wei confirmed during the same event that Huang had “asked for wafers,” though Wei declined to specify quantities for competitive reasons, stating only that the number was confidential.

Production Complexity

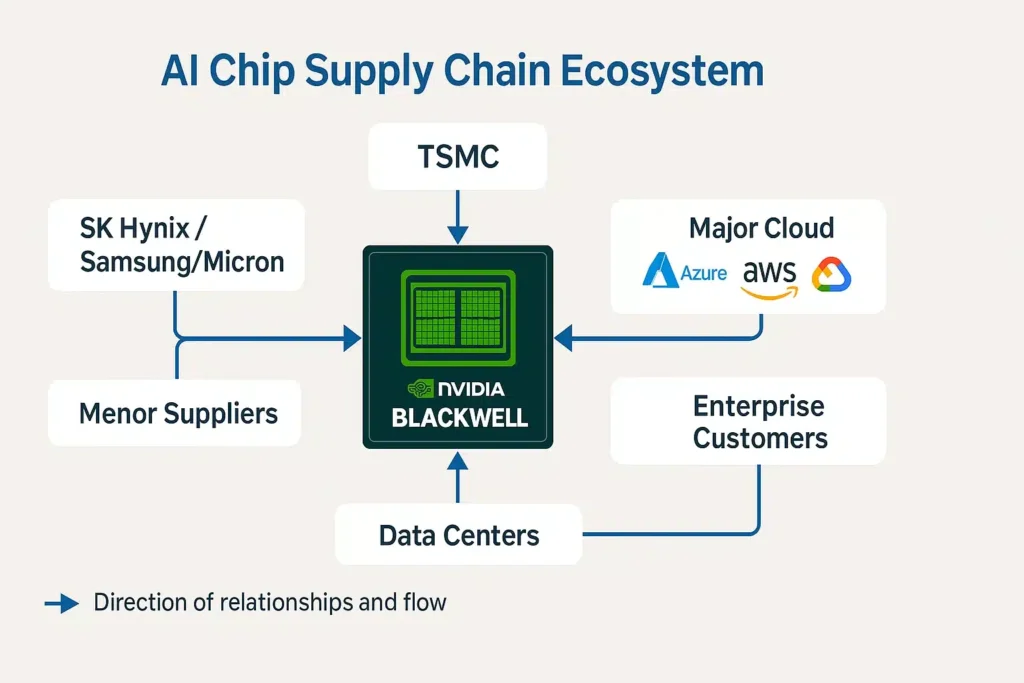

Huang’s emphasis on the multi-component nature of Blackwell systems illuminates why supply chain challenges extend beyond GPU manufacturing alone. Complete Blackwell-based systems require:

- GPU processors from TSMC

- High-bandwidth memory (HBM3E) from memory manufacturers including SK Hynix, Samsung, and Micron

- Custom networking chips and switches

- Arm-based Grace CPUs for certain configurations

- Specialized cooling systems for thermal management

- Power delivery infrastructure for the substantial electrical requirements

This systems-level complexity means that even with adequate GPU wafer supply from TSMC, other component constraints could limit overall system availability.
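The point that the scarcest component, not the GPU alone, caps output can be sketched as a minimum over parts. Every quantity below is hypothetical (including the part names and per-system counts) and chosen only to show that ample GPU supply does not guarantee complete systems.

```python
def buildable_systems(supply: dict[str, int], per_system: dict[str, int]) -> int:
    """Number of complete systems the current inventory supports: limited by the scarcest part."""
    return min(supply[part] // qty for part, qty in per_system.items())

def bottleneck(supply: dict[str, int], per_system: dict[str, int]) -> str:
    """Name of the part that runs out first."""
    return min(per_system, key=lambda part: supply[part] // per_system[part])

# Hypothetical bill of materials for one 8-GPU server and hypothetical inventory.
PER_SYSTEM = {"gpu": 8, "hbm_stack": 64, "network_switch": 2, "cooling_kit": 1}
SUPPLY = {"gpu": 8_000, "hbm_stack": 48_000, "network_switch": 3_000, "cooling_kit": 900}

print(buildable_systems(SUPPLY, PER_SYSTEM), "systems; limited by",
      bottleneck(SUPPLY, PER_SYSTEM))
```

Here the GPUs alone would support 1,000 servers, but the memory supply supports only 750, so memory sets the ceiling.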

China Export Context

The day before the TSMC event, on November 7, Huang confirmed to reporters that there are “no active discussions” about selling Blackwell chips to China, maintaining compliance with U.S. export restrictions. This policy backdrop makes the Nvidia-TSMC partnership even more crucial, as Chinese chip manufacturers cannot access TSMC’s most advanced manufacturing nodes under current restrictions.

For detailed analysis of these export control dynamics, see our comprehensive coverage: US Nvidia Restrictions Widen: What the China Chip Ban Means for Crypto Mining.

Supply Chain Impact and Market Dynamics

Primary Beneficiaries and Competitive Effects

The surge in Blackwell demand creates ripple effects throughout the semiconductor and technology infrastructure sectors.

Immediate Beneficiaries:

Memory Manufacturers: SK Hynix, Samsung, and Micron are ramping high-bandwidth memory (HBM3E) production to meet Nvidia’s specifications. SK Hynix announced in late 2024 that it had sold out its 2025 HBM chip production, indicating the tight supply-demand balance for this critical component. These manufacturers benefit from both volume increases and the premium pricing power associated with cutting-edge memory technology.

TSMC: As the exclusive manufacturer for Blackwell processors, TSMC captures substantial revenue from Nvidia’s orders. While TSMC does not disclose customer-specific capacity allocations, industry analysts estimate that Nvidia represents a significant portion of TSMC’s advanced node production. For context on TSMC’s manufacturing capabilities, see: TSMC Samsung 1.4nm Chips 2025: Next-Generation Semiconductor Race.

Server and Infrastructure Manufacturers: Companies like Dell Technologies, Hewlett Packard Enterprise, and Supermicro are redesigning server platforms to accommodate Blackwell’s dense computational packaging and demanding cooling requirements. Each Blackwell GPU can draw up to approximately 1,200 watts in the highest-power configurations, compared with 700 watts for the H100.

Competitive Dynamics:

The intense Blackwell demand potentially disadvantages smaller AI chip companies attempting to gain market share. When Nvidia secures substantial manufacturing capacity at TSMC and memory suppliers allocate production to Nvidia’s specifications, alternative chip providers face longer lead times and potentially higher costs for comparable advanced manufacturing access.

AMD, Intel, and various AI chip startups continue developing competing solutions, but Nvidia’s combination of:

- Proven hardware performance

- Mature software ecosystem (CUDA platform with nearly two decades of development)

- Strategic manufacturing relationships

- First-mover advantages in AI training infrastructure

…creates formidable competitive barriers.

Geopolitical Context and Export Restrictions

Blackwell demand occurs against significant geopolitical tensions surrounding semiconductor technology and AI capabilities.

U.S. Export Control Framework:

The Trump administration has maintained and strengthened restrictions preventing sales of advanced AI processors to China, citing national security concerns about potential military applications and AI industry development that could challenge U.S. technological leadership.

These restrictions mean:

- Nvidia cannot sell Blackwell chips to Chinese customers

- Chinese organizations cannot access Blackwell computational capabilities through most cloud services

- Alternative chip designs specifically for the Chinese market must use less advanced specifications to comply with export rules

Taiwan’s Strategic Position:

With Chinese manufacturers unable to access the most advanced manufacturing nodes, and U.S. domestic production still years away from matching TSMC’s capabilities for cutting-edge processors, Taiwan remains the critical production chokepoint for global AI infrastructure.

This creates both opportunities and vulnerabilities:

- Opportunity: Taiwan’s semiconductor industry captures exceptional value from AI boom

- Vulnerability: Any disruption to Taiwan operations would severely impact global AI development

TSMC is building advanced facilities in Arizona to diversify geographically, but these facilities won’t reach meaningful production volumes until the late 2020s.

Global AI Infrastructure Bifurcation:

The export restrictions accelerate the division of global AI ecosystems into U.S./allied and Chinese spheres, each with distinct:

- Hardware platforms

- Software frameworks

- Training data sources

- Application deployments

This bifurcation has profound long-term implications for AI development, international technology collaboration, and competitive dynamics in AI-driven industries.

Organizations deploying AI systems globally must navigate these divided ecosystems, often maintaining separate infrastructure and capabilities for different geographic markets. For regulatory considerations in AI deployment, see: US AI Regulation 2025: Comprehensive Compliance Guide for Organizations.

Market Analysis and Expert Perspectives

Industry analysts view the current AI infrastructure buildout as fundamentally different from previous technology investment cycles.

Distinguishing Current Cycle from Speculative Bubbles:

Unlike the dot-com era, where speculative investment often preceded demonstrable business value, current AI infrastructure spending is driven by:

- Measurable productivity improvements from AI deployments

- Competitive necessity as AI capabilities become table stakes across industries

- Direct revenue generation from AI-powered products and services

- Operational cost reductions from AI automation

This foundation of tangible business value suggests more sustainable demand patterns than purely speculative technology cycles.

Pricing and Revenue Implications:

According to Jensen Huang’s March 2024 CNBC interview, individual Blackwell chips are priced between $30,000 and $40,000 per unit. However, Huang emphasized that Nvidia typically sells complete systems, such as DGX B200 servers with eight Blackwell GPUs or DGX SuperPOD configurations linking 576 Blackwell GPUs, rather than individual chips. These complete systems command prices reaching into millions of dollars per installation.

Important Note: These pricing figures represent Huang’s approximate disclosures rather than official published list prices, and actual customer costs vary based on configuration, volume, and relationship factors.
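To show how the per-chip range scales to system sizes, the sketch below multiplies Huang's approximate $30,000 to $40,000 range by GPU count. It counts only the GPUs themselves; CPUs, networking, chassis, software, and support are excluded, and actual system pricing is not a simple sum of parts.

```python
PRICE_LOW, PRICE_HIGH = 30_000, 40_000  # approximate per-GPU range Huang cited in March 2024

def gpu_content_range(num_gpus: int) -> tuple[int, int]:
    """GPU-only cost range for a configuration (excludes CPUs, networking, chassis, etc.)."""
    return num_gpus * PRICE_LOW, num_gpus * PRICE_HIGH

for label, n in [("8-GPU server", 8), ("576-GPU cluster", 576)]:
    low, high = gpu_content_range(n)
    print(f"{label}: ${low:,} to ${high:,} in GPUs alone")
```

An eight-GPU server carries roughly $240,000 to $320,000 of GPU content, and a 576-GPU configuration roughly $17.3 million to $23.0 million, before any other components.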

Supply Timeline Challenges:

Nvidia began shipping Blackwell systems to select customers in late 2024, and availability broadened through 2025. However, the “very strong demand” Huang described at the TSMC event suggests supply will remain constrained into 2026.

For enterprises planning AI deployments, this creates challenging procurement dynamics:

- Lead times that were already measured in quarters may extend to year-plus timeframes for some configurations

- Organizations without existing allocations may compete for limited availability

- Premium pricing likely persists while demand exceeds supply

Cloud Provider Advantage:

Major cloud providers possess advantages in securing Blackwell allocations through:

- Strategic relationships with Nvidia developed over years

- Financial resources enabling large upfront commitments

- Scale justifying priority treatment

These providers will likely rent Blackwell computational capacity through their cloud services, potentially accelerating the trend toward AI-as-a-service rather than on-premises deployments for many organizations.

This dynamic mirrors developments in other regions such as the Middle East, where Saudi Arabia and other Gulf Cooperation Council (GCC) states are driving substantial AI infrastructure development in 2025 through strategic cloud and technology partnerships.

Production Timeline and Future Outlook

Near-Term Production Expectations (Q4 2025 – Q2 2026):

Nvidia CFO Colette Kress said in August 2024 that the company expected to ship “several billion dollars” in Blackwell revenue in the fourth quarter of its fiscal 2025, which ran from roughly November 2024 through January 2025. Given the demand levels Huang described in November 2025, supply constraints are likely to persist through at least mid-2026.
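Nvidia's fiscal year ends in late January, so its fiscal-year label runs roughly one year ahead of the calendar. The helper below is a sketch that maps a fiscal quarter to approximate calendar months under that assumption; actual quarter boundaries fall on Sundays and shift by a few days, which this rounding ignores.

```python
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]

def fiscal_quarter_to_calendar(fiscal_year: int, quarter: int) -> str:
    """Approximate calendar span of an Nvidia fiscal quarter.

    Assumes the fiscal year ends in late January of `fiscal_year`, so it
    starts in February of the previous calendar year. Boundaries are
    rounded to whole months.
    """
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1-4")
    # Month offsets counted from January of the prior calendar year (Feb = 1).
    start_offset = 1 + 3 * (quarter - 1)
    end_offset = start_offset + 2
    start_year = fiscal_year - 1 + start_offset // 12
    end_year = fiscal_year - 1 + end_offset // 12
    return (f"{MONTHS[start_offset % 12]} {start_year} to "
            f"{MONTHS[end_offset % 12]} {end_year}")

print(fiscal_quarter_to_calendar(2025, 4))
```

For fiscal Q4 2025 this prints "Nov 2024 to Jan 2025", which is why a "Q4" Blackwell shipment target stated in mid-2024 refers mostly to the 2024 holiday quarter.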

Manufacturing Scaling Challenges:

Several factors limit how quickly Blackwell production can scale:

TSMC Capacity Constraints: Advanced-node manufacturing capacity cannot expand overnight. Building new fabrication facilities (fabs) takes two to three years and multibillion-dollar investments.

Memory Supply: HBM3E production involves complex manufacturing processes with limited global capacity. Memory manufacturers are expanding production, but scaling takes 12-18 months from commitment to output.

Yield Optimization: As with any new chip design, manufacturing yields improve over time as engineers optimize processes. Initial production may have lower yields than mature manufacturing.

System Integration: Even with adequate chip supply, assembling complete systems with proper cooling, power delivery, and networking requires sophisticated integration capabilities.
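Why yields matter so much for very large dies can be illustrated with the textbook first-order Poisson yield model, in which the fraction of defect-free dies is exp(-area × defect density). This is a simplification, not TSMC's methodology, and the die area and defect densities below are hypothetical values chosen only to show the trend as a process matures.

```python
import math

def poisson_die_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """First-order Poisson yield model: fraction of dies with zero killer defects."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)

DIE_AREA_MM2 = 800.0  # hypothetical die near the reticle limit
for d0 in (0.20, 0.10, 0.05):  # hypothetical defect densities as the process matures
    print(f"D0={d0:.2f}/cm^2 -> {poisson_die_yield(DIE_AREA_MM2, d0):.0%} good dies")
```

Under these assumptions, halving the defect density from 0.10 to 0.05 per square centimeter lifts the share of good dies from about 45% to about 67%. The same defect density costs a large die far more than a small one, which is why yield optimization weighs heavily on reticle-sized designs.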

Competitive Response:

While Nvidia dominates current AI chip markets, competitors are not conceding the field:

AMD continues to push its Instinct AI accelerators, including the MI300 series and the newer MI350 series, which offer competitive performance for certain workloads and potential cost advantages. AMD benefits from its relationships with cloud providers seeking to reduce dependence on a single supplier.

Intel offers its Gaudi 3 AI accelerator and is developing future data center AI products, though it faces catch-up challenges given Nvidia’s lead and its own recent execution struggles.

Custom Silicon: Major cloud providers including Google (TPUs), Amazon (Trainium/Inferentia), and Microsoft (Maia) are developing custom AI chips for internal workloads, potentially reducing Nvidia dependence over time.

Startups: Numerous well-funded startups (Cerebras, Groq, SambaNova, others) are pursuing differentiated architectures for specific AI workloads.

However, Nvidia’s comprehensive ecosystem—combining hardware leadership, mature software platforms, and strategic manufacturing partnerships—creates substantial competitive moats that competitors will require years to challenge effectively.

Sustainability Questions:

Some observers question whether current AI infrastructure investment levels are sustainable or represent a temporary buildout phase that will moderate once initial capacity targets are met.

Arguments suggesting sustainable demand:

- AI applications continue expanding into new use cases

- Model sizes and computational requirements keep growing

- Regular hardware refresh cycles as new architectures deliver efficiency improvements

- Competition driving continued infrastructure expansion

Arguments suggesting potential moderation:

- Initial infrastructure buildout may exceed near-term demand

- Efficiency improvements might reduce chip requirements per workload

- Economic constraints could slow investment if recession emerges

- Alternative architectures or optimization techniques might reduce cutting-edge chip demand

Most industry analysts currently lean toward sustained demand, but acknowledge uncertainty about long-term trajectory.

Strategic Implications for Technology Leaders

For organizations making technology infrastructure decisions, the Blackwell demand dynamics create several strategic considerations:

Procurement Strategy:

Organizations with AI Infrastructure Requirements:

- Evaluate infrastructure roadmaps and consider accelerating procurement timelines to avoid extended lead times

- Explore cloud-first strategies if on-premises deployment timelines are prohibitively long

- Consider strategic partnerships with organizations that have secured allocations

- Assess whether current-generation hardware (H100/H200) meets near-term needs at more favorable availability

Planning Horizon Expansion:

The extended lead times for cutting-edge AI infrastructure require organizations to plan capacity requirements 12-18 months ahead rather than the 3-6 month horizons typical for most IT procurement. This demands better forecasting of AI workload growth and earlier commitment to infrastructure spending.
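Working backward from a deployment date shows how much earlier commitments must be made under longer lead times. This is a minimal sketch: the go-live date is hypothetical, and a month is approximated as 30 days to keep the example to the standard library.

```python
from datetime import date, timedelta

def latest_order_date(needed_by: date, lead_time_months: int) -> date:
    """Latest order date for hardware needed by `needed_by` (a month approximated as 30 days)."""
    return needed_by - timedelta(days=30 * lead_time_months)

deployment = date(2026, 12, 1)  # hypothetical go-live date
for months in (3, 6, 12, 18):
    print(f"{months:>2}-month lead time: order by {latest_order_date(deployment, months)}")
```

With an 18-month lead time, the order has to be placed roughly a year and a half before go-live, well before most organizations would finalize a typical 3 to 6 month IT purchase.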

Vendor Diversification Considerations:

While Nvidia currently dominates high-end AI training and inference, organizations may want to:

- Develop competencies with alternative platforms to reduce single-vendor dependence

- Monitor competitive offerings that might provide acceptable performance at better availability

- Maintain flexibility to shift workloads across different hardware platforms

Geopolitical Risk Assessment:

Organizations operating globally should understand how export restrictions affect their AI deployment strategies:

- Maintaining separate infrastructure for different geographic regions

- Ensuring compliance with export control requirements

- Planning for potential future restrictions or requirement changes

- Considering how geopolitical tensions might impact supply chains

Energy and Facility Planning:

Blackwell’s substantial power requirements (up to approximately 1,200 watts per Blackwell GPU, versus 700 watts for the H100) create facility planning challenges:

- Data centers need adequate electrical capacity and cooling infrastructure

- Geographic location decisions should consider energy costs and availability

- Sustainability commitments must account for AI infrastructure energy consumption
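A back-of-the-envelope energy estimate makes these facility considerations concrete. The sketch uses the per-GPU power figures cited in this article (up to about 1,200 watts for Blackwell, 700 watts for H100); the fleet size, utilization, facility overhead (PUE, power usage effectiveness), and electricity price are hypothetical inputs.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours

def annual_energy_mwh(watts_per_gpu: float, gpus: int,
                      utilization: float = 1.0, pue: float = 1.0) -> float:
    """Yearly energy in MWh; pue=1.0 counts GPU power only, higher values add facility overhead."""
    return watts_per_gpu * gpus * utilization * pue * HOURS_PER_YEAR / 1e6

FLEET = 1_000          # hypothetical number of GPUs
USD_PER_MWH = 80.0     # hypothetical electricity price
for name, watts in [("Blackwell", 1200), ("H100", 700)]:
    mwh = annual_energy_mwh(watts, FLEET)
    print(f"{name}: {mwh:,.0f} MWh/year, about ${mwh * USD_PER_MWH:,.0f} in electricity")
```

At full load and no facility overhead, 1,000 Blackwell GPUs consume about 10,512 MWh a year against 6,132 MWh for the same number of H100s; cooling overhead (a PUE above 1.0) raises both figures proportionally.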

The AI infrastructure boom is driving significant demand for electricity, specialized facilities, and technical talent. Regions with favorable energy costs, regulatory environments, and talent pools are becoming preferred locations for large-scale AI data centers.

Conclusion: AI Infrastructure Becomes Mission-Critical

Jensen Huang’s November 8 remarks at TSMC’s sports day, while routine on the surface, reflect a fundamental shift in how the global economy approaches computational resources. The “very strong demand” for Blackwell chips represents more than another product cycle: it signals the recognition that artificial intelligence has transitioned from experimental technology to essential infrastructure.

Key Takeaways:

Demand Fundamentals Are Strong: Unlike speculative technology bubbles, Blackwell demand is driven by demonstrable business value, competitive necessity, and operational deployments generating measurable returns.

Supply Will Remain Constrained: Despite manufacturing scaling efforts, the combination of strong demand, production complexity, and long manufacturing lead times means supply constraints will persist well into 2026.

Geopolitical Factors Matter: Export restrictions, manufacturing concentration in Taiwan, and strategic competition between technology powers are shaping not just chip availability but the architecture of global AI infrastructure.

Strategic Planning Required: Organizations cannot treat AI infrastructure procurement casually. Extended lead times, limited availability, and substantial costs require strategic planning with 12-18 month horizons.

Ecosystem Effects Extend Broadly: The Blackwell phenomenon affects not just Nvidia and TSMC, but memory manufacturers, cloud providers, data center operators, energy infrastructure, and ultimately every industry deploying AI capabilities.

For investors, technologists, and business leaders, the message is unambiguous: the AI infrastructure race is accelerating, not slowing down. Organizations securing access to cutting-edge computational resources will have significant advantages in developing and deploying next-generation AI applications.

As we progress through 2025 and into 2026, the Nvidia-TSMC partnership celebrated in Taiwan this weekend will remain one of the most strategically important relationships in global technology. The fundamental question isn’t whether demand for advanced AI chips will remain strong—it’s whether the semiconductor industry can scale production fast enough to meet it.

The evidence from Huang’s Taiwan visit suggests we’re witnessing the foundation of a new technological era, where AI computational capacity becomes as strategically important as oil reserves, semiconductor manufacturing as critical as steel production, and access to advanced chips as economically significant as access to capital markets.

Whether this buildout proves to be a sustainable transformation or an excessive investment cycle will become clear over the next several years. But for now, the momentum is unmistakable, the demand is real, and the strategic implications are profound.

DISCLAIMERS

Investment Disclaimer: This article is for informational purposes only and does not constitute investment advice, financial guidance, or recommendations to buy or sell securities. Nvidia stock prices, chip pricing, specifications, and availability are subject to change. Production estimates and revenue projections discussed in this article represent industry analysis and public statements but have not been independently verified by Sezarr Overseas News. Readers should conduct their own research and consult with qualified financial advisors before making investment decisions.

Technical Specifications Disclaimer: Chip specifications cited in this article reflect manufacturer claims based on Nvidia’s official documentation and technical disclosures. Actual performance may vary based on configuration, workload characteristics, software optimization, and deployment environment. Comparative performance figures (e.g., “5x faster than H100”) reflect specific benchmark scenarios using particular precision formats and may not represent performance across all use cases.

Pricing Disclaimer: Chip pricing information is based on Jensen Huang’s March 2024 public interview statements and represents approximate ranges rather than published list prices. Actual customer costs vary significantly based on volume, configuration, contractual relationships, and market conditions. Nvidia typically sells complete systems rather than individual chips, making direct price comparisons complex.

Forward-Looking Statements: This article contains forward-looking statements about production timelines, demand levels, market developments, and industry trends. These statements involve uncertainties and risks, and actual outcomes may differ materially from projections discussed. Factors affecting outcomes include technological developments, competitive dynamics, economic conditions, regulatory changes, and geopolitical events.

Attribution and Sources: Information compiled from verified Reuters reports (November 8, 2025), CNBC interviews with Jensen Huang (March and October 2024), Nvidia official press releases and technical documentation, TSMC public statements, and semiconductor industry publications. Stock prices and market data current as of November 15, 2025. Where specific data sources are cited in text, readers are encouraged to consult original sources for complete context.

Geopolitical Information: References to export controls, international relations, and regulatory policies reflect circumstances as of November 2025 and may change. Organizations should consult legal counsel regarding compliance with export controls and international trade regulations.

Frequently Asked Questions

Q1: What are Nvidia Blackwell chips and why are they significant?

Nvidia Blackwell chips are next-generation AI processors featuring a revolutionary dual-die design with 208 billion transistors total. They deliver up to 20 petaflops of AI performance using FP4 precision, representing approximately 5x improvement over the previous H100 Hopper generation for certain workloads. Blackwell chips are significant because they provide the computational foundation for training and running the most advanced AI models, including large language models, generative AI applications, and complex machine learning systems that are becoming essential across industries.

Q2: How much do Nvidia Blackwell chips cost?

According to Jensen Huang’s March 2024 CNBC interview, Nvidia Blackwell chips are priced at approximately $30,000 to $40,000 per unit. However, these figures represent rough approximations rather than published list prices. Nvidia typically sells complete systems, such as DGX B200 servers containing eight Blackwell GPUs or DGX SuperPOD configurations linking 576 Blackwell GPUs, rather than individual chips. Complete system costs can reach into millions of dollars. Actual customer pricing varies based on configuration, volume commitments, and contractual relationships with Nvidia.

Q3: When will Nvidia Blackwell chips be widely available?

Nvidia began shipping Blackwell systems to select customers in late 2024, with availability expanding throughout 2025. However, Jensen Huang’s November 2025 statements about “very strong demand” suggest that supply will remain constrained well into 2026. Organizations that haven’t already secured allocations may face lead times of a year or more for some configurations. Cloud providers with strategic Nvidia relationships are likely to receive priority allocations, making cloud-based access to Blackwell computing potentially more accessible than direct hardware purchases for many organizations.

Q4: Who are the main customers for Nvidia Blackwell chips?

Major Blackwell customers include cloud infrastructure providers like Microsoft Azure, Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure, as well as technology companies including Meta, OpenAI, Tesla, and xAI. These organizations are deploying Blackwell chips in large-scale data centers to train and operate advanced AI models. Enterprise organizations primarily access Blackwell computational capacity through cloud services rather than direct hardware purchases, though some very large enterprises with substantial AI operations may procure dedicated systems.

Q5: What did Jensen Huang specifically say at the TSMC event on November 8, 2025?

At TSMC’s annual sports day in Hsinchu, Taiwan, Jensen Huang told reporters that Nvidia is experiencing “very strong demand” for Blackwell chips. He emphasized that “Nvidia builds the GPU, but we also build the CPU, the networking, the switches—there are a lot of chips associated with Blackwell,” highlighting the systems-level complexity of Blackwell deployments. Huang credited TSMC’s support, stating “TSMC is doing a very good job supporting us on wafers” and noting that “Nvidia’s success would not be possible without TSMC.” TSMC CEO C.C. Wei confirmed that Nvidia had requested additional wafer capacity, though he declined to specify quantities.

Q6: Why can’t Nvidia sell Blackwell chips to China?

Nvidia cannot sell Blackwell chips to China because of U.S. export controls on advanced AI processors, which were first imposed in 2022 and have been maintained by the Trump administration. The restrictions cite national security concerns about potential military applications and AI industry development that could challenge U.S. technological leadership. On November 7, 2025 (the day before the TSMC event), Jensen Huang confirmed there are “no active discussions” about selling Blackwell chips to China, reinforcing Nvidia’s compliance with these export restrictions.

Q7: What role does TSMC play in Blackwell production?

Taiwan Semiconductor Manufacturing Company (TSMC) serves as the exclusive manufacturer for Nvidia’s Blackwell chips, using its advanced 4NP process node technology. TSMC’s Taiwanese fabrication facilities currently represent the only global source for these cutting-edge processors. The Nvidia-TSMC partnership is strategically critical for both companies, with Nvidia depending on TSMC’s manufacturing capabilities and TSMC benefiting from substantial revenue from Nvidia’s orders. TSMC is building advanced facilities in Arizona for future geographic diversification, though these won’t reach meaningful production volumes until the late 2020s.

Q8: How does Blackwell compare to previous generation H100 chips?

Blackwell represents substantial improvements over H100 Hopper architecture chips across multiple dimensions. Blackwell contains 208 billion transistors versus H100’s 80 billion (a 2.6x increase). For AI performance, Blackwell delivers up to 20 petaflops using FP4 precision compared with H100’s approximately 4 petaflops using FP8 precision, a headline 5x gain, though the figures use different precision formats and are not directly comparable. Blackwell includes 192GB of HBM3E memory versus H100’s 80GB of HBM3, providing greater capacity for large model training. However, Blackwell also consumes more power (up to approximately 1,200 watts per GPU versus 700 watts for H100), requiring enhanced cooling infrastructure.

Q9: What factors are driving such strong demand for Blackwell chips?

Multiple factors are converging to create exceptional Blackwell demand. First, generative AI has transitioned from experimental pilots to production deployments requiring substantial computational infrastructure. Second, major technology companies view AI capacity as a strategic competitive differentiator, driving infrastructure buildouts that secure long-term positioning. Third, lessons from previous chip shortages encourage strategic stockpiling to avoid supply constraints. Fourth, Blackwell’s performance improvements create favorable economics for large-scale AI workloads despite high per-chip costs. Fifth, cloud providers need cutting-edge hardware to offer competitive AI services to enterprise customers.

Q10: Should organizations wait for Blackwell or purchase current-generation hardware?

This depends on specific circumstances and requirements. Organizations with immediate AI infrastructure needs and available H100/H200 supply should carefully evaluate whether current-generation hardware meets requirements, given Blackwell’s extended lead times and constrained availability. For many workloads, H100/H200 chips provide excellent performance and are available sooner. However, organizations with longer planning horizons, extremely large-scale requirements, or specific needs for Blackwell’s enhanced capabilities might justify waiting despite delays. Many organizations pursue hybrid strategies: deploying available current-generation hardware for near-term needs while securing Blackwell allocations for future expansion. Cloud-based access provides another option, enabling organizations to access Blackwell computing through major cloud providers rather than direct hardware procurement.