Executive Summary

Artificial intelligence is fundamentally reshaping global finance in 2025-2026. Major regulatory agencies including the U.S. SEC, U.K. FCA, Singapore MAS, and EU ESMA are implementing oversight frameworks emphasizing transparency, explainability, and ethical governance for AI-driven financial systems.

Key Developments:

- SEC: Predictive Data Analytics rule proposal (July 2023) withdrawn in June 2025; AI remains an examination priority for 2025

- FCA: Principles-based approach using existing frameworks; AI Lab launched for testing

- MAS: FEAT principles (voluntary); AI Risk Management guidelines proposed November 2025

- ESMA: Guidance issued May 2024 requiring MiFID II compliance for AI systems

- Market Impact: Algorithmic trading accounts for 60-73% of U.S. equity volume; McKinsey estimates AI could add $200-340 billion annually to banking sector value

Financial institutions are deploying RegTech automation for compliance, anti-money laundering (AML), transaction monitoring, risk modeling, and cryptocurrency surveillance. Ethical trading AI frameworks aim to reduce market manipulation while maintaining fairness and transparency. This comprehensive analysis explores the evolving regulatory landscape, institutional adoption patterns, emerging risks, and the transformation of global financial markets under intelligent automation.

IMPORTANT DISCLAIMER: This article discusses proposed regulations, industry projections, and evolving frameworks as of November 2025. Regulatory requirements are subject to change. This content is for informational purposes only and does not constitute financial, legal, investment, or professional advice. Organizations should consult qualified legal and compliance professionals before implementing AI systems or making strategic decisions based on this analysis.

1. RegTech 3.0 Evolution — AI as the Compliance Partner

Regulatory Technology (RegTech) has evolved dramatically from basic rule-checking systems. As we move through 2025 into 2026, financial institutions worldwide are deploying GenAI-powered RegTech platforms with sophisticated capabilities:

Core RegTech AI Capabilities

✔ Real-Time Compliance Monitoring: AI algorithms continuously cross-check millions of transactions against regulatory requirements as they occur, flagging potential violations within milliseconds.

✔ Automated Regulatory Reporting: Institutions can generate near-instant compliance reports to regulators including the SEC, FCA, MAS, and FINRA, reducing manual reporting burdens by up to 70%.

✔ AI-Powered AML & Fraud Detection: Machine learning models identify suspicious transaction patterns with significantly improved accuracy compared to traditional rule-based systems, reducing false positives that plague legacy systems.

✔ KYC Onboarding Automation: Document verification, identity authentication, and risk classification processes that once took days now complete within seconds using computer vision and natural language processing.

✔ Predictive Risk Analysis: Advanced models identify hidden exposures, liquidity vulnerabilities, and portfolio risks before they materialize into actual threats.
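To make the monitoring idea concrete, here is a minimal sketch of a transaction screen that combines two fixed rules with a simple statistical check against an account's own history. Everything in it is an assumption made for illustration: the `Transaction` fields, the threshold values, the placeholder country codes, and the z-score test are hypothetical and are not taken from any regulator's rulebook or vendor product.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    account: str
    amount: float
    country: str


# Hypothetical policy values, chosen only for illustration.
REPORTING_THRESHOLD = 10_000.0
HIGH_RISK_COUNTRIES = {"XX", "YY"}  # placeholder codes, not a real list
Z_SCORE_LIMIT = 3.0
MIN_HISTORY = 5


def screen(tx, history):
    """Return the reasons (possibly none) for flagging a transaction.

    `history` is a list of the account's previous transaction amounts.
    """
    reasons = []
    # Rule 1: fixed amount threshold.
    if tx.amount >= REPORTING_THRESHOLD:
        reasons.append("amount_at_or_above_threshold")
    # Rule 2: jurisdiction list.
    if tx.country in HIGH_RISK_COUNTRIES:
        reasons.append("high_risk_country")
    # Statistical check: is the amount unusually large for this account?
    if len(history) >= MIN_HISTORY:
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and (tx.amount - mu) / sigma > Z_SCORE_LIMIT:
            reasons.append("unusual_amount_for_account")
    return reasons
```

A production system would likely replace the z-score with a trained model and stream transactions from a message queue, but the shape of the output (a list of named reasons per transaction) is what makes each flag reviewable later.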

The Explainability Imperative

As regulators worldwide increasingly expect “explainable AI” (XAI), financial institutions must justify model decisions. This requirement is driving significant investment in AI transparency tools and documentation systems that can trace decision pathways and provide audit-ready explanations.
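One simple way to produce a traceable explanation is to use a model whose score decomposes into per-feature contributions. The sketch below assumes a small logistic scoring model; the feature names, weights, and bias are hypothetical, and real deployments with non-linear models would need attribution methods beyond this.

```python
import math

# Hypothetical weights for an illustrative logistic risk model.
WEIGHTS = {"amount_zscore": 0.8, "new_counterparty": 1.2, "night_time": 0.4}
BIAS = -2.0


def explain(features):
    """Score one case and report how much each feature moved the score."""
    # Each contribution is weight * feature value, so the logit is an exact sum.
    contributions = {
        name: weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()
    }
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Rank features by absolute impact so a reviewer sees the main drivers first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {
        "probability": probability,
        "logit": logit,
        "bias": BIAS,
        "contributions": ranked,
    }
```

Storing the returned dictionary alongside the decision gives an auditor the score, the baseline, and the ranked drivers in one record.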


2. Ethical Trading AI — Reducing Market Manipulation

Algorithmic trading dominates modern financial markets. According to recent market research, approximately 60-73% of U.S. equity trading volume and similar proportions in European and Asian markets are now executed through algorithmic systems.

This dominance has prompted regulators to focus intensively on algorithmic behavior patterns and market integrity.

Key Monitoring Areas

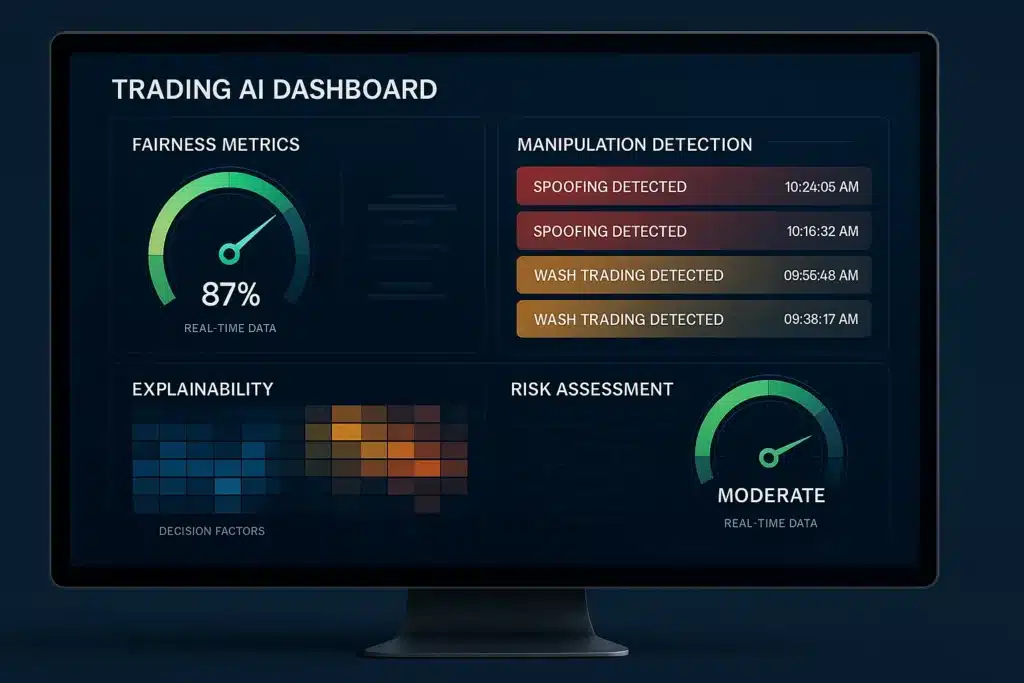

✔ AI Manipulation Detection: Regulatory systems now monitor for:

- Spoofing: Placing fake orders to manipulate prices

- Wash trading: Self-dealing to create artificial volume

- Quote stuffing: Flooding markets with orders to slow competitors

- Insider-pattern behavioral analytics: Detecting unusual information advantages

✔ Fairness & Bias Control: Ethical trading frameworks ensure AI models do not systematically disadvantage retail investors or create conditions that trigger flash crashes.

✔ Explainable Trade Execution: Modern compliance frameworks require that traders and auditors can trace why an AI system bought, sold, or held a position, moving away from “black box” decision-making.

✔ High-Frequency Trading (HFT) Guardrails: Advanced surveillance systems detect abnormal trading behavior within microseconds, implementing circuit breakers when necessary.
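Two of the manipulation patterns listed above lend themselves to simple first-pass screens. The sketch below flags trades where the same beneficial owner sits on both sides (a wash trading indicator) and traders whose cancellation ratio is extreme (a crude spoofing indicator). The record layouts and the limits are hypothetical; real surveillance relies on far richer order-book data, and a flag here is a candidate for review, not a finding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    trade_id: str
    symbol: str
    buyer_owner: str   # beneficial owner behind the buy order
    seller_owner: str  # beneficial owner behind the sell order
    quantity: int


@dataclass(frozen=True)
class OrderStats:
    trader: str
    orders_placed: int
    orders_cancelled: int


# Hypothetical limits, for illustration only.
CANCEL_RATIO_LIMIT = 0.95
MIN_ORDERS = 100


def wash_trade_candidates(trades):
    """Trades where one beneficial owner is both buyer and seller."""
    return [t for t in trades if t.buyer_owner == t.seller_owner]


def spoofing_candidates(stats):
    """Traders who cancel nearly all of a meaningful number of orders."""
    flagged = []
    for s in stats:
        if s.orders_placed >= MIN_ORDERS:
            ratio = s.orders_cancelled / s.orders_placed
            if ratio >= CANCEL_RATIO_LIMIT:
                flagged.append(s.trader)
    return flagged
```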

Regulatory Expectations

Major asset managers and hedge funds now face heightened scrutiny of their algorithmic trading systems. The FCA and ESMA have issued guidance requiring comprehensive audit trails for AI-generated trading orders, though these remain within existing regulatory frameworks rather than representing entirely new rule categories.
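As an illustration of what a tamper-evident audit trail for AI-generated orders could look like, the sketch below chains each log entry to the previous one with a SHA-256 hash. This is a generic technique, not a format prescribed by the FCA or ESMA, and the record fields in the usage example are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, record):
    # Serialize with sorted keys so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(log, record):
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"record": record, "prev_hash": prev_hash, "hash": _digest(prev_hash, record)}
    )


def verify(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

For example, appending `{"order_id": "o1", "model_version": "v3", "side": "buy", "quantity": 100}` and later editing the stored quantity makes `verify` return `False`, which is the property an auditor would rely on.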


3. Global Regulatory Landscape — Coordinated Oversight Evolution

A coordinated global movement is shaping AI governance in finance. Here’s the current regulatory status by jurisdiction:

🇺🇸 United States — SEC & CFTC

Current Status (November 2025):

The SEC has NOT enacted final AI-specific regulations but has made AI oversight a 2025 examination priority:

Proposed Rules:

- Predictive Data Analytics (PDA): Proposed July 2023; focuses on conflicts of interest from AI use in investment advice

- Status: Formally withdrawn by the SEC in June 2025; no final AI-specific rules adopted as of November 2025

Enforcement Approach:

- Using existing securities laws to address “AI washing” (false claims about AI capabilities)

- 2024-2025: Multiple enforcement actions against firms misrepresenting AI use

- Examinations focusing on: AI disclosure accuracy, governance frameworks, compliance policies

Key Requirements (Existing Framework):

- Investment advisers must maintain policies preventing misuse of material non-public information

- Broker-dealers must comply with Regulation Best Interest

- Senior managers remain accountable under existing governance rules

Trump Administration Impact: The January 2025 executive order on AI prioritizes American AI leadership and innovation, directing agencies to review rules that may hinder competitiveness—creating regulatory uncertainty about future approaches.

Official sources: SEC Division of Examinations 2025 Priorities; SEC enforcement releases 2024-2025

🇬🇧 United Kingdom — FCA Principles-Based Approach

Current Status (November 2025):

The FCA has explicitly stated it will NOT create new AI-specific regulations, instead applying existing principles-based frameworks:

Key Positions:

- No New AI Rules: FCA confirmed in September 2025 it relies on existing regulatory frameworks

- Principles-Based Regulation: Firms must apply current rules to AI contexts

- AI Lab Launched: October 2024 facility for testing AI innovations safely

Applicable Framework:

- Senior Managers & Certification Regime (SM&CR): Senior managers accountable for AI governance

- Consumer Duty: Ensures AI-driven services deliver good outcomes

- Operational Resilience: Firms must manage AI system risks

- Treating Customers Fairly: Core principle applies to AI decisions

Innovation Support:

- Supercharged Sandbox (partnership with NVIDIA)

- AI Live Testing environments

- AI Sprint collaborative sessions with industry

Surveillance Enhancement: The FCA uses AI for its own market surveillance, scanning approximately 100,000 websites daily for fraud detection.

Official sources: FCA AI Update (September 2025); FCA strategy 2025-2030

🇪🇺 European Union — ESMA & AI Act

Current Status (November 2025):

The EU AI Act entered into force in August 2024, with its obligations phasing in over the following years, alongside sector-specific guidance:

ESMA Guidance (May 2024):

- Firms using AI must comply with existing MiFID II requirements

- Focus areas: organizational requirements, conduct of business, client best interests

- Emphasis on transparency, explainability, robust governance

- No new rules specific to AI trading—existing frameworks apply

EU AI Act Implementation:

- High-Risk Category: AI used in credit scoring, employment decisions

- Transparency Requirements: Users must know when interacting with AI

- Prohibited Uses: Certain manipulative AI applications banned (February 2025)

- Phased Implementation: Various requirements taking effect through 2025-2027

Trading Algorithm Requirements: AI-enabled trading algorithms must adhere to existing MiFID II/MiFIR framework and market abuse regulations—not separate AI-specific rules.

Official sources: ESMA Public Statement on AI (May 2024); EU AI Act Official Journal

🇸🇬 Singapore — MAS FEAT Principles

Current Status (November 2025):

Singapore’s Monetary Authority has established comprehensive but largely voluntary frameworks:

FEAT Principles (2018 – Updated 2025):

- Fairness: Decisions must not systematically disadvantage groups without justification

- Ethics: Considerations of broader societal impact

- Accountability: Clear responsibility for AI decisions (internal and external)

- Transparency: Explainability of AI-driven decisions

Status: VOLUNTARY adoption by financial institutions

New Development:

- AI Risk Management Guidelines: Proposed November 13, 2025

- Status: Under consultation; not yet mandatory

- Scope: All financial institutions when implemented

Industry Initiatives:

- Veritas Framework: Multi-phase industry project to assess AI against FEAT principles

- AIDA Programme: Industry platforms for AI adoption (NovA!, TradeMaster)

- AI Verify: Testing toolkit for AI governance assurance

Funding Support: MAS offers co-funding (up to S$500,000, ~30% co-funding rate) for qualifying AIDA projects.

Official sources: MAS FEAT Principles document; MAS consultation paper P017-2025

🌍 Middle East — GCC AI Governance

United Arab Emirates & Saudi Arabia: Both nations are implementing advanced AI governance frameworks for fintech, particularly focusing on:

- Anti-money laundering systems

- Fraud detection capabilities

- Cross-border payment monitoring

The UAE and Saudi Arabia are positioning themselves as regional FinTech leaders with significant AI investment, though specific regulatory frameworks are still emerging.


4. AI in Crypto & Blockchain Surveillance

Cryptocurrency markets present unique challenges: high volatility, decentralization, and historical exploitation for illicit activity. AI is transforming oversight capabilities:

Key Applications

Transaction Tracing: AI systems analyze blockchain data to follow complex transaction paths across multiple wallets and exchanges, identifying patterns invisible to traditional analysis.

Wallet Risk Rating: Machine learning models assign risk scores to cryptocurrency addresses based on transaction history, known connections, and behavioral patterns.

NFT Fraud Detection: Computer vision and pattern recognition identify stolen artwork, fake collections, and wash trading in NFT marketplaces.

DeFi Protocol Audits: AI systems continuously monitor decentralized finance protocols for vulnerabilities, unusual activity, and potential exploits.

Rug-Pull Predictive Alerts: Advanced models analyze token launch patterns, liquidity movements, and social media signals to warn of potential scams before they execute.

Smart Contract Vulnerability Scanning: Automated code analysis identifies security weaknesses in smart contracts before deployment or exploitation.
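Wallet risk rating is often described in terms of proximity to known-bad addresses. The sketch below assumes a deliberately simple scheme: treat transfers as an undirected graph, measure each address's hop distance from a flagged set with breadth-first search, and let the score decay with distance. The decay factor and the scoring rule are hypothetical and are not the method of any named provider.

```python
from collections import deque


def hop_distances(transfers, flagged):
    """Breadth-first search from flagged addresses over the transfer graph.

    `transfers` is an iterable of (sender, receiver) pairs.
    Returns {address: hops to the nearest flagged address}.
    """
    graph = {}
    for sender, receiver in transfers:
        graph.setdefault(sender, set()).add(receiver)
        graph.setdefault(receiver, set()).add(sender)

    distances = {address: 0 for address in flagged}
    queue = deque(flagged)
    while queue:
        current = queue.popleft()
        for neighbour in graph.get(current, ()):
            if neighbour not in distances:
                distances[neighbour] = distances[current] + 1
                queue.append(neighbour)
    return distances


def risk_score(address, distances, decay=0.5):
    """Score in [0, 1]: 1.0 for flagged addresses, halving with each hop."""
    if address not in distances:
        return 0.0  # no path to any flagged address
    return decay ** distances[address]
```

A real rating model would also weigh transfer amounts, timing, and exchange attribution; hop distance alone tends to over-flag addresses that merely share a busy intermediary.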

Leading Providers

Firms including Chainalysis, Elliptic, and TRM Labs deploy AI to scan trillions of blockchain data points daily, enabling regulators and institutions to identify threats in near real-time.


5. Banking & Asset Management Transformation — Institutional AI Adoption

Financial institutions are rapidly integrating AI across operations to enhance efficiency and reduce costs:

Primary Implementation Areas

✔ AI Loan Underwriting: Machine learning models process credit applications faster while reducing bias compared to traditional scoring methods. Models analyze thousands of data points to assess creditworthiness more accurately.

✔ Treasury Automation: Predictive AI forecasts liquidity needs and cash flows with significantly improved accuracy, enabling better capital allocation decisions.

✔ AI-Driven Portfolio Management: Hybrid human-AI investment teams are becoming standard at major institutions. AI handles data analysis and pattern recognition while humans provide strategic oversight and ethical judgment.

✔ Risk Scoring & Stress Testing: AI models simulate thousands of macroeconomic scenarios in seconds, identifying portfolio vulnerabilities and stress points far faster than traditional methods.

✔ Fraud Prevention: Real-time transaction monitoring using AI detects unusual patterns indicative of fraud, significantly reducing losses while minimizing false positives that inconvenience customers.
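The scenario simulation mentioned under stress testing can be sketched as a small Monte Carlo loop. The example below assumes a portfolio with linear exposures to a few risk factors and independent, normally distributed shocks; both assumptions are simplifications made for illustration, and the factor names and volatilities in the usage note are hypothetical.

```python
import random


def simulate_losses(exposures, volatilities, n_scenarios=10_000, seed=42):
    """Simulate portfolio losses under random factor shocks.

    `exposures` maps factor name -> currency exposure.
    `volatilities` maps factor name -> standard deviation of the shock.
    Shocks are drawn independently, which ignores factor correlation.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    losses = []
    for _ in range(n_scenarios):
        pnl = sum(
            exposure * rng.gauss(0.0, volatilities[factor])
            for factor, exposure in exposures.items()
        )
        losses.append(-pnl)  # a loss is negative profit
    return losses


def value_at_risk(losses, level=0.99):
    """Empirical quantile of the loss distribution at the given level."""
    ordered = sorted(losses)
    index = min(len(ordered) - 1, int(level * len(ordered)))
    return ordered[index]
```

Calling `simulate_losses({"rates": 1_000_000, "equity": 2_000_000}, {"rates": 0.01, "equity": 0.02})` and passing the result to `value_at_risk` gives a loss level exceeded in roughly 1% of the simulated scenarios.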

Economic Impact Projections

According to McKinsey Global Institute analysis (June 2023), AI and generative AI could add $200-340 billion in annual value to the global banking sector, primarily through productivity improvements. This represents approximately 2.8-4.7% of total industry revenues and 9-15% of operating profits.

Key clarification: This is value creation through productivity gains, not direct cost savings. The benefits compound through improved efficiency, better decision-making, enhanced customer experience, and risk reduction.
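As a quick consistency check on the figures above, the dollar range and the percentage ranges can be combined to back out the industry revenue and operating profit they imply. This sketch assumes the low end of each range pairs with the low end of the others (and likewise for the high ends), which the cited figures suggest but do not state.

```python
# Figures quoted in the text above.
value_low, value_high = 200e9, 340e9                 # annual value added, USD
revenue_share_low, revenue_share_high = 0.028, 0.047
profit_share_low, profit_share_high = 0.09, 0.15

# Implied base = value added / share of base (assumes low pairs with low).
implied_revenue = (value_low / revenue_share_low, value_high / revenue_share_high)
implied_profit = (value_low / profit_share_low, value_high / profit_share_high)

for label, (low, high) in [
    ("Implied industry revenue", implied_revenue),
    ("Implied operating profit", implied_profit),
]:
    print(f"{label}: ${low / 1e12:.2f}T to ${high / 1e12:.2f}T")
```

Both ends of each range land close together: roughly $7.1 to $7.2 trillion in industry revenue and $2.2 to $2.3 trillion in operating profit, so the quoted percentages are internally consistent under that pairing assumption.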

Major Institution Examples

- JPMorgan Chase: $18 billion annual technology budget with significant AI investment; proprietary LLM Suite platform

- Bank of America: $4 billion in strategic technology initiatives (2025); AI productivity lifts of 20% in development cycles

- OCBC Bank (Singapore): 50% efficiency lift from six-month GenAI chatbot trial


6. Critical Risks & Regulatory Challenges

Despite substantial progress, significant risks persist:

1. Algorithmic “Black Box” Problem

Challenge: Large neural network models can produce opaque decisions that even developers struggle to fully explain.

Response: Regulators increasingly require explainable AI (XAI) frameworks, but technical limitations remain. The balance between model performance and explainability creates ongoing tension.

2. AI Arms Race Dynamics

Challenge: Competitive pressure drives firms to deploy increasingly powerful and complex models that regulators struggle to evaluate comprehensively.

Response: Regulatory agencies are building internal AI expertise and conducting regular examinations, but resource constraints limit comprehensive oversight.

3. Systemic Risk from Correlated Models

Challenge: If numerous institutions use similar AI models trained on similar data, their correlated responses to market events could trigger synchronized sell-offs or flash crashes.

Response: Regulators are monitoring for excessive model correlation and requiring diversity in risk management approaches, though early detection remains difficult.
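One way to picture monitoring for model correlation is to compare the trading signals different firms' models emit over the same period and flag pairs that move almost in lockstep. The sketch below uses plain Pearson correlation; the firm names, the signal series, and the 0.9 limit are hypothetical, and nothing here reflects how any regulator actually measures correlation.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series has no defined correlation
    return cov / sqrt(var_x * var_y)


def crowded_pairs(signals, limit=0.9):
    """Return (firm_a, firm_b, correlation) for pairs at or above the limit.

    `signals` maps firm name -> list of model signals over the same dates.
    """
    flagged = []
    for (name_a, series_a), (name_b, series_b) in combinations(
        sorted(signals.items()), 2
    ):
        r = pearson(series_a, series_b)
        if r >= limit:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged
```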

4. Data Privacy vs. Model Performance

Challenge: More comprehensive data improves AI accuracy but raises privacy concerns and regulatory compliance complexity under GDPR, CCPA, and other frameworks.

5. Third-Party AI Vendor Concentration

Challenge: Heavy reliance on few major AI providers creates concentration risk and potential single points of failure.

Response: Operational resilience frameworks now address AI vendor risk, but alternatives remain limited for cutting-edge capabilities.

Regulatory Response Framework

Regulators worldwide emphasize:

- Strong governance structures with board-level accountability

- Frequent model audits and validation processes

- Scenario testing for extreme market conditions

- Clear escalation pathways when AI systems malfunction

- Human oversight requirements for high-stakes decisions


FAQs — AI in Finance 2025–2026

1. Are AI trading systems legal in 2026?

Yes, AI trading systems are legal in the major jurisdictions covered here, but they operate under existing financial regulations rather than AI-specific rules. In the U.S., they must comply with SEC and CFTC requirements. In the U.K., FCA principles apply. The EU requires MiFID II compliance. Singapore encourages adherence to its voluntary FEAT principles. All of these jurisdictions emphasize transparency, auditability, and preventing market manipulation.

Regulators are conducting examinations and may propose additional requirements, but as of November 2025, no jurisdiction has banned AI trading—instead focusing on responsible implementation within current frameworks.

2. Can AI completely replace financial analysts?

No. While AI significantly enhances analytical capabilities, it cannot fully replace human financial analysts. Current regulatory frameworks and industry best practices require meaningful human oversight, particularly for:

- Strategic decision-making

- Ethical considerations

- Client relationship management

- Complex judgment calls

- Regulatory compliance oversight

The emerging model is human-AI collaboration, where AI handles data processing and pattern recognition while humans provide strategic direction, ethical judgment, and client-facing expertise.

3. How does AI reduce financial crime more effectively than traditional methods?

AI systems excel at financial crime detection through:

- Pattern Recognition: Analyzing millions of transactions to identify subtle anomalies humans would miss

- Speed: Real-time monitoring versus periodic reviews

- Complexity Handling: Tracking sophisticated multi-layered schemes across jurisdictions

- Adaptive Learning: Continuously improving as criminals change tactics

- False Positive Reduction: More accurate flagging reduces investigation burden

In traditional rule-based systems, approximately 95% of alerts turn out to be false positives; modern AI systems reduce that share to 20-40%, dramatically improving efficiency.
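The practical effect of those false positive shares is easiest to see as investigator workload. The sketch below assumes both kinds of system surface the same number of genuine cases, which the figures above do not state, and computes how many alerts must be reviewed to reach them.

```python
def alerts_to_review(true_hits, false_positive_share):
    """Total alerts needed to surface `true_hits` genuine cases.

    If a share f of alerts are false positives, then (1 - f) are genuine,
    so total alerts = true_hits / (1 - f).
    """
    return true_hits / (1.0 - false_positive_share)


true_hits = 100  # hypothetical number of genuine cases
legacy = alerts_to_review(true_hits, 0.95)
ai_low = alerts_to_review(true_hits, 0.20)
ai_high = alerts_to_review(true_hits, 0.40)

print(f"Rule-based: about {legacy:.0f} alerts")
print(f"AI-based: about {ai_low:.0f} to {ai_high:.0f} alerts")
```

Under that assumption, finding 100 genuine cases takes about 2,000 alerts at a 95% false positive share, versus roughly 125 to 167 alerts at 20-40%.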

4. What’s the difference between RegTech and FinTech AI?

RegTech AI: Focuses specifically on regulatory compliance, risk management, and reporting. Examples include AML monitoring, compliance automation, and regulatory reporting systems.

FinTech AI: Broader category including any AI application in financial services—trading algorithms, customer service chatbots, loan processing, fraud detection, and RegTech applications.

RegTech is a subset of FinTech specifically addressing compliance and regulatory requirements.

5. Are current regulations adequate for AI risks in finance?

This remains debated. Current positions:

Regulatory View: Major regulators (SEC, FCA, MAS, ESMA) believe existing frameworks adequately address AI risks when properly applied, though they continue monitoring and issuing guidance.

Industry View: Mixed opinions. Some firms argue current rules provide sufficient flexibility; others want clearer AI-specific guidance to ensure compliance and reduce uncertainty.

Expert Consensus: Existing frameworks provide foundation, but rapidly evolving AI capabilities may require targeted additions—particularly around explainability, model validation, and systemic risk management.

6. What happens if an AI system makes a trading error causing significant losses?

Liability and consequences depend on circumstances:

Regulatory Response: Firms remain responsible for AI system outcomes under existing governance frameworks. Senior managers may face personal accountability under regimes like the U.K.’s SM&CR.

Legal Liability: Standard negligence and breach of duty principles apply. Firms must demonstrate proper governance, testing, and oversight.

Market Response: Circuit breakers and trading halts can limit damage. Firms must have contingency plans for AI system failures.

Precedent: While no major AI-specific trading disasters have occurred, algorithmic trading incidents (like the 2010 Flash Crash) demonstrate that firms bear responsibility for their systems’ actions.

7. How do regulators monitor AI systems they don’t have direct access to?

Regulators employ multiple approaches:

- Examinations & Audits: Regular reviews of AI governance, testing documentation, and validation processes

- Outcome Analysis: Monitoring for patterns suggesting problematic AI behavior

- Model Documentation Requirements: Firms must maintain comprehensive records

- Third-Party Audits: Independent validation of high-risk systems

- Industry Sweeps: Broad information requests to identify emerging risks

- Regulatory Technology: Regulators increasingly use their own AI for market surveillance

8. What’s the timeline for AI to fully transform financial services?

Transformation is already underway but will continue evolving:

Current State (2025): 60-73% of equity trading is algorithmic; AI widely used in fraud detection, customer service, and basic automation.

Near-Term (2026-2027): Expanded GenAI applications in compliance, analysis, and advisory services; regulatory frameworks mature.

Medium-Term (2028-2030): AI-augmented financial advisors become standard; most operational processes significantly automated; advanced risk modeling widely deployed.

Long-Term (2030+): Potential for more fundamental structural changes in financial intermediation, though human oversight likely remains central to high-stakes decisions.

McKinsey has also brought forward its automation timeline: its earlier estimate that half of today’s work activities could be automated between 2035 and 2070 was revised in 2023 to roughly 2030 to 2060, with a midpoint around 2045.