Introduction

As we look ahead to 2026, AI in healthcare stands at a critical juncture. While artificial intelligence promises to transform hospitals, clinics, and public health systems worldwide, its successful integration hinges not on technological capability alone—but on ethical design, transparent data practices, and carefully cultivated patient trust.

Unlike other industries where rapid automation has faced fewer obstacles, healthcare AI adoption requires navigating complex regulatory frameworks, protecting sensitive patient data, and maintaining the irreplaceable human element of medical care. The question isn’t whether AI in healthcare will expand in 2026; it’s whether that expansion will be done responsibly.

According to the World Health Organization’s 2024 Digital Health Technical Advisory Group Report, healthcare AI investment is projected to reach $110 billion globally by 2027, with the majority focused on administrative automation and clinical decision support systems. However, the same report emphasizes that “technical capability must never outpace ethical safeguards.”

This comprehensive analysis examines how healthcare organizations are implementing AI responsibly, what ethical frameworks guide deployment, how patient trust is being built and maintained, and what real-world implementations reveal about the future of healthcare automation.

1. The Current State of AI in Healthcare

AI in healthcare in 2026 represents a carefully calibrated approach to automation, one that prioritizes augmenting human capability rather than replacing it.

Market Size & Growth Trajectory

The global healthcare AI market reached $18.2 billion in 2024, according to Deloitte’s Global Health Care Outlook, with projections suggesting it could reach $110-125 billion by 2027. This represents a compound annual growth rate (CAGR) of approximately 38-42%—among the fastest-growing segments of the broader AI industry.
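
Growth figures like these can be checked with the standard compound annual growth rate formula, (end / start) ^ (1 / years) - 1. The sketch below is illustrative only: the function name `cagr` is ours, and it assumes the 2024 figure is the baseline and that the projection compounds over three years to 2027. Under those assumptions the implied rate comes out well above the quoted 38-42%, which suggests the quoted rate rests on a different baseline or time horizon than the two endpoints given here.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market sizes quoted above, in billions of USD. The three-year horizon
# (2024 to 2027) is an assumption about how the projection should be read.
low = cagr(18.2, 110.0, 3)
high = cagr(18.2, 125.0, 3)
print(f"Implied CAGR: {low:.0%} to {high:.0%}")
```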

However, growth patterns vary significantly by application:

Administrative AI (Fastest Growing):

- Patient scheduling and registration: 45% CAGR

- Revenue cycle management: 42% CAGR

- Supply chain optimization: 38% CAGR

- Documentation and coding assistance: 47% CAGR

Clinical Decision Support (Moderate Growth):

- Diagnostic imaging analysis: 28% CAGR

- Treatment planning assistance: 25% CAGR

- Patient monitoring and alerts: 31% CAGR

Direct Patient Care (Slowest Growth):

- Automated triage: 18% CAGR

- Treatment recommendation: 15% CAGR

- Autonomous procedures: <5% CAGR

The pattern is clear: AI in healthcare is scaling fastest where it supports existing workflows without making autonomous clinical decisions. As explored in our previous coverage, AI in healthcare diagnosis workflows focuses primarily on decision support rather than decision-making.

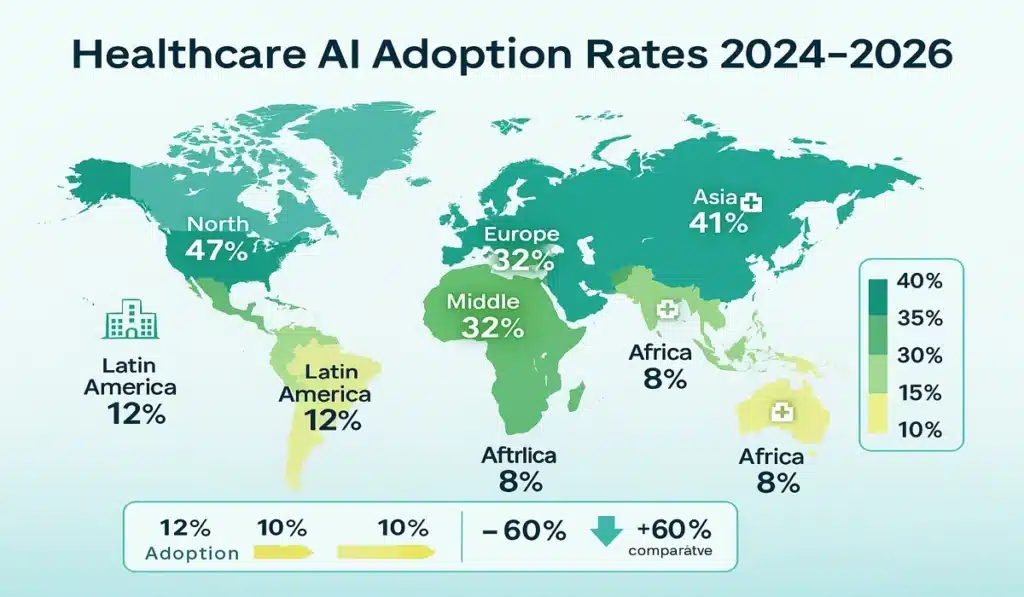

Geographic Adoption Patterns

Healthcare AI adoption varies dramatically by region:

North America: Leads in deployment, with 47% of large hospitals (500+ beds) using at least one AI-powered administrative system as of 2024 (HIMSS Analytics).

Europe: Growing adoption at 32% of large hospitals, with stronger emphasis on privacy-preserving AI due to GDPR requirements.

Asia-Pacific: Rapid growth in urban centers (41% adoption in major Chinese and South Korean hospitals) but significant rural-urban divides.

Middle East: Strategic investments, particularly in UAE and Saudi Arabia, as detailed in our analysis of Saudi Arabia’s GCC AI investment leadership.

Latin America & Africa: Emerging adoption (8-15% in large hospitals) with focus on telemedicine and diagnostic support.

2. Why Ethical AI Matters in Healthcare

The stakes for ethical AI implementation are uniquely high in healthcare. Unlike commercial applications where errors might cost revenue or convenience, healthcare AI mistakes can literally cost lives. But ethical concerns extend far beyond safety.

The Trust Imperative

Patient trust in healthcare institutions has declined in many regions over the past decade. In the United States, the Pew Research Center found that only 71% of Americans express “a great deal” or “fair amount” of confidence in medical scientists—down from 87% in 2019. Similar trends appear globally.

AI adoption risks further eroding this trust if implemented without transparency. A 2024 survey by the American Medical Association found:

- 67% of patients worry that AI might make mistakes in their care

- 58% fear their medical data being used without consent

- 43% are concerned about AI replacing their doctors

- However, 72% support AI use for administrative tasks that don’t involve clinical decisions

- 81% are more comfortable with AI when healthcare providers clearly explain its role

These findings underscore a critical insight: patients aren’t categorically opposed to AI in healthcare—they want transparency, control, and human oversight.

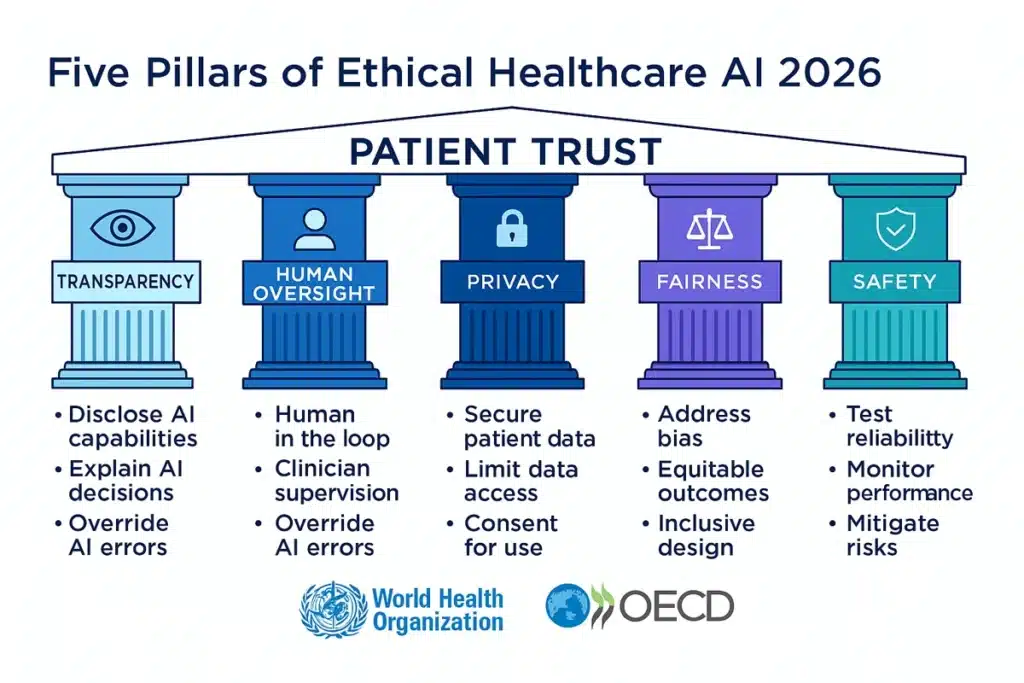

Five Pillars of Ethical Healthcare AI

The WHO’s Digital Health Technical Advisory Group, in collaboration with the OECD’s AI Policy Observatory, has identified five essential pillars for ethical healthcare AI:

1. Transparency & Explainability

AI systems must provide clear, understandable explanations of their recommendations. “Black box” algorithms that cannot explain their reasoning are increasingly rejected by healthcare systems.

Example: When IBM Watson Health faced criticism for opaque recommendations, several major hospital systems discontinued use. Conversely, systems that provide clear decision pathways (showing which patient factors influenced recommendations) see higher adoption.

2. Human Agency & Oversight

No AI system should make autonomous clinical decisions. Human healthcare professionals must always retain final authority. This principle is now codified in regulations including the EU AI Act’s provisions for healthcare applications.

3. Privacy & Data Governance

Patient health data requires the highest level of protection. Systems must implement encryption, access controls, audit logging, and comply with regulations including HIPAA (US), GDPR (Europe), and regional equivalents.

4. Fairness & Non-Discrimination

AI systems must be regularly tested for demographic biases. A concerning 2024 Stanford study found that 73% of clinical AI algorithms showed at least some racial or gender bias in their training data—highlighting the critical need for ongoing auditing.

5. Safety & Accountability

Clear chains of responsibility must exist. When AI-assisted care goes wrong, there must be identifiable accountability—including both the healthcare provider and, where appropriate, the technology vendor.

For deeper analysis of AI governance across sectors, see our coverage of AI regulation in the United States and the EU AI Act compliance framework.

3. Administrative Automation Without Replacing Care Providers

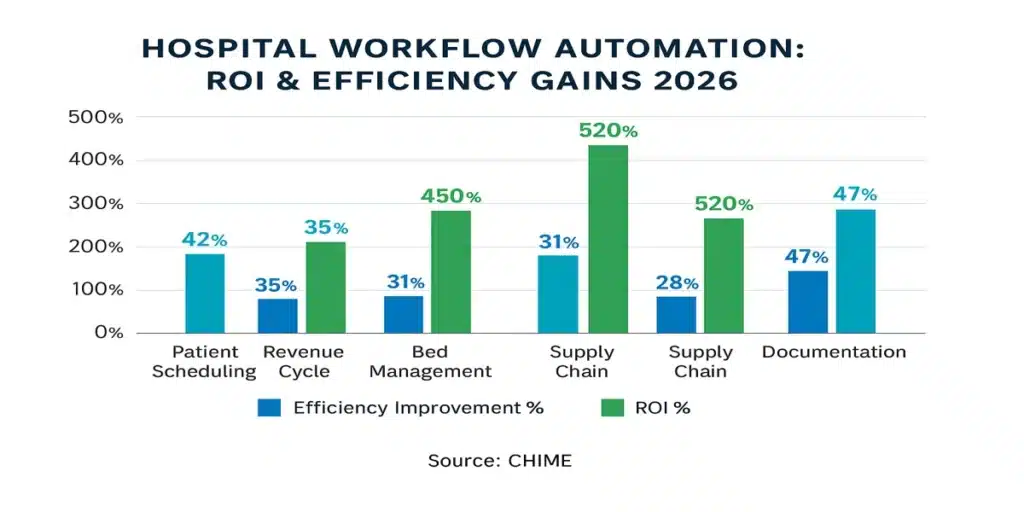

The fastest-growing segment of AI in healthcare in 2026 isn’t clinical AI; it’s administrative automation. This represents a strategic choice by healthcare systems to improve efficiency without venturing into the more complex territory of clinical decision-making.

Where AI Is Making the Biggest Impact

Patient Scheduling & Access Management

Traditional problem: No-show rates of 15-30% cost U.S. healthcare systems an estimated $150 billion annually.

AI solution: Predictive scheduling systems analyze patient history, demographics, weather, transportation patterns, and other factors to:

- Predict no-show likelihood

- Automatically send targeted reminders via patient’s preferred channel

- Suggest optimal appointment times for each patient

- Enable intelligent overbooking that doesn’t compromise care

Results: Cleveland Clinic reported reducing no-show rates from 19% to 11% using AI scheduling assistance, an 8-percentage-point drop that effectively frees up appointment capacity without building new facilities.
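
To make the "intelligent overbooking" idea concrete, here is a minimal sketch of how per-patient no-show probabilities could translate into an expected attendance figure and a suggested number of extra bookings. The probabilities, the function names, and the round-down rule are all hypothetical; a production system would use a trained prediction model and clinic-specific constraints.

```python
import math
from typing import Sequence


def expected_attendance(no_show_probs: Sequence[float]) -> float:
    """Expected number of patients who show up, given per-patient no-show probabilities."""
    return sum(1.0 - p for p in no_show_probs)


def suggested_overbooks(no_show_probs: Sequence[float]) -> int:
    """Extra bookings that keep expected attendance at or below the number of slots.

    Conservative rule (an assumption, not a clinical standard): round the
    expected number of empty slots down, so the session is never expected
    to exceed its capacity.
    """
    expected_empty = sum(no_show_probs)
    return math.floor(expected_empty)


# Hypothetical model output for a ten-slot clinic session.
probs = [0.05, 0.30, 0.10, 0.45, 0.20, 0.15, 0.25, 0.10, 0.35, 0.45]
print(round(expected_attendance(probs), 2), suggested_overbooks(probs))
```

Rounding down is a deliberately cautious choice: it trades some unused capacity for a lower chance of patients waiting because too many people arrived.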

Revenue Cycle Management

Traditional problem: Medical coding errors cost hospitals 1-3% of revenue, while claim denials average 8-12% of submitted claims.

AI solution: Automated coding assistance that:

- Suggests appropriate diagnostic and procedure codes

- Flags likely claim denials before submission

- Identifies undercoding that costs revenue

- Automatically appeals denied claims with supporting documentation

Results: Mercy Health, a major U.S. hospital system, reported $12 million in recovered revenue in the first year of AI coding assistance implementation.

Bed Management & Patient Flow

Traditional problem: Emergency departments often operate at 90%+ capacity, creating dangerous bottlenecks and treatment delays.

AI solution: Capacity prediction systems that:

- Forecast admission and discharge patterns 6-24 hours ahead

- Identify patients likely to experience complications requiring longer stays

- Optimize bed assignment based on patient needs and staffing

- Coordinate transport and transfers

Results: A 2024 HIMSS Analytics study of 50 hospitals using AI bed management found average emergency department wait times decreased by 31% and ICU bed utilization improved by 18%.

Supply Chain Optimization

Traditional problem: Healthcare supply chains are notoriously inefficient, with 15-20% waste in perishable supplies and frequent shortages of critical items.

AI solution: Predictive inventory management that:

- Forecasts supply needs based on seasonal patterns, scheduled procedures, and patient populations

- Optimizes ordering to reduce waste while preventing shortages

- Identifies cost-saving opportunities and vendor alternatives

- Tracks expiration dates to minimize waste

Results: Kaiser Permanente reported $50 million in annual supply chain savings after implementing AI optimization across 39 hospitals.

The pattern is clear: administrative AI in healthcare delivers measurable ROI while avoiding the ethical complexities of clinical AI. This aligns with findings about how AI co-pilots are transforming employment models across industries—augmenting rather than replacing workers.

4. Data Privacy, Consent & Building Patient Trust

If administrative efficiency is where AI in healthcare delivers the most immediate value, data privacy is where it faces the most scrutiny. Healthcare data breaches affected over 88 million Americans in 2024 alone, according to the U.S. Department of Health and Human Services—making privacy concerns far from theoretical.

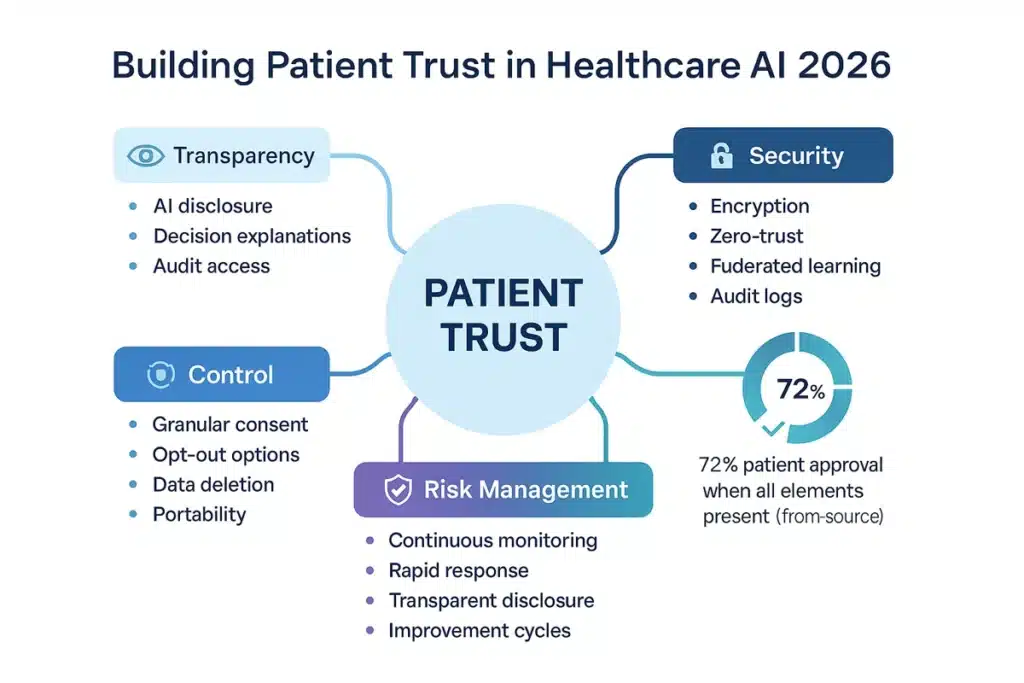

The Trust Equation

Trust in healthcare AI depends on a simple equation:

Trust = Transparency + Security + Control – Risk

Let’s examine each component:

Transparency: What AI Does and Why

Leading healthcare systems now provide “AI transparency statements” that explain:

- Which AI systems are being used in the patient’s care

- What data those systems access

- How AI recommendations are generated

- Who makes final decisions based on AI input

- How patients can opt out of certain AI applications

Example: Mayo Clinic’s patient portal includes an “AI in Your Care” section that shows patients exactly which AI systems touched their care journey and how.

Security: Protecting Sensitive Information

Modern healthcare AI security includes:

Encryption at Rest and in Transit: All patient data encrypted using AES-256 or equivalent standards

Zero-Trust Architecture: No system automatically trusted; continuous verification required

Differential Privacy: AI models trained without exposing individual patient data

Federated Learning: Models trained across multiple institutions without sharing actual patient records

Audit Logging: Complete tracking of who accessed what data when
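
As one concrete illustration of the differential privacy item above, the sketch below releases a patient count with Laplace noise, a standard mechanism for counting queries. It is a teaching example rather than a vetted privacy implementation: the function names and the epsilon value are assumptions, and real deployments should rely on audited libraries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential draws with rate 1/scale
    follows a Laplace distribution with the given scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one patient changes
    the result by at most 1), so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # fixed seed only so the example is repeatable
noisy = private_count(true_count=128, epsilon=0.5, rng=rng)
print(f"Noisy count: {noisy:.1f}")
```

A smaller epsilon means more noise and stronger privacy; choosing it is a policy decision as much as a technical one.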

The EU’s GDPR has driven many of these practices globally, as healthcare systems operating in Europe must meet its stringent requirements. As detailed in our EU AI Act compliance checklist, healthcare AI faces particularly strict oversight.

Control: Patient Rights Over Their Data

Progressive healthcare systems implement:

Granular Consent: Patients choose specifically which data can be used for which purposes (treatment, research, AI training, etc.)

Right to Explanation: Patients can request detailed explanations of any AI-assisted decision in their care

Opt-Out Options: Ability to decline AI assistance in care where human alternatives exist

Data Deletion: Right to have data removed from AI training datasets (within clinical and legal constraints)

Portability: Ability to download and transfer health records

A 2024 study in JAMA Network Open found that hospitals offering granular data controls saw 27% higher patient satisfaction scores and 18% higher willingness to participate in research studies.

Risk Management: When Things Go Wrong

Even with best practices, AI systems make mistakes. Ethical healthcare organizations:

Proactively Monitor: Continuous testing for accuracy, bias, and unexpected behaviors

Rapid Response: Clear protocols for investigating and addressing AI errors

Transparent Disclosure: Informing patients when AI mistakes may have affected care

Continuous Improvement: Using errors to improve systems and training

Insurance & Liability: Clear policies on who bears responsibility for AI-related errors

The Consent Challenge

One of the most complex ethical questions for AI in healthcare in 2026 is consent. Traditional medical consent is procedure-specific: a patient consents to this surgery, that medication, these tests. But AI often operates across many touchpoints in care.

Solutions emerging in 2026 include:

Tiered Consent Models: Patients consent separately for:

- Tier 1: AI that improves operational efficiency (scheduling, billing) – assumed consent

- Tier 2: AI that assists clinical workflows (alerting providers to test results) – opt-out consent

- Tier 3: AI that directly informs clinical decisions – explicit opt-in consent

Dynamic Consent Platforms: Digital systems where patients can adjust their AI preferences at any time, similar to privacy settings on social media

Contextual Consent: AI systems request permission at the moment of use rather than requiring blanket advance consent

These approaches balance patient autonomy with practical healthcare delivery.
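
The tiered model described above can be sketched as a small lookup plus a decision function. This is a hypothetical illustration: the names `ConsentMode`, `TIER_CONSENT`, and `ai_use_permitted` are ours, and treating Tier 1 as always permitted is one reading of "assumed consent" rather than a legal rule.

```python
from enum import Enum
from typing import Optional


class ConsentMode(Enum):
    ASSUMED = "assumed"   # Tier 1: operational efficiency (scheduling, billing)
    OPT_OUT = "opt_out"   # Tier 2: clinical workflow assistance
    OPT_IN = "opt_in"     # Tier 3: directly informs clinical decisions


# Tier number mapped to consent mode, following the three tiers in the text.
TIER_CONSENT = {
    1: ConsentMode.ASSUMED,
    2: ConsentMode.OPT_OUT,
    3: ConsentMode.OPT_IN,
}


def ai_use_permitted(tier: int, patient_choice: Optional[bool]) -> bool:
    """Decide whether an AI system in the given tier may be used for a patient.

    patient_choice is True (explicitly agreed), False (explicitly declined),
    or None (no preference recorded).
    """
    mode = TIER_CONSENT[tier]
    if mode is ConsentMode.ASSUMED:
        return True  # assumption: Tier 1 does not depend on patient choice
    if mode is ConsentMode.OPT_OUT:
        return patient_choice is not False  # allowed unless declined
    return patient_choice is True  # Tier 3 needs an explicit yes
```

For example, `ai_use_permitted(3, None)` returns `False` because Tier 3 requires explicit opt-in, while `ai_use_permitted(2, None)` returns `True` because Tier 2 only excludes patients who have declined.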

5. Governance Frameworks & Regulatory Oversight

The rapid evolution of AI in healthcare has prompted governments and international organizations to develop comprehensive governance frameworks. Unlike many AI applications, healthcare AI cannot operate in a regulatory vacuum—it must comply with existing medical device regulations, healthcare privacy laws, and emerging AI-specific requirements.

International Frameworks

World Health Organization (WHO)

The WHO’s “Ethics and Governance of Artificial Intelligence for Health” guidance (updated 2024) establishes six principles:

- Protecting autonomy

- Promoting human well-being and safety

- Ensuring transparency and intelligibility

- Fostering accountability

- Ensuring inclusiveness and equity

- Promoting responsive and sustainable AI

While not legally binding, WHO guidance shapes national policies worldwide. The organization’s Digital Health Technical Advisory Group reviews high-risk AI applications and issues recommendations.

OECD AI Principles

The Organisation for Economic Co-operation and Development’s AI Principles, endorsed by 50 countries, include specific healthcare provisions:

- Human-centered values and fairness

- Transparency and explainability

- Robustness, security and safety

- Accountability

The OECD’s AI Policy Observatory tracks healthcare AI implementations across member nations, providing comparative policy analysis.

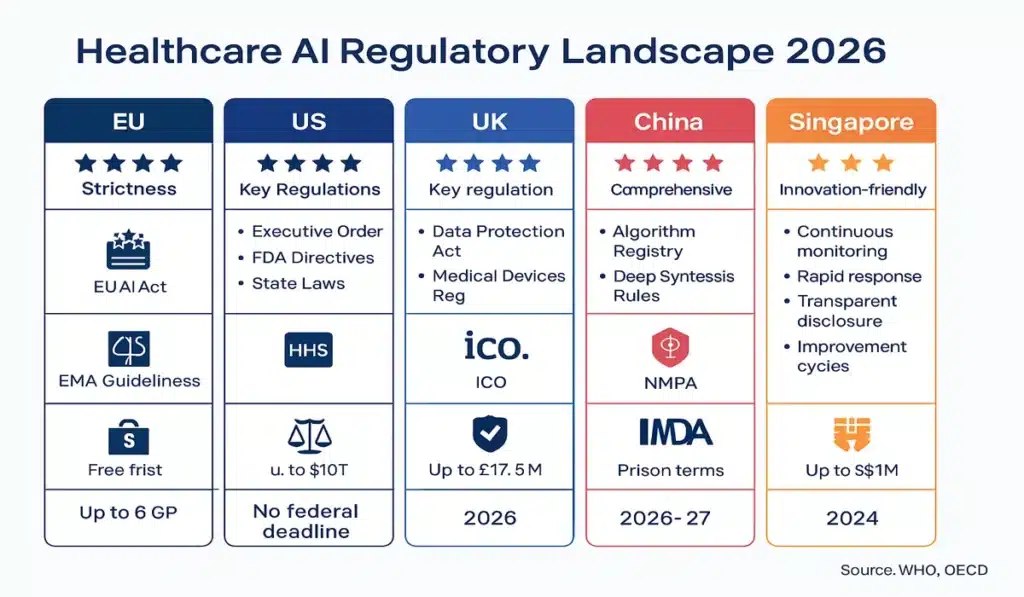

Regional Regulations

European Union: AI Act & GDPR

The EU takes the strictest approach to healthcare AI globally:

AI Act Classification: Most healthcare AI systems are classified as “high-risk,” requiring:

- Conformity assessments before deployment

- Risk management systems

- Technical documentation

- Transparency to users

- Human oversight measures

- Cybersecurity safeguards

- Post-market monitoring

GDPR Requirements: Healthcare data receives special protections:

- Explicit consent required for data processing

- Right to explanation for automated decisions

- Data minimization principles

- Breach notification within 72 hours

Under GDPR, non-compliance penalties can reach €20 million or 4% of global annual turnover, whichever is higher.

United States: Fragmented Approach

Unlike Europe’s comprehensive framework, U.S. healthcare AI regulation is fragmented:

FDA Oversight: Medical devices (including AI-powered software) require pre-market approval or clearance. The FDA’s 2024 AI/ML Framework focuses on:

- Risk categorization

- Real-world performance monitoring

- Transparency in algorithm changes

- Bias testing requirements

HIPAA Privacy Rules: The Health Insurance Portability and Accountability Act governs health data privacy but was written in 1996—before modern AI existed. The Department of Health and Human Services has issued interpretive guidance but Congress has not updated the law.

State-Level Regulations: Individual states increasingly regulate healthcare AI, creating a patchwork of requirements—a challenge detailed in our analysis of the federal vs. state AI regulation divide.

United Kingdom: Post-Brexit Framework

The UK is developing a “pro-innovation” approach through:

- NHS AI Lab coordination

- NICE guidance on AI evidence standards

- ICO guidance on AI and data protection

- MHRA medical device approval processes

Asia-Pacific Approaches

China: Comprehensive AI regulations issued in 2024 require:

- Security assessments for healthcare algorithms

- User consent for data collection

- Bias and discrimination prevention

- Annual algorithmic audits

Singapore: Smart Nation initiative includes healthcare AI “sandboxes” for testing innovations under regulatory supervision

Japan: MHLW (Ministry of Health, Labour and Welfare) AI guidelines emphasize safety and international harmonization

Industry Self-Regulation

Beyond government mandates, healthcare AI is shaped by industry standards:

DICOM AI Standards: Imaging AI follows Digital Imaging and Communications in Medicine protocols

HL7 FHIR Standards: Healthcare interoperability standards increasingly incorporate AI exchange protocols

Vendor Commitments: Major healthcare AI vendors (Epic, Cerner, Philips, GE Healthcare) have formed coalitions establishing shared ethical principles

These self-regulatory efforts often move faster than formal legislation, establishing de facto standards that governments later codify.

6. Real-World Implementations: What’s Working

Theory and policy matter, but AI in healthcare in 2026 is ultimately defined by real-world implementations. Here are detailed case studies of successful deployments:

Case Study 1: Mayo Clinic’s Predictive Sepsis Detection

Challenge: Sepsis kills 270,000 Americans annually and costs healthcare systems $24 billion. Early detection dramatically improves survival but requires constant vigilance that overwhelms staff.

AI Solution: Mayo Clinic developed an AI system that continuously monitors patient vitals, lab results, and medical history to predict sepsis risk 6-12 hours before traditional clinical presentation.

Implementation Approach:

- 18-month development with 35,000 patient records

- Extensive testing for demographic bias

- Integration with existing electronic health records

- Real-time alerts to nursing staff

- Human clinician always makes treatment decisions

Results (After 24 months):

- 42% reduction in sepsis-related deaths

- Average detection 8.4 hours earlier than traditional methods

- 18% reduction in ICU length of stay for sepsis patients

- Zero false negatives (all sepsis cases detected)

- False positive rate: 12% (acceptable given high stakes)

Key Success Factors:

- Strong physician buy-in during development

- Extensive bias testing revealed and corrected initial racial disparities

- Clear protocols for staff response to alerts

- Transparency with patients about AI role

Challenges:

- Initial staff skepticism requiring extensive training

- Alert fatigue management

- Integration costs ($2.3 million initial investment)

ROI: Estimated $15 million annual savings in improved outcomes and reduced ICU stays.

Case Study 2: Kaiser Permanente’s Administrative Automation

Challenge: Physicians spent 35-40% of time on documentation and administrative tasks, contributing to burnout and reducing patient interaction time.

AI Solution: Comprehensive administrative automation across scheduling, documentation, coding, and prior authorization.

Key Components:

Ambient Documentation: AI listens to patient-physician conversations, generates draft clinical notes, and suggests appropriate billing codes (physician reviews and approves all)

Intelligent Scheduling: Optimizes appointment booking based on patient history, physician expertise, expected duration, and facility capacity

Prior Authorization Automation: AI handles routine insurance authorizations, flagging only complex cases for human review

Results (After 18 months across 39 hospitals):

- Average physician documentation time reduced by 12 minutes per patient

- Total administrative time reduced from 38% to 24% of physician workday

- Patient face time increased by an average of 18 minutes per visit

- Physician burnout scores improved by 31%

- Patient satisfaction scores increased 14 percentage points

- Coding accuracy improved from 87% to 96%

Costs:

- $180 million initial investment across system

- $35 million annual operating costs

ROI: $320 million annual value (including $50M supply chain savings mentioned earlier, $120M improved coding revenue, $150M value of recaptured physician time)
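
The figures in this case study are enough for a rough payback estimate. The sketch below takes the reported value estimates at face value and assumes benefits accrue evenly through the year with no discounting; the function name `payback_months` is ours.

```python
def payback_months(initial_investment: float,
                   annual_value: float,
                   annual_operating_cost: float) -> float:
    """Months needed for the net annual benefit to repay the initial investment."""
    net_annual = annual_value - annual_operating_cost
    if net_annual <= 0:
        raise ValueError("net annual benefit must be positive for payback to occur")
    return 12.0 * initial_investment / net_annual


# Figures from the case study, in millions of USD.
components = {"supply_chain": 50, "coding_revenue": 120, "physician_time": 150}
annual_value = sum(components.values())  # matches the reported $320M total
months = payback_months(180, annual_value, 35)
print(f"Net annual benefit: ${annual_value - 35}M, payback in about {months:.1f} months")
```

Note that $150M of the total is the estimated value of recaptured physician time rather than cash, so a cash-only payback period would be longer.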

Case Study 3: NHS England’s AI Triage in Emergency Departments

Challenge: Emergency departments overwhelmed with 30-40% non-urgent cases, creating dangerous wait times for critical patients.

AI Solution: AI-assisted triage system that helps nurses prioritize patients by predicting deterioration risk and necessary resource intensity.

Implementation:

- Pilot in 15 emergency departments 2023-2024

- Rollout to 50 additional facilities in 2025

- System analyzes presenting symptoms, vitals, medical history

- Nurses maintain full triage authority; AI provides recommendations

Results (Pilot program):

- Average wait time for critical patients reduced from 47 minutes to 18 minutes

- Overall patient flow improved by 23%

- Reduced “left without being seen” rates from 8% to 3%

- No increase in adverse events

- Nurse satisfaction with triage support: 78% positive

Notable: System initially showed bias toward younger patients in resource allocation. After retraining with more diverse data and adding explicit age-fairness constraints, bias was eliminated.

Expansion Plans: NHS plans to expand to 120 emergency departments by end of 2026.

Case Study 4: Singapore General Hospital’s Diabetic Retinopathy Screening

Challenge: Diabetic retinopathy is a leading cause of blindness but requires specialist examination. Singapore has high diabetes rates but a limited number of ophthalmologists.

AI Solution: AI screening system analyzes retinal images, flagging patients needing specialist referral.

Results (3-year deployment):

- Screened 180,000 patients

- Sensitivity (catching true cases): 97%

- Specificity (avoiding false alarms): 92%

- Reduced specialist referrals by 48% (only high-risk cases referred)

- Average time from screening to treatment for those needing it: reduced from 8 weeks to 2 weeks

- Estimated 2,400 cases of blindness prevented
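
Sensitivity and specificity alone do not say how many flagged patients actually have the disease; that depends on how common the condition is in the screened population. The sketch below applies Bayes' rule to the reported 97% sensitivity and 92% specificity. The prevalence values are hypothetical, since the case study does not report one, and the function name is ours.

```python
from typing import Tuple


def predictive_values(sensitivity: float,
                      specificity: float,
                      prevalence: float) -> Tuple[float, float]:
    """Return (PPV, NPV) from sensitivity, specificity, and disease prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)  # share of positive flags that are real
    npv = true_neg / (true_neg + false_neg)  # share of negative results that are real
    return ppv, npv


# Sensitivity and specificity are the reported figures; the prevalence values
# are hypothetical, chosen only to show how strongly PPV depends on them.
for prevalence in (0.05, 0.10, 0.20):
    ppv, npv = predictive_values(0.97, 0.92, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

The takeaway is that a screening tool with strong headline accuracy can still generate many false alarms when the condition is uncommon, which is why specialist confirmation remains part of the workflow.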

Cost Impact: $8 million investment, $22 million estimated savings in prevented disability and reduced specialist burden.

Geographic Expansion: Model now being piloted in rural clinics across Southeast Asia, demonstrating AI’s potential to extend specialist care to underserved areas.

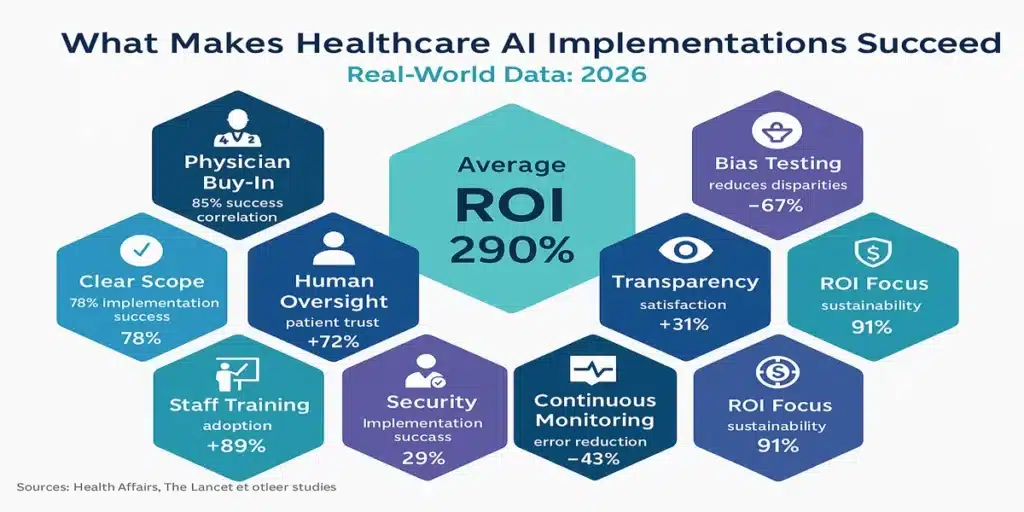

These implementations demonstrate that successful AI in healthcare requires:

- Clear, limited scope (not trying to do everything)

- Extensive testing and bias mitigation

- Strong human oversight

- Physician buy-in and involvement

- Transparent patient communication

- Realistic ROI expectations

- Commitment to continuous improvement

Similar patterns appear in AI breakthroughs in antibiotic design, where focused applications deliver impressive results.

7. Challenges & Controversies

While successful implementations demonstrate the potential of AI in healthcare in 2026, significant challenges and controversies remain:

Algorithmic Bias & Health Equity

Perhaps no issue has generated more concern than algorithmic bias. Multiple studies have documented troubling patterns:

Race & Ethnicity: A widely cited 2019 Science paper (still highly relevant) found a healthcare risk-prediction algorithm used on millions of Americans systematically discriminated against Black patients. The algorithm used healthcare costs as a proxy for healthcare needs—but Black patients have historically received less care due to systemic inequities, creating a vicious cycle.

Gender: Research published in 2024 in The Lancet found that diagnostic AI trained primarily on male patient data showed 20-30% lower accuracy for women across several conditions including cardiovascular disease and autoimmune disorders.

Socioeconomic Status: Algorithms trained on data from well-resourced hospitals often perform poorly when deployed in under-resourced settings, potentially widening health disparities.

Solutions in Development:

- Mandatory bias testing across demographic groups before deployment

- Diverse training data requirements

- Ongoing monitoring for “algorithmic drift” (bias that emerges over time)

- Health equity impact assessments for AI systems

- Community advisory boards for AI deployment decisions

However, critics note that these solutions often come after problems are discovered, not before—raising questions about whether healthcare systems should deploy AI more cautiously.
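
Bias testing across demographic groups, the first item in the list above, often starts with something as simple as comparing error rates per group. The sketch below computes sensitivity for each group and the largest gap between groups. The records, group labels, and function names are hypothetical, and a real audit would examine more metrics and far larger samples.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def sensitivity_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Per-group sensitivity (true positive rate).

    Each record is (group, actual, predicted) with 1 meaning condition present.
    Groups with no positive cases are omitted because sensitivity is undefined.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            if predicted == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / n for g, n in positives.items()}


def max_sensitivity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, actual, predicted).
sample = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 0, 1),
]
rates = sensitivity_by_group(sample)
print(rates, max_sensitivity_gap(rates))
```

Running the same check on a schedule, rather than once before deployment, is one way to watch for the drift described in the list.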

The “Black Box” Problem

Many AI systems, particularly deep learning models, cannot fully explain their reasoning. This creates dilemmas:

Clinical Dilemma: How can physicians appropriately weigh AI recommendations they don’t fully understand?

Legal Dilemma: If AI contributes to a medical error, how is liability assigned when the reasoning isn’t transparent?

Trust Dilemma: How can patients trust recommendations from systems that can’t be fully explained?

The push for “explainable AI” (XAI) has gained momentum, with some jurisdictions (including the EU under the AI Act) requiring explainability for high-risk applications. However, there’s often a trade-off: simpler, more explainable models may be less accurate than complex “black box” systems.

Cybersecurity Vulnerabilities

Healthcare AI systems present tempting targets for cyberattacks:

Data Theft: Health records sell for 10-50 times more than credit card numbers on dark web markets

Ransomware: Attacks that disable hospital systems can literally hold patient care hostage

Adversarial Attacks: Subtle manipulations of input data can fool AI systems into dangerous misclassifications

The intersection of AI and cybersecurity is critical in healthcare, where compromised AI could directly harm patients.

Workforce Disruption Concerns

While administrative AI is designed to support rather than replace staff, concerns persist:

Medical Coders: Revenue cycle AI directly threatens jobs of medical coders. The American Academy of Professional Coders estimates 15-20% of coding positions could be eliminated by 2027.

Radiologists: Diagnostic imaging AI, while currently used as decision support, could eventually reduce need for radiologists—a specialty already facing uncertain employment prospects.

Nurses & Support Staff: Automation of documentation, vitals monitoring, and patient communication could reduce staffing needs.

Counter-Arguments:

- Healthcare faces severe staffing shortages; AI might help manage these rather than causing unemployment

- Automation of routine tasks could allow staff to focus on higher-skill, higher-value work

- New jobs are emerging (AI trainers, bias auditors, healthcare data scientists)

The parallel to AI co-pilots transforming employment models across industries suggests healthcare will see workforce evolution rather than wholesale job loss—but dislocations are inevitable.

Regulatory Lag

Healthcare AI evolves faster than regulations can adapt:

FDA Challenge: Medical device approval processes take months or years, but AI systems can be updated continuously. How should regulators handle AI that “learns” after approval?

Privacy Laws: HIPAA predates modern AI by decades. Should Congress update privacy law for the AI era, or can existing rules suffice?

International Fragmentation: Different countries take radically different approaches, creating challenges for global healthcare technology companies.

Cost & Access Inequities

Cutting-edge AI systems are expensive, potentially widening gaps between well-funded and under-resourced healthcare systems:

Implementation Costs: Hospital AI systems can cost $5-50 million for full implementation

Ongoing Expenses: Maintenance, updates, and monitoring add significant operating costs

Technical Requirements: Modern AI requires robust IT infrastructure many rural hospitals lack

Concern: AI could create a “two-tier” healthcare system where wealthy institutions offer AI-enhanced care while others fall further behind.

Potential Solutions:

- Government subsidies for AI implementation in underserved areas

- Open-source healthcare AI models

- Regional AI centers serving multiple small facilities

- Telemedicine + AI to extend capability geographically

The Autonomy Question

Perhaps the deepest philosophical question: How much medical decision-making should we delegate to AI?

Incrementalist View: Keep humans firmly in control; AI only advises, never decides

Optimist View: In narrow domains where AI demonstrably outperforms humans (like certain imaging tasks), allow more autonomy with safeguards

Skeptic View: Human judgment encompasses factors AI cannot capture; resist automation of clinical decisions

This debate will shape AI in healthcare for decades to come.

8. The Road Ahead: 2026-2030

As we look beyond 2026, several trends will shape the future of AI in healthcare:

Expanded Diagnostic AI

While current applications focus primarily on imaging (radiology, pathology, dermatology), expect expansion into:

Multimodal Diagnosis: Systems integrating imaging, genomics, patient history, and real-time vitals for comprehensive diagnostic support

Rare Disease Detection: AI’s ability to recognize patterns across massive datasets could dramatically improve diagnosis of rare conditions currently taking years to identify

Predictive Diagnostics: Identifying disease risk years before traditional symptom presentation

Personalized Treatment Planning

Moving beyond “evidence-based medicine” (which treats averages) toward truly personalized care:

Pharmacogenomics: AI analyzing genetic profiles to predict medication effectiveness and side effect risks

Digital Twins: Virtual models of individual patients used to simulate treatment outcomes

Adaptive Protocols: Treatment plans that adjust in real-time based on patient response

Mental Health & Behavioral AI

One of healthcare’s most underserved areas could see AI transformation:

Early Intervention: AI analyzing speech patterns, social media activity, and other signals to identify mental health crises before they escalate

Therapeutic Chatbots: AI-powered mental health support (supplementing, not replacing, human therapists)

Medication Monitoring: AI tracking medication compliance and side effects

Concerns about AI in mental health are particularly acute, given the deeply personal nature of psychological care and risks of overreliance on technology.

Remote & Home-Based Care

The telemedicine boom accelerated by COVID-19 continues, with AI playing an increasing role:

Hospital-at-Home Programs: AI monitoring systems allowing hospitalized patients to recover at home safely

Chronic Disease Management: Continuous AI monitoring for diabetes, heart disease, etc. with automated alerts

Aging in Place: AI systems helping elderly patients live independently longer

This connects to broader trends in AI wellness and personalized healthcare apps.

Global Health Applications

AI’s potential to extend healthcare capability to underserved regions:

Telemedicine + AI: Bringing specialist-level care to areas lacking specialists

Disease Surveillance: AI analyzing multiple data sources to predict and respond to disease outbreaks

Resource Optimization: Helping resource-constrained healthcare systems maximize impact

Regulatory Harmonization

Expect international efforts to harmonize healthcare AI regulations:

Standards Development: ISO, IEEE, and other bodies developing global healthcare AI standards

Mutual Recognition Agreements: Countries accepting each other’s AI approvals (similar to pharmaceutical agreements)

WHO Leadership: Increased international coordination through WHO digital health initiatives

The Infrastructure Challenge

As explored in our analysis of AI infrastructure and data centers, healthcare AI scaling requires massive computing infrastructure—particularly for genomic analysis and medical imaging. Healthcare systems must invest in:

- On-premise computing for sensitive data

- Hybrid cloud architectures for less-sensitive applications

- Edge computing for real-time applications (monitoring, emergency response)

- Robust cybersecurity for all infrastructure

The Open Question: Who Benefits?

Perhaps the most important question about AI in healthcare in 2026 and beyond is who will primarily benefit:

Option A: Wealthy patients and institutions, widening healthcare disparities?

Option B: Everyone relatively equally, improving healthcare access and quality broadly?

Option C: Primarily underserved populations, by extending capability to areas lacking resources?

The answer depends not just on technology—but on policy choices, investment priorities, and societal values.

Frequently Asked Questions (FAQs)

1. How is AI currently being used in hospitals in 2025-2026?

AI in healthcare is primarily used for administrative automation (scheduling, billing, coding), operational efficiency (bed management, supply chain), and clinical decision support (alerting providers to test results, predicting patient deterioration). According to HIMSS Analytics, 47% of large U.S. hospitals use at least one AI-powered system. However, AI does not make autonomous clinical decisions—healthcare providers maintain final authority over all patient care decisions.

2. Will AI replace doctors and nurses?

No. Current and projected healthcare AI focuses on augmenting healthcare providers, not replacing them. AI handles administrative tasks, provides decision support, and automates routine processes, freeing healthcare professionals to focus on patient care. The WHO’s 2024 Digital Health guidance explicitly states that “AI must support and enhance human healthcare delivery, never replace the essential human elements of medical practice.” However, some support roles (like medical coding) may see workforce reductions.

3. Is my health data safe when hospitals use AI?

Healthcare AI is subject to strict privacy regulations including HIPAA (United States), GDPR (Europe), and similar laws globally. Best practices include encryption, zero-trust security architecture, audit logging, and privacy-preserving techniques like differential privacy and federated learning. However, healthcare remains a top target for cyberattacks, with 88 million Americans affected by health data breaches in 2024. Patients should ask their healthcare providers specifically about AI data practices and utilize granular consent options where available.
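To make one of the privacy-preserving techniques named above more concrete, here is a minimal sketch of differential privacy applied to a simple patient count. It uses the Laplace mechanism, a standard textbook approach; the function names, the example count of 120, and the epsilon value are illustrative assumptions, not details from any hospital system discussed in this article.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws, each with
    mean `scale`, follows a Laplace distribution centered on zero.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng):
    """Return a noisy count (hypothetical helper, for illustration only).

    Adding or removing one patient changes a count by at most 1, so the
    query's sensitivity is 1 and the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(2026)  # fixed seed so the demo is repeatable
    # Assumed example: 120 patients matched some query.
    print(private_count(120, epsilon=0.5, rng=rng))
```

The released number is close to the true count on average, but any single patient's presence or absence is masked by the noise. Production systems would also need to track the total privacy budget spent across queries, which this sketch leaves out.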

4. What regulations govern AI use in healthcare?

Multiple regulatory frameworks apply: The FDA regulates AI as medical devices (in the U.S.), the EU AI Act classifies most healthcare AI as “high-risk” requiring extensive testing and approval, the WHO provides international guidance, and individual countries have additional requirements. The regulatory landscape is complex and evolving, with significant variation across jurisdictions as explored in our federal vs. state AI regulation analysis.

5. Can AI in healthcare be biased?

Yes. Multiple studies have documented algorithmic bias in healthcare AI, including racial bias (algorithms trained on historically inequitable healthcare patterns), gender bias (systems trained primarily on male patients), and socioeconomic bias. A 2024 Stanford study found 73% of clinical AI algorithms showed at least some demographic bias. Leading healthcare systems now conduct mandatory bias testing, use diverse training data, and continuously monitor for emerging biases. However, eliminating bias entirely remains an ongoing challenge.
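As a rough illustration of what the bias testing mentioned above can involve, the sketch below compares false negative rates (missed cases) across demographic groups. The record format, group labels, and toy data are assumptions made for this example; real audits use validated clinical datasets and several fairness metrics, not just one.

```python
from collections import defaultdict


def false_negative_rates(records):
    """Compute the share of true cases the model missed, per group.

    `records` is an iterable of (group, actual_positive, predicted_positive)
    tuples. Groups with no actual positive cases are omitted.
    """
    positives = defaultdict(int)
    misses = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                misses[group] += 1
    return {group: misses[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Difference between the worst and best group rates."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy data (hypothetical): every patient here truly has the condition.
    toy = [
        ("group_a", True, True), ("group_a", True, True),
        ("group_a", True, True), ("group_a", True, False),
        ("group_b", True, True), ("group_b", True, True),
        ("group_b", True, False), ("group_b", True, False),
    ]
    rates = false_negative_rates(toy)
    print(rates, largest_gap(rates))
```

A large gap between groups is a signal to investigate the training data and model, not proof of a specific cause. Which gap size is acceptable is a clinical and policy judgment that the code cannot settle.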

6. How accurate is AI in diagnosing diseases?

Accuracy varies dramatically by application. For narrow, well-defined tasks like diabetic retinopathy screening, AI can achieve 95-97% accuracy—matching or exceeding specialist performance. For complex, multi-factorial diagnoses, AI is less accurate and functions primarily as decision support rather than autonomous diagnosis. The key insight: AI performs best in pattern recognition from large datasets (imaging, genomics) but struggles with nuanced clinical judgment requiring patient interaction and contextual understanding.

7. How much does healthcare AI cost to implement?

Costs vary enormously. Simple scheduling AI might cost $50,000-200,000 for a small hospital, while comprehensive systems for large healthcare networks can cost $5-50 million for initial implementation plus $2-10 million in annual operating costs. However, ROI can be substantial: Kaiser Permanente reported $320 million in annual value from a $180 million investment. Smaller facilities may access AI through cloud-based services with lower upfront costs.
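For readers who want to see the arithmetic behind the Kaiser Permanente figures, the sketch below computes a simple payback period and first-year return. It assumes the $180 million is a one-time investment and that the $320 million annual value is already net of operating costs; the article's sources do not state either point, so treat the result as a back-of-envelope estimate only.

```python
def payback_years(investment, annual_value):
    """Years needed for cumulative annual value to cover the investment."""
    if annual_value <= 0:
        raise ValueError("annual_value must be positive")
    return investment / annual_value


def first_year_return(investment, annual_value):
    """Net first-year gain as a fraction of the investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (annual_value - investment) / investment


if __name__ == "__main__":
    # Figures in millions of dollars, taken from the paragraph above.
    investment = 180
    annual_value = 320
    print("Payback period (years):", payback_years(investment, annual_value))
    print("First-year return:", first_year_return(investment, annual_value))
```

Under these assumptions the investment pays for itself in a little over half a year. If the annual value figure is gross rather than net, subtracting operating costs first would lengthen the payback period.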

8. Can patients opt out of AI-assisted care?

This varies by healthcare system and jurisdiction. Progressive systems offer granular consent allowing patients to decline certain AI applications while accepting others. However, purely administrative AI (scheduling, billing) typically operates under assumed consent. The EU AI Act and similar regulations increasingly require meaningful patient choice. Patients concerned about AI should explicitly discuss preferences with their healthcare providers and request “AI-free” care pathways where available.

9. What happens if AI makes a mistake in my medical care?

Liability frameworks for AI errors are still evolving. Generally, healthcare providers bear ultimate responsibility for patient care, even when using AI decision support (the “human-in-the-loop” principle). However, technology vendors may share liability if AI systems have defects or inadequate warnings. Patients affected by AI errors have the same rights as for any medical error: explanation, corrective care, and potential legal recourse. Healthcare systems implementing AI should have clear error protocols and transparent disclosure practices.

10. Will AI make healthcare more expensive or more affordable?

The answer is unclear and likely “both.” AI implementation requires substantial investment, potentially increasing costs initially or for certain procedures. Over time, though, administrative automation, efficiency improvements, and error reduction could significantly lower costs. Early evidence suggests a net positive: Kaiser Permanente’s $320 million annual value, Cleveland Clinic’s reduced ICU stays, and the NHS’s improved emergency department efficiency all demonstrate cost benefits. Still, if AI primarily benefits wealthy institutions, overall system costs could rise due to widened disparities. Policy and investment choices will determine which scenario materializes.

Sources & References

This article draws on peer-reviewed research, government reports, international organization guidance, corporate disclosures, and healthcare industry analysis. Key sources include:

International Organizations:

- World Health Organization (WHO), “Ethics and Governance of Artificial Intelligence for Health,” Digital Health Technical Advisory Group Report, 2024

- Organisation for Economic Co-operation and Development (OECD), “AI Principles and Health Data Governance Framework,” AI Policy Observatory, 2024

- WHO Digital Health Atlas and Global Digital Health Monitor databases

Healthcare Research & Industry Analysis:

- Healthcare Information and Management Systems Society (HIMSS), “Annual Healthcare IT Survey 2024”

- Deloitte, “Global Health Care Outlook 2024”

- McKinsey & Company, “The State of AI in Healthcare” (multiple reports 2023-2024)

- American Medical Association (AMA), “Patient Perspectives on AI in Healthcare Survey,” 2024

- Pew Research Center healthcare technology surveys

Academic & Medical Journals:

- JAMA Network Open, “Patient Control and Healthcare AI Trust,” 2024

- The Lancet, “Gender Disparities in Diagnostic AI Accuracy,” 2024

- Science, “Dissecting Racial Bias in an Algorithm Used to Manage the Health of Populations,” 2019 (landmark study, still widely referenced)

- Nature Medicine, multiple articles on healthcare AI accuracy and implementation

- Stanford AI in Healthcare Initiative research publications

Regulatory & Policy Sources:

- European Union AI Act official texts and guidance (2024)

- U.S. Food and Drug Administration (FDA), “Artificial Intelligence/Machine Learning Framework for Medical Devices,” 2024

- U.S. Department of Health and Human Services, HIPAA guidance and breach reports

- UK National Institute for Health and Care Excellence (NICE), AI evidence standards

- Various national healthcare regulatory agency publications

Healthcare System Reports:

- Mayo Clinic internal reports and public presentations on sepsis detection AI

- Kaiser Permanente administrative automation outcomes reports

- NHS England AI triage pilot program evaluations

- Singapore General Hospital diabetic retinopathy screening program results

- Cleveland Clinic and Mercy Health publicly disclosed AI implementation data

Technology & Infrastructure:

- Epic Systems, Cerner, and other healthcare IT vendor technical documentation

- Healthcare cybersecurity incident reports (US DHHS, various sources)

- Medical device standards organizations (DICOM, HL7 FHIR)

Additional Context:

- Related Sezarr Overseas News coverage of AI regulation, infrastructure, cybersecurity, and applications in other sectors

Note on Methodology: Where specific statistics or claims are cited, they are drawn from the above sources. Forward-looking statements about 2026 and beyond represent analysis of current trends and expert projections, not certainties. Healthcare AI is a rapidly evolving field; information is current as of November 2025 but will require ongoing updates.

About This Article

Author: Sezarr Overseas Editorial Team

Published: November 14, 2025

Last Updated: November 14, 2025

Reading Time: 14 minutes

Category: Healthcare Technology, Artificial Intelligence, Digital Health

Related Topics: AI Ethics, Healthcare Policy, Digital Transformation

Comprehensive Disclaimer

Not Medical Advice: This article provides analysis of healthcare technology trends and policy. It does not constitute medical advice, diagnosis, treatment recommendations, or clinical guidance. Readers should consult qualified healthcare professionals for all medical decisions.

Industry Analysis: Content focuses on administrative systems, operational technology, and policy frameworks rather than clinical applications. References to clinical AI are for context only and should not be interpreted as endorsements or recommendations.

Forward-Looking Statements: Projections about 2026 and beyond represent analysis of current trends, expert opinions, and available research. Actual developments may differ significantly due to technological breakthroughs, regulatory changes, economic factors, public health events, or other unpredictable variables.

Regional Variation: Healthcare AI regulations, adoption rates, and implementations vary dramatically across countries and jurisdictions. Information presented may not apply to all regions. Readers should consult local regulations and healthcare providers about AI use in their specific context.

Rapidly Evolving Field: Healthcare AI technology and policy evolve extremely rapidly. Information current as of November 2025 may become outdated quickly. This article will be updated periodically, but readers should verify current information for time-sensitive decisions.

Data Sources: While we strive for accuracy, healthcare data comes from multiple sources with varying methodologies. Statistics presented represent best available information but may not be directly comparable across studies. We encourage readers to review original sources for complete context.

No Guarantees: Healthcare AI success stories presented do not guarantee similar results in other contexts. Implementation outcomes depend on numerous factors including organizational capabilities, patient populations, existing infrastructure, and local conditions.

Privacy & Security: While this article discusses data protection measures, no system is perfectly secure. Readers should carefully evaluate privacy policies and security practices of healthcare providers and technology vendors.

Vendor Neutrality: Mentions of specific healthcare systems, technology vendors, or products are for illustrative purposes only and do not constitute endorsements. Sezarr Overseas News maintains editorial independence and receives no compensation from companies mentioned.

Professional Consultation: Healthcare administrators, policy makers, and technology professionals should consult qualified experts—including legal counsel, healthcare IT specialists, and regulatory advisors—before making decisions based on information in this article.

Liability Limitations: Sezarr Overseas News provides information for educational purposes and is not liable for actions taken based on article content. Healthcare AI implementation carries risks requiring professional evaluation and ongoing monitoring.