Introduction

By 2026, AI co-pilots—generative and assistive models embedded directly into workplace applications—will have moved beyond the hype cycle into practical, measurable business value. Unlike earlier automation waves that simply replaced discrete manual tasks, AI co-pilots augment everyday jobs rather than replace them, working alongside humans to amplify creativity, accelerate decision-making, and handle repetitive cognitive work that once consumed hours of professional time.

The question for organizations is no longer whether to adopt AI co-pilots, but how to implement them responsibly while capturing productivity gains, managing workforce transitions, and navigating an evolving regulatory landscape spanning the EU AI Act, emerging US state regulations, and ethical considerations around algorithmic decision-making.

According to a 2024 study by researchers at Princeton, MIT, Microsoft, and Wharton covering 4,867 developers, AI co-pilot users completed 26% more tasks on average, making it one of the largest controlled field experiments on workplace AI to date. Federal Reserve research finds that workers using generative AI save 5.4% of their weekly work hours, equivalent to about 2.2 hours per 40-hour week. These gains are reshaping how organizations think about workforce planning, compensation, and the future of professional work.

This comprehensive analysis examines how AI co-pilots redefine work across knowledge, creative, and operational roles, what successful implementations reveal about best practices, how companies should manage organizational transitions, and practical frameworks for capturing productivity while protecting employee welfare and ensuring regulatory compliance.

1. What is an AI Co-pilot?

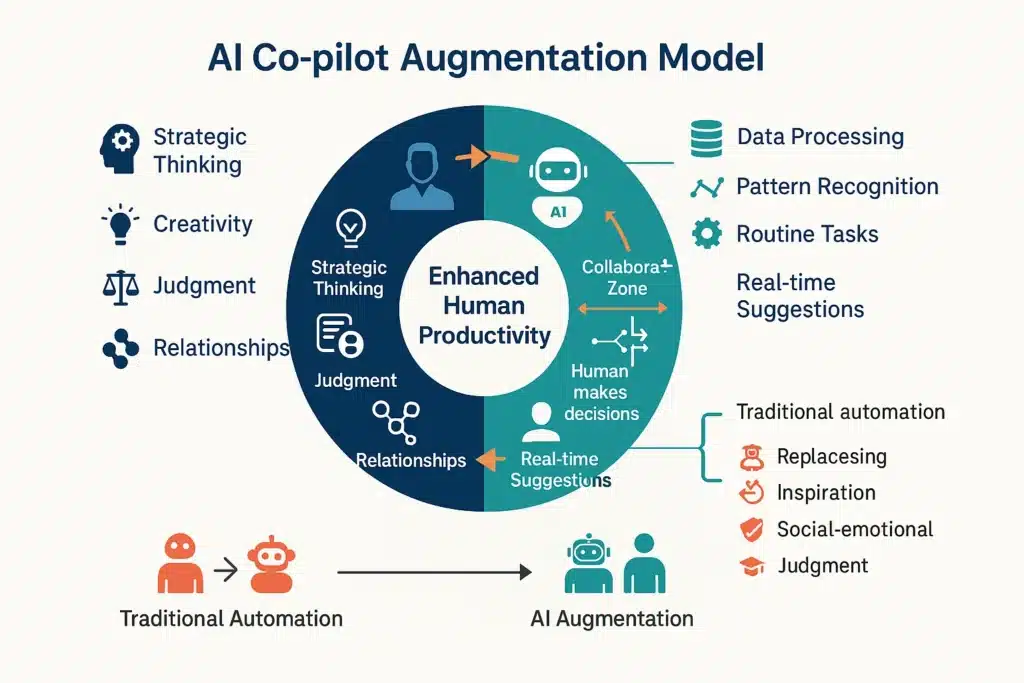

An AI co-pilot is an assistive artificial intelligence system embedded directly into a worker’s tools—a contextual model that helps summarize documents, draft messaging, generate code snippets, analyze datasets, or automate routine administrative tasks. Unlike fully autonomous systems that operate independently, AI co-pilots work alongside humans with intentional design for human-in-the-loop controls ensuring quality, safety, and accountability.

Core Characteristics of AI Co-pilots

Contextual Awareness: Modern AI co-pilots understand the specific task context. They know whether you’re writing code, drafting a legal brief, analyzing financial data, or creating marketing content, and adjust their assistance accordingly.

Real-Time Collaboration: Rather than batch processing, co-pilots provide immediate suggestions, corrections, and alternatives as users work, creating a genuine collaboration dynamic where humans guide strategy and AI handles execution details.

Learning from Usage: Advanced co-pilot systems learn from user preferences, frequently used phrases, common workflows, and feedback loops, becoming more personalized over time while maintaining privacy guardrails.

Multi-Modal Capability: Leading co-pilots integrate text, code, images, data visualizations, and voice, enabling them to assist across diverse work modalities rather than single-function support.

Evolution from Automation to Augmentation

Previous automation technologies typically replaced human tasks entirely:

- Robotic Process Automation (RPA) took over repetitive data entry

- Email filters automatically sorted messages

- Chatbots handled simple customer inquiries

AI co-pilots represent a fundamental shift toward human augmentation:

- They enhance rather than replace human judgment

- They accelerate rather than eliminate creative processes

- They amplify rather than substitute domain expertise

This distinction matters profoundly for workforce implications. As explored in our analysis of how AI co-pilots are transforming employment models, the pattern is consistent: organizations that treat AI as a productivity multiplier rather than headcount replacement achieve superior outcomes and employee satisfaction.

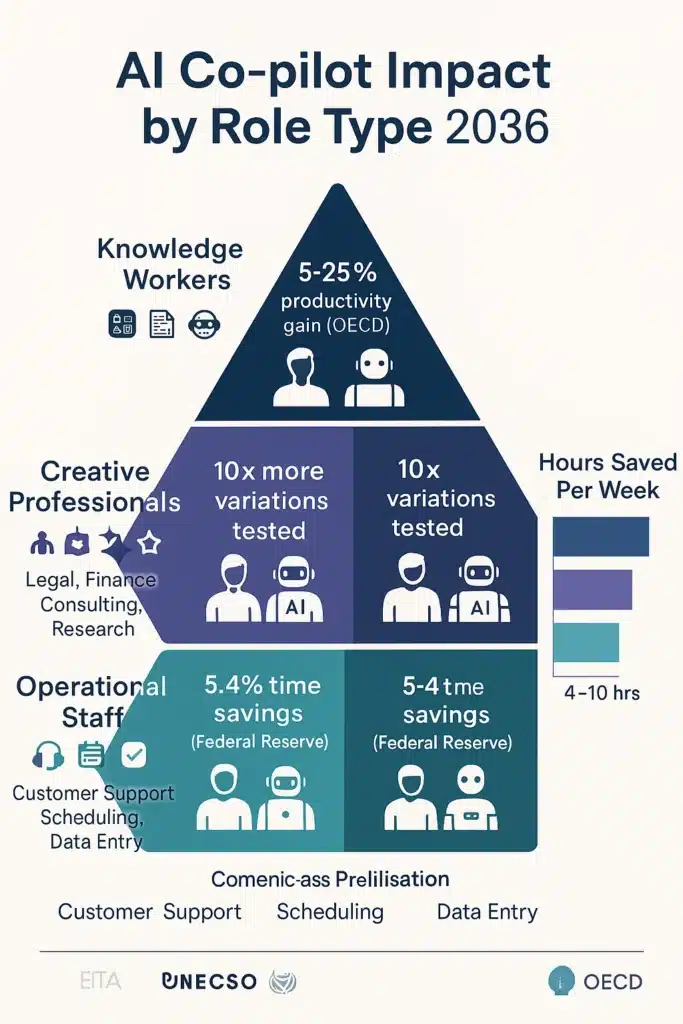

2. Where Co-pilots Matter Most: Role Taxonomy

AI co-pilots in 2026 deliver measurable value across three broad categories of professional roles, each with distinct implementation patterns and productivity gains.

Knowledge Workers: Legal, Finance, Consulting, Research

Core Applications:

- Document synthesis and summarization (reducing 100-page reports to key insights)

- Initial draft creation (legal briefs, financial analyses, consulting decks)

- Data analysis and pattern recognition (anomaly detection, trend identification)

- Research acceleration (literature review, precedent identification, market analysis)

Productivity Evidence: OECD research confirms knowledge workers experience 5-25% productivity improvements when AI tools handle document analysis and initial draft creation, freeing professionals to focus on strategy, judgment, and client relationships.

Real Example (Financial Services): In financial analysis, AI co-pilots surface anomaly summaries and suggest next steps for analysts, reducing investigation time from hours to minutes. Healthcare shows a similar pattern: as covered in our analysis of AI in healthcare 2026, pattern recognition and decision support systems are transforming diagnostic workflows.

For deeper context, our analysis of AI in healthcare 2025 diagnosis workflows reveals implementation patterns that parallel those in finance and other data-intensive professions.

Creative & Marketing Professionals

Core Applications:

- Content ideation and brainstorming (generating 50 headline variations in seconds)

- Copy iteration and A/B testing (creating personalized variations at scale)

- Visual concept generation (mood boards, design alternatives, layout suggestions)

- Campaign performance prediction (AI models forecasting engagement before launch)

Productivity Evidence: Marketing teams using AI co-pilots report the ability to test 10X more creative variations in the same timeframe, while maintaining or improving quality through human curation of AI-generated options.

Industry Transformation: As evidenced by platforms like Google Gemini Enterprise competing with Microsoft Copilot for creative workspace integration, the AI co-pilot market for creative professionals has matured rapidly with enterprise-grade features, collaborative capabilities, and brand safety controls.

Operational & Routine Task Workers

Core Applications:

- Customer support automation (handling straightforward inquiries, escalating complex issues)

- Scheduling and calendar management (optimizing meetings across time zones, preferences)

- Data entry and verification (extracting information from documents, validating accuracy)

- Email triage and response drafting (categorizing messages, suggesting replies)

Productivity Evidence: Federal Reserve data shows that workers using generative AI save 5.4% of their work hours on average. In customer support, the technology handles straightforward inquiries (60-70% of volume) and routes nuanced situations to experienced staff.

Key Success Factor: Operational roles see highest co-pilot ROI when AI handles the repetitive 70% of work, allowing humans to focus on the complex 30% requiring judgment, empathy, and contextual understanding.

3. Impact on Productivity and Job Design

Companies implementing AI co-pilots strategically report significant, measurable productivity improvements, and a growing body of rigorous research, including randomized controlled trials, supports these reports. However, these gains materialize only when organizations redesign work processes rather than simply layering AI onto broken workflows.

Verified Productivity Research

Princeton/MIT/Microsoft/Wharton Study (2024)

- Sample: 4,867 developers across three randomized controlled trials

- Platform: GitHub Copilot

- Finding: 26.08% average increase in completed pull requests per week

- Variation: Junior developers saw 21-40% gains; senior developers 7-16%

- Duration: Four to seven months of observation

- Publication: Released as a working paper in 2024

Federal Reserve Bank of St. Louis (February 2025)

- Sample: Nationally representative survey of US workers

- Finding: 5.4% average weekly time savings for generative AI users

- Translation: 2.2 hours saved per 40-hour work week

- Sector Variation: Computer/mathematics workers (12% of hours using AI, 2.5% time saved); personal services (1.3% usage, 0.4% saved)

- Adoption Rate: 28% of US workers using generative AI at work in November 2024
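The 2.2-hour figure is straightforward arithmetic: 5.4% of a 40-hour week is 2.16 hours. The short Python sketch below makes the conversion reusable. The function name is illustrative, and applying the sector percentages to a 40-hour week is an assumption made only for comparison.

```python
def hours_saved_per_week(share_saved: float, weekly_hours: float = 40.0) -> float:
    """Convert a fractional time saving (0.054 means 5.4%) into hours per week."""
    if not 0.0 <= share_saved <= 1.0:
        raise ValueError("share_saved must be between 0 and 1")
    return share_saved * weekly_hours


# Headline St. Louis Fed figure for generative AI users: 0.054 * 40 = 2.16 hours.
print(round(hours_saved_per_week(0.054), 1))  # 2.2

# Sector figures reported above, applied to a 40-hour week for comparison (assumption).
sector_savings = {"computer/mathematics": 0.025, "personal services": 0.004}
for sector, share in sector_savings.items():
    print(f"{sector}: {hours_saved_per_week(share):.2f} hours/week")
```

Keeping the week length as a parameter lets teams plug in their own contracted hours instead of the 40-hour convention.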

OECD Research (July 2025)

- Scope: Experimental studies across customer support, software development, consulting

- Finding: 5-25% efficiency gains depending on task type

- Mechanism: AI handles document analysis, initial drafts, routine responses

- Critical Factor: Effectiveness depends on implementation quality, training, task-AI fit

Upwork Research Institute (2024)

- Finding: 40% average productivity boost reported by employees using AI

- Attribution: 25% from tool improvements, 22% from self-directed learning, 22% from employer training, 31% from experimentation

- Implication: Continuous learning culture essential for sustained gains

The Productivity Paradox: Why Many Implementations Fail

Despite compelling research, many organizations see minimal productivity gains. Common failure patterns:

1. Technology Layering

- Adding AI to dysfunctional processes automates inefficiency

- Example: AI drafts poor proposals faster, but proposals still fail due to misaligned strategy

- Solution: Process redesign before AI deployment

2. Insufficient Training

- Employees receive tool access without learning effective prompting, model evaluation, or judgment skills

- Result: Low adoption (30-40% of developers chose not to use GitHub Copilot despite free access)

- Solution: Structured 2-12 week training programs before rollout

3. Missing Metrics

- Organizations measure activity (emails sent, code committed) rather than outcomes (deals closed, bugs resolved)

- AI increases activity without improving results

- Solution: Outcome-based KPIs aligned with business goals

4. One-Size-Fits-All Deployment

- Same AI tool forced on all roles regardless of task fit

- Engineers benefit from code generation; sales teams see minimal value

- Solution: Role-specific co-pilot selection and configuration

Key Redesign Principles for Productivity

Redefine Outcomes, Not Inputs: Measure value by customer outcomes and business impact rather than hours worked or tasks completed. Companies tracking output quality and problem resolution see sustained productivity gains; those fixated on activity metrics miss transformative potential.

Example: A marketing team stops measuring “campaigns launched” and starts measuring “qualified leads generated per campaign dollar,” which steers its AI co-pilot use toward quality over quantity.

Introduce Verification Steps: Humans validate AI co-pilot outputs for high-stakes decisions. GitClear’s 2024 research found a 4x increase in code cloning with heavy AI assistant usage, indicating quality erosion when developers blindly accept suggestions. Verification layers prevent this degradation.

Critical contexts requiring human verification:

- Legal documents with liability implications

- Financial analyses informing investment decisions

- Medical diagnoses affecting patient care

- Code controlling safety-critical systems

- Customer communications representing brand
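One way to enforce verification is a routing rule that holds AI output for human sign-off whenever the task falls in one of the critical contexts above. The Python sketch below is hypothetical: the context labels, `AIOutput`, and `route_output` are illustrative names rather than part of any co-pilot product’s API, and the choice to treat unrecognized contexts as high-stakes is an assumed fail-safe policy.

```python
from dataclasses import dataclass

# Critical contexts from the list above; the labels are illustrative.
CRITICAL_CONTEXTS = {
    "legal_document",
    "investment_analysis",
    "medical_diagnosis",
    "safety_critical_code",
    "customer_communication",
}

# Contexts the organization has explicitly judged low-stakes (hypothetical).
LOW_STAKES_CONTEXTS = {"internal_note", "meeting_summary"}


@dataclass
class AIOutput:
    context: str
    text: str


def route_output(output: AIOutput) -> str:
    """Decide whether an AI output needs full human review or only spot checks."""
    if output.context in CRITICAL_CONTEXTS:
        return "human_review"
    if output.context in LOW_STAKES_CONTEXTS:
        return "spot_check"
    # Unknown contexts fail safe: treat them as high-stakes until classified.
    return "human_review"


print(route_output(AIOutput("legal_document", "Draft indemnity clause ...")))
```

Defaulting unknown work to review errs on the side of quality; the cost is extra review load until new task types are classified.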

Create AI Supervision Roles: Forward-thinking organizations establish new positions:

- Prompt Engineers: Optimize human-AI interactions for maximum effectiveness

- Model Verifiers: Assess AI output quality, identify patterns of error

- Ethics Reviewers: Monitor for bias, ensure responsible deployment

- Augmentation Specialists: Configure co-pilots for specific organizational needs

These emerging roles are detailed in our analysis of future roles and growth areas and mirror patterns across AI-augmented industries.

4. Reskilling & Organizational Change

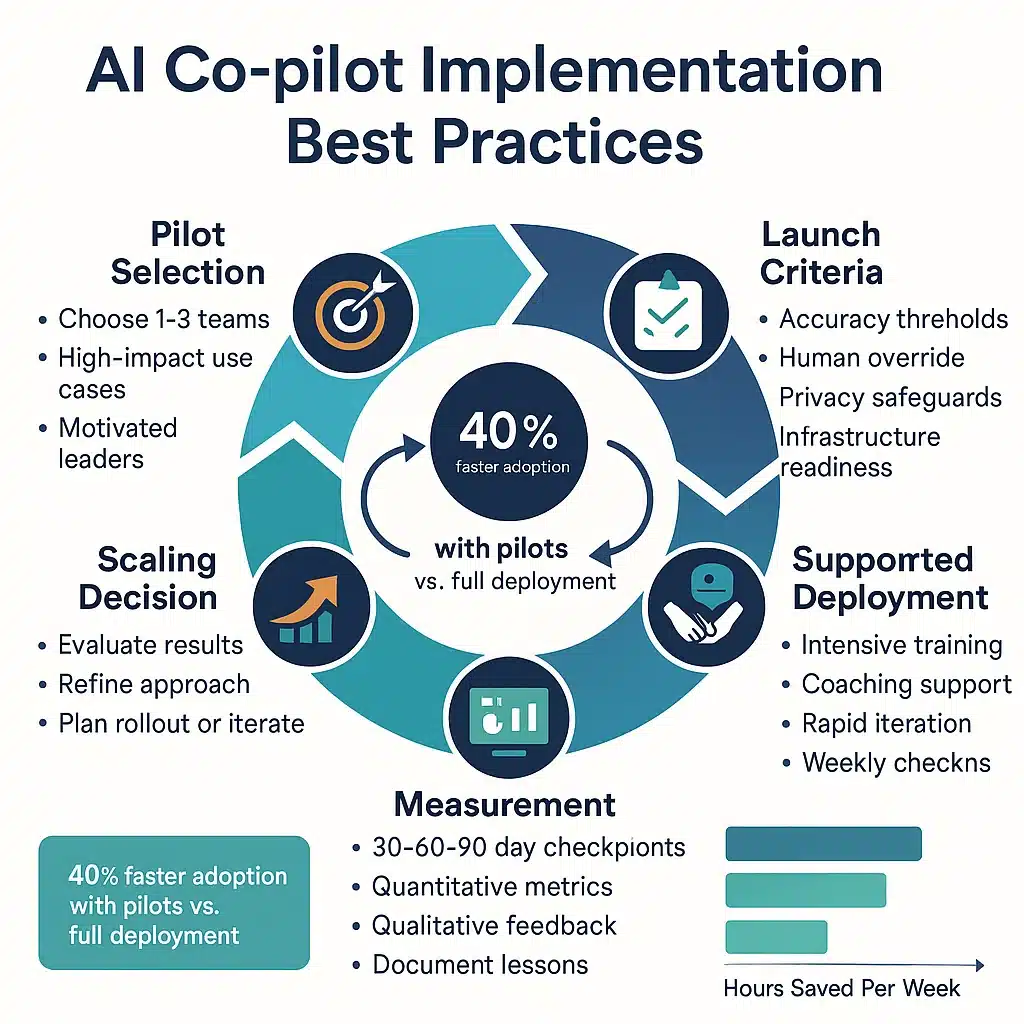

Reskilling is the linchpin of successful AI co-pilot adoption. Organizations implementing structured training programs see 40% faster adoption rates and 22% higher productivity gains compared to those without formal reskilling initiatives (Upwork Research Institute, 2024).

What Effective Reskilling Looks Like

Duration: 2-12 Weeks (Task-Dependent)

- Simple tools (email co-pilots): 2-4 weeks

- Moderate complexity (document drafting): 4-8 weeks

- Complex systems (code generation, data analysis): 8-12 weeks

Core Competencies to Develop:

1. Prompt Literacy

- Framing requests that elicit accurate, useful AI responses

- Understanding how different phrasings produce different outputs

- Recognizing when to provide context vs. when to keep prompts simple

- Iterating on prompts to refine results

Example Exercise: Give employees the same task (summarize a 50-page report) and compare results from generic prompts (“Summarize this document”) vs. specific prompts (“Extract the three main risks identified and the recommended mitigation for each, in bullet format”).
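To repeat the exercise across different reports, a facilitator might keep the specific prompt as a parameterized template. This is a hypothetical Python sketch: `build_specific_prompt` and its parameters are illustrative names, and the resulting strings would simply be pasted into whichever co-pilot the team uses.

```python
GENERIC_PROMPT = "Summarize this document."


def build_specific_prompt(items: str, count: int, per_item: str, output_format: str) -> str:
    """Turn the exercise's specific prompt into a reusable, parameterized template."""
    return (
        f"Extract the {count} main {items} identified and the "
        f"{per_item} for each, in {output_format} format."
    )


specific = build_specific_prompt("risks", 3, "recommended mitigation", "bullet")
print(GENERIC_PROMPT)
print(specific)
```

Varying one parameter at a time (for example, `output_format="table"`) helps participants see which part of the framing changes the output.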

2. Model Evaluation

- Understanding when to trust AI outputs vs. when to apply human judgment

- Recognizing common AI failure modes (hallucinations, outdated information, bias)

- Developing calibrated confidence in AI suggestions

- Knowing which tasks AI handles well vs. poorly

Example Exercise: Show examples of excellent, mediocre, and poor AI outputs for typical work tasks. Discuss what makes each effective or problematic. Build pattern recognition for quality assessment.

3. Domain Expertise Integration

- Combining AI suggestions with deep industry knowledge

- Using AI to accelerate routine work while reserving human time for complex judgment

- Teaching AI about organizational context, norms, and constraints

- Knowing when to override AI based on contextual factors AI cannot perceive

Example Exercise: Case studies where AI recommendations would have been reasonable generally but wrong in specific organizational context. Develop frameworks for when local knowledge trumps AI suggestions.

Research-Backed Training Insights

- 25% of productivity gains come from ongoing AI tool improvements

- 22% from self-directed learning

- 22% from employer-provided training

- 31% from experimentation and exploration

This distribution reveals that formal training is necessary but insufficient—organizations must create cultures supporting continuous learning and experimentation.

Successful Training Models:

Cohort-Based Learning

- Small groups (10-20 employees) progress together

- Peer learning and knowledge sharing

- Accountability and motivation from group dynamics

- 3-4 hour sessions weekly over 4-8 weeks

Role-Specific Modules

- Tailored to actual job tasks rather than generic AI concepts

- Engineers learn code co-pilots, marketers learn creative co-pilots

- Immediate applicability increases engagement and retention

Practice-Intensive Approach

- 70% hands-on practice, 30% lecture/demonstration

- Real work scenarios rather than artificial exercises

- Mistakes encouraged in safe training environment

- Gradual complexity increase as confidence builds

Continuous Support

- “Office hours” where employees can get help with specific AI challenges

- Internal knowledge base of effective prompts and use cases

- Champions/power users who can mentor colleagues

- Regular refreshers as tools update and capabilities expand

Organizational Change Management

Implementation Roadmap (6-Month Pilot)

Month 1: Planning & Preparation

- Select pilot department/team (10-50 people)

- Choose co-pilot tool(s) aligned with work types

- Design role-specific training curriculum

- Establish baseline productivity metrics

- Communication campaign explaining “why” and “what’s in it for me”

Month 2: Intensive Training

- Week 1: Prompt literacy fundamentals

- Week 2: Model evaluation and quality assessment

- Week 3: Role-specific applications

- Week 4: Advanced techniques and troubleshooting

Months 3-4: Supported Deployment

- Employees use co-pilots on real work with coaching support

- Weekly check-ins to address challenges

- Rapid iteration on processes not working well

- Early wins celebrated and shared

- Metrics tracked but not used punitively

Month 5: Measurement & Refinement

- Compare productivity metrics to baseline

- Employee surveys on experience, challenges, suggestions

- Identify high performers to become champions

- Refine training based on common struggle points

- Assess readiness for broader rollout

Month 6: Scaling Preparation

- Document lessons learned

- Finalize training materials

- Develop scaling plan for enterprise-wide deployment

- Calculate ROI to justify continued investment

- Begin champion training for second-wave support

Educational institutions are pioneering similar adaptive learning approaches. Our comprehensive analysis of AI in education 2026 and smart tutors explores how personalized learning methodologies from academic settings translate to corporate reskilling programs, with proven frameworks for accelerating skill acquisition.

5. Compensation, Benefits, and Legal Considerations

As AI co-pilots fundamentally alter work content, pace, and skill requirements, compensation frameworks and legal structures must evolve to match. Organizations face complex compliance requirements spanning multiple jurisdictions.

EU AI Act Compliance Requirements

The EU AI Act entered into force on August 1, 2024, establishing the world’s first comprehensive legal framework for AI systems. Its obligations apply in stages, and several already affect organizations deploying AI co-pilots.

Key Dates and Requirements:

February 2, 2025: Prohibitions Effective

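- Banned AI practices include social scoring, emotion recognition in workplaces and educational institutions, and real-time remote biometric identification in publicly accessible spaces for law enforcement (with narrow exceptions)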

- Organizations must audit existing AI systems for prohibited uses

- Non-compliance penalties: Up to €35 million or 7% of global annual turnover
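Under Article 99 of the Act, the cap for prohibited-practice violations is the higher of the two amounts for most companies and the lower of the two for SMEs and start-ups, with turnover measured as total worldwide annual turnover for the preceding financial year. A minimal Python sketch of that ceiling calculation follows. The function name is illustrative, and actual fines are set case by case below this cap.

```python
FIXED_CAP_EUR = 35_000_000.0
TURNOVER_RATE = 0.07


def max_prohibited_practice_fine(worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound on fines for prohibited AI practices (EU AI Act, Art. 99)."""
    if worldwide_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_cap = TURNOVER_RATE * worldwide_turnover_eur
    # Most undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


# A company with EUR 1 billion turnover: 7% (about EUR 70 million) exceeds the fixed cap.
print(f"{max_prohibited_practice_fine(1_000_000_000):,.0f}")
```

The crossover sits at EUR 500 million in turnover: above it the percentage-based cap dominates for large companies.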

August 2, 2025: General-Purpose AI (GPAI) Obligations

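- Providers must maintain technical documentation and publish a summary of the content used to train their models

- Providers of models with systemic risk must assess and mitigate those risks

- Implement transparency measures for downstream users

- These obligations fall on model providers, but because most workplace co-pilots are built on general-purpose models, deploying organizations should confirm their vendors’ compliance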

August 2, 2026: High-Risk AI System Rules

- Comprehensive compliance including conformity assessments

- Risk management systems and quality management

- Human oversight mechanisms

- Detailed technical documentation

- Post-market monitoring and incident reporting

What This Means for AI Co-pilots:

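Most workplace AI co-pilots are classified as “limited risk” or “minimal risk” under the EU AI Act. The exception is AI used for employment decisions such as recruitment, task allocation, or performance monitoring and evaluation, which the Act lists as high-risk. Either way, organizations should: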

- Establish complete AI inventories with risk classifications

- Prepare technical documentation for each system

- Implement copyright and data protection requirements

- Train employees on AI system limitations and proper usage

- Document decision-making processes where AI assists
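An AI inventory can begin as one structured record per system. The Python sketch below is hypothetical: the field names and the sample vendor are illustrative, the tier labels follow the commonly used four-level framing of the Act, and `gaps()` only checks for the documentation and training items listed above rather than assessing legal compliance.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystemRecord:
    name: str
    vendor: str
    use_case: str
    risk_tier: RiskTier
    has_technical_docs: bool = False
    staff_trained: bool = False
    decision_process_documented: bool = False

    def gaps(self) -> list[str]:
        """List open inventory items for this system."""
        issues = []
        if self.risk_tier is RiskTier.PROHIBITED:
            issues.append("prohibited use: decommission")
        if not self.has_technical_docs:
            issues.append("missing technical documentation")
        if not self.staff_trained:
            issues.append("staff not trained on limitations")
        if not self.decision_process_documented:
            issues.append("AI-assisted decision process undocumented")
        return issues


# Hypothetical entry: an email-drafting co-pilot from an illustrative vendor.
inventory = [
    AISystemRecord("Drafting co-pilot", "VendorA", "email drafting", RiskTier.MINIMAL,
                   has_technical_docs=True, staff_trained=True),
]
for record in inventory:
    print(record.name, record.gaps())
```

A spreadsheet works just as well at first; the point is that each system carries an explicit tier and a checkable list of open items.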

Our detailed EU AI Act 2025 compliance checklist provides comprehensive guidance for multinational organizations navigating these requirements.

US Regulatory Landscape

While the United States lacks comprehensive federal AI legislation, 38 states adopted more than 100 AI-related laws in the first half of 2025, creating a complex compliance patchwork.

Major State Regulations:

Colorado AI Act (Effective June 30, 2026)

- Requires developers and deployers of high-risk AI systems to use “reasonable care” to protect consumers from algorithmic discrimination

- Applies to AI systems used in consequential decisions (employment, lending, housing, healthcare, education, legal)

- Annual impact assessments required

- Consumer rights to explanation of AI decisions

- Penalties: Up to $20,000 per violation

California SB 53 – Transparency in Frontier Artificial Intelligence Act (Signed September 2025)

- Applies to companies with large AI models (>$500M revenue, substantial compute)

- Safety and transparency requirements

- Best practices documentation

- Risk assessment and mitigation

- Incident reporting mechanisms

- Whistleblower protections

New York Proposed Legislation (Pending)

- Similar to California but broader scope

- Would require transparency from all AI developers regardless of size

- Public safety protocols

- Civil penalties for violations

Trump Administration Executive Order (January 23, 2025)

- “Removing Barriers to American Leadership in Artificial Intelligence” (Executive Order 14179)

- Followed the January 20, 2025 revocation of Biden-era Executive Order 14110, including its safety reporting requirements

- Signals a more permissive federal approach

- Emphasizes American competitiveness over safety regulation

For organizations navigating this landscape, our analysis of AI regulation in the US 2025 and the federal vs. state divide provides strategic guidance on multi-jurisdiction compliance.

Cybersecurity and Data Protection

As AI co-pilots handle sensitive corporate and customer data, organizations must implement robust security protocols to prevent:

- Data leakage: Employees inadvertently sharing confidential information in AI prompts

- Model poisoning: Adversaries manipulating AI training or fine-tuning

- Prompt injection attacks: Malicious inputs causing AI to behave inappropriately

- Intellectual property theft: Competitors accessing proprietary AI-assisted work
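A common first line of defense against data leakage is to scan prompts before they leave the organization. The Python sketch below masks email addresses and long digit runs (such as account numbers) using the standard `re` module. The patterns are illustrative assumptions and far from complete, which is why production systems typically rely on dedicated data-loss-prevention tooling.

```python
import re

# Illustrative patterns only; real deployments need broader, tested detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "NUMBER": re.compile(r"\b\d{8,}\b"),  # runs of 8+ digits, e.g. account numbers
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Mask sensitive-looking substrings and report which pattern types matched."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


clean, hits = redact("Contact jane.doe@example.com about account 1234567890")
print(clean, hits)
```

Returning the matched categories alongside the cleaned prompt makes it easy to log near-misses for the audit trail without storing the sensitive values themselves.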

Our comprehensive guide to AI cybersecurity 2025 covers model security, data protection frameworks, and defense strategies against emerging threats specific to AI systems.

Essential Security Measures:

Data Governance

- Classify data sensitivity levels (public, internal, confidential, restricted)

- Implement role-based access controls for AI systems

- Audit trails for all AI interactions with sensitive data

- Clear policies on what data can/cannot be shared with AI
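The four sensitivity levels above map naturally onto an ordered policy check. In this Python sketch, each role has a maximum level it may share with a co-pilot, and every decision is appended to an audit log. The role names, their ceilings, and the rule that restricted data never goes to the co-pilot are hypothetical policy choices, not a standard.

```python
from datetime import datetime, timezone
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical role-based ceilings: the most sensitive level each role may share.
ROLE_CEILING = {
    "analyst": Sensitivity.INTERNAL,
    "legal_counsel": Sensitivity.CONFIDENTIAL,
}

AUDIT_LOG: list[dict] = []


def may_share(role: str, level: Sensitivity) -> bool:
    """Check a role's ceiling, block restricted data outright, and log the decision."""
    ceiling = ROLE_CEILING.get(role, Sensitivity.PUBLIC)  # unknown roles: public data only
    allowed = level <= ceiling and level < Sensitivity.RESTRICTED
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "level": level.name,
        "allowed": allowed,
    })
    return allowed
```

Using `IntEnum` keeps the levels ordered, so a single comparison expresses “this level or less sensitive.”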

Vendor Assessment

- Where is data processed and stored (cloud regions)?

- What security certifications does vendor maintain (SOC 2, ISO 27001)?

- Who has access to prompts and outputs?

- What happens to data after processing (retention policies)?

- Is data used to train models serving other customers?

Employee Training

- Recognizing sensitive information before sharing with AI

- Understanding AI security risks specific to role

- Incident reporting procedures

- Regular phishing and social engineering exercises involving AI scenarios

Compensation and Performance Management

Emerging Best Practices:

Adjust Baseline Expectations During Transition

- 90-day “learning curve” period where productivity metrics don’t affect reviews

- Focus on growth and skill development rather than absolute output

- Recognize early adopters who help refine implementations

Measure Outcomes, Not Just Output

- Quality of work products, not just quantity

- Customer satisfaction and retention

- Strategic value of contributions

- Innovation and process improvements

Address the “AI Productivity Paradox” in Reviews

- Employees working smarter (using AI effectively) shouldn’t be penalized for completing work in less time

- Distinguish between “busy work” and high-value contributions

- Reward effectiveness and efficiency gains

Update Employment Contracts

- Clarify intellectual property ownership of AI-assisted work

- Define acceptable AI usage policies

- Establish confidentiality obligations around AI systems

- Address worker classification if AI substantially changes job nature

Compensation Philosophy Questions:

- Do we maintain salaries when AI increases productivity 30%?

- Do we share productivity gains with employees or capture for shareholders?

- How do we prevent “AI speedup” culture where employees are expected to do infinitely more?

- What happens to roles where AI eliminates 70% of current work?

Leading organizations are experimenting with:

- Skill-based pay: Compensation tied to AI proficiency levels

- Productivity bonuses: Shared savings from documented AI-driven efficiency

- Career pathways: Clear progression into AI supervision and governance roles

- Hybrid roles: Combining traditional expertise with AI augmentation skills

6. Management Best Practices

Organizations achieving the highest ROI from AI co-pilots follow disciplined implementation frameworks rather than rushing to enterprise-wide deployment.

Test-and-Learn Approach

Start Small: 1-3 Pilot Programs per Function

- Select teams with motivated leaders and willing participants

- Choose high-impact use cases where success is measurable

- Provide intensive support during pilot phase

- Document lessons learned rigorously

- Scale only after demonstrating value

Measure at 30-90 Day Intervals

- Baseline metrics before AI introduction

- Checkpoint at 30 days (initial adoption challenges)

- Assessment at 60 days (early results emerging)

- Evaluation at 90 days (decision point for broader rollout)
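The 30/60/90-day cadence can be scheduled and scored mechanically. The Python sketch below computes the three checkpoint dates from a launch date and reports percentage change against the pre-launch baseline. The metric (tasks completed per week), the launch date, and the sample numbers are hypothetical.

```python
from datetime import date, timedelta

CHECKPOINTS = {30: "initial adoption", 60: "early results", 90: "rollout decision"}


def checkpoint_dates(launch: date) -> dict[int, date]:
    """Map each checkpoint (days after launch) to its calendar date."""
    return {days: launch + timedelta(days=days) for days in CHECKPOINTS}


def pct_change(baseline: float, current: float) -> float:
    """Percentage change of a metric relative to its pre-launch baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100.0


# Hypothetical pilot: 40 completed tasks per week before launch, 46 at day 90.
launch = date(2026, 1, 5)
for days, when in checkpoint_dates(launch).items():
    print(f"Day {days} ({CHECKPOINTS[days]}): {when.isoformat()}")
print(f"Change at day 90: {pct_change(40, 46):.1f}%")
```

Recording the baseline before launch matters: without it, the day-90 decision point has nothing to compare against.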

Industry Data: Companies using pilot teams see 40% faster adoption rates and 22% higher productivity gains compared to immediate full-deployment approaches (Upwork Research Institute, 2024).

Establish Clear Launch Criteria

Before Deployment:

Accuracy Thresholds

- Define minimum acceptable performance levels

- Customer-facing chatbots: 95%+ accuracy required

- Internal research tools: 85%+ sufficient

- High-stakes decisions (legal, medical, financial): 98%+ with human verification

- Test extensively against diverse scenarios before launch
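The thresholds above can be encoded as a pre-launch gate evaluated against a labeled test set. In this Python sketch the categories and percentages mirror the list, while the pass/fail result format and the helper names `accuracy` and `launch_decision` are assumptions. Note that clearing 98% does not remove the human-verification requirement for high-stakes use.

```python
THRESHOLDS = {
    "customer_facing_chatbot": 0.95,
    "internal_research_tool": 0.85,
    "high_stakes_decision": 0.98,
}
REQUIRES_HUMAN_VERIFICATION = {"high_stakes_decision"}


def accuracy(results: list[bool]) -> float:
    """Share of test cases the co-pilot answered correctly."""
    if not results:
        raise ValueError("need at least one evaluated test case")
    return sum(results) / len(results)


def launch_decision(category: str, results: list[bool]) -> str:
    """Compare measured accuracy with the category's minimum threshold."""
    acc = accuracy(results)
    threshold = THRESHOLDS[category]
    if acc < threshold:
        return f"blocked: {acc:.1%} < {threshold:.0%}"
    if category in REQUIRES_HUMAN_VERIFICATION:
        return f"approved with human verification ({acc:.1%})"
    return f"approved ({acc:.1%})"


# Hypothetical evaluation: 9 of 10 test cases answered correctly.
sample = [True] * 9 + [False]
print(launch_decision("internal_research_tool", sample))
print(launch_decision("customer_facing_chatbot", sample))
```

The same 90% result passes one gate and fails another, which is the point of setting thresholds per use case rather than globally.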

Human Override Mechanisms

- Easy escalation when AI suggests incorrect or inappropriate responses

- Clear visual indicators distinguishing AI vs. human contributions

- Ability to provide feedback that improves future suggestions

- No-penalty policy for rejecting AI recommendations

Privacy Safeguards

- Data handling protocols preventing sensitive information leakage

- Clear disclosure to employees about monitoring/logging

- Opt-out mechanisms where appropriate

- Regular privacy audits and compliance reviews

Infrastructure Considerations

The computational demands of running sophisticated AI co-pilots require substantial investment in data centers and processing capabilities. Organizations must balance on-premise vs. cloud deployment, latency requirements, and sustainability considerations.

Our analysis of AI infrastructure 2026, including data centers, chips, and sustainability challenges examines the hidden costs and strategic decisions in AI infrastructure that affect co-pilot performance, scalability, and total cost of ownership.

Measure Subjective and Objective Outcomes

Quantitative Metrics:

- Task completion speed

- Output volume (documents drafted, code committed, cases resolved)

- Error rates

- Customer satisfaction scores

- Time saved vs. baseline

Qualitative Factors:

- Employee satisfaction with AI tools

- Perceived cognitive load (is work easier or more complex?)

- Trust in AI recommendations

- Frustration levels and common pain points

- Adoption patterns (who uses AI heavily vs. not at all?)
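Adoption patterns are straightforward to quantify from usage logs. The Python sketch below computes the share of pilot participants who never used the co-pilot (the pattern the GitHub Copilot research highlights) and the median usage among those who did. The per-user session counts are hypothetical, and a real deployment would pull them from the tool’s own usage reporting.

```python
from statistics import median


def adoption_summary(sessions_per_user: dict[str, int]) -> dict:
    """Summarize non-adoption and typical usage from per-user session counts."""
    if not sessions_per_user:
        raise ValueError("no users in pilot")
    counts = list(sessions_per_user.values())
    adopters = [c for c in counts if c > 0]
    return {
        "users": len(counts),
        "non_adoption_rate": 1 - len(adopters) / len(counts),
        "median_sessions_among_adopters": median(adopters) if adopters else 0,
    }


# Hypothetical pilot of five people; two never opened the co-pilot.
print(adoption_summary({"ana": 0, "ben": 5, "chen": 12, "dev": 0, "eli": 3}))
```

Looking at adopters separately keeps a few heavy users from masking how many people opted out entirely.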

Critical Insight from GitHub Copilot Research:

The Princeton/MIT/Microsoft/Wharton study found that 30-40% of developers did not adopt GitHub Copilot despite free access and company encouragement. This highlights that access alone does not guarantee productive usage; human factors matter enormously.

Reasons developers cited for non-adoption:

- Didn’t trust AI-generated code quality

- Preferred to write code themselves to maintain understanding

- Found suggestions distracting or unhelpful

- Worried about over-reliance reducing skills

- Technical limitations (language support, accuracy issues)

Implication: Organizations must actively address adoption barriers rather than assuming tool availability drives usage. This requires understanding individual concerns, providing opt-out mechanisms without penalty, and recognizing that AI isn’t optimal for all workers or all tasks.

Maintain Transparency with Stakeholders

Internal Communication:

Publish a “How We Use AI” document explaining:

- Which tasks involve AI assistance vs. full automation

- How human oversight protects quality and fairness

- What data is collected and how it’s protected

- Employee rights regarding AI decisions

- Channels for reporting concerns or errors

- How AI impacts performance evaluation and compensation

Example Transparency Statement:

“At [Company], we use AI co-pilots to assist employees with routine tasks like document drafting, data analysis, and email management. All AI suggestions undergo human review before final decisions. We do not use AI for hiring, firing, or promotion decisions without human judgment. Employees can opt out of AI tools that make them uncomfortable. Your prompts and AI interactions are logged for security and improvement purposes but are never shared externally or used punitively.”

External Communication:

For customer-facing AI:

- Clear disclosure when interacting with AI vs. human

- Explanation of AI capabilities and limitations

- Easy escalation to human support

- Transparency about data usage

- Accountability mechanisms when AI errs

Building Trust:

Transparency builds trust and accelerates adoption while reducing resistance from employees concerned about job security, surveillance, or algorithmic management. Organizations that communicate openly about AI implementation see higher adoption rates and fewer labor relations conflicts than those deploying AI covertly.

7. Future Roles & Growth Areas

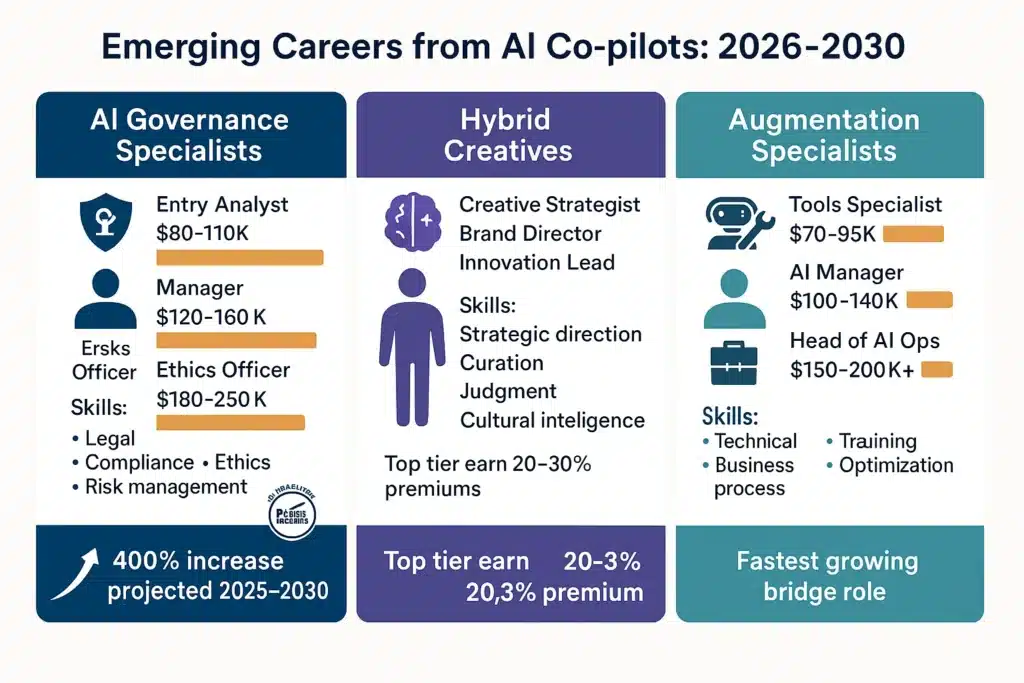

The AI co-pilot revolution is creating significant growth in three emerging job families that barely existed a decade ago and could represent millions of positions by 2030.

AI Overseers and Governance Specialists

Role Description: Professionals who monitor AI system performance, ensure compliance with evolving regulations, audit for bias and errors, and mitigate risks from algorithmic decision-making.

Key Responsibilities:

- Continuous monitoring of AI system outputs for quality degradation

- Regular bias audits across demographic subgroups

- Compliance management for EU AI Act, state regulations, industry standards

- Incident response when AI produces problematic outputs

- Policy development for responsible AI usage

- Vendor assessment and contract negotiation

Required Skills:

- Combination of legal knowledge, technical understanding, and ethical frameworks

- Statistical literacy for analyzing AI performance data

- Risk management expertise

- Strong communication for translating technical concepts to business stakeholders

- Change management capabilities

Compensation: Salaries carry a premium because demand outstrips supply. Typical ranges:

- Entry-level AI Governance Analyst: $80,000-110,000

- Mid-level AI Compliance Manager: $120,000-160,000

- Senior AI Ethics Officer: $180,000-250,000+

- Chief AI Officer (C-suite): $300,000-500,000+ depending on company size

Industry Hotspots:

Healthcare sectors are particularly aggressive in hiring these specialists, as detailed in our coverage of AI in healthcare 2025 and its impact on diagnosis workflows, given the high stakes of medical AI errors and strict regulatory requirements under HIPAA, FDA guidelines, and medical malpractice law.

Financial services firms are similarly investing heavily due to SEC oversight, fair lending requirements, and fiduciary duties to clients.

Hybrid Creative Professionals

Role Evolution:

As AI handles routine creative tasks (basic graphics, initial copy drafts, standard templates), human creativity shifts toward:

- Strategic creative direction and brand identity

- Emotional resonance and cultural sensitivity

- Complex storytelling and narrative arcs

- Innovative concepts that push boundaries

- Curation and refinement of AI-generated options

The New Creative Workflow:

Traditional: Brief → Human concepting (days/weeks) → Execution (days/weeks) → Revision (days) → Final delivery

AI-Augmented: Brief → Human strategy (hours) → AI generation of 50+ options (minutes) → Human curation (hours) → Refinement (hours) → Final delivery

Value Proposition:

The most valuable creative professionals will be those who:

- Master human+AI ideation cycles

- Use AI to rapidly test concepts

- Apply refined judgment to select the most impactful ideas

- Maintain an authentic voice that AI cannot replicate

- Push AI beyond obvious outputs through sophisticated prompting

Compensation Trends:

Early data suggests salary polarization in creative fields:

- Top-tier hybrid creatives commanding premium compensation (20-30% above traditional roles)

- Mid-tier creatives struggling if unable to differentiate from AI

- Entry-level positions declining as AI handles junior work

Success Stories:

Marketing agencies report that creative teams using AI co-pilots can:

- Serve 3-5X more clients with the same headcount

- Reduce campaign development time by 40-60%

- Test 10X more creative variations

- Maintain or improve campaign performance metrics

Augmentation Specialists

Role Description:

Internal product owners who configure AI co-pilots for specific organizational needs, train colleagues on optimal usage patterns, continuously optimize human-AI workflows, and serve as a bridge between technical capabilities and business requirements.

Why This Role Matters:

Off-the-shelf AI co-pilots require substantial customization to deliver maximum value:

- Integration with company-specific tools and databases

- Configuration for organizational processes and terminology

- Development of prompt libraries and best practices

- Ongoing optimization as AI capabilities evolve

- Troubleshooting when AI doesn’t work as expected

Key Responsibilities:

- Requirements gathering from business units

- Evaluation and selection of AI tools for specific use cases

- Prompt engineering and template development

- Training delivery and ongoing support

- Performance monitoring and continuous improvement

- Cross-functional collaboration with IT, legal, HR, operations

Required Skills:

- Strong technical proficiency without necessarily being a developer

- Deep understanding of business processes and pain points

- Excellent communication and training abilities

- Change management and stakeholder engagement

- Data analysis for measuring impact

- Curiosity and comfort with ambiguity

Career Pathways:

- Entry: AI Tools Specialist (individual contributor)

- Mid: AI Augmentation Manager (team lead)

- Senior: Head of AI Operations (department leadership)

- Executive: VP of AI Strategy (C-suite reporting)

Market Dynamics:

This role is experiencing explosive growth as organizations realize that:

- IT departments lack business context for effective AI deployment

- Business units lack technical skills for self-serve AI adoption

- Bridge roles with a blend of skills create disproportionate value

Salary Ranges:

- Entry-level: $70,000-95,000

- Mid-level: $100,000-140,000

- Senior: $150,000-200,000+

The Startup Ecosystem

Emerging AI co-pilot startups are pioneering innovative approaches to workplace augmentation. The startup ecosystem is particularly dynamic in emerging markets.

Our report on the rise of AI startups in Africa demonstrates how global innovation in AI-human collaboration tools is not only reshaping developed economies but also creating entirely new technology hubs and career opportunities in markets previously excluded from the AI revolution.

Emerging Specialization Areas:

Legal AI: Contract analysis, legal research, due diligence automation

Medical AI: Clinical decision support, medical record analysis, administrative automation

Financial AI: Investment research, risk analysis, regulatory compliance

Sales AI: Prospecting, proposal generation, objection handling

HR AI: Candidate screening, employee support, policy compliance

The Persistent Role of Human Teachers, Mentors, and Leaders

Despite technological advances, certain human capabilities remain irreplaceable in workplace contexts:

Relationship Building and Trust

- AI co-pilots cannot build genuine human connections

- Trust-based mentorship requires emotional intelligence AI lacks

- Complex negotiations depend on reading subtle human cues

- Leadership inspiration comes from authentic human presence

Strategic Judgment and Contextualization

- Understanding organizational politics and unwritten rules

- Recognizing when data points are misleading or context-dependent

- Making values-based decisions that prioritize long-term relationships over short-term optimization

- Navigating ambiguous situations without clear right answers

Innovation and Boundary-Pushing

- True innovation often comes from violating conventional patterns (which AI reinforces)

- Asking entirely new questions rather than optimizing existing processes

- Combining insights across unrelated domains in novel ways

- Taking calculated risks that look illogical to data-driven systems

Emotional Support and Development

- Coaching employees through career challenges and transitions

- Providing encouragement during setbacks and failures

- Recognizing and developing potential that doesn’t fit standard patterns

- Creating psychologically safe environments for growth

The Future of Work: Human + AI Partnership

The highest-performing organizations of 2026 and beyond will be those that:

- Invest in both AI capabilities and human development

- Create roles that leverage unique strengths of both humans and AI

- Build cultures where AI augments rather than replaces human judgment

- Maintain focus on outcomes that matter to humans (wellbeing, growth, meaning) rather than just metrics AI can optimize

8. Real-World Implementation Case Studies

Theory and research mean little without real-world validation. Let’s examine detailed case studies of AI co-pilot implementations, including successes, challenges, and lessons learned.

Case Study 1: Microsoft GitHub Copilot Enterprise Deployment

Background: Microsoft’s own deployment of GitHub Copilot across its global engineering organization, one of the largest and most thoroughly studied workplace AI implementations.

Scale:

- 5,000+ developers in initial randomized controlled trial (RCT)

- Later expanded to ~50,000 Microsoft engineers

- 7-month observation period for RCT

Implementation Approach:

Phase 1: Controlled Trial

- Random assignment to treatment (Copilot access) vs. control (no access)

- Minimal training provided intentionally to assess “natural” adoption

- Metrics tracked: Pull requests, commits, builds, code reviews

- Both quantitative and qualitative data collection

Phase 2: Supported Rollout

- Training programs developed based on trial learnings

- “Copilot Champions” designated in each team

- Best practices documentation created and shared

- Opt-out mechanisms for developers who preferred not to use Copilot

Results:

Productivity Gains:

- 26.08% average increase in completed pull requests

- 13.55% increase in commits

- 38.38% increase in builds

- Variation by experience level (junior saw 27-39% gains; senior 8-13%)

Adoption Patterns:

- 60-70% of developers actively used Copilot

- 30-40% chose not to adopt despite free access

- Junior developers 2.5X more likely to continue using after initial trial

- Acceptance rate: juniors 40%, seniors 30%

Unexpected Findings:

Quality Concerns: While productivity increased, independent analysis of industry-wide code data (GitClear 2024) found:

- Rising “code churn” (code added then quickly revised or removed), projected to double relative to a pre-AI baseline

- Higher rates of copy/paste patterns

- Suggestions that speed came partly at the expense of code maintainability

Response: Microsoft added features emphasizing code quality:

- Better code explanation capabilities

- Integration with testing frameworks

- Prompts encouraging developers to understand suggestions rather than simply accept them

Key Success Factors:

- Rigorous measurement enabling data-driven decisions

- Respect for developer autonomy (opt-out available without penalty)

- Continuous iteration based on feedback

- Investment in supporting infrastructure and training

Challenges:

- Resistance from senior developers who saw limited personal benefit

- Language and framework coverage gaps

- Learning curve for effective prompting

- Need to balance speed with code quality and understanding

Case Study 2: McKinsey & Company Strategy Consulting

Background: Global consulting firm known for analytical rigor implementing AI co-pilots to enhance consultant productivity in research, analysis, and client deliverable creation.

Scale:

- Pilot: 200 consultants across 5 offices

- Rollout: 10,000+ consultants globally

- Focus: Associate and Engagement Manager levels (mid-level professionals)

Implementation Approach:

Tool Selection:

- Evaluated 12 AI platforms against consulting-specific criteria

- Selected a combination of general-purpose (GPT-4) and specialized (financial analysis, market research) tools

- Custom internal platform integrating multiple AI capabilities

Training Program:

- Mandatory 16-hour training over 4 weeks

- Consulting-specific use cases (industry research, financial modeling, slide drafting)

- Ethics and client confidentiality emphasized

- Ongoing “power user” sessions for advanced techniques

Results After 6 Months:

Efficiency Gains:

- Initial research phase: 30-40% time reduction

- First draft deliverables: 35% faster creation

- Data analysis: 25% time savings

- Net result: Consultants serving ~1.5X as many clients in the same timeframe

Quality Improvements:

- More comprehensive industry analysis (AI finding relevant studies humans missed)

- Faster iteration on client presentations (easy to test multiple messages)

- Reduced errors in financial calculations

- More time for strategic thinking and client relationship management

Business Impact:

- Increased throughput without proportional headcount increase

- Improved consultant work-life balance (less weekend work)

- Higher client satisfaction scores

- Junior consultant retention improved 12%

Challenges and Solutions:

Challenge 1: Client Confidentiality. Clients were concerned about AI providers accessing sensitive information.

Solution: Negotiated contractual terms with AI vendors guaranteeing data isolation, no model training on client data, SOC 2 certification, and clear data deletion policies.

Challenge 2: Over-Reliance. Junior consultants accepted AI analysis without critical evaluation.

Solution: Modified training to emphasize verification, introduced peer review of AI-assisted work, created examples of AI errors and how to catch them.

Challenge 3: Uneven Adoption. Some partners rejected AI, creating inconsistent expectations for junior staff.

Solution: Senior leadership mandate that all client-facing staff complete basic training, combined with showcasing success stories from respected senior consultants who embraced AI.

Key Lessons:

- Custom training for professional context crucial

- Partner-level buy-in needed before junior-level adoption succeeds

- Client education and contractual protections essential

- AI enables serving more clients, not replacing consultants

Case Study 3: Salesforce Sales Development Team

Background: Salesforce’s Sales Development Representative (SDR) team—responsible for initial prospect outreach and qualification—piloting AI co-pilots to enhance productivity and personalization.

Scale:

- Pilot: 50 SDRs

- Expansion: 500+ SDRs globally

- Focus: Email outreach, meeting preparation, objection handling

Implementation Approach:

Custom AI Capabilities:

- Integration with Salesforce CRM for full prospect context

- AI-generated personalized email drafts based on prospect industry, role, recent news

- Meeting briefings summarizing relevant prospect/account information

- Suggested talk tracks for common objections

Training:

- 8-hour program over 2 weeks

- Heavy emphasis on personalization vs. generic AI output

- Ethics training on authentic communication

- Competitive element (leaderboard for creative AI usage)

Results After 3 Months:

Activity Metrics:

- Emails sent per SDR: +45% (80→116 per day)

- Personalization quality: Maintained or improved (per manager review)

- Meeting bookings: +28%

- Qualified leads passed to sales: +31%

SDR Experience:

- Reduced burnout (less “writer’s block” stress)

- More time for research and strategic account planning

- Faster response to hot leads (less time drafting means faster follow-up)

- Higher job satisfaction scores

Unexpected Benefits:

- New SDRs ramping faster (AI helps with uncertainty about messaging)

- More diverse communication styles (AI offering alternatives reduced template uniformity)

- Valuable data on what messaging resonates (A/B testing AI variations at scale)

Challenges:

Challenge 1: Generic AI Output. Initial AI emails sounded obviously AI-generated, reducing response rates.

Solution: Created extensive prompt library with proven effective openers, developed “voice and tone” guidelines fed to AI, encouraged SDRs to edit AI drafts significantly before sending.
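The “voice and tone” guidelines and prompt library described above can be sketched as a reusable template that merges fixed style rules with CRM fields. This is a minimal Python sketch under stated assumptions: the guideline text, the field names (`name`, `role`, `company`, `industry`, `news`), and the `build_outreach_prompt` helper are all hypothetical, and the resulting prompt would be sent to whatever model the team actually uses.

```python
from string import Template

# Hypothetical voice-and-tone rules; a real team would maintain and version its own.
VOICE_GUIDELINES = (
    "Write in plain, friendly language and keep it under 120 words. "
    "Reference one specific, verifiable detail about the prospect. "
    "Avoid buzzwords and generic flattery."
)

# Hypothetical prompt-library entry; $placeholders are filled from CRM fields.
OUTREACH_TEMPLATE = Template(
    "$guidelines\n\n"
    "Draft a first-touch email to $name, $role at $company ($industry).\n"
    "Recent news to reference: $news\n"
    "The SDR will edit this draft before sending it."
)


def build_outreach_prompt(prospect: dict) -> str:
    """Merge the shared guidelines with one prospect's CRM fields.

    Template.substitute raises KeyError if any placeholder is missing,
    so incomplete CRM records fail loudly instead of producing vague drafts.
    """
    return OUTREACH_TEMPLATE.substitute(prospect, guidelines=VOICE_GUIDELINES)


if __name__ == "__main__":
    print(build_outreach_prompt({
        "name": "Dana Lee",
        "role": "VP of Operations",
        "company": "Example Corp",
        "industry": "logistics",
        "news": "opened a new distribution center",
    }))
```

Keeping the guidelines in one shared constant means every SDR's drafts start from the same style rules, while the human edit step stays explicit in the prompt itself.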

Challenge 2: Prospect Pushback. Some prospects explicitly stated they wouldn’t respond to “AI emails.”

Solution: Adopted transparency about human + AI collaboration (“I use AI to draft but personalize for you”), positioned AI as an efficiency tool enabling better research and customization, and emphasized human judgment in account selection.

Challenge 3: Reduced Skill Development. There was concern that new SDRs were not developing core writing and research skills.

Solution: Required new SDRs to write without AI assistance for their first 30 days to build fundamentals, introduced AI gradually with continued emphasis on underlying principles, and had senior SDRs review junior work for quality.

Key Lessons:

- AI multiplies output but requires human editorial judgment

- Transparency with prospects builds trust

- Preserve skill development opportunities for junior employees

- Speed matters—AI enables faster response to time-sensitive opportunities

Case Study 4: Cleveland Clinic Healthcare Administration

Background: Major academic medical center implementing AI co-pilots to reduce administrative burden on physicians, nurses, and administrative staff.

Scale:

- Pilot: 5 departments (cardiology, oncology, primary care, surgery, emergency medicine)

- Expansion: System-wide across 20+ hospitals and 220 clinics

- Focus: Clinical documentation, patient message responses, administrative tasks

Implementation Approach:

Multiple AI Applications:

- Ambient clinical documentation: AI listening to patient encounters, drafting notes

- Patient portal message assistance: AI drafting responses to routine patient questions

- Scheduling optimization: AI suggesting optimal appointment times

- Insurance pre-authorization: AI automating paperwork

Training Program:

- Role-specific training (physicians, nurses, administrative staff)

- HIPAA and patient privacy emphasized heavily

- Workflow integration rather than separate tool

- Clinical accuracy verification protocols

Results After 12 Months:

Physician Time Savings:

- Documentation time: Reduced 2.5 hours/day → 1 hour/day (60% reduction)

- After-hours charting: 85% reduction

- Patient interaction time: Increased 15% (less distraction from computer)

- Physician burnout scores: Improved significantly

Patient Experience:

- Patient portal response time: 24 hours → 2 hours average

- Patient satisfaction: +12 percentage points

- No change in clinical quality metrics

- Concerns about “impersonal” care: <5% of patients

System Benefits:

- Physician retention: Improved (fewer leaving due to documentation burden)

- Recruitment: Easier (AI tools seen as competitive advantage)

- Estimated value: $50M annually in time savings across system

- Malpractice risk: No increase; possibly slight reduction due to better documentation

Challenges:

Challenge 1: Clinical Accuracy. AI occasionally documented incorrect information from patient conversations.

Solution: Mandatory physician review and sign-off on all AI-generated notes, prominent visual indicators distinguishing AI vs. human-entered information, continuous feedback loop improving AI accuracy.

Challenge 2: Physician Skepticism. Older physicians were especially resistant to new technology.

Solution: Made adoption opt-in initially rather than mandated, enlisted peer champions to demonstrate value, focused on time savings rather than technical capabilities, and offered a “try for 2 weeks” challenge to lower the commitment barrier.

Challenge 3: Regulatory Concerns. There was uncertainty about whether AI documentation met medical-legal standards.

Solution: Engaged legal and compliance teams proactively, developed documentation standards, consulted the malpractice insurance carrier, and created a clear audit trail of AI-assisted documentation.
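The safeguards in this case study (mandatory sign-off, visual indicators for AI-drafted text, and an audit trail) can be modeled as a small data structure. This Python sketch is illustrative only: the `ClinicalNote` class, the `[AI]` marker, and the rules that unsigned AI content blocks finalization and that any edit clears a prior signature are assumptions, not a description of Cleveland Clinic's actual systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class NoteSegment:
    text: str
    source: str  # "ai" or "human"


@dataclass
class ClinicalNote:
    segments: List[NoteSegment] = field(default_factory=list)
    signed_by: Optional[str] = None
    audit_log: List[str] = field(default_factory=list)

    def _log(self, event: str) -> None:
        # Timestamped entries form the audit trail of AI-assisted documentation.
        self.audit_log.append(f"{datetime.now(timezone.utc).isoformat()} {event}")

    def add(self, text: str, source: str) -> None:
        if source not in ("ai", "human"):
            raise ValueError("source must be 'ai' or 'human'")
        self.segments.append(NoteSegment(text, source))
        self.signed_by = None  # any edit requires a fresh sign-off
        self._log(f"added {source} segment")

    def sign(self, physician: str) -> None:
        self.signed_by = physician
        self._log(f"signed by {physician}")

    def render(self) -> str:
        # Visual indicator: AI-drafted lines carry an [AI] prefix for reviewers.
        return "\n".join(
            ("[AI] " if s.source == "ai" else "") + s.text for s in self.segments
        )

    def can_finalize(self) -> bool:
        # Any AI-drafted content requires physician sign-off before filing.
        has_ai = any(s.source == "ai" for s in self.segments)
        return self.signed_by is not None or not has_ai


if __name__ == "__main__":
    note = ClinicalNote()
    note.add("Patient reports intermittent chest pain.", "ai")
    note.add("Exam unremarkable.", "human")
    print(note.render())
    print("Can finalize before sign-off:", note.can_finalize())
    note.sign("Dr. Smith")
    print("Can finalize after sign-off:", note.can_finalize())
```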

Key Lessons:

- Healthcare administration is a prime use case for AI co-pilots

- Clinical accuracy verification absolutely essential

- Physician autonomy and opt-in approach crucial for adoption

- Time savings directly address leading cause of physician burnout

- Patient acceptance higher than anticipated when benefits explained

9. Measuring ROI: Costs vs. Benefits

Understanding the economics of AI co-pilots is critical for sustainable implementation. While proponents tout long-term savings, upfront costs can be substantial, and ROI timelines vary dramatically by use case.

Total Cost of Ownership (TCO)

Software and Licensing

- Enterprise AI platforms: $20-100 per user per month

- Specialized co-pilots (legal, medical, financial): $50-200 per user per month

- Custom development: $100,000-500,000 one-time for proprietary solutions

- Volume discounts: Typically 20-40% for >1,000 users

Infrastructure

- Cloud computing costs: $500-5,000/month depending on usage intensity

- Data storage: $100-1,000/month for prompts, outputs, analytics

- Integration services: $50,000-250,000 one-time for complex enterprise systems

- Bandwidth: 10-20% increase in network traffic

Personnel

- AI governance specialist: $120,000-180,000 annual salary

- Training development: $50,000-150,000 one-time

- Ongoing training delivery: $20,000-50,000 annually

- Technical support: 1 FTE per 500-1,000 users ($60,000-90,000 per FTE)

Example: Mid-Sized Company (2,000 employees)

Year 1 Costs:

- Software licenses (80% adoption): $480,000

- Infrastructure setup: $150,000

- Integration: $200,000

- Training development: $100,000

- Training delivery: $40,000

- 2 governance specialists: $300,000

- 3 technical support staff: $225,000

- Total Year 1: $1,495,000 (~$748 per employee, ~$934 per adopting user)

Years 2-3 Costs (Annual):

- Software licenses: $480,000

- Infrastructure: $60,000

- Ongoing training: $30,000

- Governance: $300,000

- Support: $225,000

- Total Annual: $1,095,000 (~$548 per employee, ~$684 per adopting user)

3-Year TCO: $3,685,000 (~$1,843 per employee over 3 years, ~$614 per employee per year on average)
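The TCO arithmetic for the 2,000-employee example can be reproduced in a few lines, which makes it easy to swap in your own figures. This Python sketch uses the article's cost lines; the dictionary keys and the `three_year_tco` helper are illustrative names, and it assumes, as the example does, that Years 2 and 3 share the same recurring costs.

```python
EMPLOYEES = 2_000
ADOPTING_USERS = EMPLOYEES * 80 // 100  # 80% adoption -> 1,600 users

# Year 1 cost lines from the example (USD).
YEAR1 = {
    "software_licenses": 480_000,
    "infrastructure_setup": 150_000,
    "integration": 200_000,
    "training_development": 100_000,
    "training_delivery": 40_000,
    "governance_specialists": 300_000,  # 2 x $150,000
    "technical_support": 225_000,       # 3 x $75,000
}

# Recurring annual costs for Years 2-3 (USD).
RECURRING = {
    "software_licenses": 480_000,
    "infrastructure": 60_000,
    "ongoing_training": 30_000,
    "governance": 300_000,
    "support": 225_000,
}


def three_year_tco(year1: dict, recurring: dict, years: int = 3) -> int:
    """Year 1 total plus (years - 1) copies of the recurring annual total."""
    return sum(year1.values()) + (years - 1) * sum(recurring.values())


if __name__ == "__main__":
    y1, rec = sum(YEAR1.values()), sum(RECURRING.values())
    tco = three_year_tco(YEAR1, RECURRING)
    print(f"Year 1: ${y1:,} (${y1 / EMPLOYEES:,.2f}/employee, ${y1 / ADOPTING_USERS:,.2f}/adopting user)")
    print(f"Recurring: ${rec:,}/year")
    print(f"3-year TCO: ${tco:,} (${tco / EMPLOYEES / 3:,.2f}/employee/year)")
```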

Quantifiable Benefits

Direct Productivity Gains. Based on verified research (26% task completion increase, 5.4% time savings):

Scenario 1: Knowledge Workers (40% of workforce = 800 employees)

- Average salary: $85,000

- 5.4% time savings = 2.2 hours/week = 110 hours/year (assuming 50 working weeks)

- Value of saved time: 110 hours × $41/hour ($85,000 ÷ 2,080 paid hours, rounded) = $4,510 per employee

- Total value: 800 × $4,510 = $3,608,000 annually
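The knowledge-worker calculation converts salary to an hourly wage and multiplies it by hours saved. A minimal Python sketch, assuming 2,080 paid hours per year and 50 working weeks (the assumptions behind the $41/hour and 110 hours/year figures); `annual_time_value` is a hypothetical helper name. Without rounding the wage (about $40.87/hour), the value comes to roughly $4,495 per employee rather than $4,510.

```python
def annual_time_value(
    salary: float,
    hours_saved_per_week: float,
    work_weeks: int = 50,
    paid_hours_per_year: int = 2_080,
    round_wage: bool = False,
) -> float:
    """Dollar value of time saved per employee per year."""
    hourly_wage = salary / paid_hours_per_year
    if round_wage:
        hourly_wage = round(hourly_wage)  # mirrors rounding $40.87 up to $41
    return hours_saved_per_week * work_weeks * hourly_wage


if __name__ == "__main__":
    per_employee = annual_time_value(85_000, 2.2, round_wage=True)
    print(f"Per employee: ${per_employee:,.0f}")
    print(f"800 employees: ${800 * per_employee:,.0f}")
```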

Scenario 2: Customer Support (20% of workforce = 400 employees)

- Average salary: $45,000

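- Assumed: AI assistance enables handling 1.5X support volume (far more than the 5.4% average time savings alone implies, which would support only about 6% more volume)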

- Alternative: Would need 200 additional support staff at $45,000 = $9,000,000

- Actual cost with AI: Software + training = $200,000

- Net savings: $8,800,000 annually (likely overstated; assume $2-4M realistic)
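Time savings and capacity are related but not identical: if AI assistance cuts the time per ticket by a fraction s, the same team can handle 1 / (1 - s) times the volume. This Python sketch (with hypothetical helper names) shows why a 5.4% time saving supports only about 5.7% more volume, while handling 1.5X volume would require cutting handling time by about a third, one reason the $8.8M figure is likely overstated.

```python
def capacity_multiplier(time_saved_fraction: float) -> float:
    """Volume a team can handle relative to baseline if each task takes (1 - s) of its old time."""
    if not 0 <= time_saved_fraction < 1:
        raise ValueError("time_saved_fraction must be in [0, 1)")
    return 1 / (1 - time_saved_fraction)


def time_savings_needed(target_multiplier: float) -> float:
    """Inverse: fraction of handling time that must be saved to reach a target volume multiplier."""
    if target_multiplier < 1:
        raise ValueError("target_multiplier must be >= 1")
    return 1 - 1 / target_multiplier


if __name__ == "__main__":
    m = capacity_multiplier(0.054)
    print(f"5.4% time savings -> {m:.3f}x volume")
    print(f"Extra capacity for 400 agents: ~{400 * (m - 1):.0f} agent-equivalents")
    print(f"1.5x volume needs {time_savings_needed(1.5):.1%} time savings")
```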

Scenario 3: Sales Team (15% of workforce = 300 employees)

- Average salary: $75,000 + commission

- 31% increase in qualified leads (per Salesforce case study)

- Incremental revenue from leads: Depends on conversion rate and deal size

- Conservative estimate: $3-5M additional annual revenue

- Value: $3,000,000-5,000,000 (varies by company)
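The dependence on conversion rate and deal size can be made explicit with a simple funnel formula. In this Python sketch the baseline lead volume, win rate, and deal size are hypothetical placeholders, not figures from the article; only the 31% qualified-lead increase comes from the Salesforce case study. With these placeholders the result ($3.72M) lands inside the $3-5M range, but modest changes to win rate or deal size move it substantially.

```python
def incremental_revenue(
    baseline_qualified_leads: float,
    lead_lift: float,
    win_rate: float,
    avg_deal_size: float,
) -> float:
    """Extra annual revenue = extra qualified leads x win rate x average deal size."""
    extra_leads = baseline_qualified_leads * lead_lift
    return extra_leads * win_rate * avg_deal_size


if __name__ == "__main__":
    # Hypothetical inputs: 3,000 qualified leads/year, 10% win rate, $40,000 deals.
    revenue = incremental_revenue(3_000, 0.31, 0.10, 40_000)
    print(f"Incremental revenue: ${revenue:,.0f}")
```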

Quality Improvements. These are harder to quantify but include:

- Reduced errors requiring rework

- Improved customer satisfaction and retention

- Faster time-to-market for products/services

- Better decision-making from enhanced analysis

Employee Retention

- Reduced burnout and improved job satisfaction

- Lower turnover saves recruitment and training costs

- Typical turnover cost: 50-150% of annual salary

- If AI reduces turnover by 2 percentage points: Saves ~$1-2M annually
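The retention estimate follows from headcount, the turnover reduction, and the replacement cost per departure. A minimal Python sketch, assuming a hypothetical $50,000 average salary across the 2,000 employees (the article does not state one) and looping over the 50-150% replacement-cost range; `turnover_savings` is an illustrative name.

```python
def turnover_savings(
    headcount: int,
    turnover_reduction_pts: float,
    avg_salary: float,
    replacement_cost_fraction: float,
) -> float:
    """Annual savings = departures avoided x cost to replace each departure."""
    departures_avoided = headcount * turnover_reduction_pts / 100
    return departures_avoided * avg_salary * replacement_cost_fraction


if __name__ == "__main__":
    for fraction in (0.5, 1.0, 1.5):
        savings = turnover_savings(2_000, 2, 50_000, fraction)
        print(f"Replacement cost {fraction:.0%} of salary: ${savings:,.0f}")
```

Under this salary assumption, 40 avoided departures yield $1M at a 50% replacement cost and $2M at 100%, so the ~$1-2M estimate sits at the lower half of the stated turnover-cost range.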

Total Annual Benefits (Conservative):

- Knowledge worker productivity: $3.6M

- Support efficiency: $2M (conservative vs. full replacement cost)

- Sales effectiveness: $3M

- Employee retention: $1.5M

- Total: $10.1M annually

3-Year ROI Calculation:

- Total Benefits (3 years): $30.3M

- Total Costs (3 years): $3.7M

- Net Benefit: $26.6M

- ROI: ~720% over 3 years (net benefit ÷ total costs)

However: This calculation makes optimistic assumptions. More realistic scenarios:

Conservative ROI (accounting for implementation challenges):

- 50% of projected benefits materialize (learning curves, resistance, suboptimal usage)

- Total benefits: $15.15M over 3 years

- Total costs: $3.7M

- Net benefit: $11.45M

- ROI: 310% over 3 years (still highly positive)
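Both ROI figures use the same definition, net benefit divided by total cost, with a realization factor scaling the projected benefits. This Python sketch reproduces them; `realization` is an illustrative parameter name for the “50% of projected benefits materialize” assumption. Using the rounded $3.7M cost gives about 719% and 309%, which round to the 720% and 310% shown; the exact $3.685M TCO gives slightly higher figures.

```python
def roi(annual_benefits: float, total_costs: float, years: int = 3, realization: float = 1.0) -> float:
    """Net benefit / total cost, after scaling projected benefits by a realization factor."""
    total_benefits = annual_benefits * years * realization
    return (total_benefits - total_costs) / total_costs


# Annual benefit components from the example, in $ thousands:
# knowledge workers, support, sales, retention.
ANNUAL_BENEFITS_K = 3_600 + 2_000 + 3_000 + 1_500

if __name__ == "__main__":
    for label, realization in (("Optimistic", 1.0), ("Conservative", 0.5)):
        print(f"{label}: {roi(ANNUAL_BENEFITS_K, 3_700, realization=realization):.0%}")
```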

Cost-Effectiveness Compared to Alternatives

AI Co-pilots vs. Additional Headcount

Problem: Customer support team overwhelmed, 30% increase in volume

Option A: Hire 150 new support agents

- Salary + benefits: $50,000 × 150 = $7.5M annually

- Recruitment: $500K

- Training: $300K

- Management overhead: $400K

- Total: $8.7M annually

Option B: Deploy AI co-pilots for existing 500 agents

- Software: $300K annually

- Training: $150K one-time, $50K ongoing

- Support: $100K annually

- Total: $600K in year 1 (including one-time training); $450K annually thereafter

Result: AI co-pilots are over 90% less expensive if they enable current staff to handle the volume increase
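The headcount comparison can be checked the same way. This Python sketch treats the $150K training as a Year 1 cost and the $50K as ongoing, which is how the Option B line items read; `percent_cheaper` is a hypothetical helper. It shows roughly 93% savings in Year 1 and about 95% in later years.

```python
# Option A: hire 150 agents (USD per year, as listed).
OPTION_A = 150 * 50_000 + 500_000 + 300_000 + 400_000  # salaries, recruiting, training, management

# Option B: AI co-pilots for the existing 500 agents.
OPTION_B_YEAR1 = 300_000 + 150_000 + 50_000 + 100_000  # software, one-time training, ongoing training, support
OPTION_B_LATER = 300_000 + 50_000 + 100_000            # one-time training drops out after Year 1


def percent_cheaper(candidate: float, baseline: float) -> float:
    """Fractional cost reduction of candidate relative to baseline."""
    return 1 - candidate / baseline


if __name__ == "__main__":
    print(f"Option A: ${OPTION_A:,}")
    print(f"Year 1: {percent_cheaper(OPTION_B_YEAR1, OPTION_A):.1%} cheaper")
    print(f"Later years: {percent_cheaper(OPTION_B_LATER, OPTION_A):.1%} cheaper")
```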

AI Co-pilots vs. Offshore Outsourcing

Problem: Need to scale content creation 3X

Option A: Offshore content team (India, Philippines)

- 100 writers at $15K/year = $1.5M

- Management: $200K

- Quality issues requiring rework: $300K

- Total: $2M annually

Option B: AI co-pilots for 30 existing writers

- Software: $90K annually

- Training: $60K

- Net result: 30 writers produce 90 writers’ worth of output

- Total: $150K annually

Result: AI co-pilots are about 92% less expensive if the productivity multiplier works as expected

Caveats:

- Quality may differ between options

- AI excels at certain content types, humans at others

- Cultural context and creativity may require human judgment

- Hybrid approach often optimal
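Because the offshore comparison hinges on whether the productivity multiplier holds, it helps to ask how small the multiplier could be before the AI option loses on cost. This Python sketch compares cost per added writer-equivalent of output; it assumes, hypothetically, that one offshore writer produces the same output as one in-house writer and that existing writers' salaries are sunk costs in both options. The helper names are illustrative.

```python
def cost_per_added_equivalent(annual_cost: float, added_writer_equivalents: float) -> float:
    """Annual cost divided by the extra output it buys, in writer-equivalents."""
    return annual_cost / added_writer_equivalents


def breakeven_multiplier(ai_cost: float, writers: int, benchmark_cost_per_equiv: float) -> float:
    """Multiplier m at which ai_cost / (writers * (m - 1)) equals the benchmark cost."""
    return 1 + ai_cost / (writers * benchmark_cost_per_equiv)


if __name__ == "__main__":
    offshore = cost_per_added_equivalent(2_000_000, 100)    # 100 offshore writers
    ai = cost_per_added_equivalent(150_000, 30 * (3 - 1))   # 30 writers at 3x output
    print(f"Offshore: ${offshore:,.0f}; AI at 3x: ${ai:,.0f} per added writer-equivalent")
    print(f"Break-even multiplier: {breakeven_multiplier(150_000, 30, offshore):.2f}x")
```

Under these assumptions the AI option stays cheaper per unit of added output as long as each writer's output rises by more than about 25%, though the quality differences noted in the caveats are not captured.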

When AI Co-pilots DON’T Provide Positive ROI

Scenarios Where Costs Exceed Benefits:

1. Low-Skill Repetitive Work Already Highly Efficient

- Example: Data entry already optimized through RPA or templates

- AI co-pilot adds cost without meaningful productivity gain

2. Highly Creative/Strategic Roles Where AI Adds Little

- Example: C-suite executives, artists, research scientists

- Time saved minimal; adoption low; ROI negative

3. Small Organizations (<100 Employees)

- Fixed implementation costs too high relative to total productivity

- Exception: a specific high-value use case (e.g., an AI co-pilot for a solo consultant)

4. Poor Implementation

- No training → low adoption → no productivity gains → negative ROI

- Wrong tool for use case → frustration → abandonment

5. Highly Regulated Industries Without Approved Solutions

- Compliance costs and restrictions may exceed benefits

- Example: Certain government or financial sectors with specific AI prohibitions

The ROI Verdict:

When properly implemented with adequate training, appropriate tool selection, and realistic expectations, AI co-pilots deliver strong positive ROI (3:1 to 10:1 over 3-5 years) for most knowledge worker and operational roles in medium-to-large organizations.

However, success is not guaranteed—it requires disciplined implementation, ongoing optimization, and acceptance that not all roles or individuals will benefit equally.

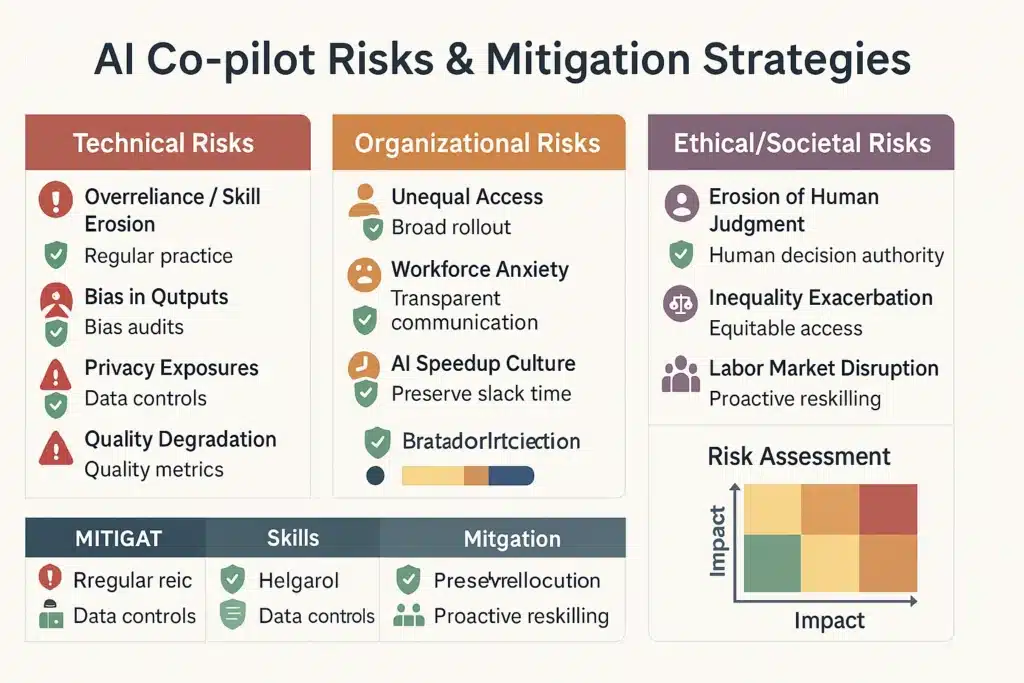

10. Risks, Challenges & Mitigation Strategies

Despite promising productivity gains, AI co-pilots introduce significant risks that organizations must proactively address.

Technical and Performance Risks

Risk 1: Overreliance Leading to Skill Erosion

Manifestation:

- Employees lose ability to perform tasks without AI assistance

- Critical thinking skills atrophy from accepting AI suggestions uncritically

- “Learned helplessness” where workers feel incapable without AI

Evidence: GitClear’s 2024 research found a 4X increase in code cloning with heavy AI assistant usage, suggesting that developers were copying AI suggestions without understanding them, potentially degrading long-term code quality and maintainability.

Mitigation Strategies:

- Regular “AI-free” practice sessions where employees solve problems manually

- Promotion criteria including ability to explain AI-assisted work

- Training emphasizing AI as tool, not replacement for expertise

- Periodic skill assessments independent of AI

Risk 2: Bias in AI Outputs

Manifestation:

- AI suggestions reflect historical biases in training data

- Certain demographics receive lower-quality assistance

- Reinforcement of existing inequities rather than correction

Evidence: UC Berkeley 2023 study found AI essay grading system scored African American Vernacular English essays 8% lower than equivalent standard English essays, systematically disadvantaging minority students.

Mitigation Strategies:

- Regular bias audits examining AI performance across demographic subgroups

- Diverse training data representing all populations served

- Human review of high-stakes AI decisions

- Feedback mechanisms allowing users to report biased outputs

- Transparency about known AI limitations
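A basic subgroup audit compares average AI scores across groups on comparable inputs and flags gaps above a tolerance. This Python sketch is a simplified illustration: the record format, group labels, reference group, and threshold are assumptions, and a real audit should compare matched or equivalent content (as the essay-grading study did) rather than raw averages, since raw gaps can reflect genuine differences in the inputs.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def subgroup_gaps(records: Iterable[Tuple[str, float]], reference_group: str) -> Dict[str, float]:
    """Mean AI score per group minus the reference group's mean score."""
    scores = defaultdict(list)
    for group, score in records:
        scores[group].append(score)
    if reference_group not in scores:
        raise KeyError(f"no records for reference group {reference_group!r}")
    ref = mean(scores[reference_group])
    return {group: mean(vals) - ref for group, vals in scores.items()}


def flag_gaps(gaps: Dict[str, float], threshold: float) -> List[str]:
    """Groups whose absolute gap exceeds the tolerance, queued for human review."""
    return sorted(group for group, gap in gaps.items() if abs(gap) > threshold)


if __name__ == "__main__":
    # Hypothetical scores for matched essays written in two dialects.
    records = [("standard", 80), ("standard", 82), ("dialect_b", 74), ("dialect_b", 76)]
    gaps = subgroup_gaps(records, reference_group="standard")
    print(gaps, flag_gaps(gaps, threshold=5))
```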

Risk 3: Privacy Exposures

Manifestation:

- Employees inadvertently sharing confidential information in AI prompts

- AI providers accessing sensitive corporate or customer data

- Data breaches exposing AI interaction logs

- Competitors accessing proprietary information through AI systems

Mitigation Strategies:

- Clear policies on what information can/cannot be shared with AI

- Technical controls preventing certain data types from reaching AI

- Contractual guarantees from AI vendors about data handling

- Regular security audits and penetration testing

- Employee training on recognizing sensitive information

- Incident response plans for AI-related data breaches

For comprehensive coverage of AI-specific cybersecurity threats and defenses, see our analysis of AI cybersecurity 2025.
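One common form of technical control that keeps certain data types from reaching AI is a redaction pass applied to prompts before they leave the company network. This Python sketch uses two illustrative regular expressions (email addresses and the US Social Security number format); the pattern set and the `redact` helper are assumptions, and production systems would typically rely on vetted data-loss-prevention tooling with far broader coverage.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real deployments need broader, tested coverage.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> Tuple[str, List[str]]:
    """Replace sensitive matches with [LABEL] tokens and report which labels fired."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


if __name__ == "__main__":
    clean, labels = redact("Summarize the complaint from jane.doe@example.com (SSN 123-45-6789).")
    print(clean, labels)
```

Returning the fired labels alongside the cleaned text lets the same check feed the security audits and incident-response logging listed above.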

Risk 4: Quality Degradation

Manifestation:

- Faster output but lower quality work products

- Increased error rates from insufficient human verification

- “Good enough” culture replacing excellence

- Customer satisfaction declining despite efficiency gains

Mitigation Strategies:

- Quality metrics alongside productivity metrics

- Mandatory review processes for AI-assisted high-stakes work

- Customer feedback loops detecting quality issues

- Reward systems emphasizing outcomes over output volume

Organizational and Cultural Risks

Risk 5: Unequal Access Creating Internal Inequity

Manifestation:

- Some employees/departments have AI co-pilots; others don’t

- Productivity expectations become unequal

- Resentment from employees without AI access

- Career advancement favoring AI users over equally skilled non-users

Mitigation Strategies:

- Deliberate rollout plan ensuring broad access

- Clear communication about AI availability timeline

- Adjusted performance expectations during transition

- Investment in training ensuring all can benefit

Risk 6: Workforce Anxiety and Resistance

Manifestation:

- Fear of job elimination reducing morale and engagement

- Active sabotage of AI implementations

- Talent departures to companies with clearer AI philosophies

- Union organizing or labor disputes around AI deployment

Mitigation Strategies:

- Transparent communication about AI’s role (augmentation not replacement)

- Early involvement of employees in AI selection and design

- Retraining programs demonstrating commitment to workforce

- Clear policies against AI-based layoffs during the transition period

- Sharing productivity gains with employees (bonuses, reduced hours)

Risk 7: “AI Speedup” Culture

Manifestation:

- Expectations ratchet up endlessly (“if AI makes you 30% faster, we expect 30% more”)

- Burnout as efficiency gains become new baseline

- Work expanding to fill available time plus AI-enabled capacity

- Loss of strategic thinking time and creative space

Mitigation Strategies:

- Explicit decisions about how to use productivity gains (more output? Better quality? Reduced hours? Innovation time?)

- Resist automatic expectation increases

- Preserve slack time for learning, experimentation, relationship building

- Monitor employee wellbeing and workload metrics

Ethical and Societal Risks

Risk 8: Erosion of Human Judgment

Manifestation:

- Deferring to AI in situations requiring human values and ethics

- Losing practice in difficult decision-making

- Inability to recognize when AI suggestions are inappropriate for context

- Automation bias (trusting AI over contradictory human expertise)

Mitigation Strategies:

- Clear documentation of decision types requiring human judgment

- Training on AI limitations and failure modes

- Rewarding employees who appropriately override AI

- Regular calibration sessions discussing AI decisions

- Preserving forums for human deliberation on complex questions

Risk 9: Exacerbation of Inequality

Manifestation:

- AI primarily benefits already-advantaged workers

- Widening productivity gaps between AI “haves” and “have-nots”

- Job market polarization (high-skill AI-augmented vs. low-skill non-augmented)

- Geographic or demographic disparities in AI access

Mitigation Strategies:

- Intentional investment in making AI accessible broadly

- Extra support for populations at risk of being left behind

- Attention to societal impacts beyond individual organization

- Collaboration with educators, policymakers on workforce transitions

- Corporate social responsibility around equitable AI access

Risk 10: Labor Market Disruption

Manifestation:

- Displacement of workers in roles where AI provides >70% of value

- Downward pressure on wages in AI-augmentable occupations

- Changing bargaining power between employers and employees

- Need for large-scale reskilling beyond any individual company’s scope

Mitigation Strategies:

- Proactive retraining for impacted roles

- Creation of new roles leveraging human+AI combination

- Industry collaboration on workforce transitions

- Engagement with policymakers on safety nets and reskilling infrastructure

- Long-term perspective prioritizing sustainable business models over short-term labor cost reduction

Frequently Asked Questions (FAQs)

1. Will AI co-pilots replace jobs?

AI co-pilots change tasks rather than eliminate jobs entirely. Research from Princeton and MIT shows co-pilots increase productivity by 26% on average, with the greatest impact on junior workers (21-40% gains versus 7-16% for senior employees). While some roles will shrink or transform, new positions emerge including AI overseers, prompt engineers, augmentation specialists, and hybrid creative roles.

Net employment impact depends heavily on organizational choices—companies can use productivity gains to grow (serving more customers with same staff), to reduce headcount (capturing gains as profit), or to improve quality (maintaining revenue with higher-value work). Federal Reserve data indicates 28% of workers already use AI at work, with adoption accelerating but not driving widespread unemployment as many feared.

Historical precedent suggests technology typically creates more jobs than it eliminates, though transitions can be painful for individuals in displaced roles. The key differentiator: organizations that invest in reskilling and create pathways into new roles retain talent and capture greater long-term value.

2. How quickly should companies roll out AI co-pilots?

Organizations should adopt a phased approach starting with 1-3 pilot programs per function, measuring impact at 30-90 day intervals before scaling. Industry data shows companies using pilot teams see 40% faster adoption rates and 22% higher productivity gains compared to immediate full-deployment approaches.

Recommended timeline:

- Months 1-2: Pilot with 10-50 motivated early adopters

- Months 3-4: Gather data, refine processes, develop training

- Month 5: Decision point based on measured results

- Months 6-12: Phased rollout to broader organization with lessons incorporated
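The Month 5 decision point works best when the go/no-go criteria are written down before the pilot starts. The sketch below is one hypothetical way to encode them; the class and function names, the metrics, and the thresholds (at least a 10% throughput lift, no more than a 2-percentage-point rise in error rate, at least 50% weekly active use) are illustrative assumptions, not figures from the research cited in this article.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    """Hypothetical measurements gathered during Months 1-4."""
    baseline_tasks_per_week: float
    pilot_tasks_per_week: float
    baseline_error_rate: float  # fraction of outputs needing rework
    pilot_error_rate: float
    weekly_active_share: float  # fraction of pilot users active each week


def rollout_decision(m: PilotMetrics,
                     min_lift: float = 0.10,
                     max_error_increase: float = 0.02,
                     min_active_share: float = 0.50) -> str:
    """Return 'scale', 'refine', or 'stop' for the Month 5 decision."""
    lift = m.pilot_tasks_per_week / m.baseline_tasks_per_week - 1.0
    error_increase = m.pilot_error_rate - m.baseline_error_rate

    if error_increase > max_error_increase:
        # Faster output is not worth a noticeable drop in quality.
        return "stop"
    if lift >= min_lift and m.weekly_active_share >= min_active_share:
        return "scale"
    # Quality held, but gains or adoption fell short: refine and retest.
    return "refine"


pilot = PilotMetrics(40, 48, 0.05, 0.06, 0.70)
print(rollout_decision(pilot))  # scale
```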

Begin with low-risk applications where errors are easily caught, establish clear accuracy thresholds and human override mechanisms, then gradually expand to higher-stakes use cases. The OECD recommends targeted 2-12 week training programs focused on prompt literacy and model evaluation before wider deployment.
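Accuracy thresholds and human override can be expressed as a small routing rule that decides whether an AI suggestion is used directly or goes to a person. This is a sketch under stated assumptions: it presumes the co-pilot exposes some confidence score and that each task carries a risk label (many tools expose neither), and `route_suggestion` and the 0.9 threshold are hypothetical values to be tuned from pilot data.

```python
from enum import Enum

_VALID_RISKS = {"low", "medium", "high"}


class Route(Enum):
    AUTO_ACCEPT = "auto_accept"    # low-risk and high-confidence
    HUMAN_REVIEW = "human_review"  # a person checks before use
    HUMAN_ONLY = "human_only"      # AI output is not used at all


def route_suggestion(confidence: float, risk: str,
                     threshold: float = 0.9) -> Route:
    """Decide how an AI suggestion is handled during early rollout."""
    if risk not in _VALID_RISKS:
        raise ValueError(f"unknown risk level: {risk!r}")
    if risk == "high":
        # Early phases keep consequential decisions fully human.
        return Route.HUMAN_ONLY
    if risk == "low" and confidence >= threshold:
        return Route.AUTO_ACCEPT
    # Medium-risk work, or low confidence, always gets a human check.
    return Route.HUMAN_REVIEW
```

Expanding to higher-stakes use cases then becomes an explicit policy change (for example, moving "high" from `HUMAN_ONLY` to `HUMAN_REVIEW`) rather than an informal drift.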

Rushing full deployment without adequate training and support typically results in low adoption, employee frustration, and minimal productivity gains, effectively wasting the implementation investment.

3. What are the top risks of AI co-pilots?

Primary risks include:

Overreliance leading to skill erosion: Employees lose the ability to perform tasks without AI assistance. GitClear’s 2024 research found a 4X increase in code cloning with heavy AI usage, indicating quality problems when workers accept suggestions without understanding them. Mitigate through regular AI-free practice sessions and promotion criteria requiring explanation of AI-assisted work.
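Teams that want an internal early-warning signal for cloned code can track how often identical multi-line blocks recur. The sketch below is a deliberately simple proxy (hash every window of N normalized lines and count repeats); it is an illustrative assumption, not GitClear's methodology, and `duplicated_block_count` is a hypothetical name.

```python
import hashlib
from collections import Counter


def duplicated_block_count(source: str, window: int = 4) -> int:
    """Count repeated windows of `window` consecutive non-blank lines.

    Lines are stripped of surrounding whitespace, so re-indented copies
    still match. Each occurrence beyond the first counts as one clone.
    """
    lines = [ln.strip() for ln in source.splitlines() if ln.strip()]
    counts = Counter(
        hashlib.sha1("\n".join(lines[i:i + window]).encode()).hexdigest()
        for i in range(len(lines) - window + 1)
    )
    return sum(c - 1 for c in counts.values() if c > 1)


block = "a = 1\nb = 2\nc = a + b\nprint(c)\n"
print(duplicated_block_count(block + block))  # 1
```

Tracked over time and normalized by lines changed, a rising count is a prompt for code review, not proof of a problem on its own.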

Bias in AI outputs: AI suggestions may reflect historical biases that disadvantage certain demographic groups. UC Berkeley found essay grading systems scored minority English dialects 8% lower than standard English for equivalent content. Mitigate through regular bias audits, diverse training data, and human review of consequential decisions.
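A basic bias audit compares the AI's scores for matched content across groups. The sketch below assumes the audit team has paired samples that differ only in the group attribute (for example, the same essay written in two dialects); the function names, the 5% tolerance, and the sample scores are all hypothetical.

```python
from statistics import mean


def relative_score_gap(reference_scores: list[float],
                       comparison_scores: list[float]) -> float:
    """Fraction by which the comparison group's mean score falls below
    the reference group's mean (negative if it is higher)."""
    ref = mean(reference_scores)
    return (ref - mean(comparison_scores)) / ref


def flag_bias(reference_scores: list[float],
              comparison_scores: list[float],
              tolerance: float = 0.05) -> bool:
    """Flag for human review if the gap, in either direction, exceeds tolerance."""
    return abs(relative_score_gap(reference_scores, comparison_scores)) > tolerance


standard = [80, 90, 85]  # hypothetical scores, standard English versions
dialect = [74, 82, 78]   # hypothetical scores, same essays in another dialect
print(round(relative_score_gap(standard, dialect), 3))  # 0.082
print(flag_bias(standard, dialect))                     # True
```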

Privacy exposures: Employees inadvertently sharing confidential information in AI prompts, or AI providers accessing sensitive corporate data. Mitigate through clear data policies, technical controls, vendor contractual guarantees, and employee training on recognizing sensitive information.
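One common technical control is to redact obvious sensitive patterns before a prompt leaves the organization. The sketch below uses a few illustrative regular expressions (email addresses, US Social Security-style numbers, and a hypothetical internal project-code format `PRJ-1234`); `redact_prompt` is a hypothetical helper, and regexes alone will miss much sensitive content, so real deployments usually pair this idea with dedicated data-loss-prevention tooling.

```python
import re

# Illustrative patterns only; tune to the organization's own data.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # Hypothetical internal project-code format, e.g. PRJ-1234.
    "PROJECT": re.compile(r"\bPRJ-\d{4}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders before sending a prompt.

    Returns the redacted text and the labels of patterns that matched,
    which can be logged for policy monitoring.
    """
    found = []
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            found.append(label)
    return prompt, found


text, hits = redact_prompt("Email jane.doe@example.com about PRJ-0042")
print(text)  # Email [EMAIL] about [PROJECT]
print(hits)  # ['EMAIL', 'PROJECT']
```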

Quality degradation: Faster output but lower-quality work, especially if verification steps are skipped. Mitigate by measuring quality alongside productivity, requiring review for high-stakes work, and designing reward systems that emphasize outcomes over output volume.

Workforce anxiety: Fear of job elimination reducing morale. Mitigate through transparent communication about augmentation vs. replacement, employee involvement in AI selection, retraining programs, and policies against AI-based layoffs during transition.

4. What productivity gains can AI co-pilots deliver?

Verified research shows substantial but variable improvements:

26% average increase in completed tasks (Princeton/MIT/Microsoft/Wharton study of 4,867 developers across three randomized controlled trials spanning 4-7 months)

5.4% average time savings per work week (Federal Reserve Bank of St. Louis, February 2025, nationally representative survey), equivalent to 2.2 hours per 40-hour week
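The conversion from a percentage to hours is simple arithmetic. The hypothetical helper below reproduces the 2.2-hour figure and also annualizes it; the 48 working weeks per year is an illustrative assumption, not part of the Federal Reserve result.

```python
def hours_saved(share_saved: float, hours_per_week: float = 40.0,
                weeks_per_year: int = 48) -> tuple[float, float]:
    """Convert a fractional time saving into (weekly, annual) hours.

    weeks_per_year=48 is an assumed allowance for leave and holidays.
    """
    weekly = share_saved * hours_per_week
    return weekly, weekly * weeks_per_year


weekly, annual = hours_saved(0.054)
print(round(weekly, 2), round(annual))  # 2.16 104
```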

5-25% efficiency gains in customer support, software development, and consulting roles (OECD experimental studies, July 2025)

40% productivity boost reported by employees using AI (Upwork Research Institute, 2024), with gains attributed to tool improvements (25%), self-directed learning (22%), employer training (22%), and experimentation (31%)

Critical qualifiers:

- Benefits vary dramatically by experience level (junior workers see 2-3X larger gains than seniors)

- Effectiveness depends on implementation quality, training investment, and task-AI fit

- Organizations with structured programs achieve 40% faster adoption and 22% higher gains than those using ad-hoc approaches

- Some roles show minimal benefit while others transform dramatically

- Gains materialize within 30-90 days for early adopters but take 6-12 months for the broader organization

5. What compliance requirements apply to AI co-pilots?

Organizations must navigate a complex, evolving regulatory landscape:

European Union – AI Act (entered into force August 1, 2024):

- February 2, 2025: Prohibitions on unacceptable-risk AI systems

- August 2, 2025: General-purpose AI (GPAI) transparency obligations

- August 2, 2026: Comprehensive requirements for high-risk AI systems

- Penalties: Up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices (lower tiers apply to other violations)

- Requirements: AI inventories, risk classifications, technical documentation, human oversight, conformity assessments for high-risk systems
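The "whichever is higher" rule means the ceiling scales with company size. The sketch below computes only the statutory maximum for the top penalty tier, not a likely fine; the turnover figures are made up, and it does not model the Act's separate rule for SMEs and start-ups, where the lower of the two amounts applies. `max_ai_act_fine_eur` is a hypothetical name.

```python
def max_ai_act_fine_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound for the AI Act's highest penalty tier (non-SME case):
    EUR 35 million or 7% of worldwide annual turnover, whichever is higher."""
    return max(35_000_000.0, 0.07 * global_annual_turnover_eur)


for turnover in (200_000_000, 2_000_000_000):
    print(f"turnover {turnover:,} -> cap {max_ai_act_fine_eur(turnover):,.0f}")
# turnover 200,000,000 -> cap 35,000,000
# turnover 2,000,000,000 -> cap 140,000,000
```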

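Most workplace co-pilots are classified as “limited risk” or “minimal risk” but still require documentation, transparency, and data protection measures. Co-pilots used to make or support employment decisions, such as recruitment, promotion, task allocation, or performance evaluation, fall into the high-risk category. See our EU AI Act 2025 compliance checklist for detailed guidance.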

United States – State Regulations (38 states adopted 100+ laws in 2025):

- Colorado AI Act (effective June 30, 2026): Requires developers and deployers of high-risk AI systems to use “reasonable care” to protect against algorithmic discrimination in consequential decisions

- California SB 53: Safety and transparency requirements for developers of frontier AI models

- No comprehensive federal legislation yet, and the Trump administration is taking a more permissive approach than the Biden administration did

Additional Considerations:

- Data Privacy: GDPR (EU), CCPA (California), various state laws

- Sector-Specific: HIPAA (healthcare), FINRA rules (financial services), FDA regulations (medical devices)

- International: China’s PIPL, Brazil’s LGPD, and dozens of other countries developing AI frameworks

Best Practice: Consult legal specialists familiar with AI regulations in each jurisdiction where the organization operates. This matters most for multi-jurisdiction companies, which must satisfy requirements that differ from one jurisdiction to the next.

6. What reskilling programs work best for AI co-pilot adoption?

The most effective programs are targeted 2-12 week trainings (duration depends on tool complexity and role requirements) focused on three core competencies:

1. Prompt Literacy (30% of training)

- Framing requests that elicit accurate, useful AI responses

- Understanding how phrasing affects output quality

- Iterating on prompts to refine results

- Recognizing when to provide context vs. keep prompts simple

2. Model Evaluation (40% of training)

- Knowing when to trust AI outputs versus apply human judgment

- Recognizing common failure modes (hallucinations, bias, outdated information)

- Developing calibrated confidence in AI suggestions

- Understanding which tasks AI handles well vs. poorly

3. Domain Expertise Integration (30% of training)

- Combining AI suggestions with industry knowledge

- Using AI for routine work while reserving human time for complex judgment

- Teaching AI about organizational context and constraints

- Knowing when to override AI based on factors it cannot perceive

Program Structure:

- 70% hands-on practice, 30% lecture (learn by doing)

- Role-specific modules tailored to actual job tasks

- Cohort-based learning (10-20 employees together) for peer support

- Continuous support with “office hours” and internal champions

- Micro-credentials tied to career advancement incentives

Research Shows: Organizations implementing structured reskilling see 40% faster adoption and 22% higher productivity gains. Employees attribute their AI productivity gains to tool improvements (25%), self-directed learning (22%), employer training (22%), and experimentation (31%) (Upwork Research Institute, 2024), which underscores the need for a continuous learning culture rather than one-time training events.

7. How do AI co-pilots affect compensation and job security?

Compensation Philosophy Varies:

Maintain Salaries: Most organizations keep salaries stable when AI increases productivity, capturing gains through growth (serving more customers) or quality improvements rather than headcount reduction. Rationale: Retain talent, maintain morale, and recognize that employees’ skill in using AI has value.

Productivity Bonuses: Some companies share documented efficiency gains through bonuses or profit-sharing, aligning incentives and rewarding effective AI adoption.

Skill-Based Pay: Emerging model tying compensation to AI proficiency levels, similar to technical certifications. Employees demonstrating advanced AI augmentation capabilities earn premiums.

Job Security Considerations:

Near-Term (2025-2027): Most organizations use AI co-pilots to augment existing staff rather than eliminate positions, driven by:

- Existing workload backlogs that efficiency gains finally make it possible to clear

- Competitive pressure to improve quality/speed without adding headcount

- Desire to retain institutional knowledge and customer relationships

- Difficulty hiring qualified workers in tight labor markets

- Recognition that best results come from human+AI collaboration

Medium-Term (2028-2030): More organizational restructuring likely as:

- AI capabilities expand to handle increasingly complex tasks

- Economic pressures force efficiency choices

- Competitors using AI aggressively force others to match

- Routine roles consolidate while new hybrid roles emerge

Protection Strategies for Workers:

- Develop AI augmentation skills (become an indispensable AI user)

- Build relationships and soft skills AI cannot replicate

- Cultivate judgment and contextual expertise

- Position for emerging AI oversight/governance roles

- Continuous learning to stay ahead of automation curve

For Organizations: Thoughtful AI deployment that invests in workforce, creates new roles, and shares gains builds loyalty and captures greater long-term value than pure cost-cutting approaches.

8. Can AI co-pilots work offline or do they require constant internet?

Most AI co-pilots require internet connectivity for core functionality because:

- Large language models run on cloud servers (too large for typical devices)

- Access to up-to-date information sources (such as web search and enterprise documents) and model updates

- Security considerations (keeping proprietary models server-side)

- Cost efficiency (cloud infrastructure cheaper at scale than edge deployment)

Emerging Offline Capabilities:

Limited Offline Modes: Some platforms offer reduced functionality offline:

- Cached previous responses and common templates

- On-device smaller models handling simple tasks

- Queued prompts processed once connectivity is restored

- Local document analysis without cloud transmission
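Queuing prompts until connectivity returns can be sketched as a small outbox. The `send` callable and `is_online` check below are hypothetical stand-ins for whatever client and network probe a given platform uses; products that offer this behavior implement it internally and may not expose anything like `PromptOutbox`.

```python
from collections import deque
from typing import Callable, List, Optional


class PromptOutbox:
    """Hold prompts while offline and flush them in order once online."""

    def __init__(self, send: Callable[[str], str],
                 is_online: Callable[[], bool]) -> None:
        self._send = send            # hypothetical cloud call
        self._is_online = is_online  # hypothetical connectivity probe
        self._pending = deque()      # prompts waiting to be sent, oldest first

    def submit(self, prompt: str) -> Optional[str]:
        """Send immediately if online; otherwise queue and return None."""
        if self._is_online():
            return self._send(prompt)
        self._pending.append(prompt)
        return None

    def flush(self) -> List[str]:
        """Send queued prompts, oldest first, while connectivity lasts."""
        responses = []
        while self._pending and self._is_online():
            responses.append(self._send(self._pending[0]))
            self._pending.popleft()  # remove only after a successful send
        return responses

    def __len__(self) -> int:
        return len(self._pending)
```

Because a prompt is removed only after `send` returns, a call that raises leaves it queued for the next `flush`.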

Edge Computing Advances: Progress in 2025-2026 includes:

- Smaller, more efficient AI models (quantization, distillation techniques)

- More powerful edge devices (e.g., ARM-based chips such as Apple M-series and Qualcomm Snapdragon, with dedicated neural processing units)

- Hybrid architectures (simple tasks local, complex tasks cloud)

- Privacy-focused deployments running models on-premise
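Quantization is one reason smaller models now fit on edge devices: storing weights as 8-bit integers instead of 32-bit floats cuts memory by roughly 4x. The sketch below shows symmetric per-tensor int8 quantization on a plain list of weights; production toolchains use more refined schemes (per-channel scales, 4-bit formats, calibration data), so this only illustrates the idea, and the function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization.

    Maps the largest absolute weight to 127 and rounds every weight onto
    that integer grid. Returns the integers and the scale factor needed
    to recover approximate float values.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate the original weights from int8 values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
```

Each weight then needs one byte plus a single shared scale, at the cost of a rounding error of at most half a grid step (0.003 becomes 0 here).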

Practical Implications:

For Remote/Mobile Workers:

- Mobile hotspot or tethering required for most co-pilots

- Data consumption: 50-500 MB per 8-hour workday depending on usage intensity

- Offline work requires planning (download materials in advance, access-independent tools for backup)
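To relate the per-day data figure to network capacity, the daily total can be converted into an average bit rate. This sketch assumes traffic is spread evenly across the workday, which real usage is not (requests arrive in bursts), so the result is a lower bound for planning rather than a sizing recommendation; `average_mbps` is a hypothetical helper using decimal units.

```python
def average_mbps(megabytes_per_day: float, hours: float = 8.0) -> float:
    """Average bit rate in megabits per second for a day's data volume.

    Uses decimal units: 1 MB = 8 megabits.
    """
    return megabytes_per_day * 8 / (hours * 3600)


for mb in (50, 500):
    per_user = average_mbps(mb)
    print(f"{mb} MB/day -> {per_user:.3f} Mbps per user, "
          f"{per_user * 100:.1f} Mbps per 100 users")
# 50 MB/day -> 0.014 Mbps per user, 1.4 Mbps per 100 users
# 500 MB/day -> 0.139 Mbps per user, 13.9 Mbps per 100 users
```

Peak demand will sit well above these averages, which is why capacity planning adds headroom on top of them.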

For Organizations:

- Reliable internet connectivity essential for AI co-pilot productivity gains

- Bandwidth requirements: 10-50 Mbps per 100 users