Introduction

The cybersecurity landscape of 2025 represents a critical inflection point where artificial intelligence has become both the primary weapon and the essential defense. As generative AI enables attackers to create convincing deepfakes, synthetic identities, and adaptive malware at unprecedented scale, enterprises face threats that traditional security systems cannot detect. Yet this same AI technology, when deployed defensively, offers capabilities that fundamentally transform how organizations identify, prevent, and respond to cyber attacks.

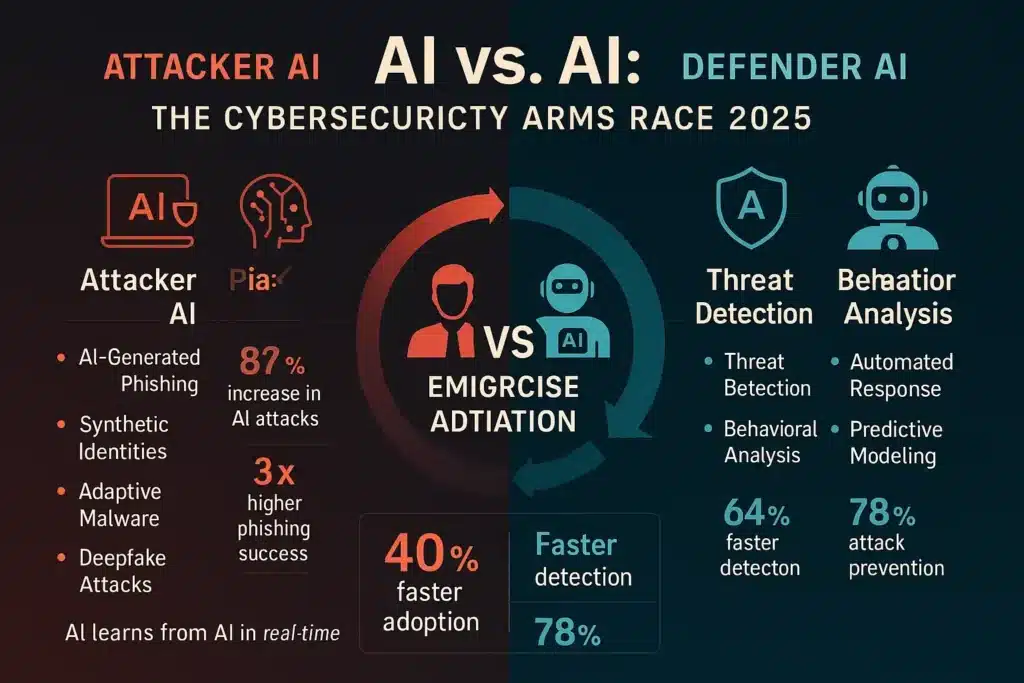

AI cybersecurity in 2025 is defined by an escalating arms race in which machine learning models face off against other machine learning models, each learning from the other’s tactics in real time. According to IBM’s X-Force Threat Intelligence Index 2025, AI-assisted attacks increased 87% year-over-year, while organizations adopting AI-powered defenses cut the average time to identify and contain a breach by 64%, from 277 days to 98 days.

This comprehensive analysis examines how generative AI models defend against synthetic threats, what AI-powered Security Operations Centers (SOCs) actually do in practice, which regulatory frameworks govern AI security deployments, and practical strategies for implementing AI defense systems while managing ethical risks and false positives.

The stakes are extraordinarily high. Microsoft’s 2025 Digital Defense Report documents over 600 million identity attacks daily—a 142% increase from 2023—with AI-generated phishing campaigns showing 3X higher success rates than human-crafted attempts. Yet organizations leveraging AI detection systems identified and blocked 78% of these attacks before any data compromise occurred.

Understanding how to deploy AI defensively while navigating compliance requirements, managing algorithmic bias, and maintaining human oversight separates organizations that survive the next generation of cyber threats from those that become headlines.

1. The AI vs. AI Cybersecurity Arms Race

By 2025, the cybersecurity industry has entered an unprecedented phase where artificial intelligence both enables and defends against the most sophisticated attacks in history. This dual reality—AI as weapon and AI as shield—creates a dynamic arms race fundamentally different from previous cybersecurity eras.

The Attacker’s AI Advantage

Generative Models Enable Unprecedented Threat Sophistication

Threat actors now leverage the same large language models and generative AI tools available to legitimate users, but weaponized for malicious purposes:

AI-Powered Phishing Campaigns:

- Perfect grammar and localization in any language

- Personalized content based on scraped social media data

- A/B testing of phishing messages at massive scale

- Success rates 3X higher than traditional phishing (Microsoft 2025 data)

Synthetic Identity Creation:

- AI-generated faces, voices, and documents passing verification systems

- Complete digital personas with consistent history across platforms

- Ability to create thousands of unique identities per day

- Used for fraud, disinformation, and credential theft

Adaptive Malware:

- Code that mutates to evade signature-based detection

- Polymorphic behavior that changes based on target environment

- AI models that learn from failed intrusion attempts

- Autonomous decision-making without command-and-control servers

Deepfake-Enabled Social Engineering:

- Voice cloning of executives for fraudulent wire transfers (average loss: $243,000 per incident)

- Video deepfakes for fake video calls with apparent company leadership

- Synthetic media used in business email compromise (BEC) attacks

- Real-time voice manipulation during live calls

The Defender’s AI Response

Why Traditional Security Fails Against AI Threats

Legacy cybersecurity approaches prove inadequate against AI-powered attacks:

Signature-Based Detection: Cannot identify never-before-seen threats or malware that constantly mutates its code signature.

Rule-Based Systems: Rigid logic cannot adapt to attackers using AI to probe and learn defensive patterns, then adjust tactics in real time.

Human Analysis Alone: Security analysts drowning in alerts (average SOC analyst reviews 11,000+ alerts daily per IBM data) cannot keep pace with AI-generated attack volume.

Static Perimeter Defense: Fails when attackers use AI to find and exploit zero-day vulnerabilities faster than patches can be deployed.

Enter Defensive AI:

Organizations now deploy AI systems that learn attacker behavior, identify anomalies in massive datasets, predict attack patterns, and automate response at machine speed. As detailed in our analysis of how AI co-pilots are transforming employment models, AI augments rather than replaces human security professionals, handling routine threat detection while analysts focus on complex investigations and strategic defense.

Verified Attack Statistics (2025)

According to authoritative cybersecurity research:

IBM X-Force Threat Intelligence Index 2025:

- 87% year-over-year increase in AI-assisted attacks

- Average cost of data breach: $4.88 million (8% increase from 2024)

- AI and automation reduced breach costs by average of $2.22 million for organizations using these technologies

- 277 days average time to identify and contain breach (reduced to 98 days with AI)

Microsoft Digital Defense Report 2025:

- 600+ million identity attacks per day (142% increase year-over-year)

- 89% of organizations experienced identity-based attacks

- AI-generated phishing attempts grew 145% in 12 months

- Password spray attacks increased 3X with AI automating credential testing

Gartner 2025 Cybersecurity Forecast:

- By end of 2025, 70% of organizations will use AI for at least one cybersecurity use case

- AI-augmented cybersecurity market reaching $46.3 billion (38% CAGR)

- 60% of large enterprises deploying AI-powered Security Operations Centers

Verizon 2025 Data Breach Investigations Report (DBIR):

- Social engineering involved in 36% of breaches (AI increasing sophistication)

- Median time for attackers to compromise credentials: < 1 hour

- 86% of breaches motivated by financial gain

- Ransomware involved in 24% of all breaches

These verified statistics demonstrate both the severity of AI-powered threats and the effectiveness of AI-driven defenses when properly implemented.

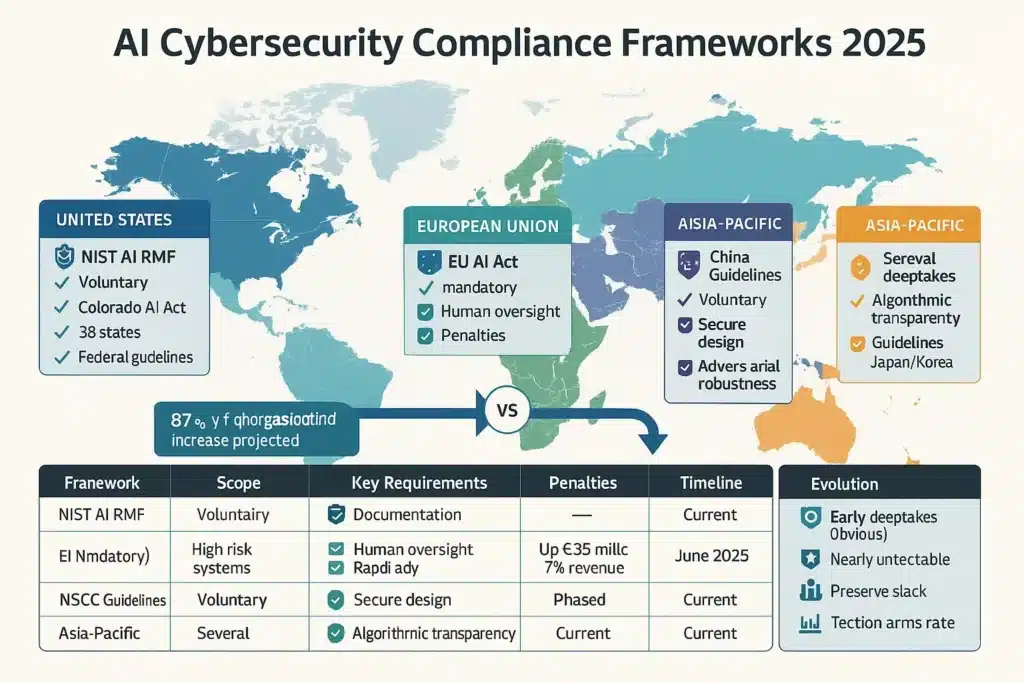

The Regulatory Context

Governments worldwide recognize AI cybersecurity as critical infrastructure. The intersection of AI capabilities with national security concerns drives rapid regulatory development:

United States: NIST AI Risk Management Framework (RMF 1.0) provides voluntary guidelines for trustworthy AI development and deployment, with cybersecurity applications receiving particular scrutiny.

European Union: The EU AI Act takes a risk-based approach. Cybersecurity AI systems that qualify as “high-risk” require conformity assessments, human oversight, and technical documentation. Our comprehensive EU AI Act 2025 compliance checklist details requirements for security AI deployments.

United Kingdom: National Cyber Security Centre (NCSC) published “Guidelines for secure AI system development” emphasizing adversarial robustness testing.

China: Measures for the Management of Algorithmic Recommendations require algorithmic transparency and security assessments for AI systems.

This regulatory fragmentation creates compliance challenges for multinational organizations deploying AI security tools across jurisdictions. Our analysis of AI regulation in the U.S. 2025 and the federal vs. state divide explores the complex American landscape where federal guidelines compete with state-specific requirements.

2. How Generative AI Defends Against Synthetic Threats

Generative AI models—the same technology enabling sophisticated attacks—provide defenders with unprecedented threat detection capabilities. Understanding how these systems work reveals both their power and limitations.

Core Defensive AI Technologies

1. Anomaly Detection Through Behavioral Analysis

Modern AI security systems analyze massive datasets to identify deviations from normal patterns:

How It Works:

- Baseline models learn “normal” network traffic, user behavior, and system activity

- Unsupervised learning algorithms detect statistical outliers

- Real-time comparison flags unusual patterns (e.g., employee accessing files at 3 AM from new location)

- Continuous retraining adapts to evolving legitimate behavior

Example Application: Microsoft Defender for Endpoint uses behavioral AI to detect “living off the land” attacks where hackers use legitimate system tools (PowerShell, WMI) for malicious purposes. The AI identifies suspicious command combinations and execution contexts that human analysts can easily miss.

Effectiveness: IBM reports organizations using AI behavioral analytics reduce dwell time (time attackers remain undetected in networks) from 277 days to 98 days—a 64% improvement.
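
The baseline-and-outlier idea described above can be sketched in a few lines. This is a minimal illustration, not how any vendor's product works: it assumes a single numeric feature (files accessed per hour), a hypothetical history for one user, and a simple z-score test in place of a trained unsupervised model.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Learn a simple baseline (mean and standard deviation) from past observations."""
    return {"mean": mean(history), "stdev": stdev(history)}

def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    if baseline["stdev"] == 0:
        return value != baseline["mean"]
    z = abs(value - baseline["mean"]) / baseline["stdev"]
    return z > z_threshold

# Hypothetical data: files accessed per hour by one user over recent workdays.
history = [12, 15, 11, 14, 13, 16, 12, 15, 14, 13]
baseline = build_baseline(history)

print(is_anomalous(14, baseline))   # typical activity
print(is_anomalous(240, baseline))  # sudden bulk access
```

Continuous retraining would correspond to rebuilding the baseline on a rolling window, so that legitimate changes in behavior stop triggering alerts.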

2. Natural Language Processing for Phishing Detection

Large language models analyze email and message content for sophisticated phishing indicators:

Advanced Detection Methods:

- Semantic analysis identifying urgency manipulation and social engineering tactics

- Stylometry detecting inconsistencies in writing style (e.g., executive’s email with unusual phrasing)

- Context awareness comparing messages to sender’s historical communication patterns

- Link and attachment analysis predicting malicious URLs before users click

Real-World Performance: According to Proofpoint’s 2025 State of the Phish report, organizations using AI email security blocked 78% of sophisticated phishing attempts that bypassed traditional filters, including those using AI-generated content.
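
To make the detection signals above concrete, here is a deliberately simple, rule-based sketch. It is not an LLM and not any vendor's method: the term list, the function name `phishing_signals`, and the example domains are all illustrative assumptions. Production systems would learn these indicators from data instead of hard-coding them.

```python
from urllib.parse import urlparse

# Hypothetical urgency phrases; a real system would learn these from labeled mail.
URGENCY_TERMS = ("urgent", "immediately", "wire transfer", "act now", "verify your account")

def phishing_signals(sender_address, expected_domain, body, links):
    """Collect simple indicators: sender mismatch, urgency language, external links."""
    expected = expected_domain.lower()
    signals = []
    sender_domain = sender_address.rsplit("@", 1)[-1].lower()
    if sender_domain != expected:
        signals.append("sender_domain_mismatch")
    lowered = body.lower()
    if any(term in lowered for term in URGENCY_TERMS):
        signals.append("urgency_language")
    for link in links:
        host = (urlparse(link).hostname or "").lower()
        if host and host != expected and not host.endswith("." + expected):
            signals.append("external_link")
            break
    return signals

suspicious = phishing_signals(
    "ceo@examp1e.com", "example.com",
    "Urgent: process this wire transfer immediately.",
    ["http://pay.examp1e.com/invoice"],
)
print(suspicious)
```

Each signal on its own is weak. The value comes from combining them with the sender's historical communication patterns, which this sketch does not model.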

3. Predictive Threat Intelligence

AI models analyze global threat data to predict future attack patterns:

Data Sources:

- Honeypots and threat feeds from security vendors

- Dark web monitoring for emerging exploit kits and vulnerabilities

- Analysis of attacker tactics, techniques, and procedures (TTPs)

- Correlation of seemingly unrelated security events across organizations

Predictive Capabilities:

- Forecasting which vulnerabilities attackers will likely target next

- Identifying organizations at highest risk based on sector, geography, and defensive posture

- Predicting optimal timing for specific attack types (e.g., tax season phishing)

- Estimating attacker capabilities and resources

Google’s Mandiant Advantage: Google’s threat intelligence platform uses AI to analyze 1+ trillion security events daily, identifying emerging threats 19 days faster on average than industry standard.

4. Automated Vulnerability Assessment

AI systems continuously scan code, configurations, and infrastructure for security weaknesses:

Capabilities:

- Static and dynamic code analysis identifying security flaws

- Configuration drift detection spotting unauthorized changes

- Dependency analysis tracking vulnerable libraries in software supply chains

- Penetration testing automation finding exploitable weaknesses

GitHub Advanced Security: Uses AI-powered code scanning to identify security vulnerabilities in repositories, automatically suggesting fixes and prioritizing critical issues. Detects 73% more true vulnerabilities compared to traditional static analysis tools (GitHub 2025 data).
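
Of the capabilities listed above, configuration drift detection is the easiest to illustrate. The sketch below assumes configurations are available as plain dictionaries and compares a current snapshot against an approved baseline. The setting names are hypothetical, and real tools add scheduling, change attribution, and remediation on top of this core comparison.

```python
import hashlib
import json

_MISSING = object()  # sentinel so a missing key differs from a key set to None

def fingerprint(config):
    """Hash a configuration dict in a key-order-independent way."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def detect_drift(approved, current):
    """Return the sorted list of keys whose values differ from the approved baseline."""
    if fingerprint(approved) == fingerprint(current):
        return []
    keys = set(approved) | set(current)
    return sorted(k for k in keys if approved.get(k, _MISSING) != current.get(k, _MISSING))

# Hypothetical server settings.
approved = {"ssh_root_login": False, "tls_min_version": "1.2", "open_ports": [22, 443]}
current = {"ssh_root_login": True, "tls_min_version": "1.2", "open_ports": [22, 443, 3389]}

print(detect_drift(approved, current))
```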

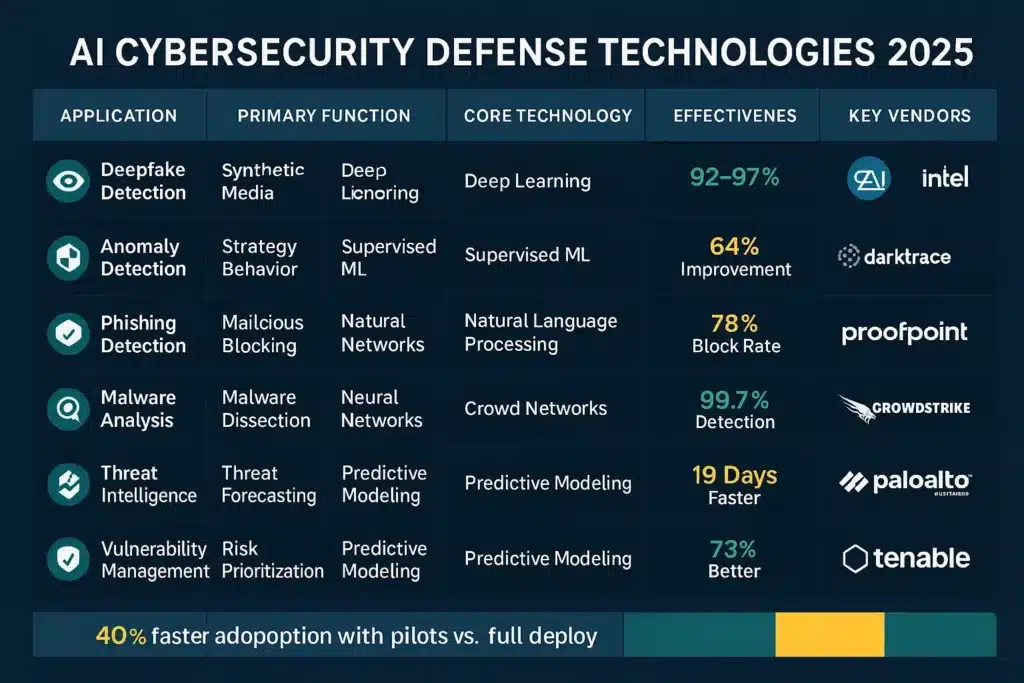

Defensive AI Application Matrix

| AI Defense Application | Primary Function | Technology | Effectiveness Evidence | Leading Vendors |
|---|---|---|---|---|
| Deepfake Detection | Identify synthetic media (audio, video, images) | Computer vision, audio analysis, artifact detection | 92-97% accuracy on benchmark datasets | Intel FakeCatcher, Microsoft Video Authenticator, Sensity |
| Behavioral Anomaly Detection | Flag unusual user/system behavior | Unsupervised learning, clustering algorithms | 64% reduction in dwell time | Darktrace, Microsoft Defender, IBM QRadar |
| Phishing Detection | Identify social engineering attempts | NLP, semantic analysis, link analysis | 78% block rate on sophisticated attacks | Proofpoint, Mimecast, Abnormal Security |
| Malware Analysis | Detect and classify malicious code | Deep learning, sandboxing, static/dynamic analysis | 99.7% detection rate with <0.01% false positives | CrowdStrike, SentinelOne, Microsoft Defender |
| Threat Intelligence | Predict emerging threats | Data mining, pattern recognition, predictive modeling | 19 days faster threat identification | Google Mandiant, Recorded Future, ThreatQuotient |
| Incident Response | Automate containment and remediation | Reinforcement learning, playbook generation | 60% reduction in mean time to respond | Palo Alto Cortex XSOAR, Splunk Phantom, IBM Resilient |
| Vulnerability Management | Identify and prioritize security weaknesses | Code analysis, configuration assessment, exploit prediction | 73% improvement in vulnerability detection | Tenable.io, Qualys VMDR, Rapid7 InsightVM |

Note: Performance metrics vary significantly based on deployment context, data quality, and threat sophistication. Vendor claims should be validated through independent testing and pilot programs. All systems require human oversight for high-stakes decisions.

Integration Challenges

While powerful individually, AI defense tools must integrate into existing security architectures:

Data Silos: AI models require access to comprehensive data across endpoint, network, cloud, and identity systems. Many organizations struggle with fragmented security telemetry.

Alert Fatigue: Even AI systems can generate overwhelming alert volumes without proper tuning. Effective deployment requires careful threshold calibration and alert correlation.

Model Drift: AI trained on historical data may fail against novel attack vectors. Continuous retraining with fresh threat intelligence is essential.

False Positives: Over-sensitive AI blocks legitimate business activity, creating user friction and security team burnout from investigating false alarms.

Successful AI security implementation requires addressing these challenges through careful planning, proper training data, continuous validation, and clear escalation paths for ambiguous cases.
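
Threshold calibration, mentioned above as the remedy for alert fatigue and false positives, amounts to measuring the trade-off at several cut-offs before choosing one. The following sketch assumes a small, hypothetical set of model scores with analyst-confirmed labels. It shows the mechanics only and says nothing about which threshold suits a given environment.

```python
def confusion_at(threshold, scored_alerts):
    """Count (tp, fp, fn, tn) when alerts scoring >= threshold are raised."""
    tp = fp = fn = tn = 0
    for score, is_malicious in scored_alerts:
        raised = score >= threshold
        if raised and is_malicious:
            tp += 1
        elif raised:
            fp += 1
        elif is_malicious:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def sweep(scored_alerts, thresholds):
    """Return (threshold, precision, recall) rows for each candidate threshold."""
    rows = []
    for t in thresholds:
        tp, fp, fn, _ = confusion_at(t, scored_alerts)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        rows.append((t, precision, recall))
    return rows

# Hypothetical (model score, confirmed malicious?) pairs from past investigations.
labeled = [(0.95, True), (0.90, True), (0.80, False), (0.70, True),
           (0.60, False), (0.40, False), (0.30, False), (0.20, False)]

for threshold, precision, recall in sweep(labeled, [0.5, 0.85]):
    print(f"threshold={threshold:.2f} precision={precision:.2f} recall={recall:.2f}")
```

A lower threshold catches every confirmed threat in this sample at the cost of more false alarms, while the higher one removes the false alarms but misses a real incident.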

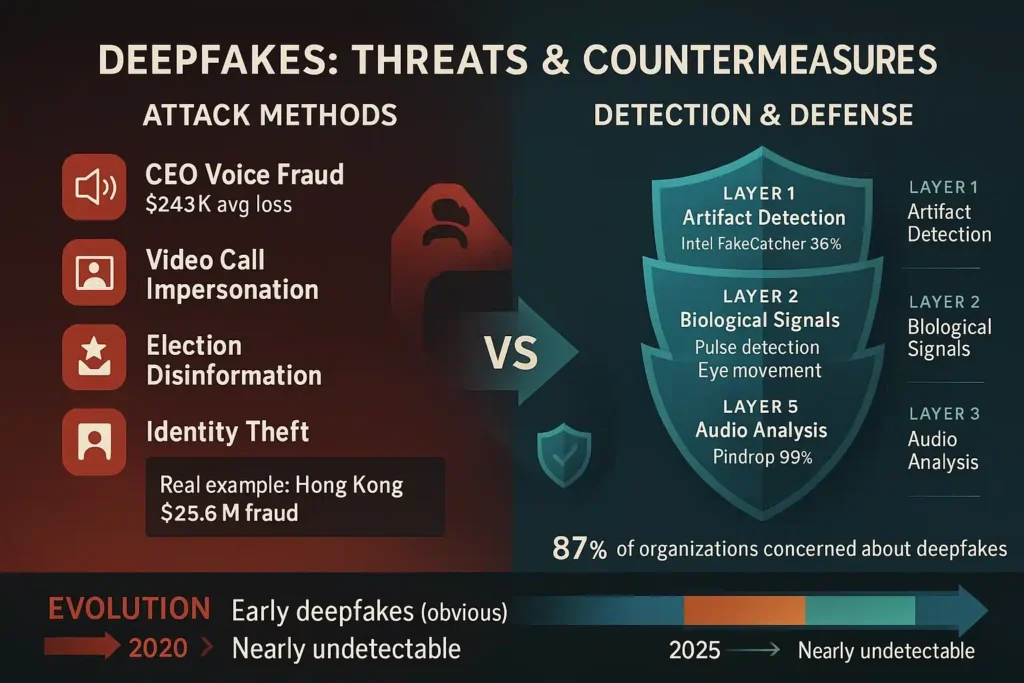

3. The Deepfake Challenge: Detection & Countermeasures

Deepfakes—synthetic media created by AI—represent one of the most concerning cybersecurity threats of 2025, with implications spanning fraud, disinformation, and social engineering attacks.

The Deepfake Threat Landscape

What Makes Deepfakes Dangerous:

Financial Fraud:

- Voice cloning enables CEO fraud where attackers impersonate executives to authorize fraudulent wire transfers

- Average loss per deepfake-enabled BEC attack: $243,000 (FBI IC3 2025 data)

- Video deepfakes used in fake video calls to verify identities during transactions

Disinformation:

- Synthetic media of public figures making false statements

- Manipulation of public opinion during elections and crises

- Erosion of trust in authentic video/audio evidence

Identity Theft:

- AI-generated faces bypass facial recognition systems

- Synthetic voice enables automated social engineering at scale

- Fake government IDs and documents for account opening fraud

Reputational Damage:

- Non-consensual deepfake content targeting individuals

- Corporate sabotage through fake executive statements

- Fake product reviews and testimonials

Real-World Deepfake Attack Examples (2019-2025)

Hong Kong Multinational CFO Fraud (February 2024):

- Finance worker received video call invitation from “CFO”

- Multiple “executives” on call were deepfakes

- Worker authorized $25.6 million transfer

- Attackers used deepfake video and voice in real-time

UK Energy Company Voice Deepfake (2019):

- CEO’s voice cloned using publicly available speech samples

- Called subsidiary manager requesting urgent fund transfer

- Manager believed authentic voice and authorized payment

- €220,000 (about $243,000) transferred before fraud discovered

Election Disinformation (2024-2025):

- Synthetic audio of political candidates making controversial statements

- Deepfake videos manipulated to show candidates in compromising situations

- Rapid spread on social media before debunking is possible

- Impact on voter perception documented in several elections

Detection Technologies

Visual Deepfake Detection:

Artifact Analysis: Modern detection systems identify subtle artifacts in synthetic images/videos:

- Irregular eye blinking patterns (humans blink 15-20 times per minute; early deepfakes didn’t blink naturally)

- Lighting inconsistencies across face

- Unnatural teeth appearance

- Mismatched facial landmarks when head turns

- Background anomalies and edge artifacts
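
As a toy illustration of artifact analysis, the blink-rate cue above can be turned into a check. The sketch assumes blink timestamps have already been extracted by some upstream face-tracking step (not shown). The function names and the tolerance value are hypothetical. This is a weak signal on its own, since newer deepfakes can blink naturally.

```python
def blinks_per_minute(blink_timestamps, clip_seconds):
    """Convert a list of blink timestamps (seconds) into a per-minute rate."""
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return len(blink_timestamps) * 60.0 / clip_seconds

def blink_rate_suspicious(blink_timestamps, clip_seconds, low=15.0, high=20.0, tolerance=0.5):
    """Flag clips whose blink rate falls far outside the 15-20 per minute range."""
    rate = blinks_per_minute(blink_timestamps, clip_seconds)
    return rate < low * (1 - tolerance) or rate > high * (1 + tolerance)

# Hypothetical 30-second clips.
print(blink_rate_suspicious([12.4], 30))                          # one blink in 30 s
print(blink_rate_suspicious([2, 6, 9, 13, 17, 21, 25, 29], 30))   # eight blinks in 30 s
```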

Biological Signal Detection: Advanced systems detect absence of authentic physiological signals:

- Lack of natural pulse visible in facial blood vessels

- Missing respiration micro-movements

- Unnatural eye saccades (rapid eye movements)

- Inconsistent skin texture

Intel RealSense ID + FakeCatcher:

- Claims 96% accuracy detecting deepfake videos

- Uses photoplethysmography (PPG) to detect blood flow in face

- Real-time processing (detecting fakes in milliseconds)

- Deployed at some airports and high-security facilities

Microsoft Video Authenticator:

- Analyzes blend boundaries and grayscale elements

- Provides confidence score (0-100%) that video is manipulated

- Integrated into Microsoft Defender

- Accuracy: 92-95% on benchmark datasets (varies by deepfake sophistication)

Audio Deepfake Detection:

Acoustic Analysis:

- Frequency spectrum inconsistencies

- Unnatural prosody (rhythm and intonation)

- Artifacts from audio generation process

- Voice consistency analysis across longer samples

Behavioral Analysis:

- Speaking pattern deviations from known samples

- Unusual pauses or speed variations

- Context-inappropriate language use

- Sentiment mismatch with supposed content

Pindrop Voice Intelligence:

- Analyzes 1,380 unique voice features

- Detects synthetic voice in real-time calls

- Used by major banks and call centers

- Claims 99% accuracy identifying deepfake voice with a false positive rate below 1%

Watermarking and Provenance Solutions

Proactive Authentication:

Rather than detecting fakes after creation, some solutions mark content at the source, either by watermarking AI-generated output or by attaching provenance records to genuine content at capture:

DeepMind SynthID:

- Embeds imperceptible watermarks in AI-generated images and audio

- Watermarks survive compression and minor editing

- Can be detected even from screenshots

- Now integrated into Google’s AI image generators

Meta AudioSeal:

- Watermarks AI-generated audio with inaudible signals

- Robust against lossy compression (MP3, etc.)

- Detectable even after social media platform processing

- Open-sourced to enable industry adoption

Content Credentials (C2PA Standard):

- Industry coalition (Adobe, Microsoft, BBC, others) creating metadata standard

- Embeds provenance information (who created, when, what tools used, any edits)

- Creates chain of custody for digital content

- Supported in Adobe Creative Cloud applications and Microsoft tools

Limitations:

- Only authenticates content from participating platforms

- Requires widespread adoption to be effective

- Can be stripped from files (though that itself is suspicious)

- Doesn’t help with content created before standard adoption
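
The chain-of-custody idea behind Content Credentials can be illustrated with a simple hash chain. This sketch is not the C2PA format: the record fields and function names are assumptions, and it omits the cryptographic signatures that real provenance manifests rely on. It shows only why editing an earlier record becomes detectable.

```python
import hashlib
import json

def _hash_record(record):
    """Hash every field of a record except its own hash."""
    body = {k: v for k, v in record.items() if k != "record_hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def add_record(chain, actor, action, content_bytes):
    """Append a provenance record linked to the previous record's hash."""
    record = {
        "actor": actor,
        "action": action,
        "content_hash": hashlib.sha256(content_bytes).hexdigest(),
        "prev_hash": chain[-1]["record_hash"] if chain else "",
    }
    record["record_hash"] = _hash_record(record)
    chain.append(record)
    return chain

def verify_chain(chain):
    """Check that every record is intact and correctly linked to its predecessor."""
    prev = ""
    for record in chain:
        if record["prev_hash"] != prev or record["record_hash"] != _hash_record(record):
            return False
        prev = record["record_hash"]
    return True

# Hypothetical history of one image.
history = []
add_record(history, "camera-app", "captured", b"raw image bytes")
add_record(history, "photo-editor", "cropped", b"cropped image bytes")
print(verify_chain(history))
```

Without signatures, anyone able to rewrite the entire chain could still forge a consistent history, which is why the limitation about stripped or missing credentials still applies.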

Organizational Defense Strategies

Multi-Layered Verification:

Organizations should implement defense-in-depth against deepfake threats:

For Financial Transactions:

- Multi-factor authentication beyond voice/video

- Callback procedures using known contact information

- Secondary verification through different communication channel

- Flagging high-value or unusual transactions for extra scrutiny
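
These transaction controls can be expressed as an explicit policy so that no single voice or video interaction is enough to release funds. The sketch below is one possible encoding under stated assumptions: the `TransferRequest` fields, the channel names, and the 10,000 threshold are all hypothetical.

```python
from dataclasses import dataclass

HIGH_VALUE_THRESHOLD = 10_000  # hypothetical cut-off, in the organization's currency

@dataclass
class TransferRequest:
    amount: float
    requested_via: str              # e.g. "video_call", "phone", "email"
    payee_is_new: bool
    callback_confirmed: bool        # confirmed by calling a number already on file
    second_channel_confirmed: bool  # confirmed through a different channel

def required_checks(req):
    """List the checks a request must pass before funds are released."""
    checks = {"mfa"}
    if req.requested_via in {"video_call", "phone"}:
        checks.add("callback")  # voice and video can be spoofed
    if req.amount >= HIGH_VALUE_THRESHOLD or req.payee_is_new:
        checks.update({"callback", "second_channel"})
    return sorted(checks)

def may_release(req, mfa_passed):
    """Release only when every required check has been satisfied."""
    satisfied = {
        "mfa": mfa_passed,
        "callback": req.callback_confirmed,
        "second_channel": req.second_channel_confirmed,
    }
    return all(satisfied[check] for check in required_checks(req))

request = TransferRequest(25_600_000, "video_call", True, False, False)
print(required_checks(request), may_release(request, mfa_passed=True))
```

Under a policy like this, a convincing video call alone would not release a large transfer, because the callback and second-channel confirmations are still outstanding.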

For Communications:

- Code words or phrases established in advance for sensitive requests

- Digital signatures on official communications

- Verification protocols for unexpected urgent requests

- Employee training on deepfake risks and verification procedures
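
Message authentication for sensitive requests can be sketched with Python's standard library. Note the substitution: this example uses a shared-secret HMAC as a simplified stand-in for public-key digital signatures, and the key and message are placeholders. A real deployment would manage keys in a secrets store and would likely prefer asymmetric signatures.

```python
import hashlib
import hmac

def sign_message(secret_key: bytes, message: str) -> str:
    """Produce an authentication tag for a message using HMAC-SHA256."""
    return hmac.new(secret_key, message.encode("utf-8"), hashlib.sha256).hexdigest()

def verify_message(secret_key: bytes, message: str, tag: str) -> bool:
    """Check a tag in constant time to avoid leaking information through timing."""
    expected = sign_message(secret_key, message)
    return hmac.compare_digest(expected, tag)

# Placeholder key for illustration only; never hard-code real secrets.
key = b"example-shared-secret"
request_text = "Approve payment to vendor 4471"
tag = sign_message(key, request_text)

print(verify_message(key, request_text, tag))
print(verify_message(key, "Approve payment to vendor 9999", tag))
```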

For Media/PR:

- Source verification before publishing content

- Cross-reference with multiple independent sources

- C2PA content credentials checking where available

- Transparency about uncertainty when provenance unclear

Microsoft Responsible AI Detection Tools: Integrated into Microsoft 365:

- Defender for Office 365 scans email attachments for synthetic media

- Teams flags potentially manipulated video in calls

- Outlook provides content authenticity indicators

- Azure Cognitive Services offers deepfake detection API for custom applications

The rapid evolution of deepfake technology means detection remains an ongoing challenge. Organizations must combine technological defenses with human judgment, process controls, and continuous employee education.

4. AI-Powered Security Operations Centers (AIOps)

Traditional Security Operations Centers (SOCs) struggle with overwhelming alert volumes, analyst burnout, and inability to respond at the speed of automated attacks. AI-powered SOCs, sometimes grouped under the broader AIOps label (AI for IT Operations), transform this model through intelligent automation.

The Traditional SOC Crisis

Challenges Facing Human Analysts:

Alert Overload:

- Average SOC analyst reviews 11,000+ security alerts daily (IBM data)

- Only 22% of alerts investigated due to volume

- False positive rates of 40-60% in many environments

- Critical threats buried in noise

Skill Shortage:

- Global cybersecurity workforce gap: 4 million unfilled positions (ISC² 2025)

- Median time to hire security analyst: 6+ months

- High burnout rates (average SOC analyst tenure: 2.1 years)

- Significant salary pressure

Slow Response Times:

- Average time to detect breach: 277 days without AI (IBM)

- Incident triage can take hours or days

- Manual investigation of false positives wastes 25-30% of analyst time

- Critical seconds/minutes lost in fast-moving attacks

Inconsistent Analysis:

- Quality depends on individual analyst experience

- Overnight/weekend shifts often less experienced

- Fatigue leads to missed detections

- Lack of institutional knowledge capture

How AI Transforms SOC Operations

1. Intelligent Alert Triage

AI systems automatically categorize, prioritize, and correlate alerts:

Functionality:

- Machine learning models trained on historical incident data

- Automatic classification (true positive, false positive, benign, critical)

- Risk scoring based on asset importance, threat severity, likelihood

- Alert correlation across multiple systems and timeframes

Impact: According to IBM Security, AI-powered alert triage reduces false positives by 65% and accelerates time to respond by 60%.
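
Risk scoring and alert correlation, as listed above, can be sketched without any machine learning. The multiplicative formula, the severity weights, and the alert fields below are illustrative assumptions rather than any product's scoring model. In a deployed system the likelihood value would typically come from a trained classifier.

```python
from collections import defaultdict

SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def risk_score(alert):
    """Combine asset importance (1-5), threat severity, and likelihood (0-1)."""
    return alert["asset_criticality"] * SEVERITY_WEIGHT[alert["severity"]] * alert["likelihood"]

def triage(alerts, top_n):
    """Return the top_n alerts ordered from highest to lowest risk."""
    return sorted(alerts, key=risk_score, reverse=True)[:top_n]

def correlate_by_host(alerts):
    """Group alert ids by host so related activity is reviewed together."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert["host"]].append(alert["id"])
    return dict(groups)

# Hypothetical alerts from different tools.
alerts = [
    {"id": 1, "host": "db01", "asset_criticality": 5, "severity": "high", "likelihood": 0.8},
    {"id": 2, "host": "laptop17", "asset_criticality": 2, "severity": "critical", "likelihood": 0.3},
    {"id": 3, "host": "db01", "asset_criticality": 5, "severity": "medium", "likelihood": 0.5},
    {"id": 4, "host": "kiosk03", "asset_criticality": 1, "severity": "low", "likelihood": 0.9},
]

print([a["id"] for a in triage(alerts, top_n=2)])
print(correlate_by_host(alerts))
```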

Example: IBM QRadar Advisor with Watson:

- Analyzes alerts in context of threat intelligence

- Provides natural language explanation of potential threat

- Suggests investigation steps and remediation actions

- Reduces investigation time from hours to minutes

2. Automated Incident Response

AI systems execute predefined playbooks and adapt based on threat characteristics:

Common Automated Responses:

- Isolating compromised endpoints from network

- Blocking malicious IP addresses or domains

- Forcing password resets for potentially compromised accounts

- Creating forensic images for investigation

- Notifying relevant stakeholders

Adaptive Decision-Making: Advanced systems use reinforcement learning to optimize response strategies:

- Learn which actions most effectively contain specific threat types

- Balance security (aggressive isolation) vs. business continuity

- Adjust based on risk appetite and asset criticality

- Escalate to humans when confidence is low
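
The balance between automation and human escalation can be made explicit in code. The sketch below is rule-based, not reinforcement learning: the playbook names, action names, and the 0.9 confidence floor are hypothetical. It only illustrates confidence gating and an approval step for business-critical assets.

```python
# Hypothetical playbooks mapping a threat type to ordered response actions.
PLAYBOOKS = {
    "ransomware": ["isolate_endpoint", "create_forensic_image", "notify_stakeholders"],
    "credential_theft": ["force_password_reset", "notify_stakeholders"],
    "malicious_ip": ["block_ip"],
}

def plan_response(threat_type, confidence, asset_critical, min_confidence=0.9):
    """Choose between automatic response, human approval, and analyst escalation."""
    actions = PLAYBOOKS.get(threat_type)
    if actions is None or confidence < min_confidence:
        # Unknown threat or low confidence: hand the case to a human analyst.
        return {"mode": "escalate_to_analyst", "actions": []}
    if asset_critical and "isolate_endpoint" in actions:
        # Isolating a business-critical asset affects continuity, so require sign-off.
        return {"mode": "await_approval", "actions": actions}
    return {"mode": "automatic", "actions": actions}

print(plan_response("malicious_ip", 0.95, asset_critical=False))
print(plan_response("ransomware", 0.97, asset_critical=True))
print(plan_response("credential_theft", 0.60, asset_critical=False))
```

A learning system would adjust the confidence floor and the choice of actions from observed outcomes. The fixed values here simply mark where those decisions sit.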

Palo Alto Networks Cortex XSOAR:

- Orchestrates response across 600+ security tools

- Executes complex multi-step response workflows

- Self-learning system improving playbooks over time

- Reduces mean time to respond (MTTR) by 73% (vendor claim, varies by customer)

3. Threat Hunting Automation

Proactive search for threats that evade automated detection:

AI-Assisted Hunting:

- Natural language queries (“Show me unusual PowerShell executions”)

- Hypothesis generation based on threat intelligence

- Behavioral baseline deviations

- Pattern discovery in historical data

CrowdStrike Threat Graph:

- Analyzes 1+ trillion endpoint events per week

- Identifies threat actor patterns across global customer base

- Provides context-rich detections highlighting attacker TTPs

- Enables proactive hunting based on emerging threats

4. Continuous Learning and Adaptation

AI SOC systems improve over time through feedback loops:

Learning Mechanisms:

- Analyst feedback on AI decisions (true/false positives)

- Outcomes of investigations (benign, contained, compromised)

- Integration of new threat intelligence

- Adaptation to organizational environment changes

Result: Detection accuracy improves 3-5% per quarter in well-managed deployments as models learn organization-specific patterns.

Real-World SOC AI Implementation

Case Study: Large Financial Institution (5,000 employees)

Pre-AI Baseline:

- 15-person SOC team working 24/7/365

- 50,000+ alerts per day from SIEM, EDR, network tools

- 8% of alerts investigated (4,000 daily)

- Mean time to detect (MTTD): 14 hours

- Mean time to respond (MTTR): 22 hours

- Analyst burnout leading to 40% annual turnover

AI Implementation (18-month deployment):

- Microsoft Sentinel (cloud-native SIEM with AI)

- CrowdStrike Falcon with AI threat detection

- Splunk Phantom (now Splunk SOAR) for orchestration

- Custom ML models trained on organization-specific data

Results After 12 Months:

- Alert volume reduced 72% through intelligent filtering

- 95% of high-priority alerts now investigated

- MTTD reduced to 2.3 hours (84% improvement)

- MTTR reduced to 4.1 hours (81% improvement)

- Analyst capacity freed up: equivalent to 7 FTEs

- Turnover dropped to 12% (closer to industry average)
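
The improvement percentages in this case study follow directly from the before and after figures. A short check, using only the numbers quoted above:

```python
def percent_reduction(before, after):
    """Percentage by which a metric fell from its baseline value."""
    return (before - after) / before * 100

mttd_improvement = percent_reduction(14, 2.3)   # hours
mttr_improvement = percent_reduction(22, 4.1)   # hours
investigated_before = 50_000 * 8 // 100         # 8% of 50,000 daily alerts
alerts_after_filtering = 50_000 * (100 - 72) // 100

print(round(mttd_improvement), round(mttr_improvement))
print(investigated_before, alerts_after_filtering)
```

The rounded results match the reported 84% and 81% improvements. The 72% filtering step would leave roughly 14,000 alerts per day from the original 50,000.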

Key Success Factors:

- Executive sponsorship and adequate budget ($2.8M over 18 months)

- 6-month pilot before full deployment

- Intensive analyst training (80 hours per person)

- Clear playbooks and escalation procedures

- Human-in-the-loop for all automated responses initially

- Continuous tuning based on feedback

Challenges:

- Initial false positive rate higher than expected (addressed through tuning)

- Integration complexity with legacy systems

- Resistance from some senior analysts skeptical of AI

- Ongoing need for AI/ML expertise on team

AI SOC Vendor Landscape

Leading Platforms:

Microsoft Sentinel:

- Cloud-native SIEM with built-in AI

- Integrates with Microsoft security ecosystem

- Machine learning models for threat detection

- Natural language query interface

- Pricing: consumption-based, driven by data ingestion volume (roughly equivalent to ~$150-300 per user per month, varying with data volume)

Splunk Enterprise Security + SOAR:

- Market leader in SIEM

- AI/ML toolkit for custom models

- Extensive integration ecosystem (1,000+ apps)

- Splunk SOAR (formerly Phantom) for automated response

- Pricing: ~$100,000-500,000+ annually (depends on data ingestion)

IBM QRadar + Watson:

- AI-powered threat detection and investigation

- Cognitive security combining SIEM with Watson AI

- Strong regulatory compliance features

- Suitable for large enterprises

- Pricing: ~$75,000-300,000+ annually

Palo Alto Cortex XSOAR:

- Security orchestration and automated response (SOAR)

- 600+ security tool integrations

- Machine learning for playbook optimization

- Incident management and collaboration

- Pricing: ~$100-200 per user per month

Google Chronicle:

- Petabyte-scale security telemetry platform

- Leverages Google’s infrastructure and ML capabilities

- Fast threat hunting across massive datasets

- Integration with Google Cloud security tools

- Pricing: Custom (typically for large enterprises)

Darktrace:

- Self-learning AI for anomaly detection

- Autonomous Response capability (with human oversight options)

- Network visibility and insider threat detection

- Focus on behavioral analytics

- Pricing: ~$50,000-200,000+ annually (depends on organization size)

Implementation Best Practices

For Organizations Considering AI SOC:

1. Start with Pilot (3-6 months)

- Select single high-value use case (e.g., alert triage for one tool)

- Measure baseline metrics before AI

- Track improvement quantitatively

- Gather analyst feedback qualitatively

2. Ensure Data Quality

- AI only as good as training data

- Review existing security telemetry for completeness

- Integrate disparate data sources

- Establish data retention policies

3. Invest in Training

- SOC analysts need AI literacy (how models work, limitations)

- Security engineers need ML skills for tuning

- Leadership needs to understand AI capabilities and risks

- Plan 40-80 hours training per technical team member

4. Maintain Human Oversight

- Never fully automate high-stakes decisions

- Clear escalation procedures for AI uncertainty

- Regular audits of AI decisions

- Feedback loops to continuously improve models

5. Plan for Ongoing Costs

- Licensing and subscription fees

- Cloud infrastructure (data storage, compute)

- Personnel training and development

- Continuous tuning and optimization

AI-powered SOCs represent the future of enterprise security, but successful implementation requires thoughtful planning, adequate resources, and realistic expectations about both capabilities and limitations.

5. Regulatory Frameworks: NIST, EU AI Act, and Compliance

As AI becomes embedded in critical cybersecurity infrastructure, regulators worldwide establish frameworks to ensure responsible, trustworthy, and effective deployment. Organizations must navigate multiple, sometimes conflicting, requirements.

NIST AI Risk Management Framework (AI RMF 1.0)

Overview:

The National Institute of Standards and Technology (NIST) released the AI Risk Management Framework in January 2023, providing voluntary guidance for developing and deploying trustworthy AI systems.

Characteristics of Trustworthy AI:

1. Valid and Reliable:

- AI systems perform consistently and accurately

- Testing across diverse conditions and populations

- Performance monitoring in production environments

2. Safe:

- Systems do not pose unacceptable risks

- Fail-safe mechanisms when AI encounters edge cases

- Human override capabilities

3. Secure and Resilient:

- Protected against adversarial attacks

- Robust to data poisoning and model manipulation

- Secure software development lifecycle

4. Accountable and Transparent:

- Clear documentation of AI capabilities and limitations

- Explainable decision-making

- Audit trails for AI actions

5. Explainable and Interpretable:

- Stakeholders understand how AI reaches conclusions

- Appropriate level of detail for different audiences

- Model interpretability techniques employed

6. Privacy-Enhanced:

- Data minimization and purpose limitation

- Protection of sensitive information

- Consent mechanisms where required

7. Fair with Harmful Bias Managed:

- Regular bias testing across demographic groups

- Mitigation strategies for identified biases

- Inclusive design and development processes

Application to Cybersecurity AI:

For security-focused AI systems, NIST AI RMF emphasizes:

- Adversarial robustness testing (can attackers fool the AI?)

- False positive/negative trade-off documentation

- Incident response procedures when AI fails

- Regular retraining with adversarial examples

- Human oversight for automated response actions

Compliance Status: NIST AI RMF is voluntary in the private sector, but it is increasingly referenced in government contracts and industry standards and cited as evidence of due diligence in litigation.

EU AI Act Compliance for Cybersecurity Systems

Classification and Requirements:

The EU AI Act (which entered into force on August 1, 2024) establishes risk-based tiers. Most cybersecurity AI systems fall into “limited risk” or “minimal risk” categories, though some applications may be classified as “high-risk.”

High-Risk Cybersecurity AI (If Applicable):

If cybersecurity AI systems make consequential decisions affecting individuals’ rights (e.g., AI determining access to critical services, automated decision-making for critical infrastructure protection), they may qualify as high-risk and require:

Technical Documentation:

- Detailed description of AI system and capabilities

- Training data sources and characteristics

- Testing and validation procedures

- Performance metrics (accuracy, false positive/negative rates)

- Known limitations and failure modes

Risk Management System:

- Identification and analysis of potential risks

- Risk mitigation measures

- Testing and validation procedures

- Post-market monitoring plan

Data Governance:

- Training data quality assurance

- Bias detection and mitigation

- Data provenance and traceability

Transparency:

- Clear information to users about AI system capabilities

- Instructions for proper use

- Human oversight capabilities

Human Oversight:

- Mechanisms for human intervention

- Override capabilities

- Monitoring dashboards for human supervisors

Accuracy, Robustness, Cybersecurity:

- Testing against adversarial attacks

- Resilience to data poisoning

- Protection of training data and models

Conformity Assessment:

- Internal control procedures or third-party assessment

- CE marking for high-risk systems

- Registration in EU database

Penalties for Non-Compliance:

- Up to €35 million or 7% of global annual turnover (whichever is higher) for prohibited AI practices

- Up to €15 million or 3% of global turnover for other violations

- Up to €7.5 million or 1% of global turnover for providing incorrect information
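
The “whichever is higher” rule is easy to misread, so a short calculation may help. The sketch below is illustrative only: the function name and the turnover figures are assumptions, and it shows the upper bound for the prohibited-AI tier, not how a regulator would actually set a fine.

```python
def max_fine_eur(fixed_cap_eur: float, turnover_percent: float,
                 global_turnover_eur: float) -> float:
    """Upper bound under a 'fixed cap or % of turnover, whichever is higher' rule."""
    return max(fixed_cap_eur, global_turnover_eur * turnover_percent / 100)


# Hypothetical companies; turnover figures are made up for illustration.
large_company = max_fine_eur(35_000_000, 7, 2_000_000_000)   # percentage dominates
small_company = max_fine_eur(35_000_000, 7, 100_000_000)     # fixed cap dominates
print(large_company, small_company)
```

For large organizations the percentage term dominates, which is why exposure scales with revenue rather than stopping at the fixed cap.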

Our comprehensive EU AI Act 2025 compliance checklist provides detailed guidance on classification, documentation requirements, and timelines.

Timeline:

- August 2, 2025: General-purpose AI (GPAI) obligations begin

- August 2, 2026: High-risk AI system requirements fully enforced

- August 2, 2027: All provisions in effect

U.S. State-Level AI Regulation

While federal AI legislation remains limited, states are actively regulating:

Colorado AI Act (Effective June 30, 2026):

- Requires “reasonable care” to prevent algorithmic discrimination

- Applies to AI in consequential decisions (employment, lending, housing, healthcare)

- Most cybersecurity AI likely exempt unless making consequential decisions about individuals

- Annual impact assessments required

- Consumer rights to explanation of AI decisions

California Proposed Legislation:

- Multiple bills addressing AI transparency and safety

- SB 53 (Transparency in Frontier Artificial Intelligence Act) targets large AI developers

- Requires safety protocols and incident reporting

- Cybersecurity AI systems may be covered if affecting consumer services

Additional State Activity: As detailed in our analysis of AI regulation in the U.S. 2025 and the federal vs. state divide, 38 states adopted 100+ AI laws in the first half of 2025, creating a complex compliance landscape.

Multi-Jurisdiction Strategy: Organizations should:

- Map which states’ laws apply to their operations

- Identify most stringent requirements and design to those

- Establish centralized AI governance function

- Consult legal counsel familiar with AI regulations

International Frameworks

United Kingdom NCSC Guidelines:

- National Cyber Security Centre published “Guidelines for secure AI system development”

- Emphasizes secure-by-design principles

- Specific guidance for adversarial robustness

- Threat modeling for AI systems

China Algorithmic Recommendations Regulations:

- Measures for the Management of Algorithmic Recommendations (March 2022)

- Requires transparency in algorithmic decision-making

- Security assessments for AI systems

- User rights to opt out of personalization

Singapore Model AI Governance Framework:

- Voluntary framework emphasizing transparency and fairness

- Risk-based approach to AI governance

- Specific guidance for high-risk applications

Building a Compliance Program

Key Components:

1. AI Inventory and Classification:

- Catalog all AI systems in use

- Classify by risk level and applicable regulations

- Document purpose, capabilities, and data sources

2. Risk Assessment:

- Identify potential harms (false positives, bias, security failures)

- Evaluate likelihood and severity

- Document mitigation measures

3. Documentation:

- Technical specifications

- Training data characteristics

- Performance metrics

- Testing procedures

- Known limitations

4. Human Oversight:

- Define which AI decisions require human review

- Establish escalation procedures

- Train personnel on AI limitations

5. Monitoring and Auditing:

- Performance tracking in production

- Bias audits across demographic groups

- Adversarial testing

- Regular compliance reviews

6. Incident Response:

- Procedures for AI failures

- Reporting requirements

- Remediation processes

The regulatory landscape for AI cybersecurity continues evolving rapidly. Organizations should establish governance frameworks flexible enough to adapt to new requirements while maintaining operational effectiveness.

6. Real-World Implementation Case Studies

Understanding how organizations successfully deploy AI cybersecurity requires examining detailed implementations, including both successes and challenges.

Case Study 1: Global Financial Services Firm

Organization Profile:

- Major international bank with 80,000+ employees

- Operations in 65 countries

- Highly regulated environment (financial services)

- Previous major breach in 2018 costing $250M+ in remediation and fines

Challenge:

- 300,000+ security alerts daily from disparate tools

- 35-person SOC overwhelmed and experiencing 45% annual turnover

- Average 18-day dwell time for sophisticated threats

- Compliance requirements for multiple jurisdictions

- Board pressure to demonstrate improved security posture

AI Implementation (24-month program):

Phase 1 (Months 1-6): Assessment and Pilot

- Evaluated 8 AI security platforms

- Selected Microsoft Sentinel + Defender suite for endpoint/cloud

- Deployed Darktrace for network behavioral analytics

- Pilot with corporate headquarters (15,000 employees)

Phase 2 (Months 7-12): Expansion and Integration

- Rolled out to 5 major regional offices

- Integrated with existing SIEM, EDR, firewalls, proxies

- Trained 35 SOC analysts (120 hours each) on AI tools

- Hired 3 ML engineers to manage AI systems

Phase 3 (Months 13-24): Optimization and Scaling

- Global deployment to all offices

- Custom ML models trained on bank-specific threats

- Integration with threat intelligence feeds

- Automated playbooks for common incident types

Results After 24 Months:

Operational Improvements:

- Alert volume reduced from 300,000 to 75,000 daily (75% reduction)

- SOC now investigating 92% of high/critical alerts (vs. 18% previously)

- Mean time to detect reduced from 18 days to 3.2 days (82% improvement)

- Mean time to respond reduced from 26 hours to 2.8 hours (89% improvement)

Security Outcomes:

- Detected and contained 2 sophisticated APT attempts pre-breach

- Prevented estimated $47M in fraud through AI-powered deepfake detection

- Identified insider threat case that would have gone undetected

- Zero successful ransomware attacks (vs. 3 in prior 24 months)

Business Impact:

- SOC analyst turnover reduced to 12% (industry average)

- Cyber insurance premium decreased 15% due to improved controls

- Passed all regulatory examinations with no findings on cybersecurity

- Board and C-suite confidence in security program significantly increased

Cost Analysis:

- Total investment: $12.5M over 24 months

- Software/licenses: $6.2M

- Infrastructure (cloud): $2.1M

- Personnel (training, new hires): $3.4M

- Integration services: $800K

- Estimated savings/value:

- Prevented breaches: $47M+

- Operational efficiency: $4.2M annually

- Insurance premium reduction: $1.8M annually

- ROI: 340% over 24 months

Key Success Factors:

- Executive sponsorship from CISO and CIO with board backing

- Adequate budget without requirement for immediate ROI

- Phased approach starting with pilot

- Heavy investment in training

- Change management acknowledging analyst concerns

- Clear metrics tracked from beginning

Challenges Overcome:

- Initial resistance from senior analysts (“AI can’t replace experience”)

- Integration complexity with 15+ existing security tools

- First 3 months had higher false positive rate requiring tuning

- Cloud data egress costs higher than anticipated

- Required cultural shift toward trusting AI recommendations

Case Study 2: Mid-Size Healthcare Provider

Organization Profile:

- Regional hospital system with 3 hospitals, 25 clinics

- 5,000 employees including 800 physicians

- Patient records for 400,000+ individuals

- Subject to HIPAA regulations

Challenge:

- Limited cybersecurity budget and staff (4-person team)

- Increasing ransomware attacks targeting healthcare sector

- Cannot afford major breach due to patient safety and HIPAA penalties

- Legacy medical devices creating security blind spots

AI Implementation (12-month deployment):

Selected Solution:

- CrowdStrike Falcon Complete (Managed Detection and Response with AI)

- SentinelOne for endpoint protection with behavioral AI

- Abnormal Security for AI-powered email security

- Decided against building in-house SOC due to resource constraints

Approach:

- Outsourced 24/7 monitoring to CrowdStrike’s MDR team

- AI handles first-line threat detection and response

- Hospital security team focuses on strategic initiatives and incident response

- Quarterly threat briefings and vCISO advisory services

Results After 12 Months:

Security Improvements:

- Blocked 12 ransomware attempts (vs. 1 successful attack in prior year)

- Detected and contained 2 insider threat incidents

- 97% reduction in successful phishing (AI email filtering)

- Identified vulnerable legacy medical devices for remediation

Operational Benefits:

- 4-person security team no longer overwhelmed with alerts

- Focus shifted to strategic projects (network segmentation, user training)

- 24/7 coverage without hiring night shift analysts

- Faster incident response through AI triage

Compliance:

- Passed HIPAA audit with zero cybersecurity findings

- Demonstrated “reasonable and appropriate” security measures

- Documentation for cyber liability insurance renewal

Cost Analysis:

- Annual cost: $480,000

- CrowdStrike Falcon Complete MDR: $280,000

- SentinelOne: $120,000

- Abnormal Security: $80,000

- Previous ransomware attack cost: $2.3M (downtime, recovery, HIPAA fine, legal)

- Estimated cost avoidance: $2.3M+ per year

- ROI: 380% annually

Key Success Factors:

- Realistic assessment that in-house SOC was not feasible

- Leveraging managed services with AI rather than building internally

- Focus on patient safety and regulatory compliance

- Clear executive understanding of healthcare ransomware risk

- Incremental deployment causing minimal operational disruption

Challenges:

- Legacy medical devices couldn’t run modern endpoint agents (required network segmentation)

- Physician resistance to any system changes (addressed through champions)

- Initial false positives blocking legitimate medical software (tuning required)

Case Study 3: Technology Company with AI Products

Organization Profile:

- Software company developing AI/ML products

- 1,200 employees globally

- Cloud-native architecture (AWS)

- Target of sophisticated attacks due to valuable IP

Challenge:

- Attackers specifically targeting AI models and training data

- Supply chain attacks through open-source ML libraries

- Need to secure AI systems while developing AI products

- Competitive pressure to move fast vs. security rigor

AI Implementation:

Unique Approach:

- Google Mandiant for threat intelligence and AI model security assessment

- Custom-built ML models for detecting attacks on AI systems

- Integration with CI/CD pipeline for secure ML ops

- Red team exercises specifically targeting AI systems

AI-Specific Security Measures:

- Model watermarking and provenance tracking

- Adversarial robustness testing for all production models

- Data poisoning detection in training pipelines

- Secure model serving with input validation

Results:

Attack Prevention:

- Detected and prevented data poisoning attempt on training dataset

- Identified supply chain attack via compromised Python package

- Blocked model extraction attempts through API abuse

- Prevented unauthorized model access via privilege escalation

Competitive Advantage:

- Security posture became differentiator in enterprise sales

- Achieved SOC 2 Type II and ISO 27001 faster than competitors

- Published security research building brand reputation

- Attracted security-conscious enterprise customers

Cost:

- Investment: $1.8M annually

- Value: Estimated $15M+ in protected IP and avoided breaches

- ROI: 730%+

Lessons for AI Companies:

- AI systems require specialized security beyond traditional IT security

- Model security is as important as data security

- Supply chain vigilance critical for ML dependencies

- Security can be competitive differentiator, not just cost center

7. Predictive AI and Self-Healing Systems

The next frontier in AI cybersecurity moves beyond detection and response to prediction and autonomous remediation. While still emerging, these capabilities represent the future of defensive AI.

Predictive Threat Intelligence

Forecasting Attack Patterns:

Advanced AI systems analyze global threat data to predict future attack vectors:

Google Mandiant Advantage:

- Processes 1+ trillion security events daily

- Identifies emerging threats 19 days faster than industry average

- Predicts which vulnerabilities attackers will likely exploit next

- Forecasts seasonal attack patterns (e.g., holiday shopping fraud)

Recorded Future:

- AI analyzes dark web, paste sites, code repositories, technical forums

- Predicts ransomware group targets and timing

- Identifies newly discovered vulnerabilities before public disclosure

- Provides tactical warning of imminent attacks

Effectiveness: Organizations using predictive threat intelligence reduce successful breaches by 38% (Gartner 2025 data) through proactive defense measures before attacks occur.

Self-Healing Network Architecture

Autonomous Remediation:

Experimental systems automatically detect and repair security weaknesses:

Darktrace Antigena:

- AI-powered autonomous response system

- Takes targeted action to neutralize threats without human intervention

- Operates within defined “rules of engagement” set by organization

- Can isolate compromised devices, block malicious connections, enforce encryption

How It Works:

- AI baseline establishes “normal” for every device and user

- Behavioral analysis detects deviations in real time

- AI determines appropriate response based on threat severity

- Autonomous actions executed within milliseconds

- Human analysts notified of actions taken

- System learns from outcomes to improve future responses
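
The baseline-then-respond loop described above can be sketched in a few lines. This is a deliberately simplified stand-in: commercial products use far richer models than a z-score, and the thresholds, action names, and traffic figures here are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def anomaly_score(baseline: list[float], observed: float) -> float:
    """Z-score of an observation against a device's historical baseline."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def choose_response(score: float) -> str:
    """Map deviation severity to an action tier (thresholds are illustrative)."""
    if score >= 6.0:
        return "isolate_device"
    if score >= 3.0:
        return "block_connection"
    return "log_only"


# Hypothetical daily outbound traffic (MB) for one workstation.
baseline_mb = [120, 135, 110, 128, 140, 125, 118]
for observed_mb in (130, 170, 900):
    score = anomaly_score(baseline_mb, observed_mb)
    print(observed_mb, round(score, 1), choose_response(score))
```

The thresholds play the role of the “rules of engagement”: raising them makes the system more cautious about acting on its own.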

Adoption Status: Only 7% of enterprises use fully autonomous response (Gartner 2025), with most requiring human approval for automated actions. Concerns about false positives causing business disruption limit adoption.

Vulnerability Prediction

Identifying Weaknesses Before Exploitation:

AI systems predict which software vulnerabilities are most likely to be exploited:

CVSS Limitations: Traditional Common Vulnerability Scoring System (CVSS) rates severity but not exploitation likelihood. Result: organizations struggle to prioritize which patches to deploy first among thousands of vulnerabilities.

AI-Enhanced Prioritization:

- Machine learning models analyzing exploit code on dark web

- Correlation with active attacks in the wild

- Asset criticality assessment (which systems matter most)

- Exploitation ease prediction based on vulnerability characteristics
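
One way to picture this kind of prioritization is a score that multiplies severity by exploitation likelihood and asset criticality. The sketch below is an assumption-laden illustration, not any vendor's scoring formula; the identifiers, weights, and likelihood values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    vuln_id: str               # placeholder identifiers, not real CVEs
    cvss: float                # severity, 0-10
    exploit_likelihood: float  # hypothetical model output, 0-1
    asset_criticality: float   # 0-1, set by the organization


def priority(v: Vulnerability) -> float:
    """Severity weighted by likelihood of exploitation and asset importance."""
    return (v.cvss / 10) * v.exploit_likelihood * v.asset_criticality


findings = [
    Vulnerability("VULN-A", cvss=9.8, exploit_likelihood=0.02, asset_criticality=0.5),
    Vulnerability("VULN-B", cvss=7.5, exploit_likelihood=0.90, asset_criticality=0.9),
    Vulnerability("VULN-C", cvss=5.3, exploit_likelihood=0.40, asset_criticality=0.3),
]
ranked = sorted(findings, key=priority, reverse=True)
for v in ranked:
    print(v.vuln_id, round(priority(v), 3))
```

Here the finding with the highest CVSS score ranks last because it is unlikely to be exploited, which is exactly the gap that severity-only scoring leaves open.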

Tenable Predictive Prioritization:

- Reduces vulnerabilities requiring immediate attention by 97%

- Focuses teams on 3% most likely to be exploited

- Integrates threat intelligence with vulnerability data

- Demonstrated 70% improvement in remediation efficiency

Research Context: The intersection of AI capability growth and security requirements mirrors developments in other AI fields. Our coverage of quantum AI breakthroughs from Google and IBM explores how quantum computing will both threaten current cryptography and enable next-generation security algorithms, representing another dimension of the AI security arms race.

Proactive Defense Positioning

Deception Technology Enhanced by AI:

Traditional Honeypots:

- Fake systems deployed to attract and study attackers

- Limited intelligence from simplistic decoys

- Require manual monitoring and analysis

AI-Enhanced Deception:

- Dynamically generated decoys mimicking real systems

- Adaptive responses to attacker behavior

- Automated threat intelligence extraction

- Machine learning identifying attacker patterns and tools

Attivo Networks ThreatDefend:

- AI generates realistic decoys across network

- Analyzes attacker techniques when decoys accessed

- Feeds intelligence to defensive systems

- High-confidence alerts (decoys only accessed by attackers, not legitimate users)

The Human-AI Partnership in Predictive Defense

Why Full Automation Remains Elusive:

Despite impressive capabilities, fully autonomous security remains rare due to:

False Positive Risk: AI incorrectly identifying legitimate activity as malicious could:

- Disrupt business operations

- Block critical systems

- Violate SLA commitments to customers

- Cause cascading failures

Adversarial Attacks on AI: Sophisticated attackers may deliberately poison training data or exploit AI blind spots, potentially causing AI to:

- Ignore actual threats

- Flag legitimate activity

- Create alert storms distracting from real attacks

Regulatory Concerns: Several frameworks call for human oversight of consequential decisions. The EU AI Act mandates it for high-risk systems, and the voluntary NIST AI RMF recommends it, which makes fully autonomous response legally risky.

Liability Questions: If an autonomous AI takes an action that causes business harm, or fails to prevent a breach, who bears responsibility? Legal frameworks have not yet caught up with the technology.

Best Practice: Human-AI Teaming

Most effective implementations combine AI speed and scale with human judgment:

- AI handles high-volume, low-risk decisions (blocking known malware)

- AI accelerates human analysis (alert triage, threat intelligence)

- Humans authorize high-impact actions (network isolation, system shutdown)

- AI learns from human decisions to improve future recommendations

- Clear escalation procedures for ambiguous cases

This hybrid approach, similar to patterns in how AI co-pilots transform employment rather than eliminate jobs, proves most successful in cybersecurity contexts where consequences of errors are severe.
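
The division of labor in human-AI teaming can be expressed as a small routing rule. This is a minimal sketch under stated assumptions: the action names, the confidence threshold, and the three outcomes are hypothetical and would be defined by each organization's own policy.

```python
# Actions that always need a human decision, regardless of model confidence.
HIGH_IMPACT_ACTIONS = {"isolate_network_segment", "shutdown_system"}


def route(action: str, confidence: float, auto_threshold: float = 0.95) -> str:
    """Decide whether an AI-proposed action runs automatically or needs a human."""
    if action in HIGH_IMPACT_ACTIONS:
        return "require_human_approval"
    if confidence >= auto_threshold:
        return "auto_execute"
    return "escalate_to_analyst"


print(route("block_known_malware", 0.99))
print(route("shutdown_system", 0.99))
print(route("block_known_malware", 0.60))
```

Checking the impact tier before the confidence score means a highly confident model still cannot take a disruptive action on its own.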

8. Ethical Risks, Bias, and Governance

While AI transforms cybersecurity defense, deployment raises significant ethical concerns requiring proactive governance.

Algorithmic Bias in Security Systems

How Bias Manifests:

Training Data Bias:

- If training data over-represents certain attack types, AI may miss novel threats

- Under-representation of certain user populations leads to higher false positive rates

- Historical bias (e.g., more scrutiny of certain departments) reinforced by AI

Feature Selection Bias:

- Choosing wrong indicators creates systematic blind spots

- Example: Focusing on geography misses attackers using VPNs to appear local

Deployment Bias:

- Applying AI trained in one context (e.g., financial services) to a different environment (e.g., healthcare) without retraining

Real-World Example:

A large enterprise deployed behavioral analytics AI trained primarily on daytime corporate network traffic. The system flagged night-shift workers and international employees working across time zones at dramatically higher rates, creating:

- Alert fatigue investigating false positives

- Productivity loss for falsely flagged employees

- Potential discrimination claims

- Reduced trust in security team

Solution Required:

- Training data must represent all legitimate user populations

- Regular bias audits across demographic and role groups

- Adjustment of thresholds for different contexts

- Feedback loops incorporating false positive reports
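
A bias audit of the kind listed above often starts by comparing false positive rates between user groups. The sketch below assumes a labeled sample of events is available; the group names, counts, and field names are hypothetical.

```python
from collections import defaultdict


def false_positive_rates(events: list[dict]) -> dict[str, float]:
    """Share of benign events that were flagged, per user group."""
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for e in events:
        if not e["malicious"]:              # only benign events can be false positives
            benign[e["group"]] += 1
            flagged[e["group"]] += int(e["flagged"])
    return {g: flagged[g] / benign[g] for g in benign}


def disparity(rates: dict[str, float]) -> float:
    """Ratio of highest to lowest group rate; 1.0 means parity."""
    lowest = min(rates.values())
    return float("inf") if lowest == 0 else max(rates.values()) / lowest


# Hypothetical labeled sample: 100 benign events per group.
sample = (
    [{"group": "day_shift", "flagged": i < 2, "malicious": False} for i in range(100)]
    + [{"group": "night_shift", "flagged": i < 12, "malicious": False} for i in range(100)]
)
rates = false_positive_rates(sample)
print(rates, round(disparity(rates), 1))
```

A disparity well above 1.0, as in this made-up sample, is the signal to revisit training data coverage or per-context thresholds.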

Privacy Concerns

Surveillance Implications:

AI security systems collect vast amounts of data about employee behavior:

- Every application accessed

- Every website visited

- Every file touched

- Communication patterns

- Keystroke dynamics and mouse movements (in some systems)

Tension: Comprehensive monitoring improves threat detection but raises privacy concerns, especially for personal device use (BYOD), remote workers, and activities during non-work hours.

GDPR Considerations: European data protection law requires:

- Legitimate purpose for data collection

- Data minimization (collect only what’s necessary)

- Purpose limitation (only use for stated security purposes)

- Individual rights (access, deletion, explanation)

- Data protection impact assessments for high-risk processing

Best Practices:

- Transparent policies clearly explaining monitoring scope

- Technical controls separating personal vs. work activity where possible

- Aggregation and anonymization where individual-level data not required

- Clear retention policies with automatic deletion

- Employee consent mechanisms

- Works council or union engagement where applicable

Similar privacy frameworks apply across AI applications. Our analysis of AI in education 2026 and ethical considerations explores parallel concerns around student data collection and algorithmic decision-making in educational contexts.

False Positives and False Negatives

The Fundamental Trade-off:

Security AI faces inherent tension between two error types:

False Positives (Benign flagged as malicious):

- Block legitimate business activity

- Waste analyst time investigating

- User frustration and security team friction

- Can number in thousands daily in large environments

False Negatives (Malicious flagged as benign):

- Attackers go undetected

- Data breaches and ransomware

- Regulatory violations and fines

- Reputation damage and litigation

Setting Thresholds:

Organizations must explicitly choose risk tolerance:

Conservative (Low False Negatives):

- Accept high false positive rate

- Suitable for: High-security environments, regulated industries, systems with severe breach consequences

- Trade-off: Operational friction, higher analyst workload

Balanced:

- Moderate false positive and false negative rates

- Suitable for: Most enterprises

- Trade-off: Requires continuous tuning and human oversight

Permissive (Low False Positives):

- Accept higher false negative risk

- Suitable for: Innovation-focused organizations, development environments, lower-risk systems

- Trade-off: Increased breach likelihood

No Single Right Answer: Appropriate threshold depends on organizational risk appetite, regulatory requirements, asset criticality, and resource availability for investigation.
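
The trade-off becomes concrete when one set of model scores is evaluated at several alert thresholds. The scores, labels, and threshold values below are invented for illustration; only the mechanics carry over to real data.

```python
def error_rates(scores: list[float], labels: list[bool],
                threshold: float) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) at an alert threshold."""
    fp = sum(s >= threshold and not y for s, y in zip(scores, labels))
    fn = sum(s < threshold and y for s, y in zip(scores, labels))
    negatives = labels.count(False)
    positives = labels.count(True)
    return fp / negatives, fn / positives


# Hypothetical model scores (0-1) with ground truth (True = malicious).
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.85, 0.95]
labels = [False, False, False, False, True, False, True, False, True, True]

for name, t in [("conservative", 0.3), ("balanced", 0.5), ("permissive", 0.8)]:
    fpr, fnr = error_rates(scores, labels, t)
    print(f"{name:12s} threshold={t:.1f}  FPR={fpr:.2f}  FNR={fnr:.2f}")
```

Lowering the threshold catches every malicious event in this sample at the cost of flagging half the benign ones; raising it does the reverse.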

Explainability and Transparency

The Black Box Problem:

Many AI models (especially deep learning) make accurate predictions without providing human-understandable reasoning:

Why This Matters for Security:

- Analysts can’t validate AI logic

- Difficult to identify when AI is wrong

- Compliance requirements for explainable decisions

- Building trust requires understanding

Explainable AI (XAI) Techniques:

LIME (Local Interpretable Model-Agnostic Explanations):

- Explains individual predictions

- Shows which features most influenced decision

- Works with any ML model

SHAP (SHapley Additive exPlanations):

- Quantifies contribution of each feature

- Consistent and theoretically grounded

- Helps identify bias

Attention Mechanisms:

- For neural networks, shows which input parts AI “focused on”

- Useful for understanding text/image analysis

Rule Extraction:

- Approximates complex model with interpretable rules

- Trade-off between accuracy and simplicity
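
To show what a Shapley-style attribution actually computes, here is a brute-force version for a toy alert-scoring model. It is not the API of the SHAP library, which relies on approximations to scale; this exact calculation is only practical for a handful of features. The model, feature names, and values are hypothetical.

```python
from itertools import permutations


def shapley_values(model, instance: dict, baseline: dict) -> dict:
    """Exact Shapley attribution: average marginal contribution over all orderings."""
    features = list(instance)
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)       # start from the "typical" input
        prev = model(current)
        for f in order:
            current[f] = instance[f]   # reveal one feature at a time
            new = model(current)
            totals[f] += new - prev
            prev = new
    return {f: totals[f] / len(orderings) for f in features}


def toy_risk_model(x: dict) -> float:
    """Hypothetical alert score with one interaction term."""
    score = 0.2 * x["failed_logins"] + 0.5 * x["new_device"]
    score += 0.3 * x["new_device"] * x["off_hours"]
    return score


alert = {"failed_logins": 4, "new_device": 1, "off_hours": 1}
typical = {"failed_logins": 0, "new_device": 0, "off_hours": 0}
phi = shapley_values(toy_risk_model, alert, typical)
print({f: round(v, 2) for f, v in phi.items()})
```

The attributions sum to the difference between the alert's score and the typical score, and the interaction between a new device and off-hours access is split evenly between those two features.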

Industry Progress:

Leading security vendors increasingly provide explanation capabilities:

- IBM QRadar Advisor with Watson explains why alerts were generated

- Microsoft Defender shows reasoning behind threat classification

- CrowdStrike Falcon provides context for detections

However, explainability can come at a cost in accuracy: highly interpretable models are often less accurate than complex “black box” models on the same task. Organizations must balance transparency with effectiveness.

Governance Frameworks

Establishing AI Security Governance:

1. AI Security Ethics Board:

- Cross-functional representation (security, legal, compliance, privacy, HR, business units)

- Review high-risk AI security deployments

- Approve policies for automated response

- Quarterly review of AI decision outcomes

2. Risk Assessment Process:

- Identify AI systems and classify by risk

- Document potential harms

- Evaluate mitigation measures

- Regular reassessment as systems evolve

3. Human Oversight Mechanisms:

- Define which AI decisions require human approval

- Clear escalation procedures

- Monitoring dashboards for AI performance

- Regular audits of AI actions

4. Bias Testing and Mitigation:

- Baseline fairness metrics across groups

- Regular bias audits (quarterly or semi-annually)

- Retraining with diverse data when bias detected

- Documentation of fairness measures

5. Incident Response for AI Failures:

- Procedures when AI makes serious errors

- Root cause analysis

- Model rollback capabilities

- Communication protocols

6. Continuous Monitoring:

- Model performance tracking (accuracy, false positive/negative rates)

- Drift detection (has underlying data distribution changed?)

- Adversarial attack detection

- User feedback collection
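
Drift detection is often implemented by comparing the distribution of a feature at training time with what the model sees in production. One common measure is the Population Stability Index (PSI), sketched below. The bin count, the floor for empty bins, and the sample data are assumptions, and the frequently quoted 0.25 cutoff is a rule of thumb rather than a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 5) -> float:
    """Population Stability Index between a training and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1   # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: production traffic has shifted upward.
training = [float(v) for v in range(100)]
production = [v + 40 for v in training]
print(psi(training, training), round(psi(training, production), 2))
```

A value near zero indicates a stable distribution; a large value suggests the model is scoring data unlike what it was trained on and may need retraining.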

Reference Frameworks:

Organizations can leverage existing frameworks:

- NIST AI Risk Management Framework: Comprehensive voluntary guidelines

- ISO/IEC 42001 (AI Management System): International standard

- IEEE 7000 series: Ethics standards for autonomous systems

- OECD AI Principles: High-level principles for trustworthy AI

The governance challenge is balancing operational effectiveness (AI must be fast and accurate to defend against threats) with responsible deployment (ensuring fairness, privacy, and accountability).

9. Costs, ROI, and Implementation Strategy

Understanding the economics of AI cybersecurity is critical for sustainable deployment. While AI delivers significant value, costs extend beyond software licensing.

Total Cost of Ownership

Cost Categories:

1. Software and Licensing:

- AI security platforms: $50,000-500,000+ annually (depends on organization size, features)

- SIEM with AI capabilities: $100,000-1M+ annually (varies dramatically by data volume)

- Endpoint AI (EDR/XDR): $30-80 per endpoint annually

- Email security AI: $5-15 per user annually

- Managed detection and response (MDR): $50,000-500,000+ annually

2. Infrastructure:

- Cloud computing for AI workloads: $20,000-200,000+ annually

- Data storage (security telemetry): $10,000-100,000+ annually

- Network bandwidth increases: 10-20% increase typical

- On-premise GPU infrastructure (if not using cloud): $100,000-1M+ one-time

3. Personnel:

- AI/ML security engineers: $130,000-200,000+ per FTE

- Additional SOC analyst training: 40-120 hours per analyst

- Ongoing professional development: $5,000-10,000 per person annually

- External consultants for deployment: $50,000-300,000 one-time

4. Integration:

- Systems integration services: $50,000-500,000 one-time

- API development and maintenance: $30,000-100,000 annually

- Legacy system upgrades for compatibility: Variable, potentially significant

5. Ongoing Operations:

- Model retraining and tuning: 0.5-2 FTE ongoing

- Performance monitoring and optimization: Continuous effort

- Vendor support and maintenance: Typically 20-25% of license cost annually

Example TCO Calculation (Mid-Size Enterprise, 3,000 employees):

Year 1 Costs:

- Software licenses (SIEM, EDR, email, threat intelligence): $280,000

- Infrastructure (cloud): $60,000

- Implementation services: $150,000

- Personnel (training, 1 new ML engineer): $180,000

- Integration: $80,000

- Total Year 1: $750,000

Years 2-3 Annual Costs:

- Software licenses: $280,000

- Infrastructure: $70,000 (increased data volume)

- Personnel: $150,000 (ongoing training, partial FTE)

- Maintenance: $20,000

- Total Annual: $520,000

3-Year TCO: $1,790,000 (~$597,000 per year average)
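
The totals above can be reproduced with a few lines of arithmetic, which also makes it easy to substitute an organization's own figures. The dictionary keys are illustrative labels; the amounts are the ones from the example.

```python
year1 = {"licenses": 280_000, "infrastructure": 60_000, "implementation": 150_000,
         "personnel": 180_000, "integration": 80_000}
recurring = {"licenses": 280_000, "infrastructure": 70_000,
             "personnel": 150_000, "maintenance": 20_000}

year1_total = sum(year1.values())              # 750,000
recurring_total = sum(recurring.values())      # 520,000
tco_3yr = year1_total + 2 * recurring_total    # years 2 and 3 use the recurring figure
print(year1_total, recurring_total, tco_3yr, round(tco_3yr / 3))
```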

Return on Investment

Quantifiable Benefits:

1. Breach Cost Avoidance:

- Average data breach cost: $4.88M (IBM Cost of a Data Breach Report 2024)

- Organizations with AI/automation: $2.22M lower breach costs

- If AI prevents even one major breach over 3 years: $4.88M+ value

2. Operational Efficiency:

- Reduced analyst time on false positives: 25-30% time savings

- Faster incident response: 60-80% reduction in MTTR

- Value of analyst time freed: $50,000-150,000 per analyst annually

3. Regulatory Compliance:

- Avoided fines and penalties: Variable but potentially millions

- Reduced audit and compliance costs: 10-20% efficiency improvements

- Cyber insurance premium reductions: 10-20% typical

4. Business Enablement:

- Faster time to market (security not bottleneck): Hard to quantify

- Competitive advantage from security posture: Indirect value

- Customer trust and retention: Difficult to measure precisely

Example ROI Calculation (Mid-Size Enterprise):

3-Year Costs: $1,790,000

3-Year Benefits:

- Prevented breach (conservative: 50% probability of preventing one $4.88M breach): $2.44M expected value

- Operational efficiency (5 analysts @ $100K saving 25% time): $375,000

- Insurance premium reduction (15% of $200K annual premium): $90,000

- Compliance cost reduction: $100,000

- Total Benefits: $3,005,000

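ROI: 68% over 3 years, calculated as ($3,005,000 − $1,790,000) / $1,790,000. The benefit figures above count all three years; if benefits only begin accumulating after a 6-month implementation period, the realized figure will be somewhat lower.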

Note: ROI is highly sensitive to the breach cost avoidance assumption. If AI prevents multiple breaches or a catastrophic incident, ROI can reach 300-500% or more. If no major breach would have occurred anyway, the remaining benefits (about $565,000 from efficiency, insurance, and compliance) fall well short of the $1.79M cost, and ROI is negative.
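
How strongly the result depends on the breach assumption can be explored with a short calculation. The sketch reuses the example's cost and benefit figures; the probability values in the loop are arbitrary points chosen to show the range.

```python
def roi(total_benefits: float, total_costs: float) -> float:
    """Return on investment as a fraction: net gain divided by cost."""
    return (total_benefits - total_costs) / total_costs


COSTS_3YR = 1_790_000
OTHER_BENEFITS = 375_000 + 90_000 + 100_000   # efficiency + insurance + compliance
BREACH_COST = 4_880_000

for p_prevented in (0.0, 0.25, 0.5, 1.0):
    benefits = p_prevented * BREACH_COST + OTHER_BENEFITS
    print(f"P(breach prevented)={p_prevented:.2f} -> ROI {roi(benefits, COSTS_3YR):+.0%}")
```

With these figures the investment roughly breaks even when the probability of preventing one average breach is about 25%, so that single assumption deserves the most scrutiny in any business case.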

Implementation Strategy

Phased Approach (Recommended):

Phase 1: Assessment and Planning (2-3 months)

- Current state security evaluation

- Use case prioritization

- Vendor evaluation and selection

- Budget approval and resource allocation

- Success metrics definition

Phase 2: Pilot Deployment (3-6 months)

- Deploy AI tools in controlled environment

- Intensive monitoring and tuning

- User feedback collection

- ROI measurement vs. baseline

- Go/no-go decision for broader rollout

Phase 3: Staged Rollout (6-12 months)

- Geographic or business unit phased deployment

- Continuous training and support

- Regular performance reviews

- Adjustment based on lessons learned

Phase 4: Optimization and Expansion (Ongoing)

- Advanced use cases

- Custom model development

- Integration with business processes

- Continuous improvement

Quick Wins vs. Strategic Transformation:

Organizations should balance quick wins (email security AI, endpoint AI) delivering immediate value with strategic investments (AI SOC, predictive intelligence) requiring longer timeframes but offering greater impact.

Make vs. Buy Decision:

Build Custom AI:

- Pros: Tailored to specific needs, competitive advantage, no vendor lock-in

- Cons: Requires specialized talent, longer time to value, ongoing maintenance

- Suitable for: Large enterprises with ML expertise and unique requirements

Buy Commercial Platforms:

- Pros: Faster deployment, vendor support, continuous updates, proven performance

- Cons: Vendor lock-in, less customization, ongoing licensing costs

- Suitable for: Most organizations

Hybrid Approach:

- Use commercial platforms for core capabilities

- Develop custom models for organization-specific threats

- Best of both worlds but requires coordination

Measuring Success

Key Performance Indicators:

Security Metrics:

- Mean time to detect (MTTD): Target 60-80% reduction

- Mean time to respond (MTTR): Target 60-70% reduction

- Number of successful breaches: Target zero

- Alert investigation rate: Target 80%+ of high/critical alerts

Operational Metrics:

- False positive rate: Target <10% for high-priority alerts

- Analyst productivity: Time spent on investigation vs. false positives

- System uptime: Target 99.9%+

- Integration success: Percentage of security tools feeding AI

Business Metrics:

- ROI: Target 50-100%+ over 3 years

- Compliance pass rate: Target 100%

- Cyber insurance costs: Target 10-20% reduction

- Employee security awareness: Measurable improvement

Regular reporting to leadership with these metrics demonstrates value and justifies continued investment in AI security capabilities.
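
As a rough illustration of how two of these KPIs might be computed for a leadership report, the sketch below derives an MTTD reduction and a false positive rate and compares them with the targets listed above. All sample data, field names, and function names are hypothetical.

```python
from statistics import mean


def pct_reduction(baseline, current):
    """Percentage reduction from a baseline value to the current value."""
    return (baseline - current) / baseline * 100


def false_positive_rate(alerts):
    """Percentage of closed alerts that analysts marked as false positives."""
    if not alerts:
        return 0.0
    false_positives = sum(1 for a in alerts if a["verdict"] == "false_positive")
    return false_positives / len(alerts) * 100


# Hypothetical sample data: hours from intrusion to detection, before and after rollout.
baseline_detect_hours = [72, 96, 120]
current_detect_hours = [20, 30, 25]
# Hypothetical high-priority alerts with analyst verdicts.
high_priority_alerts = (
    [{"verdict": "true_positive"}] * 19 + [{"verdict": "false_positive"}]
)

mttd_reduction = pct_reduction(mean(baseline_detect_hours), mean(current_detect_hours))
fp_rate = false_positive_rate(high_priority_alerts)

report = {
    "mttd_reduction_pct": round(mttd_reduction, 1),
    "mttd_target_met": mttd_reduction >= 60,  # lower bound of the 60-80% target
    "fp_rate_pct": round(fp_rate, 1),
    "fp_target_met": fp_rate < 10,            # target: <10% for high-priority alerts
}
print(report)
```

The same pattern extends to MTTR and the business metrics, provided a pre-deployment baseline was captured during the pilot phase.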

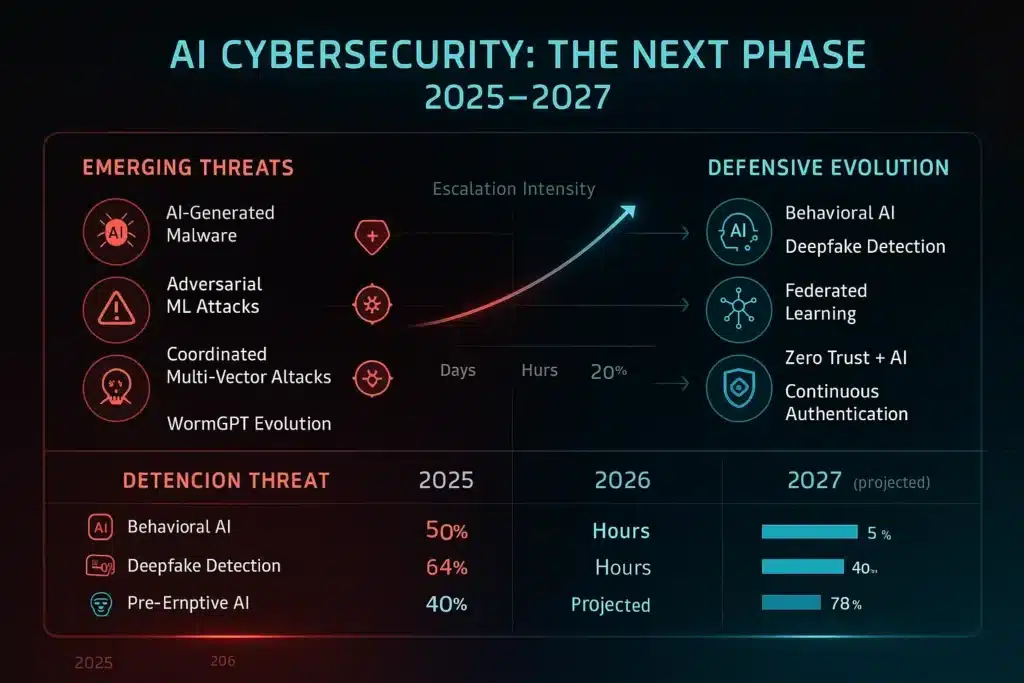

10. Future Threats and Defensive Evolution (2026-2027)

The AI cybersecurity landscape continues to evolve rapidly. Understanding emerging threats and defensive capabilities helps organizations prepare for the next phase of the arms race.

Emerging Threat Vectors

1. AI-Generated Malware:

Polymorphic Code at Scale:

- Attackers using generative AI to create millions of unique malware variants

- Each variant functionally identical but signature-different

- Evades traditional antivirus and EDR signature-based detection

- Requires behavioral analysis and AI to detect common functionality

WormGPT and FraudGPT:

- Underground AI models specifically trained for malicious purposes

- No ethical guardrails or content restrictions

- Sold on dark web marketplaces ($200-300 per month access)

- Used for creating phishing campaigns, malware code, exploit suggestions

Defense Evolution: AI defenders must shift from detecting known threats to identifying malicious intent and behavior patterns regardless of specific code signatures.
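
The difference between signature matching and behavior matching can be shown with a toy example. This is a simplified sketch, not a production detector: the byte strings, behavior names, the `KNOWN_BAD_PROFILE` set, and the 0.7 threshold are all hypothetical, and real behavioral engines use far richer features than a set overlap.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Static signature: a SHA-256 hash of the file bytes."""
    return hashlib.sha256(payload).hexdigest()


def jaccard(a, b):
    """Similarity of two sets of observed behaviors, from 0.0 to 1.0."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if (a or b) else 1.0


# Hypothetical behavior profile of a known ransomware family.
KNOWN_BAD_PROFILE = {"enumerate_files", "encrypt_file", "delete_backups", "contact_c2"}

# Two hypothetical variants: different bytes, nearly identical runtime behavior.
variant_a = {
    "bytes": b"\x90\x90payload-v1",
    "trace": ["enumerate_files", "encrypt_file", "delete_backups", "contact_c2"],
}
variant_b = {
    "bytes": b"payload-v1\x00padding",
    "trace": ["sleep", "contact_c2", "enumerate_files", "delete_backups", "encrypt_file"],
}

# Only the first variant has been seen before, so only its hash is on file.
known_signatures = {signature(variant_a["bytes"])}


def detect(sample, threshold=0.7):
    """Compare a sample against the signature list and the behavior profile."""
    sig_hit = signature(sample["bytes"]) in known_signatures
    behavior_hit = jaccard(sample["trace"], KNOWN_BAD_PROFILE) >= threshold
    return {"signature_match": sig_hit, "behavior_match": behavior_hit}


print(detect(variant_a))
print(detect(variant_b))
```

The second variant evades the hash lookup because its bytes differ, but its observed actions still overlap heavily with the known profile, which is the property behavioral detection relies on.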

2. Adversarial Attacks on AI Systems:

Model Poisoning:

- Injecting corrupted data into AI training sets

- Causes AI to misclassify specific threats as benign

- Difficult to detect without comprehensive data validation

Evasion Attacks:

- Crafting malicious inputs specifically to fool AI detectors

- Example: Slightly modifying malware to slip past behavioral AI

- Most effective when the attacker understands, or can repeatedly probe, the defender’s AI model

Model Extraction:

- Stealing commercial AI models through API abuse

- Enables attackers to develop and test evasion techniques offline against a copy of the model

- Intellectual property theft of AI systems

Defense Evolution:

- Adversarial training (including evasion attempts in training data)

- Model monitoring for unusual query patterns

- Watermarking and fingerprinting AI models

- API rate limiting and input validation
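
Two of these defenses, query monitoring and API rate limiting, can be combined in a simple sliding-window counter. The sketch below is a minimal illustration under assumed limits; the class name `QueryMonitor` and its parameters are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class QueryMonitor:
    """Sliding-window limiter that also records clients who hit the limit."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window = window_seconds
        self._history = defaultdict(deque)  # client_id -> timestamps of accepted queries
        self.flagged = set()                # clients worth a closer look

    def allow(self, client_id, now):
        """Return True if the query is accepted, False if it is throttled."""
        recent = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_queries:
            self.flagged.add(client_id)
            return False
        recent.append(now)
        return True


monitor = QueryMonitor(max_queries=3, window_seconds=60)
for t in range(5):
    print(t, monitor.allow("client-a", now=t))
print(sorted(monitor.flagged))
```

Throttling alone does not stop model extraction, but it raises the cost of bulk querying and produces a list of clients whose query patterns merit review.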

3. Quantum Computing Threats:

While still years away from widespread deployment, quantum computers threaten current encryption:

- Public key cryptography (RSA, ECC) vulnerable to Shor’s algorithm

- “Harvest now, decrypt later” attacks collecting encrypted data today

- Post-quantum cryptography transition required

Our analysis of quantum AI breakthroughs from Google and IBM explores how quantum computing will reshape both offensive and defensive capabilities, with AI potentially helping design quantum-resistant algorithms.

4. AI-Coordinated Multi-Vector Attacks:

Simultaneous Attack Orchestration:

- AI coordinating attacks across multiple vectors (phishing, network intrusion, DDoS)

- Adaptive tactics responding to defensive measures in real time

- Overwhelming defensive resources through coordinated complexity

Defense Evolution: AI defenders must develop coordinated response capabilities that match attacker sophistication by correlating seemingly unrelated events and predicting subsequent attack stages.
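
Correlating seemingly unrelated events can start with something as simple as grouping alerts by target and time window. The following sketch assumes a hypothetical event format (`ts` in seconds, `vector`, `target`) and flags any target hit by two or more distinct attack vectors within 15 minutes; production correlation engines use far more context than this.

```python
from collections import defaultdict


def correlate(events, window_seconds=900, min_vectors=2):
    """Return targets hit by at least min_vectors distinct vectors within the window."""
    by_target = defaultdict(list)
    for event in events:
        by_target[event["target"]].append(event)

    incidents = {}
    for target, target_events in by_target.items():
        target_events.sort(key=lambda e: e["ts"])
        for i, start in enumerate(target_events):
            # Collect the vectors seen in the window that opens at this event.
            vectors = {
                e["vector"]
                for e in target_events[i:]
                if e["ts"] - start["ts"] <= window_seconds
            }
            if len(vectors) >= min_vectors:
                incidents[target] = sorted(vectors)
                break
    return incidents


# Hypothetical alert stream from three separate tools.
events = [
    {"ts": 0, "vector": "phishing", "target": "finance-srv"},
    {"ts": 300, "vector": "network_intrusion", "target": "finance-srv"},
    {"ts": 600, "vector": "ddos", "target": "finance-srv"},
    {"ts": 0, "vector": "phishing", "target": "hr-srv"},
    {"ts": 100, "vector": "phishing", "target": "hr-srv"},
    {"ts": 0, "vector": "ddos", "target": "web-srv"},
    {"ts": 5000, "vector": "phishing", "target": "web-srv"},
]
print(correlate(events))
```

Only the first target is escalated here: repeated alerts of a single type, or alerts far apart in time, stay below the multi-vector threshold.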

Defensive Innovations (2026-2027 Timeline)

Federated Learning for Security:

Concept: Organizations collaboratively train AI models without sharing sensitive data, enabling industry-wide threat detection while maintaining privacy.

Benefits:

- Learn from attacks on other organizations without data exposure

- Faster identification of emerging threats across industry

- Smaller organizations benefit from collective intelligence

Status: Experimental deployments in financial services and healthcare sectors. Challenges include trust, governance, and technical complexity.
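
The core idea can be sketched with federated averaging, one common aggregation scheme (the article does not name a specific algorithm, so this choice is an assumption). Each organization trains on its own data and shares only model weights, which a coordinator averages in proportion to local dataset size. The linear model and the data below are hypothetical and deliberately tiny.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps for a linear model on local data only."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by how many samples each client holds."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def federated_round(global_weights, client_datasets):
    """One round: clients train locally, then only their weights are aggregated."""
    updates = [local_update(global_weights, data) for data in client_datasets]
    sizes = [len(data) for data in client_datasets]
    return federated_average(updates, sizes)


# Hypothetical private datasets held by two organizations (true relation: y = 2x).
org_a = [([1.0], 2.0), ([2.0], 4.0)]
org_b = [([3.0], 6.0)]

weights = [0.0]
for _ in range(10):
    weights = federated_round(weights, [org_a, org_b])
print(weights)
```

The raw records never leave `org_a` or `org_b`; only the weight vectors do. Real deployments typically add secure aggregation or differential privacy, since weights themselves can leak information.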

Zero Trust Architecture + AI:

Evolution: Zero Trust principles (never trust, always verify) enhanced by AI:

- Continuous risk assessment of every access request

- Behavioral biometrics supplementing authentication

- Micro-segmentation dynamically adjusted based on threat level

- AI detecting lateral movement attempts

Timeline: Mainstream adoption expected 2026-2027 as organizations complete cloud migrations and modernize identity systems.
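
A continuous risk assessment of this kind can be pictured as a score computed per access request, with thresholds that tighten as the threat level rises. The sketch below is a hand-weighted stand-in for what would in practice be a learned model; the signal names, weights, and thresholds are all hypothetical.

```python
# Hypothetical risk points per signal (0-100 scale).
SIGNAL_WEIGHTS = {
    "new_device": 30,
    "unusual_location": 25,
    "off_hours": 10,
    "sensitive_resource": 20,
    "behavior_anomaly": 35,
}

# Hypothetical (step_up, deny) thresholds; stricter when the threat level is elevated.
THRESHOLDS = {
    "normal": (30, 60),
    "elevated": (20, 45),
}


def risk_score(signals):
    """Sum the points for each observed signal, capped at 100."""
    return min(100, sum(SIGNAL_WEIGHTS.get(s, 0) for s in signals))


def decide(signals, threat_level="normal"):
    """Evaluate one access request: allow, require step-up auth, or deny."""
    step_up, deny = THRESHOLDS[threat_level]
    score = risk_score(signals)
    if score >= deny:
        return "deny"
    if score >= step_up:
        return "step_up_auth"
    return "allow"


print(decide(["unusual_location"]))                    # normal threat level
print(decide(["unusual_location"], "elevated"))        # same request, stricter policy
print(decide(["new_device", "behavior_anomaly"]))
```

The same request can receive different outcomes depending on the current threat level, which is the dynamic adjustment described above.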

Homomorphic Encryption for AI:

Concept: Analyzing encrypted data without decryption, enabling AI to process sensitive information without exposure.

Security Application:

- Threat detection on encrypted network traffic

- Privacy-preserving security analytics

- Regulatory compliance while maintaining security visibility

Status: Early research stage due to computational overhead (10-100X slower than plaintext, and often considerably more for fully homomorphic schemes). Practical deployment likely 2027-2030 as algorithms and hardware improve.
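
To make the concept concrete, the sketch below implements a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. This is an illustration only. The primes are tiny and hard-coded, so it offers no security, and Paillier supports addition rather than the arbitrary computation that fully homomorphic schemes aim for. The scenario (summing encrypted alert counts from several sensors) is hypothetical. Requires Python 3.8+ for the modular inverse via `pow`.

```python
import math
import random


def keygen(p=1789, q=1861):
    """Toy Paillier keys from two tiny primes (insecure, for illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) under public key n."""
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    # With g = n + 1, g^m mod n^2 simplifies to 1 + m*n.
    return ((1 + m * n) % n2) * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    """Recover the plaintext from ciphertext c using the private key."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)


n, priv = keygen()
sensor_counts = [12, 30, 7]                      # hypothetical per-sensor alert counts
ciphertexts = [encrypt(n, m) for m in sensor_counts]

encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(n, encrypted_total, c)

print(decrypt(n, priv, encrypted_total))         # sum computed without decrypting inputs
```

The aggregator never sees an individual count, only the ciphertexts, and only the key holder can read the total.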

AI Red Teams and Continuous Testing:

Approach: Organizations deploying AI specifically to attack their own systems:

- Continuous penetration testing

- Automated vulnerability discovery

- Adversarial attack simulation

- Purple team exercises (red + blue collaboration)

Impact: Shifts from periodic testing to continuous validation, identifying weaknesses before attackers exploit them.

Biometric and Behavioral Authentication:

Beyond Passwords: AI-powered authentication using:

- Typing patterns and mouse movements

- Voice biometrics and speech patterns

- Face recognition with liveness detection

- Gait analysis and physiological signals

Continuous Authentication: Rather than relying on a single login event, AI continuously validates user identity based on behavior, providing real-time confidence scores and triggering re-authentication when anomalies are detected.

Timeline: Widespread deployment 2025-2027 as passwordless authentication gains traction.
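
The confidence-score idea behind continuous authentication can be sketched with a single behavioral signal. The example below compares recent keystroke intervals with a user's enrolled baseline and asks for re-authentication when confidence drops. It is a deliberately simple heuristic under stated assumptions: the timing data, the linear mapping from deviation to confidence, and the 0.5 threshold are all hypothetical, and real systems combine many signals with trained models.

```python
from statistics import mean, stdev


def confidence_score(baseline_ms, recent_ms):
    """Map deviation from the user's typing baseline to a 0.0-1.0 confidence."""
    mu = mean(baseline_ms)
    sigma = max(stdev(baseline_ms), 1e-9)  # guard against a perfectly flat baseline
    z = abs(mean(recent_ms) - mu) / sigma
    # Hypothetical mapping: confidence falls linearly and reaches 0 at 3 sigma.
    return max(0.0, 1.0 - z / 3.0)


def needs_reauth(baseline_ms, recent_ms, threshold=0.5):
    """Trigger re-authentication when confidence falls below the threshold."""
    return confidence_score(baseline_ms, recent_ms) < threshold


# Hypothetical inter-keystroke intervals in milliseconds.
baseline = [100, 110, 90, 105, 95]
same_user = [102, 98, 101]
different_typist = [150, 160, 155]

print(needs_reauth(baseline, same_user))
print(needs_reauth(baseline, different_typist))
```

Because the check runs throughout the session rather than once at login, a hijacked session can be challenged as soon as the behavior stops matching the enrolled user.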

Preparing for the Next Wave

Organizational Readiness:

1. Skills Development:

- AI/ML security expertise increasingly critical

- Blend of cybersecurity and data science skills

- Continuous learning culture essential

- Consider AI security certifications (GIAC, ISC², vendor-specific)

2. Technology Flexibility:

- Avoid complete vendor lock-in where possible

- Modular architecture enabling component replacement

- API-first platforms for integration

- Regular technology reassessment (annually)

3. Threat Intelligence Partnerships:

- Join information sharing organizations (ISACs, CERTs)

- Engage with security research community

- Consider managed threat intelligence services

- Contribute intelligence when able

4. Resilience Focus:

- Assume breaches will occur despite best defenses

- Backup and recovery capabilities critical

- Incident response planning and practice

- Business continuity integration

5. Ethical AI Practices:

- Adopt the governance frameworks discussed in Section 8

- Regular bias audits and fairness testing

- Transparency with employees and customers

- Alignment with emerging regulations

The organizations that thrive in this evolving landscape will be those that view AI cybersecurity as a continuous journey rather than a destination, maintaining flexibility, investing in people, and balancing offensive and defensive capabilities.

Frequently Asked Questions (FAQs)

1. What is AI-driven cybersecurity?

AI-driven cybersecurity refers to the use of artificial intelligence and machine learning technologies to detect, prevent, analyze, and respond to cyber threats in real time. Unlike traditional rule-based security systems, AI can identify previously unknown threats by analyzing patterns in massive datasets, learning normal versus malicious behavior, and adapting to evolving attacker tactics. Core applications include behavioral anomaly detection, automated threat response, predictive threat intelligence, and deepfake detection. According to IBM’s 2025 X-Force report, organizations using AI-powered security reduced breach detection time by 64% (from 277 days to 98 days) compared to those relying on traditional methods alone.

2. Can AI accurately detect deepfakes?