TL;DR

The EU AI Act uses a risk-based approach. It entered into force in August 2024 and its obligations apply in phases. Key compliance milestones in 2025 include the ban on prohibited systems and the introduction of obligations for general-purpose AI (GPAI) models. More stringent rules for high-risk AI systems arrive in later stages, with full applicability by 2026–2027. Businesses that develop, supply or deploy AI in the EU must map where their systems fall in the Act’s risk taxonomy, document data and governance controls, implement human oversight and register certain systems. Non-compliance carries steep fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. This article provides a step-by-step checklist with dates, sources and practical next steps for compliance preparation.

IMPORTANT UPDATE (November 2025): Recent developments suggest the EU Commission may delay certain enforcement provisions due to industry and political pressure, potentially pushing some high-risk AI compliance deadlines to 2027. Monitor official EU communications for timeline updates.

1. Current Status Update — What’s in Force and What’s Coming

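Entry into Force: The AI Act (Regulation (EU) 2024/1689) was published in the Official Journal on July 12, 2024 and entered into force on August 1, 2024. Its provisions apply in stages: Chapters I and II (general provisions, including the AI-literacy duty in Article 4, and the prohibited practices in Article 5) have applied since February 2, 2025.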

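GPAI & Governance Rules: Obligations for providers of general-purpose AI (GPAI) models, together with the governance framework (the AI Office, the AI Board and national competent authorities), have applied since August 2, 2025. Major AI providers like those behind Google Gemini and Microsoft Copilot now face transparency and documentation requirements under these GPAI provisions; models already on the market before that date have until August 2, 2027 to comply.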

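High-Risk Systems Timeline: Obligations for high-risk AI systems phase in over 2026–2027. Requirements for the use cases listed in Annex III (employment, credit scoring, education, law enforcement and others) apply from August 2, 2026, while high-risk AI embedded in products already covered by EU product-safety legislation (Article 6(1) and Annex I, e.g. medical devices or machinery) has until August 2, 2027. High-risk systems already on the market before August 2, 2026 are generally caught only if their design changes significantly afterwards (Article 111(2)).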

These dates come from the Act itself (Article 113) and the Commission’s official implementation timeline, though recent political developments, including pressure from the Trump administration, may influence enforcement timelines.

2. Who Must Comply — Scope & Affected Actors

Providers: Anyone placing an AI system on the EU market or putting it into service — including non-EU providers whose systems are used in the EU. Providers bear the primary obligations for high-risk and GPAI systems.

Deployers: Organizations using AI systems professionally (hospitals, banks, HR departments) have duties for safe operation, human oversight and monitoring.

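Distributors & Importers: Importers (who place a non-EU provider’s system on the EU market) and distributors (who make it available further along the supply chain) must verify that the system carries the required conformity marking, documentation and instructions before passing it on.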

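Users & Consumers: The Act calls professional users “deployers”; end users and affected people are mostly protected indirectly. They do benefit from transparency obligations in specific contexts (for example being told they are talking to a chatbot), a right to lodge a complaint with a market surveillance authority and, for certain decisions made with high-risk systems, a right to an explanation.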

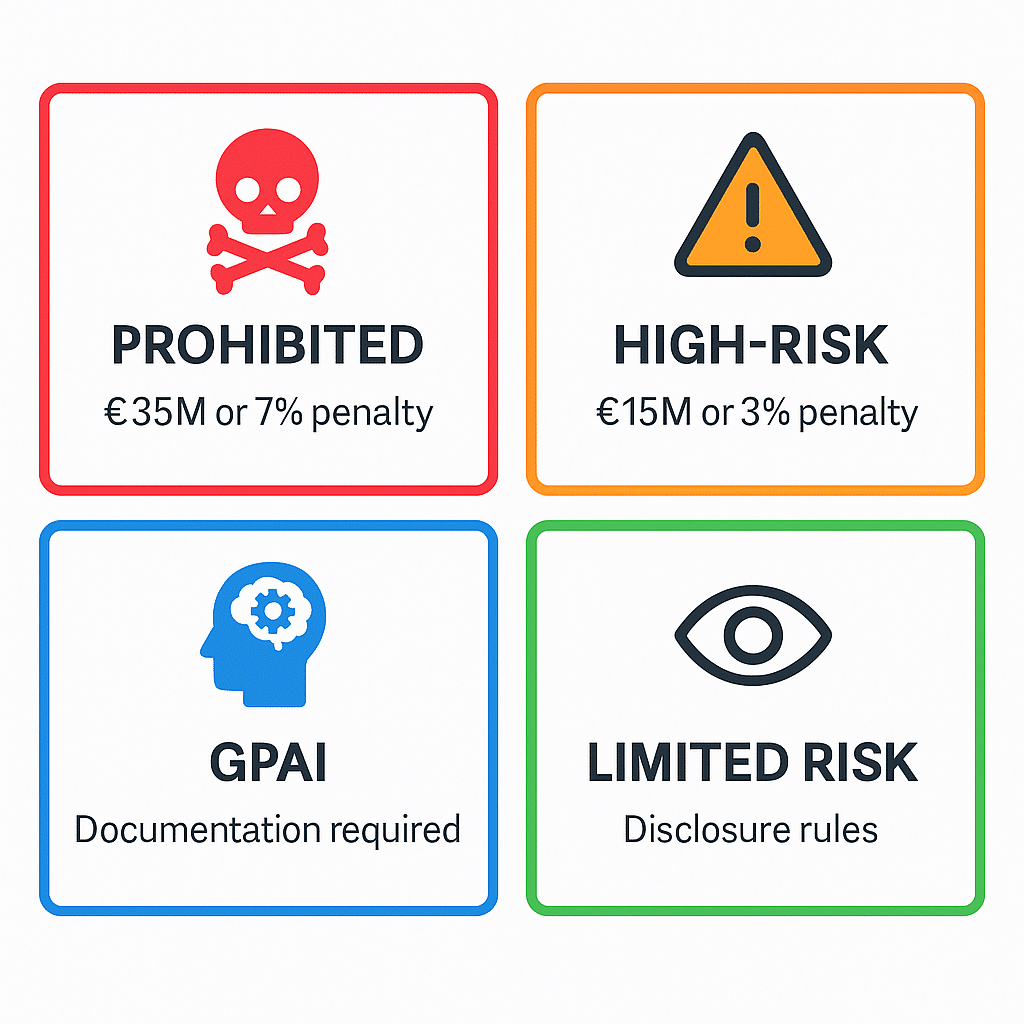

3. The Act’s Risk Taxonomy — Quick Reference Guide

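Unacceptable Risk (Prohibited): Under Article 5, practices such as manipulative or exploitative AI that causes significant harm, social scoring (by public or private actors), untargeted scraping of facial images to build facial-recognition databases, emotion recognition in workplaces and education, and real-time remote biometric identification in publicly accessible spaces for law enforcement (subject to narrow exceptions) have been prohibited since February 2, 2025.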

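High-Risk: Systems used in the areas listed in Annex III (biometrics, critical infrastructure, education, employment, access to essential services such as credit scoring, law enforcement, migration, asylum and border control, and the administration of justice), plus AI that is a product, or a safety component of a product, covered by EU product-safety legislation and requiring third-party conformity assessment (for example certain medical devices). These face the strictest requirements, which phase in through 2026–2027.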

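General-Purpose AI (GPAI): Models that display significant generality and can perform a wide range of tasks have been subject to their own obligations since August 2, 2025: technical documentation, information for downstream providers, a copyright policy and a public summary of training content. Models with systemic risk (presumed when training compute exceeds 10^25 floating-point operations) must additionally evaluate and mitigate systemic risks, report serious incidents and ensure adequate cybersecurity. Major cloud deals like OpenAI’s $38B AWS partnership highlight the commercial significance of GPAI compliance.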

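Limited/Other Risk: Article 50 transparency obligations (disclosing that users are talking to a chatbot, labelling deepfakes and marking AI-generated content) apply depending on context. Existing consumer-protection law continues to apply, and minimal-risk systems such as spam filters face no new mandatory obligations under the Act.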
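Whether a GPAI model lands in the stricter systemic-risk category is partly a compute question: Article 51(2) presumes systemic risk when cumulative training compute exceeds 10^25 floating-point operations. The sketch below is a rough screening check, assuming the common "6 × parameters × training tokens" rule of thumb for dense transformers. That estimator is an engineering heuristic, not a method defined by the Act, and the model sizes are hypothetical.

```python
# Article 51(2): presumption of systemic risk above 10**25 training FLOPs.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Approximate training compute for a dense transformer.

    ASSUMPTION: ~6 FLOPs per parameter per training token (forward + backward
    pass). This is a rule of thumb, not a calculation method from the AI Act.
    """
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if training compute is strictly greater than the threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical models, for illustration only.
for name, params, tokens in [("model-7b", 7e9, 2e12), ("model-400b", 4e11, 1.5e13)]:
    flops = estimate_training_flops(params, tokens)
    print(f"{name}: {flops:.1e} FLOPs -> presumed systemic risk: {presumed_systemic_risk(flops)}")
```

Treat a `False` result as "not presumed" rather than "cleared": the Commission can also designate a model as having systemic risk on other criteria (Article 51(1)(b)).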

4. Five Critical Compliance Obligations — Implementation Required Now

Risk Management System (Article 9)

Design and maintain a continuous risk-management system for AI lifecycles, including identifying harms, evaluating likelihood/impact, implementing mitigation measures, and ongoing monitoring. This forms the foundation of high-risk compliance.
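The identify / evaluate / mitigate / monitor loop above is often run from a risk register. The sketch below assumes a likelihood × impact scoring scheme on hypothetical 1–5 scales with a hypothetical action threshold; Article 9 requires risks to be identified, estimated and mitigated but does not prescribe this particular scoring method.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical 1-5 scales and threshold; the AI Act does not prescribe a scoring
# method, only that risks are identified, evaluated and mitigated (Article 9).
SCALE = range(1, 6)
ACTION_THRESHOLD = 8


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 = rare ... 5 = almost certain
    impact: int      # 1 = negligible ... 5 = severe harm to health, safety or rights
    mitigations: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.likelihood not in SCALE or self.impact not in SCALE:
            raise ValueError(f"likelihood and impact must be 1-5: {self.description!r}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def open_risks(register: List[Risk]) -> List[Risk]:
    """Risks above the threshold with no recorded mitigation, highest score first."""
    pending = [r for r in register if r.score > ACTION_THRESHOLD and not r.mitigations]
    return sorted(pending, key=lambda r: r.score, reverse=True)


# Hypothetical register entries for a hiring-support system.
register = [
    Risk("Scores disadvantage applicants from under-represented groups", 3, 5),
    Risk("Input data drifts after deployment", 4, 3, ["monthly drift report"]),
    Risk("Explanations shown to reviewers are misleading", 2, 5),
    Risk("Logging service outage", 2, 2),
]
for risk in open_risks(register):
    print(risk.score, risk.description)
```

Re-running `open_risks` after each review cycle gives a simple, auditable "ongoing monitoring" signal; the thresholds themselves should come out of the organization's own risk assessment.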

Technical Documentation & Record-Keeping (Articles 11–12)

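Maintain complete technical documentation demonstrating conformity (data governance, model training details, test results; see Annex IV) and keep it for 10 years after the system is placed on the market (Article 18). High-risk systems must also record events automatically (Article 12), and providers and deployers must retain those logs for at least six months (Articles 19 and 26). Both must be made available to national authorities on request.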
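Retention periods are easiest to enforce when computed rather than remembered. This sketch assumes the periods as we read Articles 18, 19 and 26(6) (10 years for documentation, at least six months for logs) and uses calendar-month arithmetic; other Union or national law, in particular on personal data, may require different periods, so treat these constants as inputs to confirm with counsel.

```python
import calendar
from datetime import date

# Retention periods as we read the Act; confirm against the text and any
# sector-specific or data-protection rules that may require something different.
LOG_RETENTION_MONTHS = 6        # Articles 19 and 26(6): logs kept at least six months
DOC_RETENTION_MONTHS = 10 * 12  # Article 18: documentation kept for 10 years


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_log_deletion(log_created: date) -> date:
    """First date on which the six-month minimum for a log entry has elapsed."""
    return add_months(log_created, LOG_RETENTION_MONTHS)


def documentation_kept_until(placed_on_market: date) -> date:
    """Date until which technical documentation must remain available."""
    return add_months(placed_on_market, DOC_RETENTION_MONTHS)


def log_deletable(log_created: date, today: date) -> bool:
    return today >= earliest_log_deletion(log_created)


print(earliest_log_deletion(date(2026, 8, 31)))    # 2027-02-28
print(documentation_kept_until(date(2026, 8, 2)))  # 2036-08-02
```

Clamping to month end (August 31 plus six months gives February 28) errs on the side of keeping logs slightly longer than a naive 180-day count would in most months.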

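Transparency & Information to Deployers (Article 13; Article 50 for chatbots and deepfakes)

Ship instructions for use that state the system’s intended purpose, capabilities, limitations, accuracy and human oversight measures (Article 13). Separately, Article 50 requires telling people when they are interacting with an AI system and labelling deepfakes and AI-generated content. GPAI-specific transparency rules (Article 53) apply on top.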
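For chatbots, the disclosure duty can be enforced in the response layer rather than left to prompt wording. A minimal sketch, assuming a hypothetical session wrapper and disclosure text; Article 50 requires that people be informed they are interacting with an AI system at the latest at the first interaction, unless this is obvious from the context.

```python
from dataclasses import dataclass

# Hypothetical wording; Article 50 requires that people be informed they are
# interacting with an AI system, at the latest at the first interaction, unless
# that is obvious from the context.
AI_DISCLOSURE = "You are chatting with an AI system, not a human."


@dataclass
class DisclosingChatSession:
    """Wraps model replies so the first reply in a session carries the disclosure."""
    disclosed: bool = False

    def render(self, model_reply: str) -> str:
        if self.disclosed:
            return model_reply
        self.disclosed = True
        return f"{AI_DISCLOSURE}\n\n{model_reply}"


session = DisclosingChatSession()
print(session.render("Hello! How can I help with your order?"))
print(session.render("Your parcel ships tomorrow."))
```

Keeping the disclosure in code (and tracking it per session) makes it testable and auditable, which a system prompt instruction is not.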

Human Oversight (Article 14)

Design high-risk systems so that the people assigned to oversee them can understand the outputs, detect anomalies, disregard or override a decision and stop the system safely, preventing or minimizing risks where human intervention remains possible and meaningful.
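One common way to make oversight meaningful is to gate decisions before they take effect. The sketch below assumes a hypothetical policy: adverse or low-confidence outputs are routed to a human reviewer, and a reviewer's decision always overrides the model's. The confidence floor, the outcome labels and the routing rule are illustrative assumptions, not requirements stated in Article 14.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTOMATIC = "automatic"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Decision:
    subject_id: str
    outcome: str       # e.g. "approve" or "reject"
    confidence: float  # model score in [0, 1]


# Hypothetical policy values; in practice they would come from the risk assessment.
CONFIDENCE_FLOOR = 0.85
ADVERSE_OUTCOMES = frozenset({"reject"})


def route(decision: Decision) -> Route:
    """Send adverse or low-confidence decisions to a human before they take effect."""
    if decision.outcome in ADVERSE_OUTCOMES or decision.confidence < CONFIDENCE_FLOOR:
        return Route.HUMAN_REVIEW
    return Route.AUTOMATIC


def finalize(decision: Decision, reviewer_outcome: Optional[str] = None) -> str:
    """Final outcome; a reviewer's call always overrides the model's."""
    if reviewer_outcome is not None:
        return reviewer_outcome
    if route(decision) is Route.HUMAN_REVIEW:
        raise RuntimeError(f"{decision.subject_id}: awaiting human review")
    return decision.outcome


for d in [Decision("app-001", "approve", 0.97), Decision("app-002", "reject", 0.91)]:
    print(d.subject_id, route(d).value)
```

Raising instead of silently defaulting means a decision that needs review cannot take effect without one.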

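Conformity Assessment & Market Surveillance (Chapters III and IX)

High-risk systems must pass a conformity assessment before they are placed on the market (Article 43): internal control for most Annex III systems, a notified body in some biometric cases, and the existing sectoral procedure for products covered by Annex I. Providers then draw up an EU declaration of conformity (Article 47), affix the CE marking (Article 48), register Annex III systems in the EU database where required (Article 49) and remain subject to national market surveillance. The EU continues publishing guidance on Annex III listings and conformity routes.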
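The choice of conformity route can be captured as a small decision function. This is a simplified sketch of our reading of Article 43, assuming three inputs: whether the system is high-risk via an Annex I product, which Annex III point applies, and whether harmonised standards or common specifications were applied in full. It ignores edge cases and is not a substitute for legal analysis.

```python
from enum import Enum
from typing import FrozenSet, Optional


class Procedure(Enum):
    SECTORAL = "procedure under the relevant Annex I product legislation"
    INTERNAL_CONTROL = "internal control (Annex VI)"
    NOTIFIED_BODY = "assessment involving a notified body (Annex VII)"


def permitted_procedures(
    annex_i_product: bool,
    annex_iii_point: Optional[int],
    standards_fully_applied: bool,
) -> FrozenSet[Procedure]:
    """Simplified reading of Article 43; not a substitute for legal analysis.

    annex_i_product: high-risk via Article 6(1) (product or safety component).
    annex_iii_point: the Annex III area (1 = biometrics ... 8 = justice), if any.
    standards_fully_applied: harmonised standards or common specifications applied in full.
    """
    if annex_i_product:
        return frozenset({Procedure.SECTORAL})
    if annex_iii_point == 1:
        # Biometrics: internal control only if standards/specifications were fully applied.
        if standards_fully_applied:
            return frozenset({Procedure.INTERNAL_CONTROL, Procedure.NOTIFIED_BODY})
        return frozenset({Procedure.NOTIFIED_BODY})
    if annex_iii_point is not None and 2 <= annex_iii_point <= 8:
        return frozenset({Procedure.INTERNAL_CONTROL})
    raise ValueError("not classified as high-risk under Article 6")


# Employment use case (Annex III, point 4).
print(sorted(p.value for p in permitted_procedures(False, 4, False)))
```

Returning a set rather than a single value reflects that, for biometrics with standards fully applied, the provider may choose either procedure.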

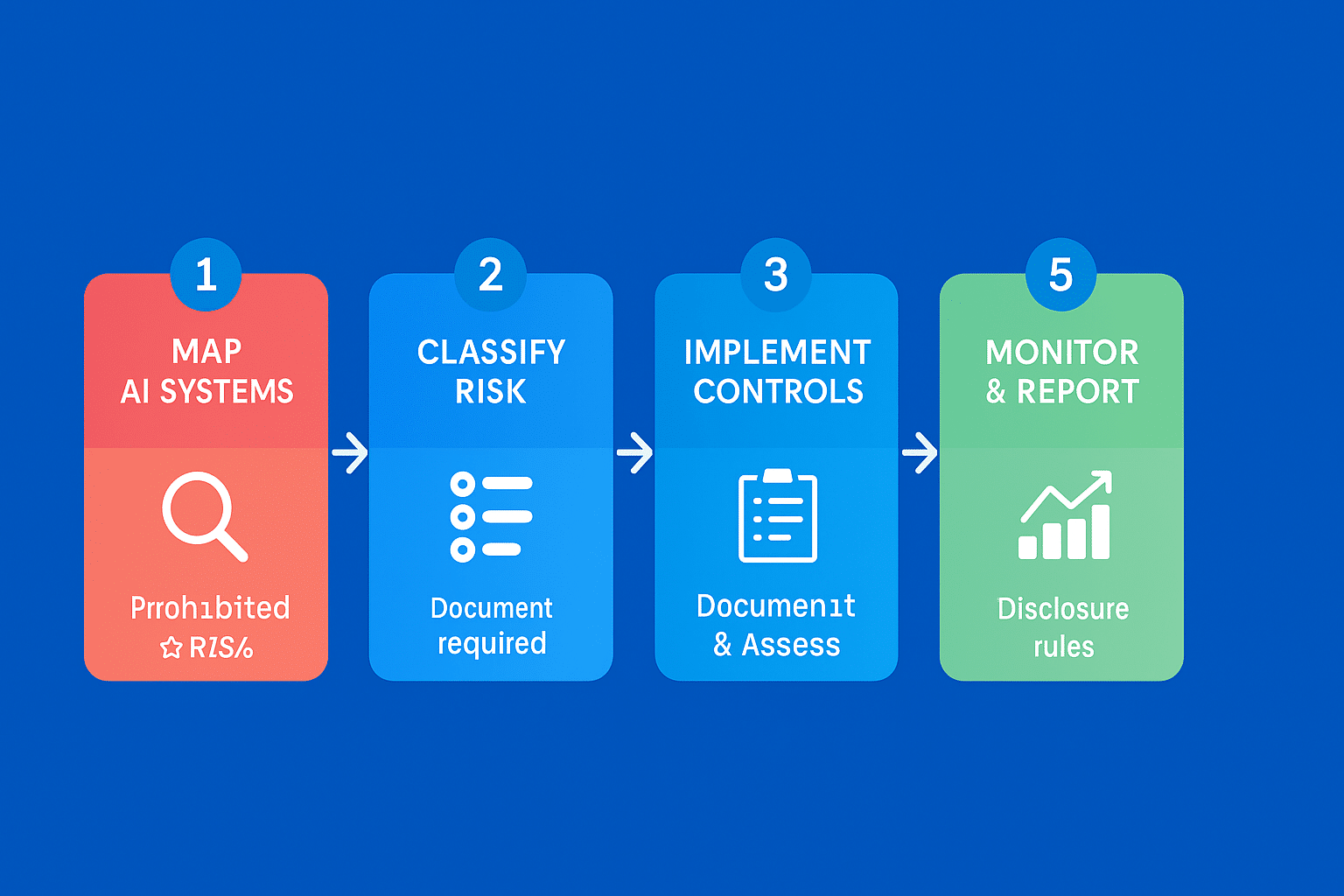

5. Practical Compliance Checklist — Step-by-Step Implementation

A. Immediate Actions (0–3 months)

Map ALL AI Systems: Classify every system (in-house and third-party) against Act categories (unacceptable/high-risk/GPAI/limited). Document classification decisions and assign ownership.

Flag High-Risk & GPAI Systems: Begin enhanced documentation collection for systems requiring strict compliance.

Appoint Compliance Leadership: Designate an AI compliance lead or cross-functional team (legal, security, data, product).

Initial Risk Assessment: Conduct a risk-assessment workshop and create a remediation roadmap.
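The mapping and flagging steps above can start as a simple inventory with a first-pass triage. A minimal sketch, assuming a hypothetical, deliberately incomplete tag vocabulary mapped to the Act's tiers; the most severe tag wins, GPAI status is tracked separately from use-case tier, and final classification still needs legal review.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List, Optional


class Tier(IntEnum):
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    PROHIBITED = 3


# Hypothetical, deliberately incomplete tag map for a first-pass triage.
# Final classification needs legal review against Article 5, Article 6/Annex III
# and Article 50.
TAG_TIERS = {
    "social_scoring": Tier.PROHIBITED,
    "workplace_emotion_recognition": Tier.PROHIBITED,
    "recruitment_screening": Tier.HIGH,  # Annex III, point 4
    "credit_scoring": Tier.HIGH,         # Annex III, point 5
    "exam_proctoring": Tier.HIGH,        # Annex III, point 3
    "customer_chatbot": Tier.LIMITED,    # Article 50 disclosure
    "synthetic_media": Tier.LIMITED,     # Article 50 labelling
}


def triage(tags: Iterable[str]) -> Tier:
    """Most severe tier triggered by any tag; unknown tags count as MINIMAL."""
    return max((TAG_TIERS.get(t, Tier.MINIMAL) for t in tags), default=Tier.MINIMAL)


@dataclass
class InventoryEntry:
    system: str
    tags: List[str]
    owner: Optional[str] = None
    is_gpai_model: bool = False  # GPAI is tracked separately from use-case tier

    @property
    def tier(self) -> Tier:
        return triage(self.tags)

    @property
    def needs_enhanced_docs(self) -> bool:
        return self.tier >= Tier.HIGH or self.is_gpai_model


def unowned(entries: Iterable[InventoryEntry]) -> List[str]:
    """Systems with no assigned owner."""
    return [e.system for e in entries if not e.owner]


# Hypothetical inventory.
inventory = [
    InventoryEntry("CV screener", ["recruitment_screening"], owner="HR Ops"),
    InventoryEntry("Support bot", ["customer_chatbot"]),
    InventoryEntry("Licensed foundation model", ["customer_chatbot"], owner="ML Platform", is_gpai_model=True),
]
for e in inventory:
    print(f"{e.system}: {e.tier.name}, enhanced docs: {e.needs_enhanced_docs}")
print("No owner:", unowned(inventory))
```

Unknown tags default to MINIMAL here only to keep the sketch short; a stricter design would refuse to classify a system until every tag is recognized.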

B. Short-Term Implementation (3–6 months)

Deploy Risk Management System: Implement a documented risk-management system (Article 9) with measurable KPIs and mitigation plans.

Standardize Documentation: Collect and standardize technical documentation and logs (data sources, pre-processing steps, training/validation datasets, model versions, performance metrics). Ensure proper retention policies.

GPAI Preparation: For GPAI systems, prepare model cards, training summaries, safety testing reports and governance materials aligned with Commission obligations.
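Standardizing documentation is easier to police with an automated completeness check. The sketch below assumes a hypothetical internal schema built from the items listed in this checklist (it is not the full Annex IV list) plus extra GPAI fields; the example record and file names are invented for illustration.

```python
from typing import Dict, List

# Hypothetical internal schema built from the items listed in the checklist; it is
# not the full Annex IV list and should be extended against the Act's text.
REQUIRED_FIELDS = (
    "data_sources",
    "preprocessing_steps",
    "training_datasets",
    "validation_datasets",
    "model_version",
    "performance_metrics",
    "retention_policy",
)
GPAI_EXTRA_FIELDS = ("model_card", "training_content_summary", "safety_testing_report")


def missing_fields(record: Dict[str, object], is_gpai: bool = False) -> List[str]:
    """Names of required fields that are absent or empty in a documentation record."""
    required = REQUIRED_FIELDS + (GPAI_EXTRA_FIELDS if is_gpai else ())
    return [name for name in required if not record.get(name)]


# Hypothetical record for a credit-scoring model.
record = {
    "data_sources": ["applications_2019_2024.parquet"],
    "preprocessing_steps": ["deduplicate", "remove free-text fields"],
    "training_datasets": ["train_v3"],
    "validation_datasets": [],
    "model_version": "2.4.1",
    "performance_metrics": {"auc": 0.81},
}
print(missing_fields(record))
```

Empty values count as missing, so a placeholder like an empty dataset list is flagged rather than passing silently; running the check in CI on every model release keeps documentation in step with model versions.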

C. Medium-Term Readiness (6–12 months)

High-Risk System Certification: Schedule conformity assessments (internal control or notified body, as required); prepare the EU declaration of conformity and CE marking.

Human Oversight Implementation: Deploy human oversight mechanisms and conduct red-team testing/adversarial robustness checks.

User Transparency Layers: Implement transparency features for end-users (labels, explainability summaries, documentation portals).
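Before commissioning a full red-team exercise, a cheap robustness smoke test can show how easily small input changes flip decisions. This sketch assumes random uniform noise and a hypothetical linear scorer; it measures a "flip rate" and is a swapped-in illustrative technique, not a substitute for targeted adversarial attacks or a method prescribed by the Act.

```python
import random
from typing import Callable, Sequence

Vector = Sequence[float]


def flip_rate(
    model: Callable[[Vector], int],
    inputs: Sequence[Vector],
    epsilon: float,
    trials: int = 50,
    seed: int = 0,
) -> float:
    """Share of randomly perturbed inputs whose predicted label changes.

    Each feature gets independent uniform noise in [-epsilon, epsilon]. This is a
    cheap smoke test, not a substitute for targeted adversarial attacks.
    """
    rng = random.Random(seed)
    flips = total = 0
    for x in inputs:
        clean = model(x)
        for _ in range(trials):
            noisy = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
            flips += int(model(noisy) != clean)
            total += 1
    return flips / total if total else 0.0


def toy_scorer(x: Vector) -> int:
    """Hypothetical linear credit model: 1 = approve, 0 = refer."""
    return int(0.8 * x[0] - 0.5 * x[1] > 0.1)


samples = [[1.0, 0.2], [0.125, 0.0], [3.0, 1.0]]
for eps in (0.01, 0.1, 0.5):
    print(f"epsilon={eps}: flip rate {flip_rate(toy_scorer, samples, eps):.2f}")
```

A fixed seed keeps the result reproducible between audits; rising flip rates at small epsilon point to inputs near the decision boundary that deserve human review.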

D. Ongoing Compliance Management

Monitoring Infrastructure: Establish monitoring pipelines and incident response procedures (alerting, human review, reporting to authorities as required).

Audit Trail Maintenance: Keep comprehensive records of all changes to models, datasets and production behavior.

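Supplier Contract Updates: Update supplier contracts to include compliance warranties, data provenance clauses and the information and technical access you need to meet your own obligations. Contracts cannot offload regulatory duties: under Article 25, a distributor, importer or deployer that puts its name or trademark on a high-risk system, substantially modifies it, or changes an AI system’s intended purpose so that it becomes high-risk can itself be treated as the provider.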
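Incident-response procedures need concrete reporting deadlines. This sketch encodes the outer limits as we read Article 73 (15 days for a serious incident, 10 days where a person has died, 2 days for a widespread infringement or serious disruption of critical infrastructure), counted from the day the provider becomes aware. The Act asks for reporting immediately once a causal link is established or reasonably likely, so these are ceilings, not targets; verify them against the text before relying on them.

```python
from datetime import date, timedelta
from enum import Enum


class IncidentType(Enum):
    # Outer limits in days as we read Article 73; the Act expects reporting
    # immediately once a causal link is established or reasonably likely.
    SERIOUS = 15                               # Article 73(2)
    DEATH = 10                                 # Article 73(4)
    WIDESPREAD_OR_CRITICAL_INFRASTRUCTURE = 2  # Article 73(3)


def latest_report_date(became_aware: date, incident: IncidentType) -> date:
    """Last day to report the incident to the market surveillance authority."""
    return became_aware + timedelta(days=incident.value)


aware = date(2026, 9, 1)  # hypothetical date the provider became aware
for kind in IncidentType:
    print(kind.name, latest_report_date(aware, kind))
```

Wiring this into alerting (for example, paging the compliance lead when half the window has elapsed) turns the legal deadline into an operational one.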

6. Penalties, Enforcement & Market Reality

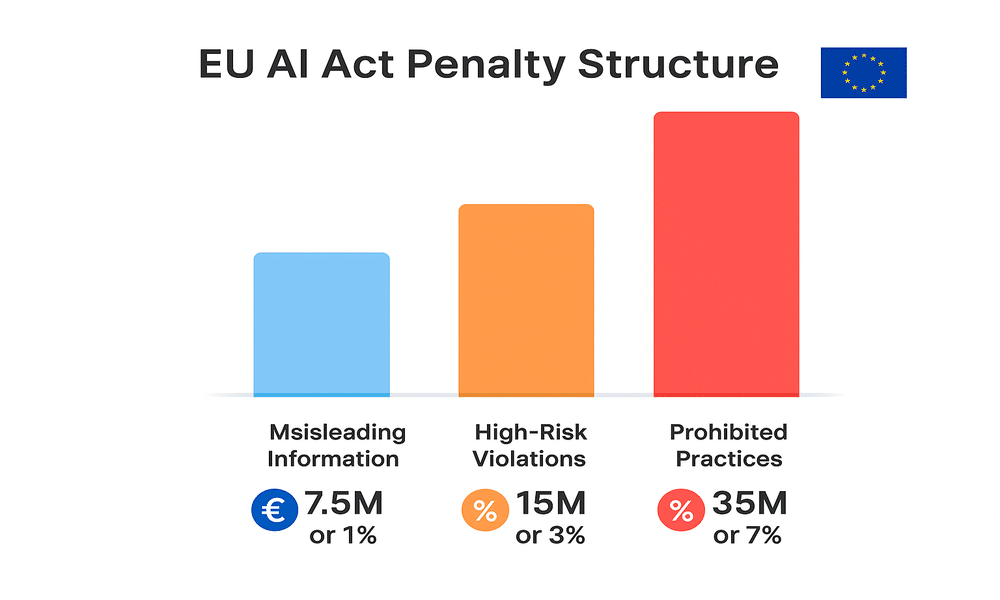

Administrative Fines: The Act provides for substantial penalties: up to €35 million or 7% of worldwide annual turnover (whichever is higher) for prohibited-practice violations, €15 million or 3% for breaches of most other obligations, and €7.5 million or 1% for supplying incorrect, incomplete or misleading information to authorities. For SMEs and start-ups, each cap is the lower of the two amounts.
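The "whichever is higher" rule means exposure scales with company size. A minimal sketch of the maximum fine under the three Article 99 tiers listed above, assuming turnover in whole euros for the preceding financial year; actual fines are set case by case and are often far below the ceiling, and fines for GPAI model providers are handled separately under Article 101. Turnover figures are hypothetical.

```python
from enum import Enum


class Violation(Enum):
    # (fixed cap in EUR, percentage of worldwide annual turnover), Article 99
    PROHIBITED_PRACTICE = (35_000_000, 7)
    OTHER_OBLIGATION = (15_000_000, 3)
    MISLEADING_INFORMATION = (7_500_000, 1)


def max_fine_eur(violation: Violation, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Ceiling of the fine: the higher of the two caps, or the lower for SMEs."""
    fixed_cap, percent = violation.value
    turnover_cap = worldwide_turnover_eur * percent // 100  # integer euros
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


# Hypothetical turnover figures.
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 2_000_000_000))            # 140000000
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 20_000_000, is_sme=True))  # 1400000
```

Integer arithmetic avoids floating-point rounding in the percentage calculation.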

Enforcement Approach: The Commission’s AI Office supervises GPAI model providers, while national market surveillance authorities investigate and act on AI systems. Expect enforcement initially focused on high-risk use cases and GPAI governance, with regulators proceeding despite industry requests for delays.

November 2025 Update: Recent reports indicate the Commission may introduce a one-year grace period for high-risk AI compliance and delay transparency violation penalties until August 2027, following pressure from the Trump administration and major technology companies.

7. Real Business Examples — How Companies Are Preparing

Large Cloud Providers & Model Developers: Major tech companies are forming specialized AI teams and publishing “AI Act” readiness playbooks. Many have signed the General-Purpose AI Code of Practice, a voluntary code published in July 2025, while updating governance documentation to meet GPAI and transparency requirements.

Banks & Healthcare: Sectors with high-risk AI use (credit scoring, diagnostic tools) are mapping conformity routes and seeking notified bodies for assessments well ahead of 2026–2027 deadlines.

Manufacturing & Automotive: Companies developing embedded AI systems are aligning with both AI Act requirements and existing product safety regulations.

8. Quick Implementation Templates

Risk Classification Template

System name: [X]

Function: [e.g., scoring job applicants]

Classification: High-risk (Article 6(2), Annex III point [N])

Owner: [Name]

Next action: Start technical documentation & schedule conformity review

User Transparency Statement Template

"You are interacting with an AI system that [describe function]. This system produces automated outputs under human supervision. For details about data sources and limitations, see [link to documentation]."

9. Frequently Asked Questions

Q: Does the AI Act apply to non-EU companies? A: Yes. The Act applies to providers placing AI systems or GPAI models on the EU market regardless of where they are established, and to non-EU providers and deployers whose systems produce output that is used in the EU.

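Q: Are small companies exempt from compliance? A: No blanket exemption exists; obligations depend on the system’s risk classification, not company size. The Act does include SME-specific measures, such as priority access to regulatory sandboxes, a simplified technical-documentation form and fines capped at the lower of the two amounts, and the Commission has indicated further SME guidance.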

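Q: What exactly is GPAI and why does it matter for business? A: GPAI refers to general-purpose AI models (large models that can perform a wide range of tasks and be integrated into many downstream systems). Since August 2, 2025, their providers must keep technical documentation, share information with downstream providers, adopt a copyright policy and publish a summary of training content; models with systemic risk must also carry out evaluations, adversarial testing and risk mitigation.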

Q: How do recent political developments affect compliance timelines? A: While the official timeline remains unchanged, November 2025 reports suggest potential delays to certain provisions. Businesses should continue compliance preparation while monitoring official EU announcements.

10. Authoritative Sources & Verification

Primary References:

- European Commission AI Act Official Pages & Implementation Timeline

- ArtificialIntelligenceAct.eu — consolidated text & article-by-article analysis

- IAPP Industry Summaries — practical obligation breakdowns

- Reuters & Financial Times reporting on enforcement and recent policy developments

Industry Consultation Papers:

- Eurelectric and legal firm analyses on Annex III and conformity processes

- National implementation progress reports from member states

11. Legal & Editorial Disclaimers

This article summarizes the EU AI Act, official timelines and reputable reporting as of November 11, 2025. It is not legal advice. Businesses should consult qualified legal counsel and regulatory specialists for binding interpretations and to tailor compliance work to their specific circumstances.

Recent Developments Disclaimer: This article reflects the regulatory framework as officially published. Given ongoing political and industry discussions about potential timeline adjustments, readers should monitor official EU communications for any enforcement changes.