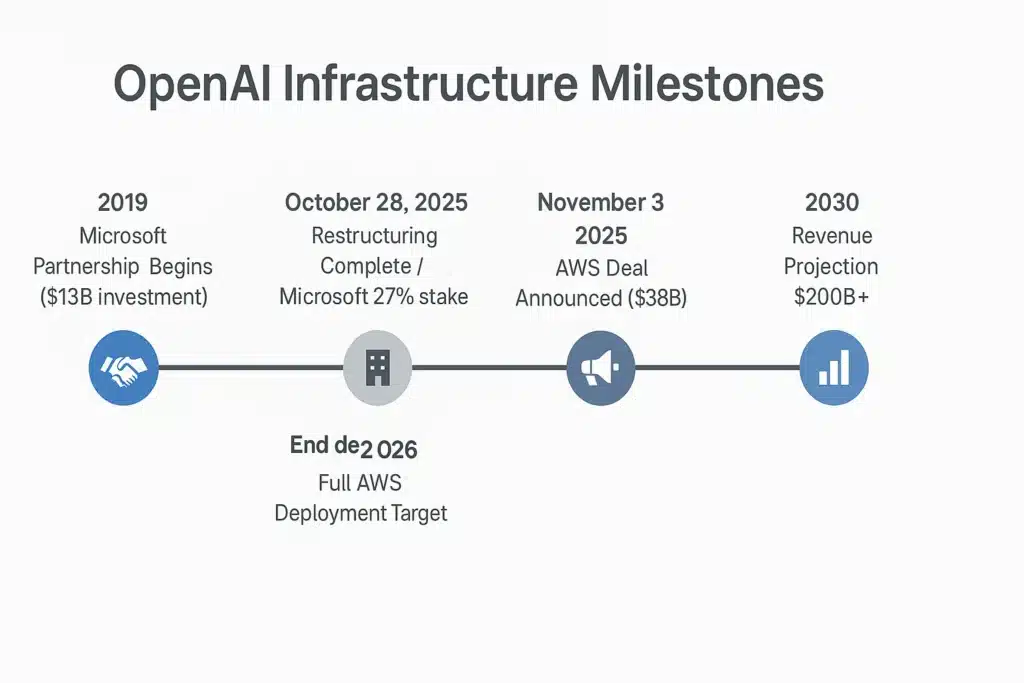

The artificial intelligence world woke up to major news on Monday, November 3, 2025: OpenAI announced a seven-year, $38 billion cloud computing agreement with Amazon Web Services. The partnership ranks among the largest AI infrastructure deals announced to date, and it reshapes the competitive landscape of cloud computing, enterprise AI deployment, and the trajectory of artificial intelligence development.

Source: Multiple verified reports from CNBC, TechCrunch, Amazon Web Services official announcement, and OpenAI statements

The market’s reaction was immediate and dramatic. Amazon’s stock soared to record highs, adding approximately $140 billion in market value in a single day. The announcement sent clear signals to technology executives, investors, and startup founders: the AI infrastructure race has entered a fundamentally new phase characterized by unprecedented scale and strategic importance.

For anyone seeking to understand artificial intelligence’s trajectory—and critically, the infrastructure investments required to support it—this partnership offers revealing insights into how the next generation of AI capabilities will be built and deployed.

The Deal That Changed Everything

What OpenAI Actually Gets

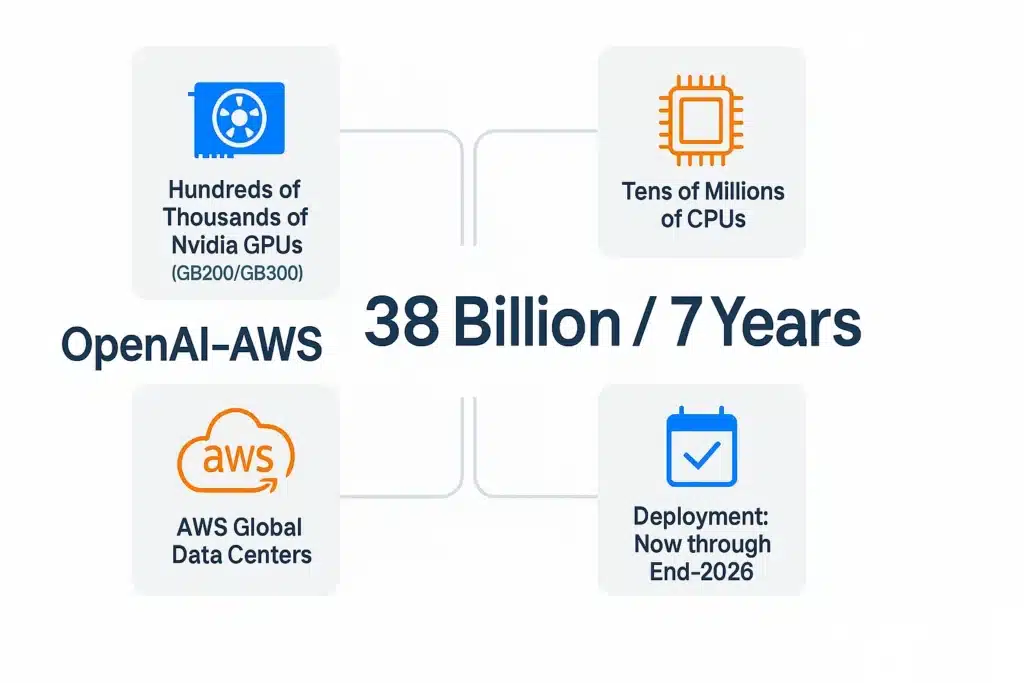

To gauge what $38 billion over seven years buys, start with what OpenAI receives. According to official announcements from both companies, OpenAI gains immediate access to hundreds of thousands of Nvidia graphics processing units, specifically the GB200 and GB300 models, which are among Nvidia’s most advanced AI accelerators.

The agreement extends far beyond graphics processors alone. OpenAI will deploy tens of millions of CPUs for scaling diverse workloads, access massive data center capacity across AWS’s global network, and utilize what Amazon designates as “EC2 UltraServers”—specialized infrastructure architectures designed specifically for the most demanding AI computational requirements.

💡 DEAL BY THE NUMBERS

- $38 billion total commitment over 7 years

- Hundreds of thousands of Nvidia GPUs (GB200/GB300 series)

- Tens of millions of CPUs for scaling workloads

- End-2026 target for full deployment

- 500K+ chips in some AWS AI clusters

- Immediate infrastructure access beginning November 3, 2025
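To put the headline figure in perspective, the short sketch below computes the simple average annual spend implied by the announced total and term. It assumes spending is spread evenly across the seven years, which is an illustrative simplification; the actual payment schedule is not described in the announcements cited here.

```python
# Figures as quoted in this article.
TOTAL_COMMITMENT_USD = 38e9  # $38 billion
TERM_YEARS = 7


def average_annual_spend(total_usd: float, years: int) -> float:
    """Return the simple average spend per year.

    Assumption: spending is spread evenly over the term. The real
    schedule is likely uneven and is not modeled here.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    return total_usd / years


annual = average_annual_spend(TOTAL_COMMITMENT_USD, TERM_YEARS)
print(f"Average annual spend: ${annual / 1e9:.2f} billion")
```

Under the even-spread assumption, the commitment works out to roughly $5.4 billion per year.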

The deployment timeline demonstrates remarkable speed for infrastructure projects of this magnitude. OpenAI began utilizing this new infrastructure immediately upon announcement, with complete capacity scheduled for deployment before the end of 2026. This represents exceptionally fast execution in an industry where major infrastructure projects typically require multiple years from announcement to operational status.

Why This Timing Matters

The AWS announcement arrived just days after OpenAI completed a major internal restructuring. On October 28, 2025—less than a week before the AWS deal—Microsoft’s preferential status expired under newly negotiated commercial terms with OpenAI, fundamentally changing the competitive dynamics of cloud infrastructure partnerships.

Until that October 28 restructuring, Microsoft held a “right of first refusal” on OpenAI’s cloud computing needs, meaning OpenAI had to give Microsoft the first opportunity to supply new capacity before turning to another provider. The timing of the AWS announcement, coming days after this restriction lifted, suggests planning and negotiation that likely stretched over many months.

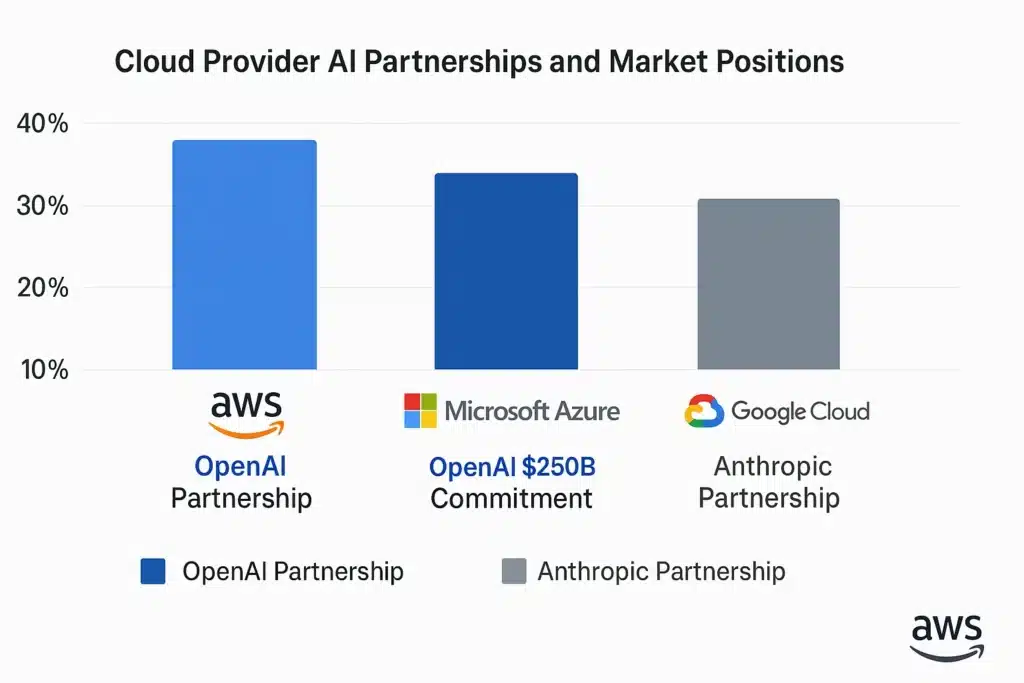

The restructured arrangement fundamentally transforms OpenAI’s infrastructure strategy. While OpenAI simultaneously committed to purchasing $250 billion in Microsoft Azure services (a massive commitment in its own right), the company now operates with freedom to diversify infrastructure across multiple providers. This transition from single-provider dependency to multi-cloud strategy represents a significant evolution in how OpenAI approaches computational resource management.

Industry Impact: A New Competitive Landscape

Microsoft’s Complicated Position

For Microsoft, this announcement has mixed implications. Since 2019, Microsoft has invested $13 billion in OpenAI and positioned itself as the exclusive cloud infrastructure provider for the world’s most prominent AI company. Seeing its star partner publicly announce a massive deal with its primary cloud competitor marks a significant strategic shift.

However, Microsoft’s position remains strong in several important dimensions. It retains exclusive rights to offer OpenAI’s technology through Azure OpenAI Service, giving enterprise customers integrated access to OpenAI models through Microsoft’s cloud platform. The $250 billion commitment OpenAI made to Azure services represents substantial committed revenue. Additionally, Microsoft’s 27% equity stake, valued at approximately $135 billion, positions it as a major beneficiary of OpenAI’s long-term success.
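The stake figures above imply a valuation for OpenAI as a whole. The sketch below backs it out by dividing the stake's value by its ownership fraction. This is a rough check that treats the 27% stake and the $135 billion figure as exact, and the function name is illustrative.

```python
def implied_valuation(stake_value_usd: float, stake_fraction: float) -> float:
    """Back out a whole-company valuation from a stake's value and size.

    Assumption: the stake is valued pro rata, with no discount or
    premium for share class or control rights.
    """
    if not 0 < stake_fraction <= 1:
        raise ValueError("stake_fraction must be in (0, 1]")
    return stake_value_usd / stake_fraction


# Figures as quoted in this article: a 27% stake worth about $135 billion.
valuation = implied_valuation(135e9, 0.27)
print(f"Implied OpenAI valuation: ${valuation / 1e9:.0f} billion")
```

The result, about $500 billion, is consistent with the fundraising valuations mentioned in the FAQ section of this article.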

Nevertheless, the symbolic importance of OpenAI publicly choosing AWS for infrastructure expansion signals a fundamental shift in the partnership dynamics. Microsoft no longer functions as OpenAI’s exclusive infrastructure provider, introducing competitive pressure that didn’t exist previously.

Google Cloud’s Challenge

Google Cloud finds itself navigating complex competitive positioning. The company has built substantial proprietary AI capabilities and maintains its own partnership with OpenAI for certain services. Additionally, Google has invested billions in Anthropic, OpenAI’s primary competitor in frontier AI development.

This AWS deal demonstrates how much scale advantages matter in AI infrastructure competition. Google will need to emphasize its distinctive strengths: custom Tensor Processing Units (TPUs), global network infrastructure, and deep integration between Google’s AI research and cloud offerings. The question facing Google Cloud leadership is whether these differentiators are enough to compete against the computational scale AWS is building out for the OpenAI partnership.

The Startup Ecosystem Ripple Effect

For AI startups, this deal creates both opportunities and challenges. Positively, it signals that cloud providers are willing to make massive investments in AI infrastructure, which should translate to improved services and potentially more accessible pricing for all customers as providers compete for market share.

However, the deal also raises competitive stakes considerably. When OpenAI commits $38 billion to cloud infrastructure as part of an overall $1.4 trillion in total infrastructure spending, it becomes significantly harder for smaller, undercapitalized companies to compete purely on computational power. The advantage may increasingly shift toward companies that discover creative, efficient approaches to AI utilization rather than those that simply deploy the most computing resources against problems.

The Technical Revolution Behind the Deal

What “AI-Scale Infrastructure” Really Means

When discussing hundreds of thousands of GPUs, it is easy to lose perspective on what these numbers mean in practice. A single modern AI accelerator delivers computing throughput that would have required a sizable cluster only a few years ago, and connecting hundreds of thousands of them requires data center designs that did not exist at this scale until recently.

The technical challenges are extraordinary across multiple dimensions:

Power Requirements: These GPU clusters consume enormous electrical power—sufficient to supply small cities. AWS is essentially constructing dedicated power infrastructure specifically to support OpenAI’s computational needs.

Cooling Systems: Modern AI processors generate such substantial heat that conventional cooling methods prove inadequate. AWS is deploying sophisticated liquid cooling systems and advanced thermal management technologies to maintain operational temperatures.

Network Architecture: The connections between processors must sustain very high-bandwidth, low-latency data transfers so that thousands of chips can work on a single training job without stalling one another. This requires custom networking hardware built for that purpose.

Storage Systems: Training AI models demands storing and accessing petabytes of data with minimal latency. This necessitates completely reimagined approaches to storage system architecture at unprecedented scale.

The Nvidia Factor

This deal effectively makes AWS one of Nvidia’s largest customers for the company’s most advanced AI chips. This positioning grants Amazon significant influence over the AI hardware ecosystem and likely secures priority access to Nvidia’s next-generation processors as they become available.

For Nvidia, these massive cloud provider commitments validate its strategic focus on AI-specific hardware. The partnerships provide predictable, substantial revenue streams that support continued innovation in AI processing technology.

This validation aligns with broader semiconductor industry trends, as TSMC and Samsung compete in next-generation 1.4nm chip manufacturing to meet surging AI infrastructure demand driven by partnerships like the OpenAI-AWS agreement.

Business Implications: What This Means for Everyone Else

The New Economics of AI

The scale of OpenAI’s infrastructure commitments—when aggregating all recent deals, approaching $1.4 trillion in total computing investments—fundamentally transforms the economic framework of artificial intelligence development.

For enterprises considering AI deployments, this creates nuanced implications:

Opportunity: Cloud providers will likely offer improved AI services and more competitive pricing as they compete for these massive contracts, potentially benefiting all customers through increased capacity and efficiency.

Challenge: The benchmark for “enterprise-scale” AI is rising rapidly. Organizations that considered running AI models on a few GPUs impressive will soon compete with services powered by hundreds of thousands of processors, fundamentally changing expectations for AI capability and performance.

The Cloud Provider Arms Race

This deal intensifies what is effectively an arms race among cloud infrastructure providers. Each major player, including AWS, Microsoft Azure, and Google Cloud, will face pressure to announce its own massive AI infrastructure investments and strategic partnerships.

Expected developments include:

- Announcements of specialized AI-focused data centers

- Strategic partnerships between cloud providers and AI companies

- Significant investments in custom AI hardware development

- New pricing models designed specifically for AI workload economics

What Enterprises Should Do Right Now

Strategic Planning for the AI Infrastructure Era

Business leaders should consider several critical questions:

Infrastructure Strategy: Does your current cloud provider possess the scale and capabilities necessary to support advanced AI workloads? Even organizations not planning to build the next ChatGPT will increasingly depend on AI services that require this type of infrastructure foundation.

Vendor Relationships: With cloud providers making massive AI infrastructure investments, their partnerships with AI companies become increasingly important strategic considerations. Understanding these relationship dynamics can inform better technology selection decisions.

Cost Planning: AI economics are evolving rapidly. Services that appear expensive today might become bargains within six months, while seemingly affordable options might become unavailable if procurement decisions are delayed too long.

The Multi-Cloud Reality

OpenAI’s strategy of engaging multiple cloud providers offers important lessons for enterprises. While multi-cloud approaches add complexity, they provide several valuable benefits:

- Improved negotiating leverage with providers

- Protection against capacity constraints at any single provider

- Access to specialized services from different platforms

- Reduced risk of vendor lock-in limiting future flexibility

🎯 KEY TAKEAWAY FOR BUSINESS LEADERS

The infrastructure underlying AI services is becoming as strategically important as the AI models themselves. Companies that understand and plan for these infrastructure realities will possess significant competitive advantages in the AI-driven economy.

Looking Ahead: The Future of AI Infrastructure

What This Means for Innovation

This massive infrastructure investment by OpenAI and AWS will likely accelerate AI innovation across several dimensions:

Faster Development Cycles: With access to far more computing power, OpenAI can experiment with larger models and more complex approaches faster than before, potentially shortening the time between major advances in AI capability.

New Application Categories: Some AI applications only become feasible at massive computational scale. This infrastructure opens possibilities for entirely new categories of AI services that weren’t practical with more limited resources.

Democratization Through Scale: Paradoxically, while infrastructure requirements are becoming massive, the resulting scale efficiencies could make AI services more accessible to smaller companies and individual developers through cloud-based access to these capabilities.

The Regulatory Dimension

Deals of this magnitude inevitably attract regulatory scrutiny. The concentration of AI capabilities among a handful of large technology companies raises important questions about competition policy, national security considerations, and technological sovereignty.

Expected regulatory developments include:

- Increased scrutiny from antitrust regulators examining market concentration

- National security reviews of major AI infrastructure deals

- International competition to build sovereign AI capabilities

- New regulatory frameworks specifically designed for AI infrastructure governance

For organizations navigating these evolving requirements, our comprehensive guide to US AI regulation and compliance in 2025 provides detailed analysis of emerging frameworks and compliance strategies.

Global Competition and Strategic Independence

The scale of this deal highlights the strategic importance of AI infrastructure for national economic competitiveness. Countries worldwide are observing these developments and making their own substantial investments in AI capabilities to maintain technological sovereignty.

Regions like the Middle East are accelerating their own AI infrastructure investments, with Saudi Arabia and GCC countries committing billions to AI development as part of national technology strategies aimed at building competitive advantages in the AI-driven global economy.

Europe, China, and other regions will likely accelerate their AI infrastructure projects in response to this announcement. The message from the OpenAI-AWS partnership is unambiguous: access to cutting-edge AI capabilities requires massive infrastructure investments, and technological lag could create serious economic and strategic disadvantages.

Investment and Market Implications

What Investors Should Watch

The market’s immediate positive reaction to this announcement reflects several important investment themes:

Infrastructure as Strategy: Investors are recognizing that AI infrastructure is becoming as strategically important as the AI models themselves. Companies that control critical infrastructure components possess significant structural advantages.

Scale Economics: The massive scale of these investments creates natural barriers to entry and competitive advantages for companies capable of making these commitments, potentially leading to winner-take-most dynamics in certain market segments.

Partnership Dynamics: The complex web of partnerships between AI companies, cloud providers, and chip manufacturers is creating new investment opportunities and risks that require careful analysis of strategic positioning and relationship dynamics.

Sector Rotation Potential

This deal could accelerate broader shifts in technology investment from pure-play AI companies toward the infrastructure companies that enable AI development. Areas likely to attract increased investment include:

- Cloud infrastructure providers

- AI chip manufacturers

- Data center real estate companies

- Power generation and cooling technology providers

- Network infrastructure companies

Conclusion: A Watershed Moment for AI

The OpenAI-AWS deal represents far more than a large corporate partnership—it constitutes a defining moment that will shape artificial intelligence development trajectories over the coming decade.

For OpenAI, the agreement provides the infrastructure foundation necessary to push the boundaries of AI capability. For AWS, it validates their strategic positioning as a critical enabler of the AI revolution. For the broader technology ecosystem, it offers a glimpse into a future where AI development is constrained more by imagination than by available computing power.

The implications extend well beyond these two companies. This deal establishes new standards for enterprise AI infrastructure, fundamentally changes competitive dynamics among cloud providers, and raises the stakes for everyone participating in the AI ecosystem.

As the industry moves forward, companies that understand and adapt to this new infrastructure reality—whether they’re building AI models, deploying AI services, or simply trying to use AI effectively in their businesses—will possess significant advantages over those that fail to recognize these fundamental shifts.

The Strategic Question for Every Business

The fundamental question this deal poses for business leaders is both simple and profound: In a world where AI infrastructure operates at this unprecedented scale, how do you ensure your organization remains competitive?

Whether that requires rethinking cloud strategy, accelerating AI adoption plans, or completely reimagining business models for an AI-driven economy, the time for incremental changes is rapidly passing. The AI infrastructure revolution is not approaching—it has arrived.

The only remaining question is: Are you ready for it?

DISCLAIMERS

Investment Disclaimer: This article is for informational purposes only and does not constitute investment advice, financial guidance, or recommendations to buy or sell securities including Amazon (AMZN), Microsoft (MSFT), or Nvidia (NVDA) stock. Deal terms, company valuations, and infrastructure commitments discussed represent publicly announced figures and company statements but have not been independently verified by Sezarr Overseas News. Readers should conduct their own research and consult with qualified financial advisors before making investment decisions.

Technical Specifications Disclaimer: Technical specifications and infrastructure details cited in this article reflect company announcements and public statements from OpenAI, Amazon Web Services, and partner organizations. Actual deployment timelines, GPU quantities, and infrastructure capabilities may vary based on market conditions, supply chain factors, and evolving business requirements. Infrastructure commitments represent announced agreements and may be subject to modification.

Forward-Looking Statements Disclaimer: This article contains forward-looking statements about infrastructure deployment, market competition, revenue projections, and industry trends. These statements involve significant uncertainties and risks, and actual outcomes may differ materially from projections discussed. Factors affecting outcomes include technological developments, competitive dynamics, economic conditions, regulatory changes, financing availability, and geopolitical events.

Attribution and Sources Disclaimer: Information compiled from verified sources including CNBC reports (November 3-6, 2025), Amazon Web Services official announcements (November 3, 2025), OpenAI statements, Microsoft announcements (October 28, 2025), TechCrunch, Bloomberg, and industry analysis. Market data and stock prices reflect conditions as of November 15, 2025. Readers are encouraged to consult original sources for complete context.

Frequently Asked Questions

Q1: What is the OpenAI-AWS deal and when was it announced?

On November 3, 2025, OpenAI announced a strategic seven-year partnership with Amazon Web Services valued at $38 billion. The agreement provides OpenAI with immediate access to hundreds of thousands of Nvidia’s latest GB200 and GB300 GPUs, along with tens of millions of CPUs across AWS’s global data center network. It adds AWS to a set of infrastructure partners that already includes Microsoft and Oracle, and it ranks among the largest AI infrastructure deals announced to date.

Q2: How much did Amazon’s stock increase after the announcement?

Amazon’s stock surged to record highs following the announcement, adding approximately $140 billion to the company’s market capitalization in a single trading day. The market reaction reflected investor confidence in AWS’s position as a critical infrastructure provider for advanced AI development and validated Amazon’s cloud computing strategy during the AI transformation era.

Q3: Does this mean OpenAI is leaving Microsoft?

No, OpenAI is not leaving Microsoft. On October 28, 2025 (days before the AWS announcement), OpenAI and Microsoft announced a restructured partnership where Microsoft retains a 27% equity stake valued at approximately $135 billion. OpenAI has also committed to purchasing an incremental $250 billion in Microsoft Azure services. However, Microsoft no longer has “right of first refusal” on OpenAI’s cloud purchases, allowing OpenAI to pursue a multi-cloud strategy with multiple providers including AWS, Oracle, and Google.

Q4: What is OpenAI’s total infrastructure spending commitment?

OpenAI CEO Sam Altman confirmed in early November 2025 that the company has accumulated approximately $1.4 trillion in infrastructure commitments over the next eight years. This includes agreements with Amazon AWS ($38 billion), Microsoft Azure ($250 billion), Oracle ($300 billion), Broadcom ($350 billion), Nvidia ($100 billion), AMD ($90 billion), and CoreWeave ($22.4 billion). These commitments span cloud computing, data center capacity, and advanced chip procurement necessary to support frontier AI development.
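As a quick sanity check, the sketch below sums the commitments itemized in this answer and compares the result with the approximately $1.4 trillion headline figure. The amounts are copied from the list above. The gap it reports is simple arithmetic, not a statement about what the remaining commitments are.

```python
# Commitments as itemized in this article, in billions of US dollars.
COMMITMENTS_BILLION_USD = {
    "Amazon AWS": 38.0,
    "Microsoft Azure": 250.0,
    "Oracle": 300.0,
    "Broadcom": 350.0,
    "Nvidia": 100.0,
    "AMD": 90.0,
    "CoreWeave": 22.4,
}
HEADLINE_TOTAL_BILLION_USD = 1400.0  # the "approximately $1.4 trillion" figure

listed_total = sum(COMMITMENTS_BILLION_USD.values())
gap = HEADLINE_TOTAL_BILLION_USD - listed_total

# Print each partner, largest first, with its share of the itemized total.
ranked = sorted(COMMITMENTS_BILLION_USD.items(), key=lambda kv: kv[1], reverse=True)
for partner, amount in ranked:
    print(f"{partner:<16} ${amount:>6.1f}B  {amount / listed_total:6.1%}")

print(f"Itemized total:  ${listed_total:.1f}B")
print(f"Gap to headline: ${gap:.1f}B")
```

The itemized figures total about $1.15 trillion, so roughly $250 billion of the headline number is not accounted for by this list. That remainder presumably reflects commitments not itemized here or rounding in the headline figure.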

Q5: How will OpenAI pay for $1.4 trillion in infrastructure commitments?

OpenAI projects reaching $20 billion in annualized revenue by the end of 2025 and growing to hundreds of billions of dollars by 2030. The company plans to finance its infrastructure through multiple sources: operational revenue growth (particularly from enterprise customers), equity fundraising (OpenAI raised funding at valuations approaching $500 billion in 2025), debt financing arrangements, and strategic partnerships. CEO Sam Altman has clarified that OpenAI is not seeking government loan guarantees or backstops, despite earlier comments from CFO Sarah Friar that caused confusion.
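To give a sense of the growth this projection implies, the sketch below computes the compound annual growth rate needed to move from $20 billion to a target revenue level over five years (end of 2025 to end of 2030). The article says only "hundreds of billions", so the target values used here are hypothetical scenarios chosen for illustration, not OpenAI projections.

```python
def required_cagr(start: float, end: float, years: float) -> float:
    """Return the compound annual growth rate that takes start to end."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


START_REVENUE_BILLION = 20.0  # annualized revenue projected for end of 2025
YEARS = 5                     # end of 2025 to end of 2030

# Hypothetical 2030 targets in billions of dollars (illustrative only).
SCENARIOS_BILLION = [200.0, 300.0, 500.0]

rates = {
    target: required_cagr(START_REVENUE_BILLION, target, YEARS)
    for target in SCENARIOS_BILLION
}
for target, rate in rates.items():
    print(f"${target:.0f}B by 2030 requires about {rate:.1%} growth per year")
```

Even the lowest scenario, a tenfold increase to $200 billion, would require revenue to grow by roughly 58% every year for five consecutive years.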

Q6: What specific infrastructure does OpenAI get from AWS?

Under the agreement, OpenAI gains access to hundreds of thousands of Nvidia’s latest GB200 and GB300 graphics processing units (GPUs), tens of millions of CPUs for scaling workloads, specialized Amazon EC2 UltraServers designed for AI processing, and global data center capacity across AWS’s network. AWS is deploying some of its largest AI clusters exceeding 500,000 chips specifically for OpenAI’s use. The full infrastructure deployment is targeted for completion by the end of 2026, with expansion capabilities extending into 2027 and beyond.

Q7: How does this affect other cloud providers like Google and Oracle?

The AWS deal intensifies competition among cloud providers for AI infrastructure leadership. Google Cloud must double down on its unique strengths including custom Tensor Processing Units (TPUs) and integration with Google’s AI research to compete with AWS’s scale advantages. Oracle, which has its own separate agreement with OpenAI, focuses on specialized data center buildouts. The deal demonstrates that cloud providers willing to make massive AI infrastructure investments can secure strategic partnerships with leading AI companies, potentially reshaping market share dynamics in cloud computing.

Q8: When will OpenAI start using the AWS infrastructure?

OpenAI began using AWS infrastructure immediately upon announcement on November 3, 2025. AWS confirmed that some capacity was already available and operational at the time of the announcement. The full deployment of all committed capacity is targeted for completion before the end of 2026, with the capability to expand further into 2027 and beyond based on OpenAI’s evolving requirements for training next-generation AI models and serving ChatGPT inference workloads.

Q9: Why is Nvidia important to this deal?

Nvidia’s GB200 and GB300 GPUs represent the cutting edge of AI processing technology, providing the computational power necessary for training and operating advanced AI models like those developed by OpenAI. The AWS deal essentially makes Amazon one of Nvidia’s largest customers for these advanced chips, giving AWS significant influence over the AI hardware ecosystem and likely securing priority access to next-generation processors. For Nvidia, these massive cloud provider commitments validate its strategy of focusing on AI-specific hardware and provide predictable revenue streams supporting continued innovation.

Q10: What are the strategic implications for enterprises planning AI deployments?

For enterprises, this deal creates both opportunities and challenges. Opportunities include potentially better AI services and more competitive pricing as cloud providers compete for large contracts, and increased infrastructure availability as providers scale capacity. Challenges include rising expectations for what constitutes “enterprise-scale” AI as industry leaders deploy hundreds of thousands of GPUs, and the need for longer-term infrastructure planning (12-18 months ahead rather than 3-6 months). Enterprises should evaluate their cloud provider relationships, consider multi-cloud strategies for vendor diversification, and understand how these massive partnerships affect their access to advanced AI capabilities.
