Published: November 22, 2025 | Reading Time: 18 minutes | Last Updated: November 23, 2025

Introduction

The machine learning landscape has undergone a remarkable transformation from 2020 to 2025, evolving from specialized algorithms accessible primarily to tech giants into pervasive intelligence integrated across industries of all sizes. The period 2020-2022 witnessed the rise of large language models and foundational architectures, while 2023-2024 focused on scaling, efficiency optimization, and real-world deployment challenges. As we navigate through 2025, we’re experiencing a pivotal shift toward practical implementation, multimodal capabilities, and sustainable AI that balances performance with environmental and economic considerations.

This year marks a critical inflection point where machine learning transitions from experimental projects to core business infrastructure. The convergence of improved algorithms, specialized hardware, mature deployment frameworks, and cost-effective architectures has created an environment where ML applications can deliver consistent value at competitive cost. Corporate investment patterns reflect this maturation: the MLOps market alone is projected to grow from $1.7 billion in 2024 to $5.9 billion by 2027, with a reported compound annual growth rate of 37.4%.
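Growth claims like this are easy to sanity-check. A compound annual growth rate (CAGR) is (end / start)^(1 / years) - 1. The minimal sketch below applies that formula to the quoted endpoints. Treating 2024 to 2027 as three compounding years gives roughly 51% a year, so the 37.4% figure presumably reflects a different base year or forecast window in the underlying market report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Market-size endpoints quoted in the text, in billions of USD.
rate_2024_2027 = cagr(1.7, 5.9, 3)  # three compounding periods: 2024 -> 2027
print(f"Implied CAGR 2024-2027: {rate_2024_2027:.1%}")
```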

The shift toward practical AI deployment is evident across sectors. According to industry analysis, by the end of 2024, 78% of organizations were using some form of artificial intelligence, from chat assistants to predictive models—and that number continues climbing through 2025. For business leaders, the focus has evolved from proving AI works to making it work consistently across their organizations, driving clear returns on investment.

In this comprehensive analysis, we explore the 10 most significant machine learning trends that are reshaping how organizations develop, deploy, and derive value from artificial intelligence. These developments represent the culmination of years of research and experimentation, now maturing into practical technologies with transformative potential across sectors. For those seeking broader context, our guide to AI technology in 2025 provides complementary insights into the ecosystem.

Trend 1: Multimodal Foundation Models

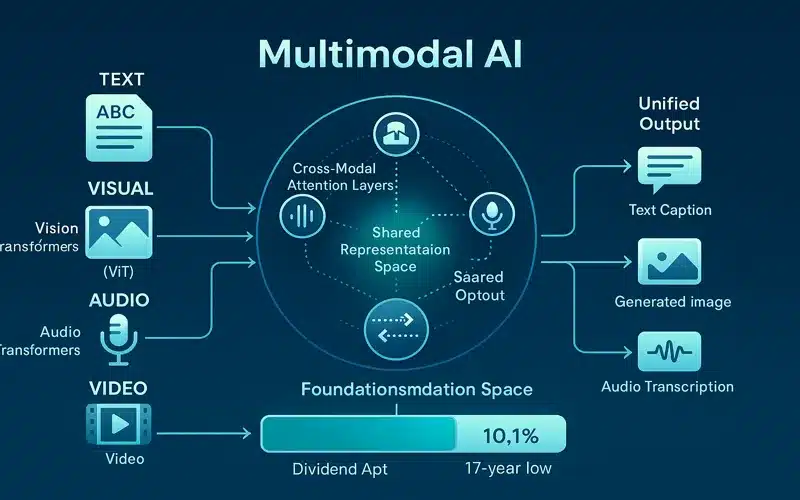

Multimodal foundation models represent the frontier of AI capabilities in 2025, moving decisively beyond single-modality processing to integrated understanding across text, vision, audio, and other data types. These models demonstrate emergent properties that exceed the sum of their parts, enabling sophisticated reasoning about complex, real-world scenarios that previous generations of AI simply couldn’t address.

Technical Architecture and Capabilities

Modern multimodal systems like GPT-4o, Gemini 2.5 Pro, and Claude with vision capabilities process several modalities within a single model. Their exact architectures are not fully disclosed, but published multimodal designs typically rely on mechanisms such as cross-attention that allow different modalities to influence each other during processing. Rather than simply concatenating features from separate encoders, these models learn aligned representations in a shared latent space, enabling genuine cross-modal understanding.
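To make the cross-attention idea concrete, here is a minimal single-head sketch in NumPy in which text tokens act as queries over image-patch tokens. It is a generic textbook formulation, not the internals of any named product; the random weights, token counts, and dimensions are arbitrary assumptions.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(text_tokens, image_tokens, w_q, w_k, w_v):
    """Single-head cross-attention: text queries attend over image keys/values.

    text_tokens:  (n_text, d_model)
    image_tokens: (n_patches, d_model)
    w_q, w_k, w_v: (d_model, d_head) projection matrices
    """
    q = text_tokens @ w_q                    # (n_text, d_head)
    k = image_tokens @ w_k                   # (n_patches, d_head)
    v = image_tokens @ w_v                   # (n_patches, d_head)
    scores = q @ k.T / np.sqrt(k.shape[-1])  # scaled dot products, (n_text, n_patches)
    weights = softmax(scores, axis=-1)       # each text token's distribution over patches
    return weights @ v, weights              # image information mixed into each text position

rng = np.random.default_rng(0)
d_model, d_head = 16, 8
text = rng.normal(size=(4, d_model))   # 4 text tokens (random stand-ins for embeddings)
image = rng.normal(size=(9, d_model))  # 9 image patches
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
fused, attn = cross_attention(text, image, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (4, 8) (4, 9)
```

In a trained model the projection matrices are learned, so the attention weights come to reflect which image regions are relevant to each word.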

The 2025 implementations have made substantial progress on aligning and synchronizing modalities, a challenge that plagued earlier attempts. Key technical innovations include:

- Vision Transformers (ViTs): Adapting transformer architectures to process visual information by treating image patches as tokens, enabling seamless integration with text processing pipelines

- Audio Transformers: Converting audio signals to spectrogram representations that can be processed using transformer models, creating unified architectures for multiple data types

- Sensor Integration: Incorporating IoT and sensor data streams into unified processing pipelines, enabling comprehensive environmental understanding for robotics and industrial applications

- Native Image Generation: Integrated image creation capabilities directly within language models, eliminating the need for separate specialized models
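The "image patches as tokens" step used by Vision Transformers can be sketched in a few lines of NumPy. The 224x224 image and 16x16 patch size below are assumed, commonly used values. A real ViT would follow this with a learned linear projection and position embeddings, both omitted here.

```python
import numpy as np

def image_to_patch_tokens(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patches and flatten each one.

    Returns shape (num_patches, patch_size * patch_size * C): one "token" per
    patch, ordered row by row across the patch grid.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (grid_row, grid_col, p, p, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

img = np.random.default_rng(0).random((224, 224, 3))
tokens = image_to_patch_tokens(img, patch_size=16)
print(tokens.shape)  # (196, 768)
```

The resulting 196 tokens can sit in the same sequence as text tokens, which is what makes integration with text processing pipelines straightforward.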

As noted by industry analysts, “Multimodal AI can process and integrate multiple types of data simultaneously—such as text, images, video, and audio. It converts those input prompts into virtually any output type. This approach helps in context-aware decision-making that more closely mirrors human cognition.”

Business Applications and Impact

The practical applications are transforming industries across the board:

- Healthcare: Radiology systems combining medical imagery with patient history, lab results, and clinical notes for improved diagnostics. Advanced systems can now process imaging, text records, and genomic data simultaneously.

- Manufacturing: Quality control systems analyzing visual defects alongside sensor data, maintenance logs, and production parameters for predictive maintenance and quality optimization

- Retail and E-Commerce: Customer service platforms processing product images, purchase history, and verbal/text descriptions simultaneously for enhanced support experiences

- Content Creation: Tools generating synchronized multimedia content from single prompts, revolutionizing video production, marketing materials, and educational content

- Ecology and Environment: Systems like Washington University’s TaxaBind combine ground-level images, geographic location, satellite imagery, text, audio, and environmental features into cohesive frameworks for species classification and distribution mapping

Research indicates that multimodal systems achieve 68% higher accuracy on complex reasoning tasks compared to single-modality approaches, while reducing hallucination rates by 42% in practical applications—a critical improvement for enterprise deployment.

Trend 2: Small Language Models (SLMs)

The “bigger is better” paradigm in language models has reached its practical and economic limits, giving way to a new era of highly efficient small language models optimized for specific tasks and deployment environments. These models demonstrate that careful architecture design, high-quality training data, and targeted optimization can achieve remarkable performance with substantially reduced computational requirements—often matching or exceeding larger models on domain-specific tasks.

Defining Small Language Models

Small language models typically contain anywhere from a few million to under 10 billion parameters, compared to LLMs with hundreds of billions or even trillions of parameters. There’s no universally agreed-upon definition, and some practitioners stretch the label to around 13B; in practice, SLMs tend to cluster at 1B, 3B, 7B, and 13B parameters. These models require significantly less memory (often running on consumer hardware with 8-16GB RAM) and dramatically lower inference costs than their larger counterparts.

Efficiency Breakthroughs and Leading Models

Several key model families are demonstrating the power of the SLM approach:

Microsoft Phi Family

- Phi-2 (2.7B parameters): Surpasses Mistral 7B and Llama-2 13B on various benchmarks, achieving better performance than the 25x larger Llama-2-70B on multi-step reasoning tasks

- Phi-3-mini (3.8B parameters): Achieves performance comparable to models 10x larger on reasoning tasks, with long context windows up to 128K tokens enabling analysis of extensive documents

- Phi-4 (14B parameters, December 2024): Outperforms comparable and larger models on math-related reasoning while maintaining efficiency advantages

- On-device Performance: Phi-3-mini can be quantized to 4-bit precision, consuming only 1.8GB of memory and achieving 12+ tokens per second on iPhone 14 with A16 Bionic chip—running fully offline
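The memory figures above follow from simple arithmetic: weight memory is roughly parameters times bits per parameter divided by 8. The helper below is a rough sketch that counts weights only and ignores the KV cache, activations, and quantization metadata. For Phi-3-mini at 4 bits it gives about 1.9 GB (roughly 1.77 GiB), consistent with the reported ~1.8GB.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and quantization metadata such as
    per-group scales, so real footprints run somewhat higher.
    """
    return num_params * bits_per_param / 8 / 1e9

for name, params in [("Phi-3-mini", 3.8e9), ("7B model", 7e9)]:
    for bits in (16, 8, 4):
        print(f"{name:10s} {bits:2d}-bit: {weight_memory_gb(params, bits):5.2f} GB")
```

The same arithmetic explains why 7B models fit on 8-16GB consumer machines once quantized to 8 or 4 bits, but not comfortably at 16-bit precision.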

Google Gemma Family

Built on research from the Gemini project, Gemma models (from 2B parameters in the original release, with even smaller variants in later generations) are optimized for deployment on laptops, desktops, or private cloud environments. They demonstrate strong performance across benchmarks including MMLU, HellaSwag, and GSM8K, making them well suited to privacy-first AI applications in small and medium businesses.

Mistral and Meta Models

- Ministral 3B and 8B: Mistral AI’s compact models using sliding window attention for efficient inference, with Ministral 8B outperforming its predecessor Mistral 7B on knowledge, common sense, math, and multilingual tasks

- Llama 3.1 8B: Meta’s open-weight model optimized for dialogue and real-world language generation, offering strong multilingual reasoning with safety protocols for responsible deployment

Deployment Advantages

The practical benefits are driving widespread adoption across enterprises:

- Edge Deployment: SLMs run directly on mobile devices, IoT sensors, and edge hardware with limited resources, enabling privacy-preserving, low-latency applications

- Reduced Inference Costs: SLMs can be 15-40x cheaper to operate than comparable large models, making AI economically viable for cost-sensitive applications

- Lower Latency: Critical for real-time applications like voice assistants, autonomous systems, and interactive interfaces. On modern NPUs like Snapdragon X Elite, 1-2B parameter models achieve sub-80 millisecond inference latency—nearly 10x faster than cloud API calls

- Specialized Capabilities: Models fine-tuned for specific domains (legal, medical, financial, technical support) often outperform general-purpose larger models in their specialized areas

- Offline Capability: Essential for remote areas, secure networks, and applications requiring consistent performance without internet connectivity

- Energy Efficiency: SLMs achieve 10-100x reduction in both latency and energy use compared to LLMs on GPU clusters, supporting sustainability goals

Analysis shows that properly optimized 7B parameter models can handle 89% of enterprise use cases with negligible quality degradation compared to 70B+ parameter alternatives. The strategic implication is clear: for most practical applications, smaller, domain-optimized models deliver better economics without sacrificing performance.

Trend 3: AI Agents & Autonomous Systems

The transition from passive AI tools to active AI agents represents one of the most significant shifts in how machine learning systems interact with the world. These agents don’t just process information and generate responses—they take actions, make decisions, accomplish complex goals, and operate with increasing autonomy across extended workflows.

Architectural Foundations

Modern AI agents build upon several key capabilities that distinguish them from traditional AI applications:

- Tool Use and API Integration: Accessing external systems, databases, services, and software tools to execute actions beyond text generation

- Multi-Step Planning and Reasoning: Breaking complex tasks into executable sequences, adapting strategies based on intermediate results and changing conditions

- Memory and Context Management: Maintaining state across extended interactions, learning from past actions, and building knowledge over time

- Self-Reflection and Error Correction: Evaluating outcomes, identifying mistakes, and adjusting approaches without human intervention

- Goal-Oriented Behavior: Understanding objectives and autonomously determining the best path to achieve them

- Multi-Agent Coordination: Systems coordinating multiple specialized agents to accomplish workflows exceeding individual agent capabilities
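The loop below is a minimal, hypothetical sketch of how tool use, multi-step planning, and memory fit together. The `scripted_planner` function stands in for the language model that would normally choose the next action, and the tools `lookup_order` and `send_email` are invented for illustration. None of this reflects a specific framework’s API.

```python
from typing import Callable, Optional

# Hypothetical tools; in a real agent these would wrap APIs, databases, etc.
def lookup_order(order_id: str) -> str:
    orders = {"A123": "shipped", "B456": "delayed"}
    return orders.get(order_id, "unknown")

def send_email(message: str) -> str:
    return f"email queued: {message}"

TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}

Step = tuple[str, str, str]  # (tool name, argument, observation)

def scripted_planner(goal: str, memory: list[Step]) -> Optional[tuple[str, str]]:
    """Stand-in for an LLM planner: returns (tool, argument), or None when done."""
    if not memory:
        return ("lookup_order", goal)
    last_tool, _, last_result = memory[-1]
    if last_tool == "lookup_order" and last_result == "delayed":
        return ("send_email", f"Order {goal} is delayed")
    return None  # nothing more to do

def run_agent(goal: str, planner, tools, max_steps: int = 5) -> list[Step]:
    memory: list[Step] = []  # history of actions and observations
    for _ in range(max_steps):  # hard step budget bounds autonomy
        action = planner(goal, memory)
        if action is None:
            break
        tool_name, arg = action
        if tool_name not in tools:  # reject actions outside the allowed tool set
            memory.append((tool_name, arg, "error: unknown tool"))
            continue
        observation = tools[tool_name](arg)
        memory.append((tool_name, arg, observation))
    return memory

for step in run_agent("B456", scripted_planner, TOOLS):
    print(step)
```

The step budget and the allow-listed tool dictionary are simple examples of the "autonomy with control" guardrails described in the next section.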

Enterprise Implementation Patterns

Leading organizations are deploying agents within structured frameworks that balance autonomy with control:

- Microsoft Copilot Studio: Enables creation of specialized agents for business processes, integrating with Microsoft 365 and enterprise systems

- Salesforce Agentforce: Automates customer service, sales workflows, and CRM operations with contextual understanding

- Amazon Bedrock Agents: Provides orchestration infrastructure, including multi-agent collaboration, for agent systems in AWS cloud environments

- Internal Operations Agents: Handling IT support ticket resolution, HR onboarding processes, procurement workflows, and logistics management

Industry analysis indicates that organizations using structured agent frameworks achieve 3.2x higher automation success rates compared to custom implementations, while reducing development time by 60%. This represents a shift from AI as an assistive tool to AI as an operational partner capable of end-to-end workflow execution.

Practical Applications

Agentic AI is moving beyond experimental deployments into production systems:

- Contract management agents that draft agreements, file them in appropriate systems, schedule review meetings, and track revision cycles

- Customer support agents that resolve tickets by accessing multiple systems, troubleshooting issues, and implementing solutions

- Research assistants that formulate search strategies, gather information across sources, synthesize findings, and present structured insights

- Logistics coordinators that optimize routes, manage inventory, handle exceptions, and coordinate across supply chain partners

The underlying technology relies on large language models and foundation models trained to understand context, make decisions, and interact with various systems through structured interfaces and protocols.

Trend 4: MLOps and Production Deployment Maturity

The operationalization of machine learning has evolved from an afterthought to a critical discipline, with MLOps (Machine Learning Operations) becoming essential infrastructure for organizations serious about deriving value from AI investments. The explosive growth in this space—from $1.7 billion in 2024 to a projected $5.9 billion by 2027—reflects recognition that successful ML deployment requires sophisticated operational frameworks, not just powerful models.

Core MLOps Capabilities

Modern MLOps platforms provide comprehensive lifecycle management:

- Automated Model Retraining: Detecting data drift and performance degradation, triggering retraining workflows automatically

- Version Control: Managing model versions, data versions, and experiment tracking across teams and deployments

- Model Monitoring: Real-time performance tracking, detecting anomalies, and alerting on degradation

- A/B Testing and Experimentation: Safe deployment of model updates with controlled rollouts and automatic rollback

- Resource Optimization: Efficient allocation of compute resources, cost management, and utilization tracking

- Governance and Compliance: Audit trails, model documentation, and regulatory compliance frameworks
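Drift detection, the trigger for automated retraining, often starts with a simple distribution statistic. The sketch below computes the Population Stability Index (PSI), one common choice among several (others include the Kolmogorov-Smirnov test), for a single numeric feature. The 0.2 retraining threshold is an assumed rule of thumb, not a universal standard.

```python
import numpy as np

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref), using bin fractions."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior cut points from reference quantiles, so each bin holds ~equal reference mass;
    # values beyond the reference range fall into the first or last bin.
    cuts = np.quantile(reference, np.linspace(0, 1, n_bins + 1)[1:-1])
    ref_idx = np.searchsorted(cuts, reference, side="right")
    cur_idx = np.searchsorted(cuts, current, side="right")
    ref_frac = np.bincount(ref_idx, minlength=n_bins) / len(reference)
    cur_frac = np.bincount(cur_idx, minlength=n_bins) / len(current)
    ref_frac = np.clip(ref_frac, eps, None)  # avoid log(0) for empty bins
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))

def should_retrain(reference, current, threshold=0.2):
    # Assumed rule-of-thumb threshold; tune per feature and business risk.
    return population_stability_index(reference, current) > threshold

rng = np.random.default_rng(42)
train_feature = rng.normal(0.0, 1.0, 10_000)
same_dist = rng.normal(0.0, 1.0, 10_000)
shifted = rng.normal(1.0, 1.0, 10_000)  # mean shift of one standard deviation
print(round(population_stability_index(train_feature, same_dist), 3))
print(round(population_stability_index(train_feature, shifted), 3))
print(should_retrain(train_feature, shifted))
```

In a production pipeline, a check like this would typically run on a schedule per feature, with a positive result opening a retraining job rather than retraining blindly.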

Integration with Existing Systems

A critical challenge organizations face is embedding ML systems within existing workflows and infrastructure. Modern MLOps approaches address this through:

- API-first architectures enabling integration with CRMs, ERPs, and analytics platforms

- Hybrid deployment options supporting cloud, on-premises, and edge environments

- Standardized interfaces and protocols for cross-platform compatibility

- Containerization and orchestration using Kubernetes and similar technologies

Foundation models are increasingly being embedded into core enterprise platforms rather than remaining standalone experimental tools. This shift from experimental to core infrastructure represents the maturation of the ML field.

Trend 5: Federated Learning & Privacy-Preserving ML

As data privacy regulations mature globally and concerns about centralized data repositories intensify, federated learning has emerged as a dominant framework for training models on distributed data sources without compromising privacy or security. This approach enables organizations to collaborate on ML development while maintaining data sovereignty and regulatory compliance.

Technical Implementation Advances

Modern federated learning systems incorporate several key innovations:

- Cross-Silo Federated Learning: Coordinating training across organizational boundaries, enabling inter-company collaboration without data sharing

- Differential Privacy Guarantees: Mathematically provable bounds on how much any single individual’s data can influence a model, limiting what can be inferred about individuals from model outputs

- Secure Aggregation Protocols: Combining model updates from multiple sources without exposing individual contributions

- Heterogeneous Data Handling: Robust algorithms managing data that is not independent and identically distributed (non-IID) across participants

- Efficient Communication: Compression techniques and selective update strategies reducing bandwidth requirements
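Federated averaging (FedAvg) is the classic aggregation scheme behind many federated systems. The sketch below assumes three hypothetical clients fitting a shared linear-regression model. Each client runs a few local gradient steps, and the server averages the resulting weights in proportion to client dataset size. Secure aggregation, differential-privacy noise, and update compression are deliberately left out to keep the core idea visible.

```python
import numpy as np

def local_update(weights, x, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on one client's data (linear model, MSE loss)."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * x.T @ (x @ w - y) / len(y)
        w -= lr * grad
    return w

def federated_averaging(global_w, clients, rounds=20):
    """FedAvg: clients train locally; the server averages weights by client dataset size."""
    for _ in range(rounds):
        updates, sizes = [], []
        for x, y in clients:  # in a real deployment, raw (x, y) never leaves the client
            updates.append(local_update(global_w, x, y))
            sizes.append(len(y))
        global_w = np.average(np.stack(updates), axis=0, weights=np.array(sizes, dtype=float))
    return global_w

rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
# Unequal dataset sizes and shifted input distributions mimic non-IID clients.
for n, shift in [(200, 0.0), (50, 1.0), (120, -1.0)]:
    x = rng.normal(shift, 1.0, size=(n, 2))
    y = x @ true_w + rng.normal(0, 0.1, size=n)
    clients.append((x, y))

w = federated_averaging(np.zeros(2), clients)
print(np.round(w, 2))
```

Only model weights cross the network in each round, which is the property that lets hospitals or banks collaborate without pooling raw records.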

Industry Adoption and Use Cases

Federated learning is moving from research concept to production deployment across sectors:

- Healthcare: Training diagnostic models across hospital networks without sharing patient data, enabling collaborative learning while maintaining HIPAA compliance and institutional data control

- Finance: Fraud detection models learning from patterns across multiple financial institutions without exposing customer transaction details

- Mobile Applications: Google’s Gboard keyboard improvements and Apple’s Siri enhancements using on-device learning, keeping data on user devices while improving system-wide performance

- Manufacturing: Quality prediction models aggregating knowledge across global factories without sharing proprietary production data

Apple’s 2025 federated learning implementations reportedly demonstrate 45% faster convergence and 32% higher accuracy compared to its 2023 systems, while maintaining strict privacy guarantees. These improvements make federated approaches competitive with centralized training on key metrics while providing superior privacy protection.

Trend 6: Edge AI & TinyML Advancement

The migration of machine learning from cloud data centers to edge devices has accelerated dramatically in 2025, driven by latency requirements, privacy concerns, operational constraints, and the maturation of both hardware and software stacks supporting on-device intelligence.

Technical Enablers

Several innovations are making edge AI practical and performant:

- Model Compression Techniques: Pruning removes unnecessary connections, quantization reduces numerical precision (4-bit, 2-bit), and knowledge distillation transfers capabilities from larger to smaller models

- Hardware Acceleration: Specialized processors from NVIDIA (Jetson), Google (Edge TPU), Qualcomm (Snapdragon), Apple (Neural Engine), and startups, all optimized for ML workloads

- Optimized Frameworks: TensorFlow Lite (now LiteRT), ExecuTorch (the successor to PyTorch Mobile), ONNX Runtime, and Apple’s Core ML enable efficient model deployment across devices

- Federated Learning at Edge: Collaborative learning across device networks without centralized data collection
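Quantization, one of the compression techniques listed above, can be illustrated with per-tensor symmetric linear quantization. This is a simplified sketch: production toolchains usually use per-channel or per-group scales and pack two 4-bit values per byte, whereas here 4-bit values are simply stored in `int8` for readability. The weight matrix is random and purely illustrative.

```python
import numpy as np

def quantize_symmetric(weights: np.ndarray, bits: int = 8):
    """Per-tensor symmetric linear quantization to signed integers."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    scale = float(np.abs(weights).max()) / qmax
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(0, 0.02, size=(256, 256)).astype(np.float32)
for bits in (8, 4):
    q, s = quantize_symmetric(w, bits)
    err = np.abs(dequantize(q, s) - w).mean()
    print(f"{bits}-bit: mean abs error {err:.6f}")
```

Rounding error per weight is bounded by half the scale, so the 4-bit version trades noticeably more error for half the storage of 8-bit; techniques like per-group scales exist to claw back that accuracy.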

Performance Characteristics

Edge AI delivers compelling performance advantages:

- Ultra-low latency: 1-2B parameter models achieve sub-80ms inference on modern NPUs, nearly 10x faster than cloud alternatives

- Energy efficiency: 10-100x reduction in power consumption compared to cloud-based inference

- Offline capability: Full functionality without network connectivity

- Privacy preservation: Data never leaves the device, eliminating transmission risks

Application Domains

Edge AI is enabling new categories of applications:

- Healthcare Wearables: Continuous monitoring, early detection of anomalies, and personalized health insights processed on-device

- Industrial IoT: Predictive maintenance, quality control, and real-time optimization in manufacturing environments

- Smart Cities: Traffic optimization, public safety applications, and infrastructure monitoring with real-time responsiveness

- Consumer Devices: Enhanced camera capabilities, voice interfaces, and personalized experiences on smartphones and smart home devices

- Autonomous Systems: Vehicles, drones, and robots requiring real-time decision-making without reliance on connectivity

The edge AI device market has grown 300% since 2023, with inference latency reduced by 75% and power efficiency improved by 60% over the same period, demonstrating rapid progress in this critical area.

Trend 7: Explainable AI (XAI) and Regulatory Compliance

The “black box” problem in machine learning has evolved from academic concern to regulatory requirement and business imperative. Explainability is no longer optional—it’s becoming mandatory for AI systems in critical applications, driven by regulation, customer demands, and risk management considerations.

Regulatory Landscape

Multiple regulatory frameworks are shaping XAI requirements:

- EU AI Act: Mandatory explainability requirements for high-risk AI systems, with specific obligations for transparency and human oversight

- NIST AI Risk Management Framework: A voluntary U.S. framework offering standardized approaches for explainable, transparent, and accountable AI development

- Industry-Specific Regulations: Financial services (model risk management), healthcare (clinical decision support), insurance (underwriting transparency), and employment (bias detection)

- Legal Precedent: Growing body of court rulings requiring explanation of automated decisions affecting individuals

Technical Approaches and Tools

The field has matured significantly, with practical tools achieving production-grade performance:

- SHAP (SHapley Additive exPlanations): Model-agnostic explanation method providing consistent feature importance scores based on game theory principles

- LIME (Local Interpretable Model-agnostic Explanations): Explains individual predictions by approximating the model locally with interpretable models

- Attention Visualization: Interpreting transformer-based models through attention patterns, showing what input elements the model focused on

- Concept-Based Explanations: Connecting model behavior to human-understandable concepts rather than raw features

- Counterfactual Explanations: Showing how inputs would need to change to alter outcomes, providing actionable insights
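SHAP builds on Shapley values from cooperative game theory. The brute-force sketch below computes them exactly by enumerating every feature coalition, which shows the definition but is not how the SHAP library works internally (it relies on approximations and model-specific algorithms). Replacing absent features with a fixed baseline is one assumed convention for valuing a coalition, and the toy model is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating all feature coalitions.

    Features outside a coalition take their baseline value. The cost is
    O(2^n) model calls, which is why practical tools approximate.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))  # weighted marginal contribution
    return phi

# Toy model with an interaction term between features 0 and 1.
def model(z):
    return 3 * z[0] + 2 * z[1] + z[0] * z[1] - z[2]

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 3) for p in phi])  # [4.0, 5.0, -4.0]
```

The interaction term's contribution of 2 is split evenly between features 0 and 1, and the attributions sum exactly to the prediction minus the baseline prediction, the "consistent" additive property that makes SHAP scores easy to audit.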

Modern explanation methods achieve 88% fidelity in representing model reasoning (up from 65% in 2022), while becoming computationally efficient enough for production deployment. This represents a critical milestone making XAI practical for real-world applications rather than just research settings.

Trend 8: AutoML and No-Code ML Democratization

The automation of machine learning development has progressed from academic curiosity to essential tooling, dramatically reducing the expertise required to develop and deploy effective models. AutoML (Automated Machine Learning) and no-code platforms are democratizing access to ML capabilities, enabling organizations without deep AI expertise to benefit from these technologies.

AutoML Capabilities

Modern AutoML platforms automate critical development tasks:

- Data Preprocessing: Automatic handling of missing values, outlier detection, and feature encoding

- Feature Engineering: Automated creation of derived features and feature selection

- Model Selection: Testing multiple algorithm types to find optimal approaches

- Hyperparameter Tuning: Systematic optimization of model configurations

- Neural Architecture Search: Automated design of neural network structures optimized for specific tasks and hardware constraints
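Hyperparameter tuning, the simplest of these steps to automate, can be sketched as random search. Commercial AutoML systems typically use more sample-efficient strategies such as Bayesian optimization or successive halving; random search is shown here only as a common baseline. The ridge-regression model, synthetic data, and log-uniform search range are all illustrative assumptions.

```python
import numpy as np

def fit_ridge(x, y, alpha):
    """Closed-form ridge regression: solve (X^T X + alpha I) w = X^T y."""
    d = x.shape[1]
    return np.linalg.solve(x.T @ x + alpha * np.eye(d), x.T @ y)

def random_search(x_train, y_train, x_val, y_val, n_trials=20, seed=0):
    """Sample alpha log-uniformly and keep the value with the lowest validation MSE."""
    rng = np.random.default_rng(seed)
    best_alpha, best_mse = None, np.inf
    for _ in range(n_trials):
        alpha = 10 ** rng.uniform(-4, 3)  # log-uniform over [1e-4, 1e3]
        w = fit_ridge(x_train, y_train, alpha)
        mse = float(np.mean((x_val @ w - y_val) ** 2))
        if mse < best_mse:
            best_alpha, best_mse = alpha, mse
    return best_alpha, best_mse

rng = np.random.default_rng(1)
x = rng.normal(size=(300, 20))
w_true = np.zeros(20)
w_true[:3] = [1.5, -2.0, 0.5]  # only 3 of 20 features are informative
y = x @ w_true + rng.normal(0, 1.0, size=300)
alpha, mse = random_search(x[:200], y[:200], x[200:], y[200:])
print(f"best alpha={alpha:.4g}, validation MSE={mse:.3f}")
```

Sampling on a log scale matters because regularization strength spans several orders of magnitude; a linear grid would waste most trials on large values.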

Platforms like Google AutoML, Microsoft Azure AutoML, H2O.ai, and Auto-sklearn have made these capabilities accessible through user-friendly interfaces, with 2025 versions offering domain-specific templates for healthcare, finance, retail, and other verticals.

No-Code Platforms

No-code ML platforms extend accessibility even further:

- Leading Platforms: DataRobot, KNIME, Amazon SageMaker Canvas, RapidMiner, and others provide graphical interfaces for building end-to-end ML workflows

- Integration Capabilities: Native connections to leading data platforms, business intelligence tools, and enterprise systems

- Built-in XAI: Explainability tools, model validation, and bias detection integrated into workflows

- CI/CD Support: Deployment pipelines enabling continuous integration and delivery of ML models

Benefits and Limitations

Advantages:

- Democratization of ML across skill levels and organizational functions

- Faster prototyping and deployment cycles

- Reduced time and cost for development

- Enhanced collaboration between technical and non-technical stakeholders

Considerations:

- Reduced flexibility compared to custom-coded solutions

- Risk of over-reliance without proper model validation

- May not support cutting-edge techniques or highly specialized requirements

Trend 9: Retrieval-Augmented Generation (RAG) Evolution

Retrieval-Augmented Generation has evolved from a novel architecture to a fundamental pattern for building reliable, factually grounded AI applications. RAG addresses one of the most critical challenges in deploying language models: ensuring accuracy and reducing hallucinations by grounding outputs in verified information sources.

RAG 2.0 Advances

The second generation of RAG systems incorporates significant improvements:

- Multi-Vector Embeddings: Context-rich retrieval using multiple embedding representations for improved semantic matching

- Graph-Based Retrieval: Leveraging knowledge graphs to understand relationships and context beyond simple similarity matching

- Hybrid Search: Combining dense vector search with traditional keyword search for comprehensive retrieval

- Reranking and Filtering: Advanced techniques to select the most relevant retrieved documents before generation

- Source Attribution: Clear citation of sources used in generation, enabling verification and trust
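Hybrid search needs a way to merge a keyword ranking with a vector ranking whose scores are not directly comparable. Reciprocal rank fusion (RRF) is one widely used option that looks only at ranks. In the sketch below the document IDs and rankings are hypothetical, and k = 60 is the commonly cited default constant.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))

keyword_hits = ["doc_b", "doc_a", "doc_d"]  # e.g. from a BM25 keyword index
vector_hits = ["doc_a", "doc_c", "doc_b"]   # e.g. from an embedding index
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['doc_a', 'doc_b', 'doc_c', 'doc_d']
```

Documents that rank well in both lists rise to the top, and the fused list would then typically pass through a reranker before generation.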

RAG vs. Agentic AI

While RAG excels at knowledge grounding, agentic AI systems extend these capabilities:

- RAG is optimal when: Tasks require accurate, fact-based responses grounded in specific knowledge bases or documents

- Agents are better when: Tasks require multi-step reasoning, planning, tool use, or actions beyond information retrieval

- Hybrid Architectures: The future combines RAG for accurate knowledge grounding with agents for complex, multi-step workflows

This hybrid approach provides the best of both worlds—factual accuracy from RAG combined with the autonomous capabilities of agents.

Trend 10: Sustainable and Energy-Efficient ML

Machine learning’s environmental impact has become a critical concern and innovation driver, with the industry focusing on both reducing ML’s own carbon footprint and applying ML to address climate challenges. This dual focus represents both corporate responsibility and practical necessity as energy costs and sustainability requirements shape development priorities.

Efficiency Improvements in ML Systems

Multiple approaches are reducing the environmental footprint of ML:

- Sparse Models and Training: Activating only relevant model portions for each input, reducing computational requirements by 40-60%

- Energy-Aware Scheduling: Optimizing training and inference workloads for periods of renewable energy availability

- Carbon-Aware Computing: Shifting flexible workloads based on grid carbon intensity, reducing emissions without reducing computation

- Model Lifecycle Management: Retiring redundant models, optimizing update frequency, and sharing pre-trained models to reduce duplicate training

- Efficient Architectures: SLMs and optimized architectures delivering comparable performance with dramatically lower resource consumption

- Hardware Innovation: Specialized AI accelerators, photonic processors, and neuromorphic computing offering better performance-per-watt
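Carbon-aware scheduling can be as simple as choosing the start time that minimizes average grid carbon intensity over a job's duration. The sketch assumes an hourly intensity forecast is available (the values below are invented) and that the job is flexible and runs contiguously; real schedulers also weigh deadlines, regions, and hardware availability.

```python
def best_start_hour(carbon_intensity, job_hours):
    """Return (start hour, average intensity) for the lowest-carbon contiguous window."""
    if job_hours > len(carbon_intensity):
        raise ValueError("job longer than forecast horizon")
    best_start, best_avg = 0, float("inf")
    for start in range(len(carbon_intensity) - job_hours + 1):
        window = carbon_intensity[start:start + job_hours]
        avg = sum(window) / job_hours
        if avg < best_avg:
            best_start, best_avg = start, avg
    return best_start, best_avg

# Hypothetical 12-hour forecast in gCO2/kWh, dipping around a midday solar peak.
forecast = [450, 440, 430, 380, 300, 220, 180, 170, 210, 320, 410, 460]
start, avg = best_start_hour(forecast, job_hours=3)
print(start, round(avg, 1))  # 6 186.7
```

The total computation stays the same; only its timing moves, which is how carbon-aware computing cuts emissions without reducing the work done.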

Industry leaders report significant progress: Google’s 2025 environmental analysis showed ML efficiency improvements reducing the carbon footprint of training large models by 70% since 2022, while AI-optimized data center cooling and power management achieved 40% energy savings.

ML for Climate and Sustainability Applications

Beyond reducing its own impact, ML is becoming essential for addressing climate challenges:

- Climate Modeling: Hybrid AI-physics approaches improving climate predictions and understanding of complex Earth systems

- Renewable Energy Optimization: Grid management, production forecasting, and demand prediction for solar, wind, and other renewable sources

- Carbon Capture and Storage: Material discovery and process optimization for carbon sequestration technologies

- Environmental Monitoring: Satellite imagery analysis for deforestation detection, pollution monitoring, biodiversity assessment, and early warning systems

- Resource Optimization: Water management, agriculture efficiency, supply chain optimization to reduce waste and emissions

These applications suggest that, when deployed thoughtfully, ML can be net-positive for climate goals, with the climate solutions it enables potentially more than offsetting its computational costs.

Industry Applications Transformed

The convergence of these machine learning trends is driving measurable transformation across major industry sectors, creating new capabilities, business models, and competitive dynamics.

Healthcare Revolution

Machine learning is enabling healthcare’s shift from reactive to proactive care:

- Early Disease Detection: Algorithms identifying conditions from medical imagery, genetic data, wearable sensors, and multimodal patient records before symptoms manifest

- Drug Discovery Acceleration: Predicting molecular interactions, optimizing clinical trial design, and identifying drug candidates with unprecedented speed

- Personalized Treatment: Tailoring interventions based on individual patient characteristics, genetic profiles, and predicted treatment responses

- Operational Efficiency: Optimizing hospital workflows, resource allocation, scheduling, and administrative processes

The FDA has authorized more than 1,000 AI/ML-enabled medical devices as of 2025, with reported improvements in diagnostic accuracy ranging from 15% to 40% across different applications, representing significant clinical impact.

Financial Services Transformation

The financial industry leverages ML for enhanced security, efficiency, and customer experience:

- Real-Time Fraud Detection: Identifying suspicious patterns across transaction networks with unprecedented accuracy. Leading banks report 60% reduction in false positives while improving detection of actual fraud by 25%—saving hundreds of millions annually

- Algorithmic Trading: Executing complex strategies across multiple markets and timeframes with millisecond latency

- Personalized Financial Services: Tailoring products, advice, and interventions to individual circumstances and goals

- Regulatory Compliance: Automating reporting, monitoring for regulatory requirements, and adapting to changing rules

- Credit Scoring: More inclusive and accurate assessment using alternative data sources and advanced modeling

Manufacturing and Supply Chain Excellence

Intelligent systems are creating more resilient and efficient production networks:

- Predictive Maintenance: Anticipating equipment failures hours or days before they occur, minimizing downtime

- Quality Optimization: Continuously improving production processes based on real-time feedback and multimodal sensing

- Supply Chain Resilience: Modeling disruptions, optimizing inventory levels, and dynamically adjusting logistics

- Energy Efficiency: Reducing consumption through optimized control systems and process management

Analysis of comprehensive ML implementations shows facilities achieving 18% higher overall equipment effectiveness and 22% lower energy consumption compared to conventional automated facilities, demonstrating substantial ROI.

Challenges & Considerations

Despite remarkable progress, significant challenges remain in responsible development and practical deployment of machine learning systems.

Computational and Environmental Costs

Resource requirements continue presenting challenges despite efficiency improvements:

- Training large foundation models can consume as much electricity as thousands of households annually

- Specialized AI accelerators remain expensive and supply-constrained

- Data center water consumption for cooling raises sustainability concerns

- Rapid hardware refresh cycles generate electronic waste
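The household comparison above is a back-of-the-envelope calculation: accelerator count × average power × training time, scaled by the data center's power usage effectiveness (PUE) to include cooling overhead. The sketch below assumes purely hypothetical inputs (10,000 accelerators at 0.7 kW each including host overhead, a 90-day run, PUE of 1.2) and roughly 10,500 kWh per household per year, an approximate figure for an average US home. None of these numbers describe a specific model.

```python
def training_energy_mwh(num_accelerators: int, avg_power_kw: float,
                        training_hours: float, pue: float) -> float:
    """Facility-level energy for one training run, in megawatt-hours.

    pue (power usage effectiveness) scales IT power up to include cooling
    and other data-center overhead.
    """
    it_energy_kwh = num_accelerators * avg_power_kw * training_hours
    return it_energy_kwh * pue / 1_000


def household_years(energy_mwh: float, household_kwh_per_year: float) -> float:
    """How many households' annual electricity use the energy corresponds to."""
    return energy_mwh * 1_000 / household_kwh_per_year


# All inputs are hypothetical, chosen only to show the arithmetic.
energy = training_energy_mwh(num_accelerators=10_000, avg_power_kw=0.7,
                             training_hours=90 * 24, pue=1.2)
homes = household_years(energy, household_kwh_per_year=10_500)
print(f"{energy:,.0f} MWh ≈ {homes:,.0f} household-years of electricity")
# 18,144 MWh ≈ 1,728 household-years of electricity
```

Halving any single input (fleet size, run length, or overhead) halves the result, which is why the efficiency and sustainability work discussed earlier has a direct effect on these totals.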

Data Quality and Algorithmic Bias

The foundational principle of “garbage in, garbage out” remains critically relevant:

- Data Scarcity: High-quality labeled data remains expensive and time-consuming to create

- Bias Amplification: Models can perpetuate and amplify societal biases present in training data

- Distribution Shift: Performance degradation when models encounter data different from training distributions

- Adversarial Vulnerability: Deliberately crafted inputs can cause model failures
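Distribution shift is usually caught by comparing live inputs against the training data. One common monitoring metric is the population stability index (PSI), the sum over bins of (actual − expected) × ln(actual / expected). The sketch below is a simplified version that assumes equal-width bins over the combined range; many production tools bin on quantiles of the reference sample instead. The function name and the synthetic data are illustrative.

```python
import math
import random
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample (e.g. training data) and a new sample.

    Uses equal-width bins over the combined range for simplicity.
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against a zero-width range

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
same_dist = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
shifted = [rng.gauss(0.5, 1.0) for _ in range(5_000)]  # feature mean has drifted

print(f"PSI, no shift:   {population_stability_index(training, same_dist):.3f}")
print(f"PSI, mean shift: {population_stability_index(training, shifted):.3f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are conventions and are best tuned per feature and use case.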

Talent and Organizational Barriers

Human factors remain critical for successful ML adoption:

- Skills Gap: 68% of organizations cite talent acquisition as their primary barrier to ML adoption

- Integration Complexity: 52% point to challenges in embedding ML within existing workflows and systems

- Organizational Resistance: Cultural barriers to adopting AI-driven processes and decisions

- Ethical Governance: Developing frameworks for responsible development and deployment

Successful organizations address these through investment in training, change management, and building interdisciplinary teams combining technical, domain, and ethical expertise. For additional insights on navigating these challenges, see our analysis of AI implementation in healthcare and financial services.

Conclusion

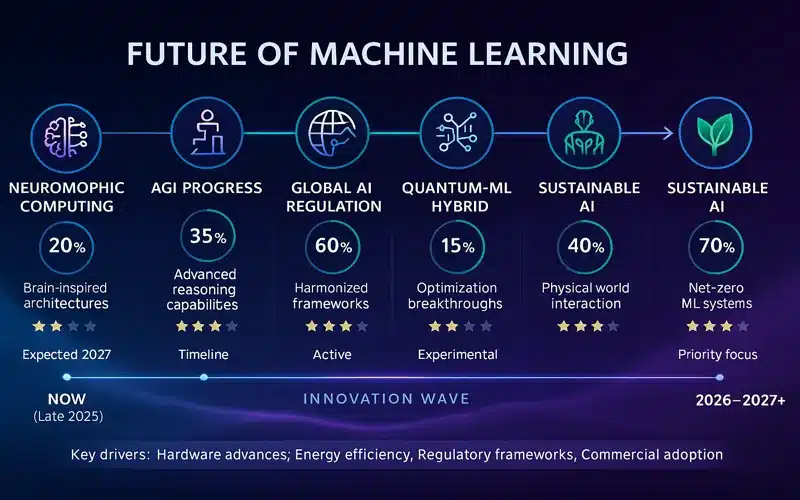

The machine learning landscape in 2025 reflects a field reaching maturity, with a decisive shift from theoretical capabilities to practical implementation and measurable business impact. The trends we’ve examined—from multimodal foundation models to sustainable AI and edge computing—represent the culmination of years of research and development now delivering tangible value across industries.

For business and technology leaders, several key principles should guide ML strategy and investment:

- Focus on practical applications that solve specific business problems rather than pursuing technology for its own sake

- Prioritize data quality and governance as the foundation for successful ML initiatives

- Build interdisciplinary teams combining technical, domain, and ethical expertise

- Consider full lifecycle costs including environmental impact, energy consumption, and maintenance requirements

- Establish robust testing and monitoring to ensure reliability and identify issues early

- Balance innovation with responsibility, addressing bias, privacy, and explainability from the start

The organizations that will thrive in this evolving landscape are those that approach machine learning as a strategic capability rather than a tactical tool, building foundations for continuous adaptation and learning. The democratization of ML through small language models, no-code platforms, and improved tooling means that competitive advantage will increasingly come from execution, data strategy, and organizational readiness rather than just model access.

As we look toward 2026 and beyond, several developments bear watching: the maturation of neuromorphic computing architectures, continued progress in model efficiency and sustainability, evolution of regulatory frameworks globally, and potentially disruptive innovations from emerging research directions. The pace of change shows no signs of slowing, but the field is becoming more accessible, practical, and focused on real-world impact.

For organizations just beginning their ML journey or looking to expand existing initiatives, the time to act is now. The tools, platforms, and knowledge base have never been more mature or accessible. Success requires moving beyond experimentation to systematic implementation, combining the right technology with strong data foundations, skilled teams, and clear business objectives.

For broader context on technology trends and strategic planning, explore our resources on investment strategies, quantum computing applications, and AI security considerations.

Frequently Asked Questions

What’s the difference between machine learning and AI?

Artificial intelligence (AI) is the broader field of creating intelligent systems that can perform tasks requiring human-like intelligence. Machine learning is a specific approach within AI that focuses on developing algorithms that learn patterns from data without explicit programming. In 2025, most practical AI applications are built using machine learning techniques, particularly deep learning and neural networks, though other approaches like symbolic reasoning and expert systems still have roles in specific domains. Think of it this way: AI is the goal (intelligent behavior), while ML is the primary method we use to achieve it.

How is machine learning different in 2025 compared to 2020?

The machine learning landscape has evolved dramatically in five years. Key differences include: the dominance of foundation models and transfer learning in place of custom architectures for each application; increased focus on efficiency, deployment practicality, and cost-effectiveness rather than pure accuracy; maturation of tools and platforms, reducing the expertise required for implementation; greater emphasis on explainability, fairness, and environmental impact; the rise of small language models as practical alternatives to massive models; a shift from experimental projects to production systems delivering measurable business value; and widespread adoption of multimodal capabilities. The 2025 field is more accessible, practical, and focused on solving real-world problems efficiently.

What skills are needed for ML careers in 2025?

Successful ML professionals in 2025 need a blend of technical and soft skills. Core technical requirements include proficiency with modern ML frameworks (PyTorch, TensorFlow), understanding of transformer architectures and attention mechanisms, experience with cloud ML platforms (Amazon SageMaker, Azure Machine Learning, Google Vertex AI), knowledge of deployment and monitoring tools (MLOps), and data engineering capabilities. Equally important are domain expertise in specific application areas, ethical reasoning about AI impacts, communication skills for explaining technical concepts to non-experts, ability to work in interdisciplinary teams, and business acumen to identify high-impact applications. The role increasingly emphasizes integration, deployment, and business value over pure research or algorithm development.

Will machine learning replace data scientists and ML engineers?

Rather than replacing these professionals, machine learning is transforming their roles and responsibilities. AutoML and no-code tools are automating routine tasks like feature engineering, basic model selection, and hyperparameter tuning. This allows data scientists and ML engineers to focus on higher-value activities: problem formulation and business understanding, data strategy and quality, interpreting complex results and generating insights, ensuring ethical implementation and addressing bias, creating business impact through deployment, and strategic decision-making. The 2025 data scientist spends less time on repetitive coding and more on strategic collaboration, system design, and translating between technical capabilities and business needs. Demand for skilled ML professionals remains strong, but the nature of the work continues to evolve toward more strategic and less tactical activities.

What are the biggest ML breakthroughs of 2025?

The most significant breakthroughs include: practical multimodal systems that robustly integrate vision, language, audio, and other modalities in production applications; efficient small language models delivering much of the capability of large models with a fraction of the resources, democratizing access; maturation of AI agent frameworks enabling autonomous task completion and multi-step workflows; significant advances in model efficiency, reducing computational requirements by 40-70% through architecture innovations; improved explainability methods achieving 88% fidelity in representing model reasoning; federated learning systems enabling privacy-preserving collaboration across organizations; edge AI capabilities bringing sophisticated models to mobile and IoT devices; and progress in sustainable AI, reducing environmental impact while maintaining performance. These represent both technical innovations and practical deployment advances that make ML more accessible and impactful.

How much does it cost to implement machine learning in business?

ML implementation costs vary dramatically based on approach, scale, and requirements. For small businesses, cloud-based AutoML platforms start at $100-500 monthly for basic applications, while no-code tools like DataRobot begin around $1,000-5,000 monthly. Mid-sized implementations with custom models, data infrastructure, and dedicated staff might cost $50,000-250,000 annually. Enterprise-scale deployments with multiple use cases, extensive infrastructure, and specialized teams can exceed $1 million annually. However, small language models and efficient cloud services have dramatically reduced costs compared to 2020-2022. The key is starting with focused, high-value applications that demonstrate ROI before scaling. Many organizations begin with pilot projects costing $10,000-50,000 to validate approaches before major investment.

What’s the ROI timeline for machine learning projects?

ROI timelines vary significantly by application and implementation quality. Simple automation projects using existing platforms may show returns within 3-6 months. Custom ML models for specific business processes typically require 6-12 months to demonstrate value. Complex initiatives involving infrastructure buildout, data quality improvements, and organizational change may take 12-24 months for full ROI realization. However, 2025's mature tooling and best practices enable faster results than in earlier years. Organizations seeing the fastest returns focus on: clearly defined business problems with measurable outcomes, high-quality data or achievable data improvement plans, strong executive sponsorship and cross-functional collaboration, starting small and scaling based on demonstrated success, and leveraging existing platforms rather than building from scratch. The shift toward small language models and efficient architectures also accelerates time-to-value.
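A simple way to reason about these timelines is a payback calculation: track cumulative net benefit month by month until it covers the upfront cost. The sketch below assumes a hypothetical pilot ($30,000 upfront, within the $10,000-50,000 pilot range mentioned earlier), a hypothetical $8,000 monthly benefit at full adoption, $1,000 monthly running costs, and a linear ramp-up while the organization adopts the system. The `payback_month` function and the linear-ramp assumption are illustrative, not a standard ROI model.

```python
from typing import Optional


def payback_month(upfront_cost: float, monthly_benefit: float,
                  monthly_running_cost: float, ramp_months: int = 0,
                  max_months: int = 60) -> Optional[int]:
    """First month in which cumulative net benefit covers the upfront cost.

    Benefits are assumed to phase in linearly over `ramp_months` (adoption,
    retraining, process change); running costs start immediately.
    Returns None if the project has not paid back within `max_months`.
    """
    cumulative = -upfront_cost
    for month in range(1, max_months + 1):
        ramp = min(month / ramp_months, 1.0) if ramp_months > 0 else 1.0
        cumulative += monthly_benefit * ramp - monthly_running_cost
        if cumulative >= 0:
            return month
    return None


# Hypothetical pilot: $30,000 upfront, $8,000/month benefit at full adoption,
# $1,000/month to run.
print(payback_month(30_000, 8_000, 1_000, ramp_months=3))  # 6
print(payback_month(30_000, 8_000, 1_000))                 # 5 (no ramp-up period)
```

Even in this toy model, a three-month adoption period adds a month to payback, which mirrors the point above that organizational readiness affects time-to-value as much as the model itself.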

Is machine learning suitable for small businesses?

Absolutely: 2025 has made ML more accessible than ever for small and medium-sized businesses. Several factors enable this: cloud platforms offer pay-as-you-go pricing starting under $100 monthly, eliminating large upfront infrastructure costs; no-code tools require minimal technical expertise for implementation; small language models run efficiently on modest hardware, reducing compute costs by 15-40x compared to large models; pre-trained models and APIs provide sophisticated capabilities without training costs; and a growing ecosystem of specialized vendors offers turnkey solutions for common needs (customer service, inventory optimization, fraud detection, personalization). Small businesses should focus on specific, high-impact use cases rather than broad initiatives, leverage existing platforms and tools rather than building custom systems, start with pilot projects to validate the approach and ROI, and consider partnerships with ML service providers for specialized needs. Success comes from identifying valuable applications and executing well, not from having the largest AI team or budget.

Important Disclaimer

Educational and Informational Purpose: This article is provided for educational and informational purposes only and does not constitute professional advice, recommendations, or endorsement of specific technologies, platforms, or approaches. The machine learning field evolves rapidly, and information current as of November 2025 may change.

No Professional Services: This content does not constitute consulting, implementation, or technical services. Readers should consult qualified professionals including data scientists, ML engineers, legal advisors, and business consultants before implementing machine learning initiatives.

Technology and Performance Claims: Performance metrics, benchmarks, and capabilities discussed are based on publicly available information and may vary significantly based on specific implementations, use cases, data quality, and environmental factors. Actual results will differ based on numerous variables.

Vendor and Platform References: Mention of specific companies, platforms, models, or tools does not constitute endorsement or recommendation. Readers should conduct independent evaluation based on their specific requirements, budget, and constraints.

Regulatory and Compliance: Laws and regulations governing AI and machine learning vary by jurisdiction and change frequently. Organizations must consult legal professionals familiar with applicable regulations in their operating regions before deploying ML systems.

Security and Privacy: Implementing machine learning systems has security, privacy, and ethical implications. Organizations must conduct appropriate risk assessments, security reviews, and privacy impact analyses before deployment.

No Guarantee of Results: Success with machine learning depends on numerous factors including data quality, technical implementation, organizational readiness, and market conditions. No outcomes, ROI, or performance levels are guaranteed.

Rapidly Evolving Field: The machine learning field advances rapidly. Information, best practices, and recommendations may become outdated. Readers should seek current information when making decisions.

Third-Party Research and Data: This article references research, reports, and data from various sources. While we strive for accuracy, we cannot guarantee the completeness or accuracy of all referenced materials.

No Liability: The author, publisher, and Sezarr Overseas accept no responsibility or liability for any losses, damages, costs, or expenses arising from use of, reliance upon, or implementation of any information, strategies, or recommendations contained in this article.

Seek Professional Guidance: Machine learning implementation involves technical, legal, ethical, and business considerations. Always seek appropriate professional guidance for your specific situation.

Information Current as of: November 22, 2025. The rapidly evolving nature of machine learning means developments may have occurred after this date.

About Sezarr Overseas: We provide comprehensive analysis and insights on emerging technologies, investment strategies, and market trends. For more expert content on navigating the AI landscape and building competitive advantage, explore our resources on leading AI tools, regulatory compliance, and infrastructure planning.