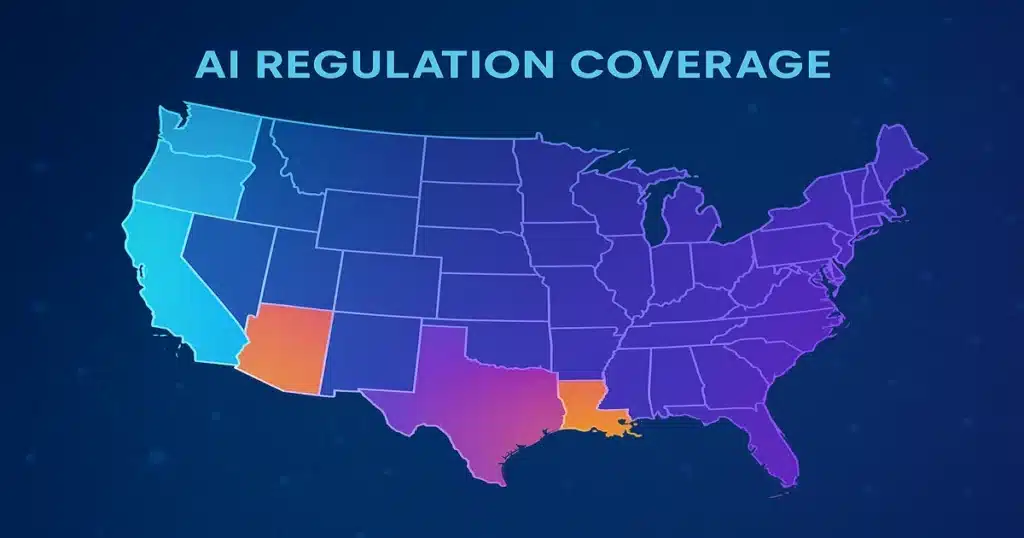

The United States AI regulatory landscape transformed dramatically in 2025, as states rushed to fill the federal void with their own legislation. While no comprehensive federal AI law exists, 38 states enacted approximately 100 AI-related measures, and lawmakers across all 50 states introduced over 1,080 AI-related bills throughout the year.

This rapid proliferation of state regulations has created what experts describe as a “compliance maze” that varies significantly depending on whether businesses operate in California, Texas, New York, or Colorado. The stakes are high: non-compliance can result in substantial penalties, lawsuits, and operational restrictions.

The State AI Regulation Explosion: Over 1,080 Bills Introduced

2025 Legislative Activity: State lawmakers introduced a record 1,080+ AI-related bills in 2025 alone, though only 118 became law, representing an 11% passage rate. This gap between introductions and enactments reveals the ongoing tension between mitigating AI risks and fostering innovation.

Key 2025 Statistics:

- 1,080+ bills introduced across all 50 states

- 118 bills became law (11% passage rate)

- 38 states enacted approximately 100 AI-related measures

- 301 bills specifically targeted deepfakes, with 68 enacted

- 9 states passed laws regulating AI based on use or context
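The passage rates implied by these statistics can be checked with a few lines of arithmetic. The sketch below uses only the counts quoted above. Because the article reports "1,080+" introductions, the overall rate should be read as an approximate upper bound. The function and variable names are illustrative.

```python
# Counts quoted in the statistics above.
bills_introduced = 1080   # reported as "1,080+", so treat this as a floor
bills_enacted = 118
deepfake_bills = 301
deepfake_enacted = 68


def passage_rate(enacted: int, introduced: int) -> float:
    """Return the share of introduced bills that were enacted, as a percentage."""
    return 100 * enacted / introduced


overall_rate = passage_rate(bills_enacted, bills_introduced)
deepfake_rate = passage_rate(deepfake_enacted, deepfake_bills)

print(f"Overall passage rate:  {overall_rate:.1f}%")
print(f"Deepfake passage rate: {deepfake_rate:.1f}%")
```

On these figures, deepfake bills passed at roughly twice the overall rate (about 22.6% versus about 10.9%).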

“The volume is high, but the impact has been modest. That gap between introductions and enactments reveals a deeper tension between the desire to mitigate AI’s risks and the fear of stifling innovation.” (MultiState Analysis, 2025 Legislative Session)

The most successful legislative area has been deepfake regulation, particularly addressing sexual deepfakes through criminal or civil penalties. Four states (Arkansas, Montana, Pennsylvania, and Utah) passed digital replica laws to protect digital identity and consent.

California’s Multiple AI Laws: Leading the Nation

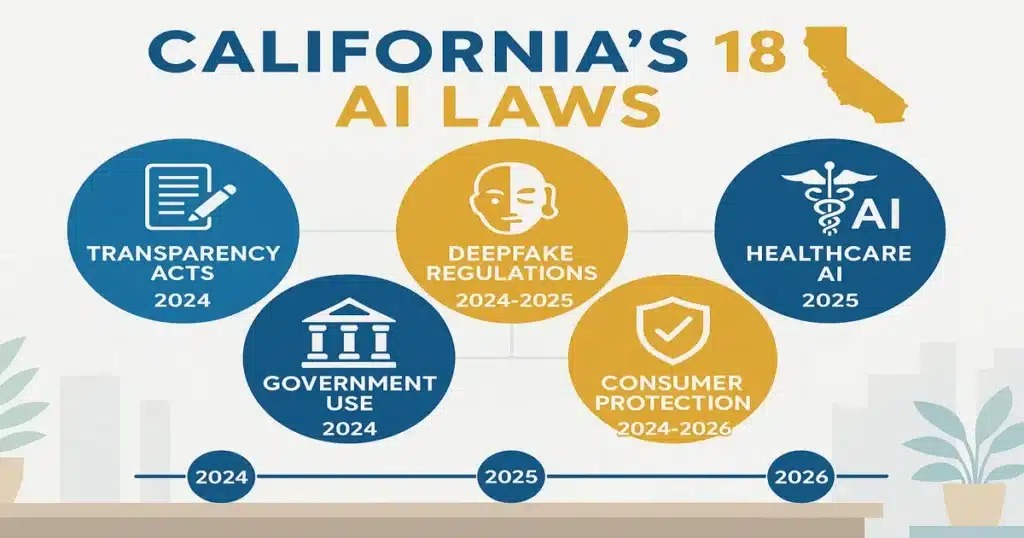

California’s Legislative Approach: 18 New AI Laws in 2024-2025

California took a different approach from other states, passing 18 separate AI laws rather than one comprehensive bill. Governor Gavin Newsom vetoed the landmark SB 1047 (Safe and Secure Innovation for Frontier Artificial Intelligence Models Act) in September 2024, but subsequently signed multiple targeted bills addressing specific AI applications.

Key California AI Laws Enacted:

1. SB 53 – Transparency in Frontier Artificial Intelligence Act (TFAIA)

Signed: September 29, 2025 | Effective: January 1, 2026

- Applies to: Developers of “frontier models” (trained using >10^26 FLOPs)

- Requirements: Publish comprehensive Frontier AI Framework annually

- Documentation: Governance structures, catastrophic risk mitigation, cybersecurity practices

- Penalties: Up to $1,000,000 per violation

- Enforcement: California Attorney General

- Whistleblower protections: Strong provisions for employees reporting safety risks
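As a rough illustration of the compute threshold quoted above, the sketch below compares an estimated training budget against 10^26 FLOPs. The estimate uses the common "6 × parameters × tokens" rule of thumb, which is an assumption for illustration and not a method drawn from SB 53. The example model size is hypothetical. Whether a model actually qualifies is a legal question that depends on the statute's own definitions.

```python
FRONTIER_THRESHOLD_FLOPS = 1e26  # threshold quoted above for "frontier models"


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough estimate via the 6 * N * D heuristic (an assumption, not from SB 53)."""
    return 6 * parameters * training_tokens


def exceeds_frontier_threshold(training_flops: float) -> bool:
    """True if the compute figure is above the quoted 10^26 FLOPs threshold."""
    return training_flops > FRONTIER_THRESHOLD_FLOPS


# Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
example_flops = estimate_training_flops(70e9, 15e12)
print(f"Estimated training compute: {example_flops:.2e} FLOPs")
print("Above threshold:", exceeds_frontier_threshold(example_flops))
```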

2. SB 942 – California AI Transparency Act

Signed: September 19, 2024 | Original effective date: January 1, 2026 | Delayed to: August 2, 2026

- Applies to: Generative AI systems with >1 million monthly users

- Requirements: Latent (hidden) disclosures embedded in AI-generated content

- Content covered: Images, video, audio (excludes text)

- AI detection tool: Must be made available to users

- Contractual obligations: Licensees must maintain disclosure capabilities

3. AB 2013 – Generative AI Training Data Transparency

Effective: January 1, 2026

- Requirements: Disclose summaries of datasets used to train AI models

- Documentation: Whether data sources are proprietary or public

- Public availability: Information must be publicly accessible

4. SB 896 – Generative Artificial Intelligence Accountability Act

Signed: September 29, 2024 | Effective: January 1, 2025

- Applies to: California state agencies using generative AI

- Requirements: Public disclosure of AI use and risk documentation

- Contractor compliance: Private businesses contracting with agencies must comply

5. SB 243 – Companion Chatbot Safety Act

Signed: October 2025

- Protection for minors: Detect and respond to self-harm expressions

- Transparency: Disclose conversations are AI-generated

- Content restrictions: Restrict explicit material for minors

- Break reminders: Remind minors to take breaks every 3 hours

- Annual reporting: Beginning 2027, publish safety protocol reports

6. SB 926 – Deepfake Pornography Criminalization

Effective: January 1, 2025

- Prohibition: Non-consensual deepfake intimate images

- Private right of action: Victims can sue for damages

- Enforcement: California Attorney General

7. CPPA Regulations on ADMT (Automated Decision-Making Technology)

Finalized: May 2025 | Compliance deadline: August 3, 2025

- Opt-out rights: Consumers can opt out of ADMT for “significant decisions”

- Significant decisions: Housing, employment, credit, healthcare

- Pre-use notices: Required before ADMT use

- Risk assessments: Required for high-risk data practices

New York’s AI Hiring Laws & Bias Prevention (NYC Local Law 144)

NYC Local Law 144: First-in-Nation AI Hiring Audit Law

Effective Date: July 5, 2023 (enforcement began) | Applies to: Employers and employment agencies operating in New York City

NYC Local Law 144 Requirements:

- Bias Audits: Annual independent third-party audits required for Automated Employment Decision Tools (AEDTs)

- Audit Scope: Test for disparate impact on race/ethnicity, sex, and intersectionality

- Candidate Notification: At least 10 business days before AEDT use

- Public Disclosure: Audit results must be posted on company website for at least 6 months

- Audit Contents: Date of audit, data sources, selection rates, impact ratios for all categories

- Independent Auditor: Must have no financial interest in the employer
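The selection rates and impact ratios that an audit must report can be illustrated with a small calculation. The sketch below assumes the usual definitions: a category's selection rate is the number selected divided by the number of applicants in that category, and its impact ratio is that rate divided by the highest selection rate across categories. The category names and counts are hypothetical, and a real audit must follow the city's published rules and be performed by an independent auditor.

```python
def selection_rates(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each category to selected / applicants.

    `counts` maps category -> (number selected, number of applicants).
    """
    return {cat: selected / total for cat, (selected, total) in counts.items()}


def impact_ratios(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each category's selection rate by the highest selection rate."""
    rates = selection_rates(counts)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No category has any selections; ratios are undefined.")
    return {cat: rate / highest for cat, rate in rates.items()}


# Hypothetical applicant data: category -> (selected, applicants).
sample_counts = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (20, 50),
}

ratios = impact_ratios(sample_counts)
for category, ratio in sorted(ratios.items()):
    print(f"{category}: impact ratio {ratio:.2f}")
```

The category with the highest selection rate always has an impact ratio of 1.0. Lower ratios indicate categories selected less often relative to it.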

Penalties for Non-Compliance:

- First violation: Up to $500, which also applies to each additional violation on the same day

- Subsequent violations: $500 to $1,500 per violation

- No cap: Civil penalties can accumulate without limit

- Weekly penalties: Up to $10,000 per week for continued violations

Proposed New York State Legislation (2025)

Two proposed bills would expand NYC’s law statewide and close loopholes:

- NY AI Act (S7623): Introduced January 8, 2025, would extend requirements statewide

- Assembly Bill A9315: Would allow private right of action for violations

- Key change: Would cover AI tools that “assist” in decisions, not just those playing a “predominant role”

Texas AI Framework: Intent-Based Liability Approach (TRAIGA)

Texas Responsible Artificial Intelligence Governance Act (TRAIGA)

Signed: June 22, 2025 by Governor Greg Abbott | Effective: January 1, 2026

IMPORTANT CLARIFICATION: Texas does NOT impose “strict liability” for AI harms, despite a common misconception. Instead, it took a more business-friendly approach, requiring proof of intentional misconduct rather than imposing liability for outcomes alone.

TRAIGA Key Provisions:

Prohibited AI Practices:

- Constitutional infringement: Cannot develop/deploy AI with sole intent to infringe constitutional rights

- Unlawful discrimination: Cannot develop/deploy with intent to unlawfully discriminate (requires proof of intent, not just disparate impact)

- Exploitation of minors: Cannot develop with sole intent to create/distribute CSAM or explicit deepfakes of minors

- Social scoring (government): Government entities cannot use AI for social scoring

- Biometric identification: Government cannot identify individuals without consent

Intent-Based Liability Framework:

Critical Distinction: Texas requires proving discriminatory intent, providing businesses greater legal certainty than impact-based liability models.

- High standard: Must prove “sole intent” or intentional discrimination

- Mere disparate impact: Insufficient to establish violation

- Third-party misuse protection: Not liable if others misuse AI system for prohibited purposes

Safe Harbor Provisions:

- NIST compliance: Substantial compliance with NIST AI Risk Management Framework

- Good faith testing: Violations discovered through internal testing/red-teaming

- State agency guidelines: Following published state guidelines

- 60-day cure period: Attorney General must provide notice and cure opportunity

Regulatory Sandbox:

- First-in-nation: 36-month testing period without standard state licenses

- Administration: Department of Information Resources

- Requirements: Application, quarterly reporting, risk mitigation measures

Enforcement:

- Authority: Texas Attorney General (exclusive)

- Civil penalties: Up to $10,000 per violation

- No private right of action: Only AG can enforce

- Preemption: Local AI regulations prohibited

Colorado AI Act: First Comprehensive State Regulation

Colorado Artificial Intelligence Act (SB24-205)

Passed: May 2024 | Original effective date: February 1, 2026 | Delayed to: June 30, 2026

Colorado was the first state to pass comprehensive AI legislation regulating “high-risk” AI systems. The law has undergone reconsideration, with lawmakers postponing the effective date to allow further refinement.

Colorado AI Act Key Features:

- High-risk systems: AI systems making or substantially assisting consequential decisions

- Consequential decisions: Education, employment, financial services, government services, healthcare, housing, insurance, legal services

- Developer requirements: Impact assessments, risk management, documentation

- Deployer requirements: Risk management programs, impact assessments, transparency notices

- Consumer rights: Right to opt out, right to appeal adverse decisions

- Penalties: Up to $20,000 per violation

Economic Impact Projection:

Common Sense Institute projects the Colorado AI Act will cost the state:

- 40,000 jobs by 2030

- $7 billion in economic output by 2030

Industry-Specific Regulations: Healthcare & Finance

Healthcare AI: State Medical Board Regulations

Multiple states have implemented specific regulations for healthcare AI applications. Healthcare AI faces unique scrutiny due to patient safety concerns.

Common Healthcare AI Requirements Across States:

- Clinical validation: Rigorous testing for diagnostic AI

- Physician oversight: Mandatory human review of AI recommendations

- Medical device classification: AI software classified as medical devices in multiple states

- Malpractice considerations: Clear liability frameworks for AI-assisted care

- Patient consent: Specific consent requirements for AI-based treatments

- Record review: Healthcare providers must review all AI-generated records

Texas Healthcare-Specific AI Requirements (Effective September 1, 2025):

- Data location: Health records must be stored in US or territories

- Practitioner review: Must review AI-generated records per Texas Medical Board standards

- Biological sex inclusion: AI algorithms must include individual’s biological sex

Financial Services AI: State Banking Regulations

State banking regulators have developed AI oversight frameworks distinct from federal regulations:

Financial AI State Regulations:

- Credit decision transparency: Explainability requirements for AI-based lending

- Fair lending compliance: Enhanced anti-discrimination requirements beyond federal law

- Model risk management: State-specific model validation standards

- Cybersecurity requirements: AI system security and breach reporting obligations

- Consumer redress: Clear processes for challenging AI decisions

- Insurance AI: Many states now require human oversight of AI utilization review

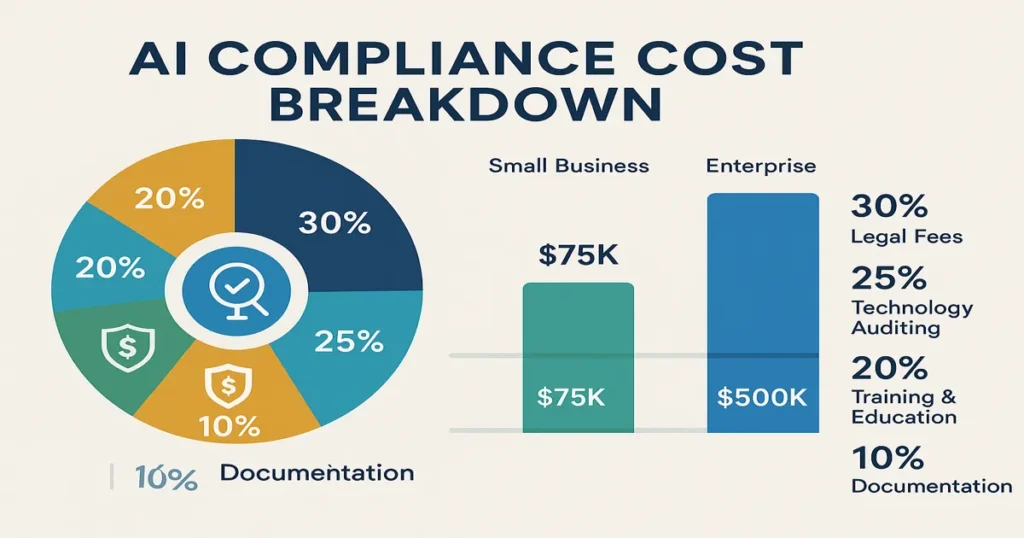

Compliance Costs: The Real Business Impact

Multi-State Compliance Burden on Businesses

While comprehensive national cost figures are difficult to verify, the compliance burden from state-by-state AI regulations is substantial and measurable:

Documented Compliance Cost Examples:

California CPPA Regulations:

- Projected cost: Over $500 million for businesses

- Small business impact: Nearly $16,000 in annual compliance costs per small business in California

- First-year costs: 120 hours of work per firm at blended rate of $56.40/hour
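The first-year labor figure follows directly from the hours and blended rate quoted above. The sketch below reproduces only that labor component. It does not attempt to reconstruct the separate "nearly $16,000" annual estimate, whose inputs are not given here. Variable names are illustrative.

```python
from decimal import Decimal

# Figures quoted above for first-year compliance work per firm.
hours_per_firm = 120
blended_hourly_rate = Decimal("56.40")  # dollars per hour

# Decimal avoids binary floating-point rounding on currency amounts.
first_year_labor_cost = hours_per_firm * blended_hourly_rate

print(f"First-year labor cost per firm: ${first_year_labor_cost:,.2f}")
```

This works out to $6,768 per firm in first-year labor.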

Colorado AI Act:

- Projected job losses: 40,000 jobs by 2030

- Economic output: $7 billion reduction by 2030

Multi-State Complexity:

- Small business concern: 65% worried about rising litigation and compliance costs from conflicting state laws

- AI adoption impact: One-third would scale back AI use, and one-fifth would be less likely to use AI at all

- Interstate innovation tax: 29.2 million small businesses outside California absorb costs to operate in all markets

Compliance Cost Categories:

| Cost Category | Typical Business Impact |
|---|---|
| Legal & Compliance Staff | Significant increase in compliance hiring; legal fees for interpreting regulations |
| Technology & Auditing | Third-party bias audits; AI governance platforms; testing infrastructure |
| Training & Education | Workforce upskilling for AI compliance; ongoing education programs |
| Insurance & Liability | New AI liability insurance products; increased premiums |
| Documentation & Reporting | Increased administrative burden; record-keeping systems |
| System Modifications | Building separate systems for different states; tripled development costs |

Small Business Disproportionate Impact

Small businesses face particularly acute challenges from the state-by-state regulatory patchwork:

- Resource constraints: Cannot afford multi-state compliance teams

- Limited AI adoption: 82% of small businesses using AI report increased productivity, but regulatory concerns prevent expansion

- Competitive disadvantage: Compliance costs favor large corporations with dedicated legal teams

- Innovation slowdown: Delay or abandon AI projects due to regulatory uncertainty

- Market access: May avoid operating in highly regulated states

Multi-State Compliance Strategy Framework

Strategic Compliance Prioritization

Businesses operating in multiple states need a structured approach to AI compliance:

State Prioritization Tiers:

Tier 1 (Critical – Immediate Action Required):

- California: Multiple laws, largest market, highest penalties

- Colorado: First comprehensive AI Act (effective June 30, 2026)

- Texas: TRAIGA effective January 1, 2026

- New York: NYC Local Law 144 actively enforced

Tier 2 (Significant – Active Monitoring):

- Illinois: Sector-specific requirements (video interviews, biometric data)

- Washington: Privacy legislation with AI provisions under debate

- Utah: Specific disclosure requirements for AI in regulated professions

- Connecticut: Passed SB 1295 with ADMT provisions

Tier 3 (Emerging – Future Planning):

- Maryland: Working groups studying private sector AI use

- Virginia: Previous comprehensive bill vetoed, new proposals expected

- Other states: With pending legislation or regulatory proposals
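One way to act on this prioritization is to track effective dates in one place and ask what is already in force and what is coming next. The sketch below is a minimal example that uses only dates quoted in this article. The dictionary keys and function names are illustrative, and dates should be re-verified against official sources because several have already been delayed once.

```python
from datetime import date

# Effective or enforcement dates as quoted in this article.
EFFECTIVE_DATES = {
    "NYC Local Law 144 (enforcement)": date(2023, 7, 5),
    "California SB 53": date(2026, 1, 1),
    "Texas TRAIGA": date(2026, 1, 1),
    "Colorado AI Act (SB24-205)": date(2026, 6, 30),
    "California SB 942": date(2026, 8, 2),
}


def in_effect(on: date) -> list[str]:
    """Return the laws whose quoted effective date is on or before `on`."""
    return sorted(name for name, start in EFFECTIVE_DATES.items() if start <= on)


def upcoming(on: date) -> list[tuple[str, int]]:
    """Return (law, days until effective) pairs for later dates, soonest first."""
    pending = [
        (name, (start - on).days)
        for name, start in EFFECTIVE_DATES.items()
        if start > on
    ]
    return sorted(pending, key=lambda item: item[1])


as_of = date(2025, 11, 21)  # publication date of this article
print("In effect:", in_effect(as_of))
for law, days in upcoming(as_of):
    print(f"{law}: {days} days away")
```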

Practical Compliance Steps

Immediate Actions for Businesses:

- Conduct Comprehensive AI Inventory

  - Identify all AI systems and applications currently in use

  - Document purpose, data sources, and decision-making role

  - Map AI systems against state requirements

- Assess State-by-State Exposure

  - Identify which states you operate in or serve customers in

  - Research current and pending AI legislation

  - Create compliance matrix by jurisdiction

- Implement Highest Common Denominator Approach

  - Build to strictest state standards (typically California or Colorado)

  - Reduces need for multiple versions

  - Positions well for future federal framework

- Establish AI Governance Committee

  - Cross-functional representation: legal, technical, business, ethics

  - Regular review of compliance status

  - Authority to approve/reject AI deployments

- Invest in Compliance Technology

  - Unified compliance dashboards

  - Automated impact assessments

  - Bias testing tools

  - Audit trail systems

- Develop Documentation Systems

  - Training data provenance

  - Decision-making processes

  - Testing and validation results

  - Incident response logs

- Consider NIST Framework Adoption

  - Many state laws reference NIST AI Risk Management Framework

  - Provides safe harbor in Texas

  - Industry-recognized standard
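The inventory and compliance-matrix steps above can be sketched as a small data structure. This is a simplified illustration, not a legal test. The `AISystem` fields, the `DECISION_TRIGGERS` table, and the example systems are all hypothetical. The trigger table paraphrases only the decision areas listed earlier in this article for California's ADMT rules, Colorado's consequential decisions, and NYC's hiring audits. Jurisdictions missing from the table, such as Texas with its intent-based framework, are marked as not modelled rather than as compliant.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory."""
    name: str
    purpose: str
    decision_area: str                      # e.g. "employment", "credit"
    jurisdictions: set[str] = field(default_factory=set)


# Simplified decision areas per jurisdiction, taken from this article's summaries.
DECISION_TRIGGERS = {
    "CA": {"housing", "employment", "credit", "healthcare"},
    "CO": {"education", "employment", "financial services", "government services",
           "healthcare", "housing", "insurance", "legal services"},
    "NYC": {"employment"},
}


def compliance_matrix(systems: list[AISystem]) -> dict[str, dict[str, Optional[bool]]]:
    """Map system -> jurisdiction -> True (review needed), False, or None (not modelled)."""
    matrix: dict[str, dict[str, Optional[bool]]] = {}
    for system in systems:
        row: dict[str, Optional[bool]] = {}
        for place in sorted(system.jurisdictions):
            triggers = DECISION_TRIGGERS.get(place)
            row[place] = None if triggers is None else system.decision_area in triggers
        matrix[system.name] = row
    return matrix


inventory = [
    AISystem("resume_screener", "rank job applicants", "employment", {"CA", "NYC", "TX"}),
    AISystem("chat_summarizer", "summarize support tickets", "customer support", {"CA", "CO"}),
]

matrix = compliance_matrix(inventory)
for system_name, row in matrix.items():
    print(system_name, row)
```

A `True` entry only flags a system for legal review in that jurisdiction. It does not establish that a given statute applies.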

Federal Preemption: The 2026 Outlook

Federal AI Legislation Landscape

The relationship between federal and state AI regulation remains in flux as of late 2025:

Key Federal Developments:

2025 Executive Actions:

- January 2025: President Trump signed “Removing Barriers to American Leadership in Artificial Intelligence” executive order

- Approach: Prioritizes economic competitiveness and innovation over restrictive regulation

- Biden EO rescinded: Removed many AI safety measures from previous administration

- July 2025: “America’s AI Action Plan” released with 90+ policy actions

Congressional Activity:

- H.R. 1 Provision (proposed): Would have suspended state AI regulations for 10 years (stripped from final version)

- Senate draft approach: Would condition federal funding on states agreeing not to regulate AI

- Status: No comprehensive federal AI law passed as of November 2025

- Outlook: Uncertain whether Republican-controlled Congress will prioritize AI legislation

Pending Federal Bills (Examples):

- TAKE IT DOWN Act: Addresses non-consensual intimate imagery, including AI-generated content; signed into law in May 2025 and therefore no longer pending

- Various sector-specific proposals: Healthcare, employment, deepfakes

- Emphasis: Most proposals focus on voluntary guidelines rather than binding requirements

State vs. Federal Tension

The ongoing debate between state innovation and federal uniformity creates strategic challenges:

“A growing patchwork of state regulations will continue to stifle businesses and undermine the nation’s innovation and leadership in the technology sector without federal action.” (U.S. Chamber of Commerce, 2025)

Business Strategy Recommendations While Awaiting Federal Clarity:

- Don’t wait: State laws are actively enforced now; federal preemption may not happen or may be partial

- Build for flexibility: Design AI systems that can adapt to changing requirements

- Document everything: Maintain comprehensive records of compliance efforts

- Engage in policy: Participate in state regulatory processes and provide industry feedback

- Monitor continuously: Regulatory landscape changes rapidly

- Budget adequately: Compliance is an ongoing operational cost, not one-time expense


💡 Executive Summary: Key Compliance Insights

- 1,080+ AI bills introduced across all 50 states in 2025, with 118 becoming law

- 38 states enacted approximately 100 AI measures, creating a complex compliance patchwork

- Four major regulatory frameworks: California (multiple targeted laws), Colorado (comprehensive high-risk AI act), Texas (intent-based liability), New York (hiring bias audits)

- Compliance costs are significant but vary by state and business size, with small businesses disproportionately affected

- Industry-specific regulations in healthcare and finance add additional complexity

- Federal preemption uncertain for 2026; businesses must comply with state laws now

- Highest common denominator approach recommended for multi-state operations

- NIST AI Risk Management Framework provides safe harbor in multiple jurisdictions

The state AI regulation landscape in 2025 represents an unprecedented shift in technology governance. With 38 states enacting approximately 100 AI-related measures and over 1,080 bills introduced, businesses face a genuinely complex compliance environment that varies significantly by jurisdiction.

While the specific costs and requirements differ from earlier projections, the fundamental challenge remains: businesses operating nationally must navigate multiple, sometimes conflicting, state requirements for AI development and deployment. The most successful organizations will be those that proactively address these requirements rather than waiting for federal preemption that may never come—or may only partially resolve the complexity.

California’s multiple targeted laws, Colorado’s comprehensive high-risk framework, Texas’s intent-based liability approach, and New York’s hiring bias audits represent four distinct regulatory philosophies. Understanding these approaches and building compliance programs that can adapt to each is now a critical business competency, not an optional enhancement.

❓ Frequently Asked Questions About State AI Regulations

How many US states have AI regulations in 2025?

As of 2025, 38 states have enacted approximately 100 AI-related measures. While lawmakers across all 50 states introduced over 1,080 AI-related bills during 2025, only 118 became law (an 11% passage rate). The regulatory landscape varies significantly by state, with California, Colorado, Texas, and New York having the most comprehensive frameworks. It’s important to note that not all states have comprehensive AI laws—many have passed targeted legislation addressing specific uses like deepfakes, employment decisions, or government AI use.

Which states have the most comprehensive AI regulations?

Four states lead in AI regulation: California has passed 18 separate AI laws covering transparency, deepfakes, healthcare, and frontier AI models. Colorado passed the first comprehensive AI Act (SB24-205) regulating high-risk AI systems, effective June 30, 2026. Texas enacted TRAIGA with an intent-based liability framework, effective January 1, 2026. New York enforces Local Law 144 requiring bias audits for AI hiring tools. Each state takes a different approach: California uses multiple targeted laws, Colorado uses a comprehensive high-risk framework, Texas requires proof of intent for violations, and New York focuses on employment discrimination prevention.

What are the penalties for non-compliance with state AI regulations?

Penalties vary significantly by state: California can levy fines up to $1,000,000 per violation under SB 53 (Frontier AI Act), and up to $7,500 per violation under various other laws. Colorado penalties reach up to $20,000 per violation. New York City penalties range from $500 for first violations to $1,500 for subsequent violations, with no cap on total civil penalties. Texas can impose civil penalties up to $10,000 per violation. Beyond monetary fines, non-compliance can result in class action lawsuits, business restrictions, reputational damage, and loss of customer trust. Some violations also carry private rights of action, allowing individuals to sue directly.

Should businesses wait for federal AI legislation before investing in compliance?

No, waiting is not advisable for three critical reasons: (1) State laws are actively enforced now—California, New York, Colorado, and Texas all have laws in effect or taking effect in early 2026. (2) Federal legislation may not fully preempt state laws—even if Congress passes AI legislation, it may allow states to maintain more stringent requirements, similar to California’s approach with privacy law. (3) Building compliance capabilities takes time—developing governance frameworks, conducting audits, and training staff requires months of preparation. The current federal approach under the Trump administration emphasizes innovation and voluntary guidelines rather than restrictive regulation, making significant federal preemption less certain. Businesses should implement compliance programs now using a “highest common denominator” approach.

What is the “highest common denominator” approach to multi-state AI compliance?

The “highest common denominator” approach means building your AI systems and compliance programs to meet the most stringent state requirements—typically California or Colorado standards—for all your operations nationwide. This strategy has several advantages: (1) Reduces complexity by avoiding multiple system versions for different states. (2) Ensures compliance everywhere since meeting the strictest standards automatically satisfies less stringent ones. (3) Positions for future regulations as other states often model laws on California or Colorado. (4) Simplifies auditing and documentation with one consistent standard. (5) Builds customer trust by demonstrating commitment to highest standards. While this approach may involve higher initial costs, it’s typically more efficient than managing state-by-state variations and reduces legal risk.

How do I start with multi-state AI compliance for my business?

Begin with these six critical steps: (1) Conduct a comprehensive AI inventory—identify all AI systems, their purposes, data sources, and decision-making roles. (2) Map state exposure—determine which states you operate in or serve customers in, and research their AI laws. (3) Prioritize high-risk applications and major markets—focus first on California, Colorado, Texas, and New York if you operate there. (4) Establish an AI governance committee with legal, technical, business, and ethics representation. (5) Implement or adopt the NIST AI Risk Management Framework—this provides safe harbor protections in multiple states including Texas. (6) Invest in compliance technology—automated monitoring, bias testing tools, and documentation systems. Consider consulting with AI compliance specialists who understand the multi-state landscape.

Does Texas have strict liability for AI harm?

No, this is a common misconception. Texas’s Responsible AI Governance Act (TRAIGA) does NOT impose strict liability. Instead, Texas requires proof of intentional misconduct or discriminatory intent. To violate TRAIGA’s prohibition on discrimination, there must be evidence of “intent to unlawfully discriminate”—mere disparate impact is insufficient. This intent-based approach makes Texas more business-friendly than strict liability models. Additionally, TRAIGA provides multiple safe harbors: companies substantially complying with NIST’s AI Risk Management Framework, conducting good faith testing, following state guidelines, or experiencing third-party misuse are protected from liability. Texas also provides a 60-day cure period after Attorney General notice. This approach provides businesses with greater legal certainty compared to outcome-based liability.

What is the NIST AI Risk Management Framework and why is it important?

The NIST AI Risk Management Framework (AI RMF) is a voluntary guidance document developed by the National Institute of Standards and Technology for managing AI risks. It’s organized around four core functions: Govern (establishing organizational AI governance), Map (understanding AI system contexts), Measure (assessing reliability, safety, and bias), and Manage (implementing controls and monitoring). The framework is critically important because: (1) Multiple state laws reference it—Texas, Colorado, and others specifically mention NIST standards. (2) Provides safe harbor protection—Texas TRAIGA explicitly protects organizations substantially complying with NIST’s AI RMF. (3) Industry-recognized standard—becoming the de facto compliance benchmark. (4) Voluntary but influential—not legally required at federal level, but increasingly expected by regulators.

⚠️ Legal Disclaimer

This article is based on verified data and information current as of November 21, 2025. Sources include: National Conference of State Legislatures (NCSL), MultiState legislative tracking, California Privacy Protection Agency, Future of Privacy Forum, Texas Legislature, New York City Department of Consumer and Worker Protection, U.S. Chamber of Commerce, and legal analysis from multiple law firms including White & Case, Baker Botts, Latham & Watkins, and others.

The AI regulatory landscape is evolving extremely rapidly, with new laws, amendments, and regulatory guidance issued frequently. This information is for educational and informational purposes only and does not constitute legal advice. Businesses should consult with qualified legal counsel familiar with AI regulations in their specific jurisdictions before making compliance decisions.

State laws and their effective dates are subject to change. Some laws mentioned have delayed effective dates, pending amendments, or ongoing regulatory processes. Always verify current status with official state sources or legal advisors. Compliance requirements may vary based on your specific business model, industry, geographic footprint, and AI applications.

Accuracy Note: This article corrects several inaccuracies present in earlier analyses of state AI regulations, including clarifications about the number of states with comprehensive AI laws, the nature of Texas’s liability framework, and the specific requirements of California’s various AI statutes. All factual claims have been verified against primary sources and recent legal analyses.

Published: November 21, 2025

Last Updated: November 21, 2025

Category: AI & Technology, Business Compliance, Legal & Regulatory
