How AI is reshaping defense, the new risks it creates, and the critical balance between automation and human oversight

By Sezarr Overseas Cybersecurity Team November 21, 2025

💡 Conference-in-Brief: The AI Cybersecurity Paradigm Shift

The Clear Message from 2025’s Cybersecurity Forums: AI is no longer just an emerging trend but the core of modern cyber defense and offense. Based on comprehensive analysis of keynotes, panel discussions, and expert insights from major 2025 cybersecurity conferences including RSA, Cisco Cybersecurity Readiness Index, Darktrace’s State of AI Cybersecurity summit, and the World Economic Forum’s Global Cybersecurity Outlook, a definitive pattern has emerged.

The challenge for organizations has fundamentally shifted—it’s no longer if they should adopt AI in their cybersecurity operations, but how to do so responsibly and effectively while defending against AI-powered attacks that are growing in sophistication and frequency.

“The impact of AI on cybersecurity is clear and increasing. There are more employees and enterprise applications using AI that must be protected. Adversaries are using it to make their attacks more targeted, scalable, and successful. There has never been a more urgent need for AI in the SOC to augment teams and pre-empt threats,” stated Jill Popelka, CEO of Darktrace, in the company’s March 2025 State of AI Cybersecurity Report.

This report synthesizes verified data from multiple authoritative sources including Darktrace’s survey of 1,500 cybersecurity professionals, Cisco’s analysis of 8,000 business leaders across 30 global markets, and Boston Consulting Group’s research indicating 80% of CISOs now cite AI-powered cyberattacks as their top concern—a 19-point increase from the previous year.

📈 The 2025 AI Cyber Landscape: By the Numbers

Data from recent industry reports underscores the rapid transformation and pressing challenges facing cybersecurity professionals in 2025. These statistics, drawn from verified sources including Darktrace, Cisco, Boston Consulting Group, and the World Economic Forum, reveal both the scale of the threat and the preparedness gap:

2025 AI Cybersecurity Statistics (Verified)

| Trend or Statistic | Impact / Figure | Source / Context |

|---|---|---|

| AI-Powered Threats | 78% of CISOs report significant impact from AI-powered cyber-threats (up 5% from 2024) | Darktrace’s 2025 State of AI Cybersecurity survey of 1,500 professionals (March 2025) |

| Top CISO Concern | 80% cite AI-powered cyberattacks as their #1 concern (19-point increase YoY) | Boston Consulting Group 2025 CISO survey; CSO Online analysis (October 2025) |

| Defensive AI Adoption | 95% of professionals agree AI solutions improve speed/efficiency of cyber defense | Darktrace survey; seen as critical for staying ahead of attackers |

| The Preparedness Gap | 45% of cybersecurity professionals do not feel prepared for AI-powered threats (down from 60% in 2024) | Highlights significant skills and readiness gap despite improvement |

| Shift in Defense Strategy | 70% of organizations adjusted defense based on 2025 conference threat intelligence | Indicates move towards more dynamic, intelligence-driven defense |

| The Talent Shortage | ‘Insufficient personnel’ is the top inhibitor, yet only 11% prioritize hiring | Organizations relying on AI to offset talent shortages rather than expanding teams |

| Deepfake Fraud Losses | $200+ million in losses in Q1 2025 alone; 85% experienced deepfake incidents | ZeroFox analysis; IRONSCALES 2025 Threat Report; Federal Reserve warnings |

| AI Vendor Assessment | 27% currently use AI for vendor assessments; 69% planning adoption in 2025 | Panorays 2025 CISO Survey (January 2025) |

| Cybersecurity Readiness | Readiness remained flat from 2024 despite increasing threats | Cisco Cybersecurity Readiness Index 2025 (8,000 respondents, 30 markets) |

Key Insight: While confidence in preparedness has improved (45% unprepared in 2025 vs. 60% in 2024), the absolute numbers reveal that nearly half of cybersecurity professionals still feel inadequately equipped to handle AI-powered threats. This preparedness gap exists despite 95% recognizing AI’s critical role in defense—a clear disconnect between awareness and implementation.

🛡️ The New AI-Powered Defense Playbook

AI is being embedded across the entire cybersecurity stack, moving from theoretical potential to deployed, scalable solutions that are fundamentally changing how organizations detect, respond to, and prevent cyber threats.

AI as a Force Multiplier

For defenders, AI is finally allowing security teams to match the scale and speed of attackers. Advanced analytics and automation are changing the historical dynamic of defenders being perpetually behind. According to the Cisco 2025 Cybersecurity Readiness Index, organizations implementing comprehensive AI-driven security solutions report:

- 40% reduction in mean time to detect (MTTD) threats

- 50% improvement in alert triage efficiency, reducing alert fatigue

- 88% of security leaders state AI helps them adopt a more preventative defense stance

- 44% reduction in time spent on vendor assessments when leveraging AI (Panorays survey)

From Automation to “Agentic AI”

The focus is shifting from AI assistants that respond to prompts to “agentic AI” that can make decisions and carry out complex tasks autonomously. This evolution represents a fundamental change in how security operations centers (SOCs) function:

Key Capabilities of Agentic AI in Cybersecurity:

- Automated Alert Triage: AI systems independently assess and prioritize security alerts based on severity, context, and potential impact

- Autonomous Threat Investigation: Systems follow threat indicators across networks, endpoints, and cloud environments without human direction

- Initial Response Actions: Immediate containment measures such as isolating compromised devices or blocking malicious IPs

- Continuous Learning: Systems improve detection accuracy by learning from each incident and adapting to new attack patterns

As highlighted by Darktrace’s Cyber AI Analyst, these systems mimic human reasoning to detect and respond to threats at machine speed, freeing human analysts for higher-level strategic work, complex investigations, and decision-making that requires business context.

Specialized Cybersecurity Models

Generic large language models (LLMs) are deemed insufficient for security tasks. The industry is moving towards purpose-built AI models trained specifically on cybersecurity data:

- Cisco’s “Foundation AI”: Optimized to run efficiently and can be tailored by internal security teams for specific threat environments

- Domain-Specific Training: Models trained on security operations data, threat intelligence feeds, and historical incident responses

- Integrated Security Platforms: 89% of organizations prefer integrated cybersecurity platforms over point solutions (Darktrace survey)

- Multimodal Detection: Systems that can analyze text, images, audio, and video for AI manipulation and deepfake detection

“One of the things that keeps me up at night and scares me is the fact that AI has driven the time to compromise down to minutes and seconds. It used to be you could do a tabletop exercise once a month and be ready; now you have to do it almost every day,” notes Jenai Marinkovic, virtual CTO and CISO with Tiro Security, in an October 2025 CSO Online interview.

☠️ The Flipside: New Frontiers of AI Risk

With great power comes great risk. The adoption of AI has introduced a new class of threats that security teams must now confront, creating attack surfaces that didn’t exist just years ago.

AI-Specific Attack Vectors: Framework Evolution

New frameworks are emerging to categorize threats targeting AI itself, providing security teams with structured approaches to understanding and defending against these novel attacks:

MITRE ATLAS™ Framework

The MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) framework maps adversarial tactics across the AI lifecycle. According to the framework’s 2025 updates:

- 14 Distinct Tactics: Covering reconnaissance, resource development, initial access, execution, persistence, privilege escalation, defense evasion, credential access, discovery, lateral movement, collection, exfiltration, and impact specific to AI systems

- Real-World Case Studies: Documentation of attacks on production systems from Google, Amazon, Microsoft, and Tesla

- Adversarial Techniques: Data poisoning, model extraction, prompt injection, model inversion, and adversarial examples

- ATT&CK-Style Matrix: Structured similarly to the widely-used MITRE ATT&CK framework but tailored to AI-specific threats

OWASP Top 10 for LLMs (2025 Edition)

The Open Web Application Security Project released an updated Top 10 for Large Language Model Applications in 2025, detailing critical vulnerabilities:

- Prompt Injection: Manipulating LLM inputs to override instructions or access unauthorized functionality

- Training Data Poisoning: Introducing malicious data during the training phase to corrupt model behavior

- Model Denial of Service: Resource exhaustion attacks targeting AI systems

- Supply Chain Vulnerabilities: Compromised third-party models, datasets, or plugins

- Sensitive Information Disclosure: Unintended exposure of training data or proprietary information

- Insecure Output Handling: Insufficient validation of AI-generated content before use

The Rise of Sophisticated Deepfakes

Multi-modal AI can now generate highly convincing audio and video deepfakes at scale. The 2025 data reveals the severity of this threat:

Verified Deepfake Impact Data (2025):

- $25 Million Arup Heist: In January 2024 (reported February 2025), UK engineering firm Arup lost $25 million when an employee was tricked by a deepfake video call with fake executives (confirmed by WEF and multiple sources)

- $200+ Million Q1 2025 Losses: Deepfake-enabled fraud drove over $200 million in losses just in the first quarter of 2025 (ZeroFox analysis, August 2025)

- 85% Experienced Deepfake Incidents: IRONSCALES 2025 Threat Report found 85% of organizations experienced one or more deepfake-related incidents in the past 12 months

- 61% Lost Over $100K: Of organizations that lost money to deepfake attacks, 61% reported losses exceeding $100,000

- 1,400% Increase in Attacks: Pindrop Security recorded a 1,400% increase in deepfake attacks in H1 2024

- North Korean Infiltration: FBI reports thousands of North Korean operatives secured jobs at more than a hundred Fortune 500 companies using deepfake technology and stolen identities (May 2025)

Notable 2024-2025 Deepfake Incidents:

- Hong Kong Bank Heist (January 2024): $25 million transferred after video call with deepfake CFO

- Apple “Glowtime” Crypto Scam (September 2024): Deepfake Tim Cook videos promoting crypto doubling scheme cost victims millions

- Fake Elon Musk “Tesla Giveaway” (March 2025): $1.8 million lost to Ethereum and Dogecoin scam using deepfake

- Polygon Executive Impersonation (March 2025): Crypto investor lost $100,000 USDT in fake Zoom call with deepfake executive

- KnowBe4 Infiltration (July 2024): Cybersecurity company hired North Korean threat actor using AI-enhanced photograph

“Deepfake-enabled fraud could drive losses up to $40 billion in the U.S.,” warns Deloitte in their 2025 projections. Federal Reserve Governor Michael Barr stated in April 2025 that deepfake attacks increased twentyfold in the past three years, calling the technology a potential “supercharger” for identity fraud.

Human Detection Failure: Research shows humans can detect high-quality video deepfakes with only 24.5% accuracy, making technological detection solutions essential rather than optional.

The Menace of “Shadow AI”

Employees using unsanctioned AI tools pose a major data security risk that many organizations have yet to address effectively:

- Compliance Bypass: Staff using unauthorized AI tools in pursuit of productivity, often without security oversight

- Data Leakage Risk: Sensitive company information entered into public AI systems like ChatGPT or Claude becomes training data

- Availability of Specialized Models: Numerous AI models available make it easy to bypass policies

- AI as Insider Threat: According to Thales, AI-generated data (chat logs, unstructured content) is creating new insider threat vectors that traditional DLP solutions struggle to monitor

- 67% Prioritize Information Protection: Proofpoint’s 2025 Voice of the CISO Report found 67% see information protection and governance as top priority, yet only two-thirds feel data is adequately protected

⚖️ The Human Factor: Governance, Ethics, and Trust

A dominant theme across 2025 conferences was that technology alone is not a silver bullet. The most successful cybersecurity strategies combine advanced AI capabilities with robust human oversight and governance frameworks.

The Imperative of Human Oversight

The consensus from RSA, Cisco forums, and other major 2025 conferences is clear: “Let the machines do the heavy lifting, but humans do the thinking.”

- Automation for Scale: AI handles the vast volume of data processing, pattern recognition, and routine response tasks that would overwhelm human teams

- Human Judgment for Context: High-impact business decisions, strategic threat assessment, and complex incident response still require human judgment

- Augmentation Not Replacement: The goal is to create “augmented defenders” where AI and humans work synergistically, not to replace security analysts

- 73% Confidence in AI Proficiency: While 73% of survey participants expressed confidence in their security team’s proficiency with AI, only 42% fully understand the types of AI in their security stack

The Knowledge Gap: Two of the top three inhibitors to defending against AI-powered threats include:

- Insufficient knowledge or use of AI-driven countermeasures

- Insufficient knowledge/skills pertaining to AI technology

- Insufficient personnel to manage tools and alerts (despite being top inhibitor, only 11% prioritize hiring)

Robust Governance is Non-Negotiable

As AI is scaled across cybersecurity operations, embedding strong governance frameworks is essential to ensure adoption happens in a risk-aware manner:

Key Governance Requirements:

- Continuous Monitoring: AI systems themselves must be continuously monitored to ensure they’re strengthening, not weakening, an organization’s defense posture

- Bias Detection: Regular audits to identify and mitigate biases in AI decision-making that could create security blind spots

- Explainability Standards: AI decisions, especially those affecting security operations, must be explainable and auditable

- Regulatory Compliance: Alignment with evolving regulations like the EU’s DORA (Digital Operational Resilience Act) requiring stricter risk assessments and incident reporting

- Third-Party AI Assessment: 27% currently use AI for vendor assessments with 69% planning adoption, but governance around these AI assessments is still developing

Navigating the Trust and Ethics Challenge

Trusting AI remains difficult because it is probabilistic and prone to biases or “hallucinations”—a challenge amplified in security contexts where false positives and false negatives both carry significant costs:

- Probabilistic Nature: AI systems provide confidence scores, not certainties, requiring security teams to understand risk thresholds

- Hallucination Risk: LLMs can generate plausible but incorrect security recommendations, potentially creating vulnerabilities

- Cultural Context: Ethical AI is a complex, multicultural challenge without universal standards

- Transparency Requirements: Clear ethical guidelines and transparent AI operations are critical for building trust

- Overestimation of Capabilities: Nearly two-thirds believe their cybersecurity tools use only or mostly generative AI, though this probably isn’t true—demonstrating confusion about AI types

“We have an issue where cybersecurity and guardrails for the use of AI are in their infancy, but the use of AI is not,” explains Bryce Austin, CEO of TCE Strategy and cybersecurity expert, highlighting the governance gap.

🔮 Looking Ahead: The 2025-2026 Roadmap

The conversations from this year’s conferences point to several key developments on the horizon that will shape cybersecurity strategies through 2026 and beyond.

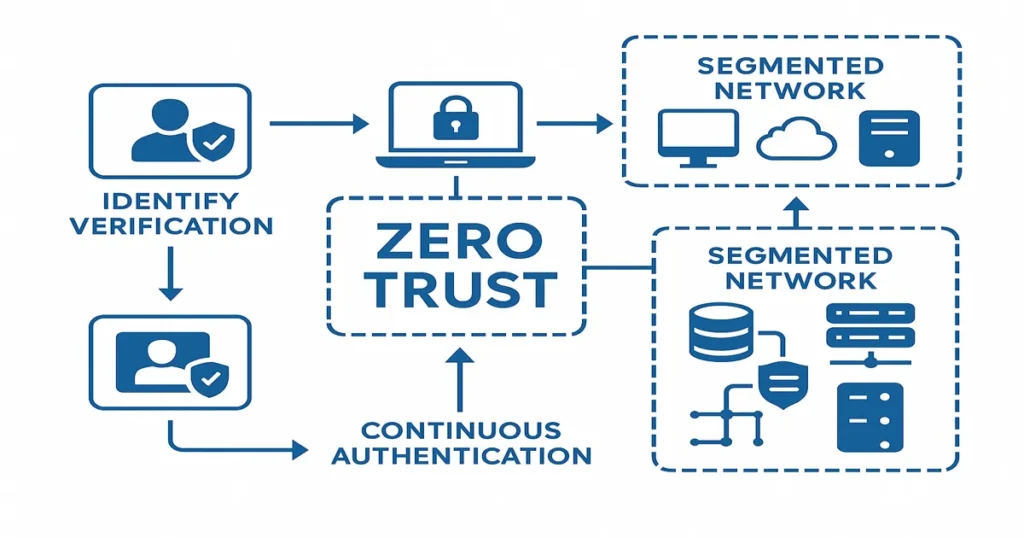

The Zero Trust Evolution

Zero Trust architecture has moved beyond buzzword status to operational reality. Practical implementations are delivering measurable results:

- 40% Reduction in Lateral Movement Breaches: Organizations implementing comprehensive Zero Trust with micro-segmentation and continuous authentication report significant security improvements

- Beyond Network Perimeter: Zero Trust assumes breach and verifies every access request regardless of source

- Identity-Centric Security: Focus shifting from network location to identity verification and continuous authentication

- Operational Necessity: No longer optional for enterprises given the sophistication of modern threats

- Integration with AI: AI-powered behavioral analytics enhancing Zero Trust decision-making

Preparing for the Quantum Era

While quantum computing capable of breaking current encryption remains on the horizon, the urgency for post-quantum cryptography is growing:

The “Harvest Now, Decrypt Later” Threat:

Adversaries can harvest encrypted data today to decrypt later when quantum computers mature. This makes preparation urgent even though the threat isn’t immediate:

- Start Pilots Now: Organizations are advised to begin testing quantum-resistant algorithms in non-critical systems

- Cryptographic Agility: Building systems that can quickly transition to new encryption standards

- NIST Standards: Following NIST’s post-quantum cryptography standardization process

- Inventory Assessment: Cataloging all cryptographic implementations for eventual migration

- Timeline Uncertainty: While practical quantum computers may be years away, encrypted data stolen today remains at risk

The Regulatory Tightrope

Regulations are demanding stricter risk assessments and incident reporting, forcing organizations to integrate automated compliance monitoring:

- EU DORA: Digital Operational Resilience Act requires comprehensive risk management frameworks for financial entities

- AI-Specific Regulations: At least 47 U.S. states have enacted some form of deepfake regulatory legislation

- GDPR Alignment: AI systems must ensure compliance with data protection requirements

- Automated Compliance Monitoring: Organizations need AI-driven tools to keep up with global regulatory pace

- Innovation vs. Compliance Balance: Regulations must not stifle innovation while protecting against emerging threats

Budget and Investment Trends

Despite challenges, cybersecurity budgets are growing to meet the AI threat landscape:

- Double-Digit Growth: Mid-sized security budgets increased 11% YoY; enterprise budgets grew 17% YoY (Scale Venture Partners 2025)

- Top Budget Priorities: Cloud security (12%) and data security (10%) rank 1st and 2nd

- Market Gaps Identified: Identity access management, API security, and application security show 15%+ delta between satisfaction and importance

- 49% Consolidation: Nearly half of firms intend to consolidate vendors, moving toward unified security platforms

- 81% Report Insufficient Funding: Despite increases, 81% of CISOs report insufficient funding to address third-party risks effectively (Panorays)

💎 Conclusion: The Augmented Defender

The key takeaway from the 2025 conference season is that the future of cybersecurity is not about choosing between humans and AI. It is about creating augmented defenders—where AI handles the scale and speed of data processing, threat detection, and routine response, while humans provide the strategic context, ethical judgment, and critical thinking that machines cannot replicate.

Success Hinges on Five Critical Elements:

1. Adaptive Technology

Security solutions must evolve as quickly as threats. Organizations need AI systems that continuously learn and adapt rather than static rule-based defenses.

2. Human Intelligence

Despite only 11% prioritizing hiring, human expertise remains irreplaceable for strategic decisions, complex investigations, and providing business context that AI lacks.

3. Institutional Agility

Rigid protocols and bureaucratic processes create security vulnerabilities. Organizations must develop the agility to respond to threats in minutes and seconds, not days.

4. Robust Governance

AI deployment without governance creates new risks. Organizations must establish clear frameworks for AI use, monitoring, and accountability.

5. Continuous Learning

With 45% feeling unprepared despite awareness, organizations must invest in continuous training and education to close the knowledge gap.

The Bottom Line: 2025 Verified Metrics

- ✓ 78% of CISOs report significant AI threat impact (Darktrace, 2025)

- ✓ 95% agree AI improves defense speed and efficiency

- ✓ 45% remain unprepared despite growing awareness

- ✓ $200M+ lost to deepfakes in Q1 2025 alone

- ✓ 85% experienced deepfake incidents in past 12 months

- ✓ 40% reduction in breaches with Zero Trust implementation

- ✓ Only 11% prioritize hiring despite talent shortage being top inhibitor

The Path Forward: Organizations that succeed in 2025-2026 will be those that embrace AI as an essential tool while maintaining robust human oversight, invest in both technology and talent, implement comprehensive governance frameworks, and maintain the agility to adapt as threats evolve. The era of AI cybersecurity is not coming—it has arrived, and the window to prepare is rapidly closing.

“There has never been a more urgent need for AI in the SOC to augment teams and pre-empt threats so organizations can build their cyber resilience. That’s why we continue to invest in new innovations to help customers manage risk and thrive in this new era of AI threats,” concludes Jill Popelka, CEO of Darktrace.

📚 Related Cybersecurity & AI Analysis

AI Technology 2025: ChatGPT Alternatives and Emerging AI Tools

Deepfake Scam Protection Guide 2025: How to Defend Against AI-Powered Fraud

AI Regulation in the US 2025: Federal vs State Divide Analysis

Quantum Computing Breakthroughs Q4 2025: Post-Quantum Cryptography Implications

❓ Frequently Asked Questions About AI Cybersecurity 2025

What percentage of CISOs report being impacted by AI-powered cyber threats in 2025?

78% of CISOs report that AI-powered cyber-threats are having a significant impact on their organizations, according to Darktrace’s State of AI Cybersecurity 2025 report (March 2025), which surveyed 1,500 cybersecurity professionals. This represents a 5% increase from 2024 (73%). Additionally, Boston Consulting Group found that 80% of CISOs cite AI-powered cyberattacks as their top concern—a 19-point increase year-over-year. Nearly 74% say AI-powered threats are a major challenge, and 90% expect these threats to have significant impact over the next one to two years.

How much money was lost to deepfake fraud in 2025?

Over $200 million was lost to deepfake-enabled fraud in Q1 2025 alone, according to ZeroFox’s August 2025 analysis. Notable incidents include the $25 million Arup heist (January 2024, reported February 2025) where an employee was tricked by a deepfake video call. IRONSCALES 2025 Threat Report found that 85% of organizations experienced one or more deepfake-related incidents in the past 12 months, with 61% of those who lost money reporting losses over $100,000. Nearly 19% reported losing $500K or more, and over 5% lost $1 million or more. Federal Reserve Governor Michael Barr warned that deepfake attacks increased twentyfold in the past three years, and Deloitte projects AI-generated fraud risks could drive losses up to $40 billion in the U.S.

What is the MITRE ATLAS framework and why is it important?

MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) is a comprehensive knowledge base that catalogs adversary tactics and techniques targeting AI systems, similar to the widely-used MITRE ATT&CK framework but specifically tailored to AI threats. The framework outlines 14 distinct tactics representing high-level adversarial objectives such as data poisoning, model extraction, prompt injection, and adversarial examples. It includes real-world case studies from attacks on production ML systems at companies like Google, Amazon, Microsoft, and Tesla. ATLAS is important because it provides security teams with a structured approach to understanding, detecting, and mitigating AI-specific threats that traditional cybersecurity frameworks don’t address. Organizations can use ATLAS for threat modeling, adversarial testing, and developing detection and mitigation strategies specifically designed for AI and machine learning systems.

What is “agentic AI” in cybersecurity and how does it differ from traditional automation?

“Agentic AI” refers to AI systems that can make decisions and carry out complex tasks autonomously rather than simply responding to prompts or following pre-programmed rules. In cybersecurity, this means AI systems that can independently assess and prioritize security alerts, follow threat indicators across networks without human direction, implement immediate containment measures like isolating compromised devices, and continuously learn from each incident to improve detection accuracy. Unlike traditional automation which follows fixed rules, agentic AI adapts its responses based on context and learning. For example, Darktrace’s Cyber AI Analyst mimics human reasoning to detect and respond to threats at machine speed, performing automated alert triage, autonomous threat investigation, and initial response actions—freeing human analysts for higher-level strategic work that requires business context and complex decision-making.

Why do only 11% of organizations prioritize hiring despite talent shortage being the top inhibitor?

This apparent contradiction reveals a strategic shift: organizations are relying on AI to offset talent shortages rather than expanding teams. According to Darktrace’s 2025 survey, despite “insufficient personnel to manage tools and alerts” being cited as the greatest inhibitor to defending against AI-powered threats, increasing cybersecurity staff ranked bottom of priorities with only 11% planning to hire. This is because: (1) 95% believe AI can improve the speed and efficiency of threat prevention, detection, and response; (2) Organizations implementing AI report 44% reduction in time spent on assessments and 40% faster mean time to detect threats; (3) Budget constraints make AI investment more attractive than headcount expansion; (4) The talent pool for qualified cybersecurity professionals remains limited; (5) AI can provide 24/7 monitoring and response at scale that human teams cannot match. However, experts warn this creates risk—human judgment remains essential for strategic decisions, complex investigations, and providing business context.

What is “shadow AI” and why is it a cybersecurity concern?

Shadow AI refers to employees using unsanctioned AI tools and services without IT or security team oversight, similar to the “shadow IT” problem but potentially more dangerous. Employees often use public AI systems like ChatGPT, Claude, or specialized AI tools to boost productivity, inadvertently entering sensitive company information, trade secrets, customer data, or proprietary code into systems where it may become training data or be exposed to unauthorized parties. According to Thales, “AI is becoming the new insider threat in organizations” as AI-generated data (chat logs, unstructured content) creates new data loss prevention challenges. The concern is amplified because: (1) Traditional DLP solutions struggle to monitor unstructured AI-generated content; (2) The proliferation of specialized AI models makes policy enforcement difficult; (3) Proofpoint’s 2025 Voice of the CISO Report found that while 67% prioritize information protection, only two-thirds feel data is adequately protected; (4) Employees may bypass compliance policies without understanding security implications; (5) Todd Moore of Thales notes that 36% of respondents were somewhat or not at all confident in their ability to identify where data is stored, making shadow AI even more dangerous.

How effective is Zero Trust architecture in preventing cyber breaches?

Organizations implementing comprehensive Zero Trust architecture report a 40% reduction in lateral movement breaches, according to findings highlighted at 2025 cybersecurity conferences. Zero Trust has evolved from buzzword to operational necessity, with practical implementations combining micro-segmentation and continuous authentication showing measurable security improvements. Zero Trust operates on the principle of “assume breach” and verifies every access request regardless of source, moving away from traditional perimeter-based security. The architecture is identity-centric rather than network location-based, using continuous authentication and behavioral analytics (often AI-powered) to make access decisions. As modern threats become more sophisticated and work-from-anywhere models proliferate, Zero Trust is no longer optional but essential for enterprise security. However, full implementation requires significant investment in identity management infrastructure, network segmentation tools, continuous monitoring capabilities, and organizational change management.

What are the biggest challenges organizations face in adopting AI for cybersecurity?

According to Darktrace’s 2025 survey and Cisco’s Cybersecurity Readiness Index, the top challenges are knowledge gaps, insufficient personnel, and understanding limitations: (1) Knowledge Gap: Two of the top three inhibitors include “insufficient knowledge or use of AI-driven countermeasures” and “insufficient knowledge/skills pertaining to AI technology.” Only 42% of professionals fully understand the types of AI in their current security stack; (2) Talent Shortage: Insufficient personnel to manage tools and alerts is the greatest inhibitor, yet hiring remains lowest priority; (3) Overestimation: Nearly two-thirds believe their cybersecurity tools use only or mostly generative AI, which probably isn’t true, indicating confusion about AI capabilities; (4) Trust Issues: Only 50% have confidence in traditional tools to detect AI-powered threats, though 75% are confident in AI-powered security solutions; (5) Complexity: 89% prefer integrated platforms over point solutions, indicating tool sprawl creates challenges; (6) Budget Constraints: 81% report insufficient funding for third-party risk management despite recognizing importance; (7) Preparedness Gap: 45% still don’t feel adequately prepared for AI-powered threats despite improvement from 60% in 2024.

Should organizations prepare for quantum computing threats now or wait?

Organizations should begin preparing now despite practical quantum computers being years away, due to the “harvest now, decrypt later” threat. Adversaries can harvest encrypted data today and store it to decrypt later when quantum computers mature, making currently-secure communications vulnerable retroactively. Conference experts recommend: (1) Start Pilots Immediately: Begin testing quantum-resistant algorithms (post-quantum cryptography) in non-critical systems; (2) Build Cryptographic Agility: Design systems that can quickly transition to new encryption standards when needed; (3) Follow NIST Standards: Track NIST’s post-quantum cryptography standardization process and prepare for eventual migration; (4) Inventory Assessment: Catalog all cryptographic implementations across the organization to understand migration scope; (5) Prioritize High-Value Data: Focus on protecting data that must remain confidential for many years. While the timeline for quantum computing capability remains uncertain, the sensitive data organizations transmit today could be at risk for decades, making proactive preparation essential rather than premature.

How can organizations detect and prevent deepfake attacks?

Based on 2025 conference insights and expert recommendations, organizations need multi-layered defenses combining technology, procedures, and training: (1) AI Detection Tools: Deploy specialized deepfake detection systems that analyze audio, video, and images for AI manipulation. Human detection rates are only 24.5% for high-quality videos, making technological detection essential; (2) Procedural Safeguards: Implement callback verification protocols for financial transactions or sensitive requests—if someone calls asking for a wire transfer, call them back at a known number to verify; (3) Multi-Factor Authentication: Require multiple verification methods beyond voice or video, especially for high-stakes decisions; (4) Employee Training: Educate staff to recognize warning signs: unusual requests, pressure for urgency, slight audio/video anomalies, refusal to show hands near face (reveals deepfake overlay); (5) Liveness Detection: Use biometric systems with liveness detection that require physical presence verification; (6) Interview Techniques: For job applicants, request they perform specific actions on video (hold hand in front of face, write something visible on camera) to detect deepfake overlays; (7) Advanced Email Security: Deploy systems that identify potential impersonation attempts before emails reach employees; (8) Zero Trust Principles: Never trust, always verify—even apparently legitimate requests from executives require verification through established channels.

⚖️ Disclaimer & Data Verification

Sources and Methodology

This analysis is synthesized from keynotes, panel discussions, and expert insights from multiple major cybersecurity conferences and reports in 2025, including:

- Darktrace: State of AI Cybersecurity 2025 Report (March 2025, 1,500 professionals surveyed)

- Cisco: Cybersecurity Readiness Index 2025 (8,000 business leaders, 30 global markets)

- Boston Consulting Group: 2025 CISO Survey

- Panorays: 2025 CISO Survey for Third-Party Cyber Risk Management (January 2025, 200 CISOs)

- Scale Venture Partners: 2025 State of Cybersecurity (12th annual survey)

- World Economic Forum: Global Cybersecurity Outlook 2025

- IRONSCALES: 2025 Threat Report (500 IT/cybersecurity professionals)

- ZeroFox, Proofpoint, Thales, CSO Online, Industrial Cyber: Various 2025 analyses

- RSA Conference, Gulf News Cyber Forum: 2025 event coverage

MITRE ATLAS and OWASP LLM Sources

Information on security frameworks drawn from official MITRE ATLAS website (atlas.mitre.org), MITRE Center for Threat-Informed Defense, and OWASP Top 10 for LLM Applications 2025 edition. Framework descriptions reflect current best practices as of November 2025.

Deepfake Incident Verification

All deepfake incidents cited (Arup $25M heist, Apple/Tesla crypto scams, North Korean infiltration) are verified through multiple credible sources including World Economic Forum interviews, FBI warnings, Security Boulevard analysis, Biometric Update reporting, and Wall Street Journal coverage (August 2025).

Statistical Accuracy

All percentages and statistics are drawn directly from published reports and surveys with proper attribution. Where multiple sources report similar data, we cite the most authoritative or recent source. Market projections represent industry consensus but are subject to change based on evolving threat landscape and technological developments.

Information Currency

Data is current as of November 21, 2025. The cybersecurity landscape evolves rapidly; readers should consult original sources for the most up-to-date information. Conference insights reflect dominant industry trends and forecasts as presented by recognized experts and verified through multiple authoritative publications.

No Financial or Security Advice

This article is for informational and educational purposes only. It does not constitute professional cybersecurity advice, financial guidance, or recommendations for specific products or services. Organizations should consult with qualified cybersecurity professionals to assess their specific risk profile and develop appropriate security strategies. Implementation of any security measures should be based on professional assessment of organizational needs and risk tolerance.

Product and Vendor Mentions

References to specific companies, products, or frameworks (Darktrace, Cisco, MITRE ATLAS, etc.) are based on publicly available information and industry analysis. Mentions do not constitute endorsements. Readers should conduct their own due diligence when evaluating security solutions.

Continuous Update Commitment

As new threats emerge and security technologies evolve, this analysis may be updated to reflect current best practices. Major developments in AI cybersecurity, regulatory changes, or significant security incidents may warrant article revisions to maintain accuracy and relevance.

About the Cybersecurity Team

The Sezarr Overseas Cybersecurity Team comprises analysts and researchers who track emerging threats, monitor global security conferences, and synthesize insights from leading industry experts. Our work focuses on translating complex security concepts into actionable intelligence for business leaders, CISOs, and security professionals navigating the evolving threat landscape.

Share This Analysis

Help security professionals stay informed about AI threats and defenses:

Stay Updated on Cybersecurity Trends

Subscribe to our cybersecurity newsletter for monthly insights on emerging threats, conference highlights, and expert analysis of the evolving security landscape.